- Splunk Answers

- :

- Splunk Platform Products

- :

- Splunk Enterprise

- :

- Re: Calculate the sum of values that overlap

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Calculate the sum of values that overlap

Hello,

The description is not very descriptive. Hopefully, the example and data will be.

I have a list of 1500 numbers. I need to calculate the sum in increments of 5 numbers. However, the numbers will overlap (be used more than once).

Using this code of only 10 values.

| makeresults

| fields - _time

| eval nums="1,2,3,4,5,6,7,8,9,10"

| makemv nums delim=","

| eval cnt=0

| foreach nums

[| eval nums_set_of_3 = mvindex(nums,cnt,+2)

| eval sum_nums_{cnt} = sum(mvindex(nums_set_of_3,cnt,+2))

| eval cnt = cnt + 1]

The first sum (1st value + 2nd value + 3rd value or 1 + 2+ 3) = 6. The second sum (2nd value + 3rd value + 4th value or 2 + 3 + 4) = 9. The third sum would be (3rd value + 4th value + 5th value or 3 + 4 + 5) =12. And so on.

The above code only makes it through one pass, the first sum.

Thanks and God bless,

Genesius

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is a really neat problem!

Does doing something like this get you where you are trying to go?

| makeresults

| fields - _time

| eval nums="1,2,3,4,5,6,7,8,9,10"

| makemv nums delim=","

| eval cnt=0

| foreach mode=multivalue nums

[

| eval

moving_window_size=3,

summation_json=if(

mvcount(mvindex(nums,cnt,cnt+('moving_window_size'-1)))=='moving_window_size',

mvappend(

'summation_json',

json_object(

"set", mvindex('nums', 'cnt', 'cnt'+('moving_window_size'-1)),

"sum", sum(mvindex('nums', 'cnt', 'cnt'+('moving_window_size'-1)))

)

),

'summation_json'

),

cnt='cnt'+1

]

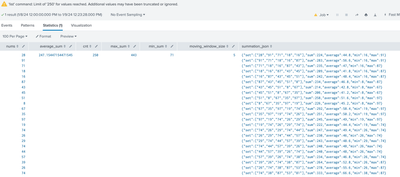

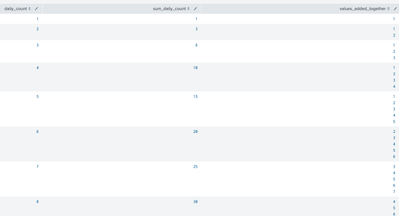

End result looks something like this:

I'm sure this can be standardized more and not sure how you want the final results to be formatted but you should be able to parse out the final MV json objects to get what you need out of them.

Update:

With the addition of the field "moving_window_size" it is a bit more standardized.

And here it is in a slightly different format (summations associated with their own fields):

| makeresults

| fields - _time

| eval nums="1,2,3,4,5,6,7,8,9,10"

| makemv nums delim=","

| eval cnt=0

| foreach mode=multivalue nums

[

| eval

moving_window_size=3,

iter_count="iteration_".'cnt',

summation_json=if(

mvcount(mvindex('nums', 'cnt', 'cnt'+('moving_window_size'-1)))=='moving_window_size',

if(

isnull(summation_json),

json_object('iter_count', sum(mvindex('nums', 'cnt', 'cnt'+('moving_window_size'-1)))),

json_set(summation_json, 'iter_count', sum(mvindex('nums', 'cnt', 'cnt'+('moving_window_size'-1))))

),

'summation_json'

),

cnt='cnt'+1

]

| fromjson summation_json

| fields - summation_json, iter_count, cnt

| fields + nums, iteration_*

And this SPL to try and simulate your original use-case (also added some addition context in the output):

| makeresults count=1500

| eval

low=1,

high=100,

rand=round(((random()%'high')/'high')*('high'-'low')+'low')

| stats

list(rand) as nums

| eval cnt=0

| foreach mode=multivalue nums

[

| eval

moving_window_size=5,

summation_json=if(

mvcount(mvindex(nums,cnt,cnt+('moving_window_size'-1)))=='moving_window_size',

mvappend(

'summation_json',

json_object(

"set", mvindex('nums', 'cnt', 'cnt'+('moving_window_size'-1)),

"sum", sum(mvindex('nums', 'cnt', 'cnt'+('moving_window_size'-1))),

"average", sum(mvindex('nums', 'cnt', 'cnt'+('moving_window_size'-1)))/'moving_window_size',

"min", min(mvindex('nums', 'cnt', 'cnt'+('moving_window_size'-1))),

"max", max(mvindex('nums', 'cnt', 'cnt'+('moving_window_size'-1)))

)

),

'summation_json'

),

cnt='cnt'+1

]

| eval

average_sum=sum(mvmap(summation_json, tonumber(spath(summation_json, "sum"))))/mvcount(summation_json),

min_sum=min(mvmap(summation_json, tonumber(spath(summation_json, "sum")))),

max_sum=max(mvmap(summation_json, tonumber(spath(summation_json, "sum"))))You can see by the screenshot below that I hit some Splunk limits when trying to put together a MV field with 1,500 entries (truncates to 250). But other than that it seems to work.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks, @dtburrows3

To format the results as we need them, I’m using this code, which works perfectly.

```My code: Part 2```

| rex field="summation_json" "sum\"\:(?<nums_expanded>\d+)\}"

| fields nums_expanded

| mvexpand nums_expandedHowever, when I replace your makeresults with my inputlookup of 3 million records,

```My code: Part 1```

| inputlookup direct_deposit_changes_v4_1_since_01012020.csv

| eval _time = strptime(_time,"%Y-%m-%d")

| stats count as daily_count by _time

| eval daily_count = daily_count.","

| mvcombine daily_count

| eval daily_count = mvjoin(daily_count,"")

```Your code: renamed nums to daily_count.```

| eval cnt=0

| foreach mode=multivalue daily_count

[| eval summation_json=if( mvcount(mvindex(daily_count,cnt,cnt+2))==3,

mvappend( 'summation_json', json_object( "set",

mvindex(daily_count,cnt,cnt+2), "sum",

sum(mvindex(daily_count,cnt,cnt+2)) ) ), 'summation_json' ), cnt='cnt'+1 ]

```My code: Part 2```

| rex field="summation_json" "sum\"\:(?<sum_daily_count>\d+)\}"

| fields sum_daily_count

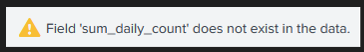

| mvexpand sum_daily_countI end up with this error.

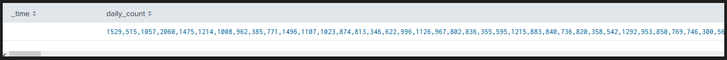

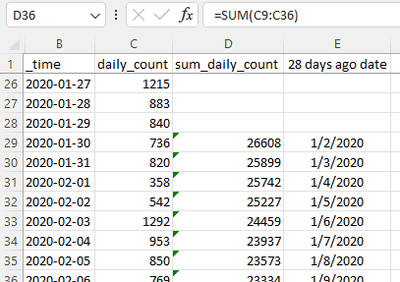

When I run My code: Part 1, these are the results.

Running this at the end of My code: Part 1, proves there are over 1465 values (all values from my stats count by _time command).

| eval mv_cnt = mvcount(split(daily_count,","))-1Thanks and God bless,

Genesius

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

And seeing how you will be utilizing the final results I think this query would probably give you the same results and would be much simpler.

| inputlookup direct_deposit_changes_v4_1_since_01012020.csv

| eval

_time=strptime(_time,"%Y-%m-%d")

| stats

count as daily_count

by _time

| sort 0 +_time

| streamstats window=5

sum(daily_count) as sum_daily_count

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I don't think this code would work. streamstats with window=5 would be every 5 events, no overlap.

If I had a total of 15 events, there would be 3 totals created (events 1-5, events 5-10, events 11-15). Where with your previous foreach/json code, 15 events would return 12 totals (events 1-5, events 2-6, events 3-7, and so on, ending with events 11-15).

Thanks and God bless,

Genesius

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

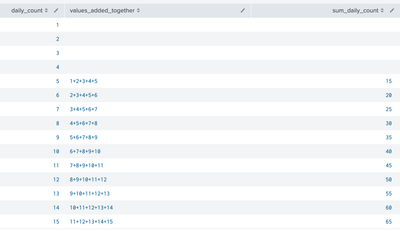

You can check if thats the case with this.

| makeresults count=15

| fields - _time

| streamstats

count as daily_count

| streamstats window=5

list(daily_count) as values_added_together,

sum(daily_count) as sum_daily_count

As you can see in the column "values_added_together" gives you a list of numbers that are being added together on each step.

This is basically the same thing the foreach loop is doing against the multivalue field just using the previous 4 entries to add instead of the next 4 entries. Ultimately, I think you would get the same results, just shifted a couple of rows depending on which method is used. The streamstats method also will include the first few entries summing up less than 5 values because there is not 5 preceeding values until it gets to row 5.

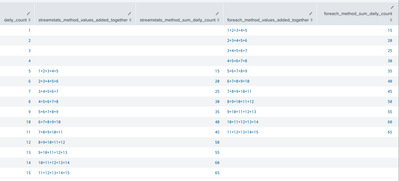

Another screenshot as a POC:

And to really drive it home here is a tabled visual representation of both methods together in comparison to their respective row of the original daily_count value.

Values are all the same just shifted depending on if the moving window is looking back/forward

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

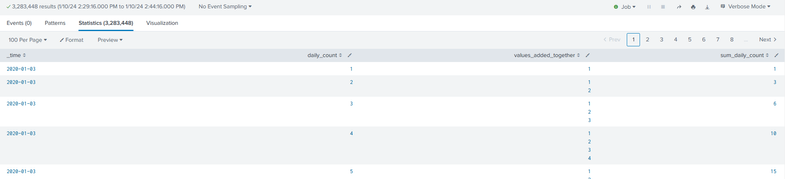

Did I set this up correctly?

Note: I posted 5 days to simplify the use case but I need 28-day sums.

| inputlookup direct_deposit_changes_v4_1_since_01012020.csv

| eval _time = strptime(_time,"%Y-%m-%d")

| sort 0 _time

| streamstats count as daily_count by _time

| streamstats window=28 list(daily_count) as values_added_together, sum(daily_count) as sum_daily_count

| table _time, daily_count, values_added_together, sum_daily_countI ended up with over 3 million rows when it should have been around 1,460.

Because it wasn't grouped by _time (%Y-%m-%d).

However, the foreach code produced the results I was looking for.

| inputlookup direct_deposit_changes_v4_1_since_01012020.csv

| eval _time = strptime(_time,"%Y-%m-%d")

| stats count as daily_count by _time

| mvcombine daily_count

| eval cnt=0

| foreach mode=multivalue daily_count

[| eval summation_json=if( mvcount(mvindex(daily_count,cnt,cnt+27))==28, mvappend( 'summation_json', json_object( "set", mvindex(daily_count,cnt,cnt+27), "sum", sum(mvindex(daily_count,cnt,cnt+27)) ) ), 'summation_json' ), cnt='cnt'+1 ]

| rex field="summation_json" "sum\"\:(?<sum_daily_count>\d+)\}"

| fields sum_daily_count

| mvexpand sum_daily_count

I confirmed these were correct using Excel.

Now I must add _time (%Y-%m-%d) to the results.

Thanks and God bless,

Genesius

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So I think on your streamstats example, the first usage of streamstats should be replaced with at "| stats" command instead.

So something like this.

| inputlookup direct_deposit_changes_v4_1_since_01012020.csv

| eval _time=strptime(_time,"%Y-%m-%d")

| stats count as daily_count by _time

| sort 0 +_time

| streamstats window=28 list(daily_count) as values_added_together, sum(daily_count) as sum_daily_count

| table _time, daily_count, values_added_together, sum_daily_count

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

daily_count should be a multivalue field at the time of invoking the

| foreach mode=multivalue daily_count

probably something like this

| inputlookup direct_deposit_changes_v4_1_since_01012020.csv

| eval _time = strptime(_time,"%Y-%m-%d")

| stats count as daily_count by _time

| mvcombine daily_count

```Your code: renamed nums to daily_count.```

| eval cnt=0

| foreach mode=multivalue daily_count

[| eval summation_json=if( mvcount(mvindex(daily_count,cnt,cnt+2))==3,

mvappend( 'summation_json', json_object( "set",

mvindex(daily_count,cnt,cnt+2), "sum",

sum(mvindex(daily_count,cnt,cnt+2)) ) ), 'summation_json' ), cnt='cnt'+1 ]

```My code: Part 2```

| rex field="summation_json" "sum\"\:(?<sum_daily_count>\d+)\}"

| fields sum_daily_count

| mvexpand sum_daily_count- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Nice example @dtburrows3 !

You can always get more than the 1500 in the example with stats values to avoid the stats list limit of 100 and prefix the rand with the row index #, e.g. start the search with

| makeresults count=1500

| streamstats c

| eval

low=1,

high=100,

rand=printf("%05d:%d", c, round(((random()%'high')/'high')*('high'-'low')+'low'))

| stats

values(rand) as nums

| eval cnt=0

| rex field=nums max_match=0 "(?<x>[^:]*):(?<nums>\d+)"

| fields - x c

...