Why are there duplicate notables/alerts in Enterprise Security Incident Review?

I have duplicate notables/alerts coming in for a specific correlation search I created. I'm sure the problem is within the time ranges of the correlation search but I cannot figure out what it is.

I have this AWS Instance Modified By Unusual User search that I got from Security Essentials.

This is my search:

index=aws sourcetype=aws:cloudtrail eventName=ConsoleLogin OR eventName=CreateImage OR eventName=AssociateAddress OR eventName=AttachInternetGateway OR eventName=AttachVolume OR eventName=StartInstances OR eventName=StopInstances OR eventName=UpdateService OR eventName=UpdateLoginProfile

| stats earliest(_time) as earliest latest(_time) as latest by userIdentity.arn, eventName

| eventstats max(latest) as maxlatest

| where earliest > relative_time(maxlatest, "-1d@d")

| convert timeformat="%Y-%m-%d %H:%M:%S" ctime(earliest)

| convert timeformat="%Y-%m-%d %H:%M:%S" ctime(latest)

| convert timeformat="%Y-%m-%d %H:%M:%S" ctime(maxlatest)

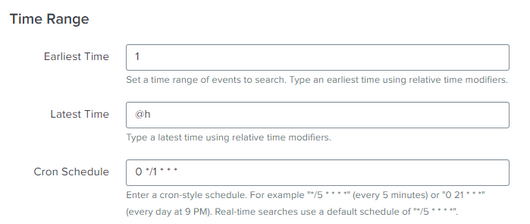

This is the time range in the search:

Yesterday I logged in to our AWS account for the first time and the alert fired - which is good. But the alert kept firing every hour afterwards. From my understanding the alert should fire once and be over with.

8am, original alert:

| userIdentity.arn | eventName | earliest | latest | maxlatest |
| --- | --- | --- | --- | --- |
| jim | ConsoleLogin | 2023-02-08 07:57:11 | 2023-02-08 07:57:11 | 2023-02-08 07:57:11 |

At 9am:

| userIdentity.arn | eventName | earliest | latest | maxlatest |
| --- | --- | --- | --- | --- |
| bob | ConsoleLogin | 2023-02-08 08:29:22 | 2023-02-08 08:29:22 | 2023-02-08 08:57:55 |
| jim | ConsoleLogin | 2023-02-08 07:57:11 | 2023-02-08 07:57:11 | 2023-02-08 08:57:55 |

At 10am:

| userIdentity.arn | eventName | earliest | latest | maxlatest |
| --- | --- | --- | --- | --- |
| bob | ConsoleLogin | 2023-02-08 08:29:22 | 2023-02-08 08:29:22 | 2023-02-08 09:51:21 |
| jim | ConsoleLogin | 2023-02-08 07:57:11 | 2023-02-08 09:20:11 | 2023-02-08 09:51:21 |

At 11am:

| userIdentity.arn | eventName | earliest | latest | maxlatest |
| --- | --- | --- | --- | --- |
| bob | ConsoleLogin | 2023-02-08 08:29:22 | 2023-02-08 08:29:22 | 2023-02-08 10:15:26 |
| jim | ConsoleLogin | 2023-02-08 07:57:11 | 2023-02-08 09:20:11 | 2023-02-08 10:15:26 |

At 12pm:

| userIdentity.arn | eventName | earliest | latest | maxlatest |
| --- | --- | --- | --- | --- |
| bob | ConsoleLogin | 2023-02-08 08:29:22 | 2023-02-08 08:29:22 | 2023-02-08 11:15:54 |
| jim | ConsoleLogin | 2023-02-08 07:57:11 | 2023-02-08 09:20:11 | 2023-02-08 11:15:54 |

What I didn't mention is that, apart from the alert repeating itself each hour, two alerts are created every time, which I assume is because there are two users.

How can I fix this so that it alerts only once, and also reports only once for both users? Thanks for any assistance.

The problem is with the earliest and latest settings. The correlation search (CS) is configured to search from the beginning of time (technically, 1 second into the Unix epoch) until the present time, and to do so every hour. That means it will pick up the same events every hour forever.

Try these settings:

Earliest = -61m

Latest = -1m
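To illustrate what these settings change, here is a minimal Python sketch (not SPL) of consecutive hourly search windows. It assumes the search runs exactly on the hour and that a window includes its earliest bound and excludes its latest bound; `search_window` and `runs_that_see` are hypothetical helper names, and the timestamps come from the tables in the question.

```python
from datetime import datetime, timedelta

def search_window(run_time):
    """Window for Earliest = -61m, Latest = -1m relative to the run time."""
    return run_time - timedelta(minutes=61), run_time - timedelta(minutes=1)

def runs_that_see(event_time, run_times):
    """Scheduled runs whose window contains the event.

    Assumption: earliest bound inclusive, latest bound exclusive.
    """
    seen = []
    for run_time in run_times:
        start, end = search_window(run_time)
        if start <= event_time < end:
            seen.append(run_time)
    return seen

# Assumed schedule: hourly runs from 08:00 to 12:00 on 2023-02-08.
runs = [datetime(2023, 2, 8, hour) for hour in range(8, 13)]

# ConsoleLogin timestamps taken from the tables in the question.
events = {
    "jim first login": datetime(2023, 2, 8, 7, 57, 11),
    "bob first login": datetime(2023, 2, 8, 8, 29, 22),
    "jim second login": datetime(2023, 2, 8, 9, 20, 11),
}

for name, timestamp in events.items():
    seen = runs_that_see(timestamp, runs)
    print(name, [r.strftime("%H:%M") for r in seen])
# jim first login ['08:00']
# bob first login ['09:00']
# jim second login ['10:00']
```

Because the 60-minute windows tile the timeline without overlapping, each event is evaluated by exactly one run. A window this short carries no history, though, so on its own it cannot tell a first-ever login from a repeat login.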

If this reply helps you, Karma would be appreciated.

I thought I had to search from the beginning of time because, as I understand it, finding an unusual user means finding a user that was never seen before. And because I logged in to AWS for the first time, the alert triggered.

But I'll try your suggestion and let you know. Thanks.

If the query will run every hour then it should look only for new logins in the last hour. That means comparing the event timestamp to the current time and triggering an alert if the event is new.

index=aws sourcetype=aws:cloudtrail (eventName=ConsoleLogin OR eventName=CreateImage OR eventName=AssociateAddress OR eventName=AttachInternetGateway OR eventName=AttachVolume OR eventName=StartInstances OR eventName=StopInstances OR eventName=UpdateService OR eventName=UpdateLoginProfile)

| stats count, latest(_time) as time by userIdentity.arn, eventName

| where (count==1 AND time > relative_time(now(), "-60m"))

| convert timeformat="%Y-%m-%d %H:%M:%S" ctime(time)

Also, it may not be necessary to search all time since that can be expensive. You may only have to search back as far as the life of an inactive account (perhaps 90 days) under the assumption that everyone has signed in since then or the account would have been removed.
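As a rough illustration of the `stats count ... | where count==1 AND time > relative_time(now(), "-60m")` logic, here is a small Python sketch (not SPL) run against the logins from the question. The function name `first_time_in_last_hour` and the on-the-hour run times are assumptions made for the example.

```python
from collections import defaultdict
from datetime import datetime, timedelta

def first_time_in_last_hour(events, now):
    """Emulate: stats count, latest(_time) as time by user, eventName
    | where count==1 AND time > relative_time(now(), "-60m")
    """
    groups = defaultdict(list)
    for user, event_name, timestamp in events:
        groups[(user, event_name)].append(timestamp)
    cutoff = now - timedelta(minutes=60)
    return sorted(
        key for key, stamps in groups.items()
        if len(stamps) == 1 and max(stamps) > cutoff
    )

# ConsoleLogin events taken from the tables in the question.
events = [
    ("jim", "ConsoleLogin", datetime(2023, 2, 8, 7, 57, 11)),
    ("bob", "ConsoleLogin", datetime(2023, 2, 8, 8, 29, 22)),
    ("jim", "ConsoleLogin", datetime(2023, 2, 8, 9, 20, 11)),
]

# Each run only sees the events that exist at run time (assumed hourly runs).
at_8 = first_time_in_last_hour(events[:1], datetime(2023, 2, 8, 8, 0))
at_9 = first_time_in_last_hour(events[:2], datetime(2023, 2, 8, 9, 0))
at_10 = first_time_in_last_hour(events, datetime(2023, 2, 8, 10, 0))

print(at_8)   # [('jim', 'ConsoleLogin')]
print(at_9)   # [('bob', 'ConsoleLogin')]
print(at_10)  # []
```

In this sketch each user is reported once, in the run that follows their first login. One caveat worth checking against real data: a user whose first two events land within the same hour has a count of 2 and would be filtered out, so comparing the earliest event time to the cutoff may be a safer variant.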


@richgalloway wrote: If the query will run every hour then it should look only for new logins in the last hour.

The query will run every hour, but I want it to look over all of time (or even 90 days is fine).

So, I made the previous change you suggested (below) where I set Earliest = -61m and Latest = -1m and logged into AWS again. After the next hour, the alert triggered on me logging in. This isn't correct because I'm no longer an "unusual" user, since I had already logged in yesterday. This is why I need to search over a long period of time.

But even when I manually search over the past 90 days, I'm still showing up.

| userIdentity.arn | eventName | earliest | latest | maxlatest |
| --- | --- | --- | --- | --- |
| jim | ConsoleLogin | 2023-02-08 07:57:11 | 2023-02-09 13:23:09 | 2023-02-09 13:23:09 |

I'm not sure how to modify either my original search or the time range it looks over so that only users who have never performed a certain action before (i.e. unusual users) show up.
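One possible reading of the 90-day result above is that the `-1d@d` modifier in the original `where` clause goes back one day and then snaps to midnight, so the threshold can sit almost two days behind `maxlatest`. The Python sketch below works through that arithmetic with the row from the table; `minus_one_day_snap_to_day` is a hypothetical stand-in for `relative_time(maxlatest, "-1d@d")`, and time zones are ignored.

```python
from datetime import datetime, timedelta

def minus_one_day_snap_to_day(t):
    """Approximate relative_time(t, "-1d@d"): back one day, then snap to midnight.

    Assumption: naive timestamps, no time zone handling.
    """
    shifted = t - timedelta(days=1)
    return shifted.replace(hour=0, minute=0, second=0, microsecond=0)

# Values from the 90-day search result for jim.
earliest = datetime(2023, 2, 8, 7, 57, 11)
maxlatest = datetime(2023, 2, 9, 13, 23, 9)

threshold = minus_one_day_snap_to_day(maxlatest)
print(threshold)             # 2023-02-08 00:00:00
print(earliest > threshold)  # True, so the row still passes the where clause

# Once the newest event falls on the following calendar day, the row drops out.
later_threshold = minus_one_day_snap_to_day(datetime(2023, 2, 10, 0, 5, 0))
print(earliest > later_threshold)  # False
```

Under these assumptions, a first-seen row keeps matching on every hourly run until the newest event in the data is at least two calendar days past the first sighting, which would be consistent with the repeated notables described earlier in the thread.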

Run the latest query I offered over a longer time (30 days+) and it should show only those logins that happened for the first time in the last 60 minutes.


@richgalloway any suggestions?