CPU load on Search Peers increased significantly after upgrade to 7.1.1

Hi,

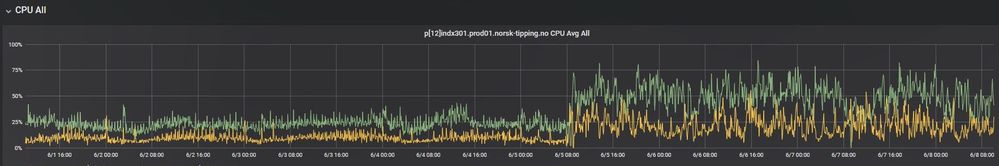

We upgraded our Splunk cluster last Friday. We have 1 Master node, 3 Search Heads in a search head cluster, 2 Search Peers in an indexer cluster and 2 HFs in front of the Search Peers. All have been upgraded to 7.1.1. The SH and HF were previously running 6.5.0 and the Search Peers were running 6.3.2.

After the upgrade, the CPU usage has increased significantly. During office hours the CPU usage peaks at 100% on the Search Peers. This was not the case before the upgrade.

In the "READ THIS FIRST" I can't see anything that should relate to our deployment in terms of increasing the CPU load.

I know that we have some searches that should be optimized to limit the usage of subsearches. But it was working ok before the upgrade, so I can't see why upgrading should have such a huge impact. From what I can see in the DMC it looks like all searches use more CPU than before. But no rotten apples that I can identify.

Can anyone help me out on what I should look for when troubleshooting?

There are bugs in 7.1.1. The best thing to do on this issue is to open a ticket with support. We have some issues that are not going to be fixed until 7.1.2. Moral of the story is to research more carefully what the issues are with a version before upgrading to it. We learned that the hard way. Good luck!

Early adopters always pay the highest price.

Very true. We would have never gone to 7.1.1 but Enterprise Security 5.1 required it and we needed to upgrade it. ES 5.1 is working GREAT so there is at least that good news. 🙂

We ran into the same issue when we upgraded last week. The fix was to set the acceleration.backfill_time as specified in the highlighted issues in the release notes.

http://docs.splunk.com/Documentation/Splunk/7.1.1/ReleaseNotes/Knownissues#Highlighted_issues

To which value does it need to be set?

The value is arbitrary, but if you don't set one it defaults to "all time". We set ours to 7 days by default, but for some data models we set it higher (such as 30 days).

This only affects the initial acceleration, so if you set it to 7 days but have the data model accelerated for 3 months, it will initially accelerate the first 7 days' worth of data, but after 3 months all data will be accelerated.
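To make the arithmetic concrete, here is a toy model of the behaviour described above. It is only a sketch: the function name and the "one more day of coverage per elapsed day" assumption are mine, not Splunk's actual summarization logic.

```python
def accelerated_days(days_since_enabled, backfill_days, summary_range_days):
    """Approximate how many days of data the acceleration summary covers.

    Simplified model (an assumption, not Splunk internals): the initial build
    covers `backfill_days`, coverage then grows by one day per elapsed day,
    and it is capped at `summary_range_days`.
    """
    if min(days_since_enabled, backfill_days, summary_range_days) < 0:
        raise ValueError("all arguments must be non-negative")
    # The backfill can never exceed the summary range itself.
    initial = min(backfill_days, summary_range_days)
    return min(initial + days_since_enabled, summary_range_days)


if __name__ == "__main__":
    # 7-day backfill on a data model with a roughly 3-month (90-day) range.
    for day in (0, 30, 90):
        print(day, accelerated_days(day, backfill_days=7, summary_range_days=90))
```

The point of a short backfill is that the expensive initial build is limited to a few days of data, while the full range still fills in over time.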

Hi jonaslar,

Have you had any success in troubleshooting this? We still have big problems with the CPU usage hitting the roof, causing some searches to take 20-30 minutes. Those searches took maybe a minute to complete before upgrading.

What does the following search return?

index=_internal log_level=error OR log_level=warn*

Maybe you have an error or warning that started after the upgrade?

Well, there are a lot of warnings from the DispatchManager:

WARN DispatchManager - enforceQuotas: username=USERNAME, search_id=SID - QUEUED (reason='The maximum number of concurrent historical searches for this user based on their role quota has been reached. concurrency_limit=3')

But my guess is that's because of the longer runtime of the searches. So when loading a dashboard with multiple searches that limit will be reached pretty quickly.
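If you want to check whether a few users account for most of these warnings, a small script over an exported log can tally them. This is a minimal sketch: the regular expression is modelled only on the single warning line quoted above, and the function name and sample usernames are made up for illustration.

```python
import re
from collections import Counter

# Pattern modelled on the one DispatchManager warning quoted in this thread.
QUEUED_RE = re.compile(
    r"WARN\s+DispatchManager - enforceQuotas: "
    r"username=(?P<username>[^,]+), search_id=(?P<search_id>\S+) - QUEUED "
    r".*?concurrency_limit=(?P<limit>\d+)"
)


def count_queued_by_user(lines):
    """Count QUEUED quota warnings per username; other lines are ignored."""
    counts = Counter()
    for line in lines:
        match = QUEUED_RE.search(line)
        if match:
            counts[match.group("username")] += 1
    return counts


if __name__ == "__main__":
    template = (
        "WARN DispatchManager - enforceQuotas: username={u}, search_id={s} - "
        "QUEUED (reason='The maximum number of concurrent historical searches "
        "for this user based on their role quota has been reached. "
        "concurrency_limit=3')"
    )
    # Hypothetical sample data, not taken from a real deployment.
    sample = [
        template.format(u="alice", s="sid1"),
        template.format(u="alice", s="sid2"),
        template.format(u="bob", s="sid3"),
        "INFO SomethingElse - unrelated line",
    ]
    for user, count in count_queued_by_user(sample).most_common():
        print(user, count)
```

An even spread across users would support the guess that the queueing is a symptom of slow searches rather than the cause.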

In the Monitoring Console there are dashboards that show search performance, such as the top long-running searches. Have you checked those to see if anything stands out?

It looks like most of the searches have a longer run time. None truly stands out. Some of the searches that take up to 10 minutes are simple searches that should return within seconds.

Is disk io up?

Try iostat 5 and see what iowait% is.

I'm curious where the bottleneck is; that will help us figure out what the culprit is.

Here's a snapshot. From what I've seen, iowait has been fine since the upgrade. Just for giggles, I applied the configuration bundle once more, and now the cluster looks more like it should. I will keep an eye on the load and see if it stabilizes.

Snapshot:

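avg-cpu:

| %user | %nice | %system | %iowait | %steal | %idle |
|---|---|---|---|---|---|
| 91.62 | 0.00 | 5.31 | 0.12 | 0.00 | 2.95 |

| Device | tps | kB_read/s | kB_wrtn/s | kB_read | kB_wrtn |
|---|---|---|---|---|---|
| sdf | 9.80 | 0.00 | 95.20 | 0 | 476 |
| sdg | 60.20 | 0.00 | 534.40 | 0 | 2672 |
| sda | 0.00 | 0.00 | 0.00 | 0 | 0 |
| sdb | 0.00 | 0.00 | 0.00 | 0 | 0 |
| sdd | 0.00 | 0.00 | 0.00 | 0 | 0 |
| sde | 0.00 | 0.00 | 0.00 | 0 | 0 |
| sdc | 0.00 | 0.00 | 0.00 | 0 | 0 |
| dm-0 | 0.00 | 0.00 | 0.00 | 0 | 0 |
| dm-1 | 92.00 | 0.00 | 534.40 | 0 | 2672 |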

EDIT: Nope. After a while, the CPU usage is back up at close to 100%.
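For anyone who wants to track this over time instead of eyeballing snapshots, the avg-cpu line can be parsed and labelled with a few lines of Python. This is a rough sketch: the function names and both thresholds are illustrative assumptions, and it expects the header and value lines exactly as `iostat` printed them in the snapshot above.

```python
def parse_avg_cpu(header_line, value_line):
    """Map iostat's avg-cpu column names to float percentages."""
    names = header_line.replace("avg-cpu:", "").split()
    values = [float(v) for v in value_line.split()]
    if len(names) != len(values):
        raise ValueError("header and value columns do not line up")
    return {name.lstrip("%"): value for name, value in zip(names, values)}


def classify(cpu, iowait_threshold=10.0, busy_threshold=80.0):
    """Rough label for the bottleneck; thresholds are arbitrary assumptions."""
    if cpu["iowait"] >= iowait_threshold:
        return "io-bound"
    if cpu["user"] + cpu["system"] >= busy_threshold:
        return "cpu-bound"
    return "not saturated"


if __name__ == "__main__":
    # Values copied from the snapshot in this post.
    cpu = parse_avg_cpu(
        "avg-cpu: %user %nice %system %iowait %steal %idle",
        "91.62 0.00 5.31 0.12 0.00 2.95",
    )
    print(classify(cpu))  # prints "cpu-bound"
```

With iowait near zero and almost all time spent in %user, the disks are not the bottleneck here; the load comes from the processes themselves.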