Summary Indexing give duplicated records

Hello,

I set up a few scheduled reports that copy data from index A into index B (a summary index) every 15 minutes. However, nearly all events copied from index A to index B are duplicated, and some appear more than 100 times. The duplicates are identical in every respect: _raw, _time, _indextime, and all other fields are equal. I don't want to keep the duplicated events, since they slow down searches. Could anyone help?

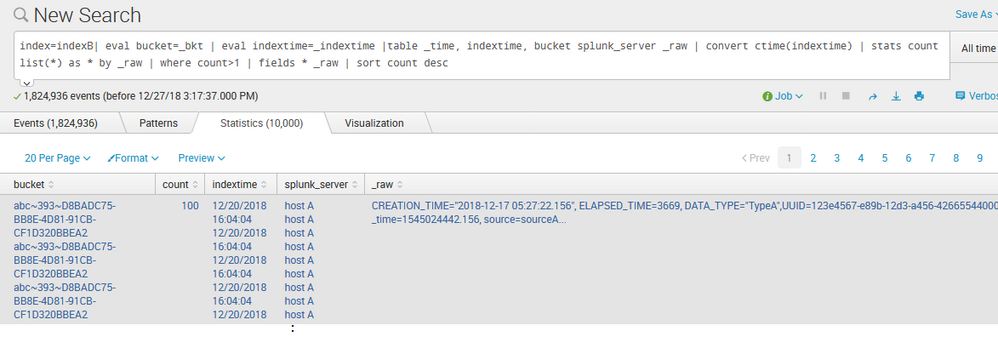

I ran the following search against indexB and found that the same events appear 100 times, as the screenshot shows:

index=indexB | eval bucket=_bkt | eval indextime=_indextime | table _time, indextime, bucket splunk_server _raw | convert ctime(indextime) | stats count list(*) as * by _raw | where count>1 | fields * _raw | sort count desc

I have read several questions and answers on this forum but still cannot figure out how to solve the problem. Could you please advise?

Many thanks!
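
A slightly simpler variant of the duplicate check above can also show whether the copies were indexed at the same moment or at different times. This is only a sketch: `first_indexed` and `last_indexed` are illustrative field names, not anything from the original search.

```spl
index=indexB
| eval indextime=_indextime
| stats count min(indextime) as first_indexed max(indextime) as last_indexed by _time, _raw
| where count > 1
| convert ctime(first_indexed) ctime(last_indexed)
| sort - count
```

If `first_indexed` and `last_indexed` are the same for a duplicated event, the copies were written together, which would point away from repeated scheduled runs. If they differ by minutes, overlapping or repeated runs become the more likely cause.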

Can you also cross-check your cron schedule? If the search runs more than once within a 15-minute span, that would also duplicate your data.

Also check the history of your scheduled search:

index=_internal sourcetype=scheduler | table _time user savedsearch_name status scheduled_time run_time result_count
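
To make extra runs easier to spot, the scheduler history can be grouped into 15-minute buckets. This is a rough sketch: `<your_report_name>` is a placeholder for the actual saved search name, and the `status=success` filter assumes only completed runs should be counted.

```spl
index=_internal sourcetype=scheduler status=success savedsearch_name="<your_report_name>"
| bin _time span=15m
| stats count as runs sum(result_count) as results by _time, savedsearch_name
| where runs > 1
```

Any row returned is a 15-minute window in which the report completed more than once.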

Could you please share the search you are using to populate the summary index, along with some sample events?

The search that populates the summary index is something like this:

index=indexA sourcetype=sourcetype1 earliest=-15m source="*XXX_*" field1="field1" field2="*-field2" | table CREATION_TIME ELAPSED_TIME ... UUID _time source | eval DATA_TYPE="DataType1" | table CREATION_TIME ELAPSED_TIME ... UUID _time source DATA_TYPE | eval _raw="CREATION_TIME=\"".CREATION_TIME."\", ELAPSED_TIME=\"".ELAPSED_TIME."\", DATA_TYPE=\"".DATA_TYPE."\"" | eval _raw=if(isnull(UUID), _raw, _raw.", UUID=".UUID) | eval _raw=if(isnull(_time), _raw, _raw.", _time="._time) | eval _raw=if(isnull(source), _raw, _raw.", source=".source) | dedup _raw | collect index=indexB

Events will have the following fields:

CREATION_TIME="2018-12-17 05:27:22.156", ELAPSED_TIME=3669, DATA_TYPE="TypeA",UUID=123e4567-e89b-12d3-a456-42665544000, _time=1545024442.156, source=sourceA

Thanks!
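
One thing worth noting about the search above: `dedup _raw` only removes duplicates within a single run, not against events already in indexB. If the report ever runs more often than every 15 minutes, an `earliest=-15m` window overlaps the previous run and the same events are collected again. A minimal sketch of a non-overlapping window, assuming the report is scheduled with a cron such as `*/15 * * * *` and leaving the rest of the pipeline unchanged:

```spl
index=indexA sourcetype=sourcetype1 earliest=-15m@m latest=@m source="*XXX_*"
| collect index=indexB
```

Snapping both bounds to the minute gives each run a fixed 15-minute slice that lines up with the schedule. This does not account for events that arrive in index A late, so it may need a delayed window in practice.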

Do you have a distributed environment or a single instance? If distributed, what does outputs.conf on the search head look like?

This answer may help:

https://answers.splunk.com/answers/290453/why-is-summary-indexing-creating-duplicate-records.html
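
In a distributed environment, a quick way to see whether the copies of an event sit on more than one instance is to group the duplicates by `splunk_server`. This is a sketch only, and `server_count` is an illustrative field name:

```spl
index=indexB
| stats count dc(splunk_server) as server_count values(splunk_server) as splunk_server by _time, _raw
| where count > 1
| sort - count
```

If `server_count` is greater than 1 for the duplicated events, the summary data is probably being written on several instances, which would make the forwarding configuration on the search head worth a closer look.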