License usage shows higher volume than the file being monitored

Hi community,

I'm still a bit confused about how Splunk calculates license volume usage.

So far, I'm using the Free license (up to 500 MB per day), and I have tried to optimize my log file as well as possible.

Today my log file is 800 KB, but when I check the license settings in Splunk Web, "Volume used today" shows 11 MB.

How is this possible? Where is this extra data coming from?

---

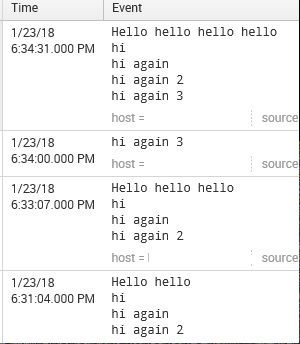

Hi, see the attachment I uploaded.

I started with the following text at 6:31 (the source is a simple .txt file under continuous monitoring):

Hello hello

hi

hi again

hi again 2

At 6:33, I added an extra "hello" to line 1 of the .txt file.

Look at the event indexed at 6:33: Splunk indexed ALL the lines again, even though I did not change anything in lines 2, 3, and 4:

Hello hello hello

hi

hi again

hi again 2

At 6:34, I appended a new line, "hello again 3", to the file. This time Splunk indexed ONLY the new line, not all the lines in the file.

Then, at 6:34:31, I added another "hello" to line 1, and Splunk indexed ALL the lines in the file again.

See the behavior?
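The difference between the two kinds of edit can be illustrated with a simplified model of a file tailer. This is a hypothetical sketch, not Splunk's implementation: Splunk's monitor input keeps per-file CRCs and a read position in the fishbucket, and its actual rules are more involved. `Checkpoint` and `read_new_data` are illustrative names. The idea: if the bytes already read are unchanged, only the appended tail is new; if anything before the saved position changed (such as a word added to line 1), the file looks rewritten and is read again from the start.

```python
import zlib
from dataclasses import dataclass


@dataclass
class Checkpoint:
    """Hypothetical per-file state: how far we have read and a CRC of those bytes."""
    offset: int = 0
    prefix_crc: int = zlib.crc32(b"")


def read_new_data(content: bytes, cp: Checkpoint) -> bytes:
    """Return the bytes this simplified tailer would index for this file version."""
    prefix = content[:cp.offset]
    if len(content) >= cp.offset and zlib.crc32(prefix) == cp.prefix_crc:
        new_data = content[cp.offset:]  # earlier bytes intact: only the tail is new
    else:
        new_data = content  # earlier bytes changed: re-read the whole file
    cp.offset = len(content)
    cp.prefix_crc = zlib.crc32(content)
    return new_data


if __name__ == "__main__":
    # The four versions of the .txt file from the example above.
    versions = [
        b"Hello hello\nhi\nhi again\nhi again 2\n",                            # 6:31
        b"Hello hello hello\nhi\nhi again\nhi again 2\n",                      # 6:33, line 1 edited
        b"Hello hello hello\nhi\nhi again\nhi again 2\nhello again 3\n",       # 6:34, line appended
        b"Hello hello hello hello\nhi\nhi again\nhi again 2\nhello again 3\n", # 6:34:31, line 1 edited
    ]
    cp = Checkpoint()
    for version in versions:
        print(read_new_data(version, cp))
```

Replaying the four versions through this model gives the same pattern described above: a full re-read after each edit to line 1, and only `hello again 3` after the append.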

So, without knowing your file structure, it is difficult to say exactly why continuous monitoring is inflating your license usage, but it can. I have seen this behavior before.

I suggest looking at how the lines in your continuously monitored file are structured. If possible, try uploading a sample under continuous monitoring. And as @FrankVl mentioned, can you share your inputs.conf settings?

Hopefully you can work out a solution from my example and by tinkering with your data file a bit.

---

```
$ cat ./etc/system/local/inputs.conf
[default]
host = SPLUNK

[monitor://$SPLUNK_HOME/etc/splunk.version]
disabled = 0

[monitor://$SPLUNK_HOME/var/log/splunk]
disabled = 0

[monitor://$SPLUNK_HOME/var/log/splunk/license_usage_summary.log]
disabled = 0

[batch://$SPLUNK_HOME/var/spool/splunk/...stash_new]
disabled = 0

[batch://$SPLUNK_HOME/var/spool/splunk]
disabled = 0
```

I created the monitor through Splunk Web.

---

Hi, I'm curious to see what your indexed events look like, similar to the snapshot I shared. Are there duplicates? If so, from which source?

---

This is what it looks like: two consecutive events.

I removed some sensitive information, but that shouldn't affect the behavior I see.

I monitor this specific file and split the lines (i.e., events) on TRACE.

---

Hi,

The Splunk license manager is rarely wrong. What has probably happened is that you are monitoring your file continuously (or consuming it at fixed intervals). For example, if the whole 800 KB log file were re-ingested once an hour, after 3 hours you would have indexed 800 KB × 3 = 2,400 KB (about 2.4 MB) of data.

Another possible cause is a user running a REST API integration. In that case, similar to the example above, the total indexed data volume (and hence the license usage) is the number of times the API is polled multiplied by the data volume retrieved per poll.
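Both scenarios come down to the same arithmetic: license volume grows with every time the same data is ingested. A minimal sketch, assuming each re-read (or API poll) ingests the whole file or response and nothing is deduplicated; the function names are hypothetical and sizes are in KB, sampled once per read.

```python
def full_reread_total(sizes_kb):
    """Every read ingests the whole file (or full API response), so each read counts in full."""
    return sum(sizes_kb)


def tail_only_total(sizes_kb):
    """Only bytes added since the previous read are ingested (assumes the file only grows)."""
    total, previous = 0, 0
    for size in sizes_kb:
        total += max(size - previous, 0)
        previous = size
    return total


if __name__ == "__main__":
    # An 800 KB file read at each of 3 hourly checks, with no growth.
    print(full_reread_total([800, 800, 800]))  # 2400 (KB), about 2.4 MB
    print(tail_only_total([800, 800, 800]))    # 800 (KB)
    # Hypothetical growth: 800 KB -> 850 KB -> 900 KB.
    print(full_reread_total([800, 850, 900]))  # 2550 (KB)
    print(tail_only_total([800, 850, 900]))    # 900 (KB)
```

With tail-only ingestion the daily volume tracks how much the file grows; with full re-reads it scales with the number of reads.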

Please check carefully.

---

Hello,

Indeed, the file is being continuously monitored, but how can I tell Splunk not to process the whole file again and again? The file is small now, but over time it will grow to 100-200 MB, and then I'll hit the limit again.

For instance, the file has now grown to 2.3 MB, so going by your example, after a few hours the total volume used would be about 7 MB, even though the file might only have grown to 2.4 MB.

---

You should be able to see in your index whether the file was actually ingested multiple times. Normally this shouldn't happen, but it all depends on how things were configured. Do some investigation, such as comparing the number of events in the index(es) with the number of events in the original log file. Or look at license_usage.log on your indexers to see what the license usage is spent on (you can also search that log through the _internal index).
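The license_usage.log check can also be scripted outside Splunk. A minimal sketch, assuming the log's key=value layout in which `type=Usage` lines carry the byte count in `b` and the source in `s`; those field names and the default path are assumptions, so verify them against your own license_usage.log first. `parse_kv` and `bytes_by_source` are hypothetical helper names.

```python
import re
import sys
from collections import defaultdict

# Matches key=value and key="quoted value" pairs.
KV_RE = re.compile(r'(\w+)=("([^"]*)"|\S+)')


def parse_kv(line: str) -> dict:
    """Extract key=value pairs from one log line, unquoting quoted values."""
    pairs = {}
    for key, raw, quoted in KV_RE.findall(line):
        pairs[key] = quoted if raw.startswith('"') else raw
    return pairs


def bytes_by_source(lines) -> dict:
    """Sum the b= byte counts of type=Usage lines, grouped by the s= source field."""
    totals = defaultdict(int)
    for line in lines:
        fields = parse_kv(line)
        if fields.get("type") != "Usage" or "b" not in fields:
            continue
        totals[fields.get("s", "<unknown>")] += int(fields["b"])
    return dict(totals)


if __name__ == "__main__":
    # Default path is an assumption; pass your own license_usage.log location.
    path = sys.argv[1] if len(sys.argv) > 1 else "/opt/splunk/var/log/splunk/license_usage.log"
    with open(path, encoding="utf-8", errors="replace") as fh:
        for source, total in sorted(bytes_by_source(fh).items(), key=lambda kv: -kv[1]):
            print(f"{total:>12} bytes  {source}")
```

If one source accounts for far more bytes than the file's size on disk, that points to repeated ingestion of that source.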

My guess is that a little overhead in the data counts against your license, and at such small volumes it becomes visible. But that really isn't much more than a guess.

---

A breakdown of the indexes and their current sizes:

| Index | Size |
|---|---|
| _audit | 26 MB |
| _internal | 106 MB |
| _introspection | 145 MB |
| _telemetry | 1 MB |
| _thefishbucket | 1 MB |
| history | 1 MB |
| main | 146 MB |
| splunklogger | 0 B |
| summary | 1 MB |

By comparison, the only monitored file is 2.6 MB.

I noticed that Splunk is indexing certain data up to 14 (!) times over and over, which also affects the counts on my dashboard. However, I'm not sure why it does this.
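Before blaming the input, it helps to check whether the repetition already exists in the monitored file itself. A minimal sketch, assuming one event per line (the thread splits events on TRACE, so multi-line events would need a different key); `duplicate_lines` is a hypothetical helper name.

```python
import sys
from collections import Counter


def duplicate_lines(lines, min_count=2):
    """Return {line: count} for non-blank lines that occur at least min_count times."""
    counts = Counter(line.rstrip("\n") for line in lines if line.strip())
    return {line: n for line, n in counts.most_common() if n >= min_count}


if __name__ == "__main__":
    # Usage: python dupes.py /path/to/monitored.log
    with open(sys.argv[1], encoding="utf-8", errors="replace") as fh:
        for line, n in duplicate_lines(fh).items():
            print(f"{n:>4}x  {line[:120]}")
```

If a line appears 14 times in the file, Splunk indexing it 14 times is expected; if it appears once in the file but 14 times in the index, the input is re-reading the file.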

---

If Splunk is indeed ingesting the data from the monitored file over and over again, that's worth investigating.

Can you provide some details on how logs are written to that file and what your Splunk inputs.conf looks like?

---

```
$ cat ./etc/system/local/inputs.conf
[default]
host = SPLUNK

[monitor://$SPLUNK_HOME/etc/splunk.version]
disabled = 0

[monitor://$SPLUNK_HOME/var/log/splunk]
disabled = 0

[monitor://$SPLUNK_HOME/var/log/splunk/license_usage_summary.log]
disabled = 0

[batch://$SPLUNK_HOME/var/spool/splunk/...stash_new]
disabled = 0

[batch://$SPLUNK_HOME/var/spool/splunk]
disabled = 0
```

---

And which files specifically did you check for size, and which did you see getting duplicated?

This all looks like a copy of config that is already in /etc/system/default/inputs.conf anyway, but with some important settings missing (like move_policy = sinkhole for the batch inputs).

---

This is the configuration of /etc/system/default/inputs.conf:

```
[default]
index = default
_rcvbuf = 1572864
host = $decideOnStartup

[blacklist:$SPLUNK_HOME/etc/auth]

[monitor://$SPLUNK_HOME/var/log/splunk]
index = _internal

[monitor://$SPLUNK_HOME/var/log/splunk/license_usage_summary.log]
index = _telemetry

[monitor://$SPLUNK_HOME/etc/splunk.version]
_TCP_ROUTING = *
index = _internal
sourcetype=splunk_version

[batch://$SPLUNK_HOME/var/spool/splunk]
move_policy = sinkhole
crcSalt = <SOURCE>

[batch://$SPLUNK_HOME/var/spool/splunk/...stash_new]
queue = stashparsing
sourcetype = stash_new
move_policy = sinkhole
crcSalt = <SOURCE>

[fschange:$SPLUNK_HOME/etc]
#poll every 10 minutes
pollPeriod = 600
#generate audit events into the audit index, instead of fschange events
signedaudit=true
recurse=true
followLinks=false
hashMaxSize=-1
fullEvent=false
sendEventMaxSize=-1
filesPerDelay = 10
delayInMills = 100

[udp]
connection_host=ip

[tcp]
acceptFrom=*
connection_host=dns

[splunktcp]
route=has_key:_replicationBucketUUID:replicationQueue;has_key:_dstrx:typingQueue;has_key:_linebreaker:indexQueue;absent_key:_linebreaker:parsingQueue
acceptFrom=*
connection_host=ip

[script]
interval = 60.0
start_by_shell = true
```

To be honest, the file I keep referring to is not mentioned in either inputs.conf file. The file I monitor is called 'Splunk.log', for example, and is assigned the source type 'SessionLogs'.

---

If it gets ingested into Splunk, it must be configured in some inputs.conf, so it's best to find out where. If it is not in system/default or system/local, I guess it is in a specific app under etc/apps?
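Splunk's own `btool` (for example `splunk btool inputs list --debug`) is the authoritative way to see the merged input configuration and which file each line comes from. As a plain-text alternative, a minimal sketch that walks the etc directory and lists every inputs.conf stanza header containing a given file name; `find_stanzas` is a hypothetical helper, and the `/opt/splunk` fallback is an assumption when `SPLUNK_HOME` is not set.

```python
import os
import re
import sys

# A stanza header such as [monitor:///path/to/file.log]
STANZA_RE = re.compile(r"^\s*\[(.+)\]\s*$")


def find_stanzas(etc_dir, needle):
    """Yield (path, stanza) for each inputs.conf stanza header that contains needle."""
    for root, _dirs, files in os.walk(etc_dir):
        if "inputs.conf" not in files:
            continue
        path = os.path.join(root, "inputs.conf")
        with open(path, encoding="utf-8", errors="replace") as fh:
            for line in fh:
                match = STANZA_RE.match(line)
                if match and needle in match.group(1):
                    yield path, match.group(1)


if __name__ == "__main__":
    if len(sys.argv) < 2:
        sys.exit("usage: find_input.py <file-name-fragment> [etc-dir]")
    needle = sys.argv[1]
    default_etc = os.path.join(os.environ.get("SPLUNK_HOME", "/opt/splunk"), "etc")
    etc_dir = sys.argv[2] if len(sys.argv) > 2 else default_etc
    for path, stanza in find_stanzas(etc_dir, needle):
        print(f"{path}: [{stanza}]")
```

This only matches stanza headers, so it would miss a file picked up by a directory monitor such as `[monitor://$SPLUNK_HOME/var/log/splunk]`; `btool` does not have that blind spot.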

---

Indeed, it is configured in /opt/splunk/etc/apps/search/local:

```
[monitor:///opt/splunk/share/DEMO/logs/Splunk_new.log]
disabled = false
index = main
sourcetype = SessionLogs
```

Do you think I should add extra settings to this monitor stanza?

---

That looks fine. How does that file get populated?

---

One of our servers writes data to it depending on its load (the number of session requests), so it's hard to predict how often the file gets updated.

Note that I configured this location as a share from that server to the Splunk Enterprise server.

---

And when you query this source, do you get duplicate events?

That is, are events (lines) that were already indexed once showing up again and again?

---

Yes, but the duplicates might not be the problem after all, because I also see them in the log file itself, so it's OK that there are duplicates.

However, I'm still amazed that a small file can use up so much daily volume 😐

---

Just to mention a couple of things here:

Data indexed PER DAY counts towards the license, and duplicates count too: if the file is indexed 4 times a day, for example, the data consumed towards your license is the sum of the volume ingested each of those 4 times.

Indexes like _audit and _internal DO NOT count towards daily licensing UNLESS you are continuously monitoring those files and ingesting them again and again within a 24-hour period.

---

Yes, I understand that duplicates also count towards the daily volume usage.

I just checked: the file is now 1.1 MB, while my daily usage is over 10 MB.

How can I configure Splunk monitoring so that it only picks up newly added data from this file? Or how can I configure it to check the file only every 20 minutes and index the data added since the last check?