
Would additional pipelines or better SAN help resolve high IO bandwidth on my indexers?

Hi,

I noticed that I/O bandwidth is approaching 100% on my servers, though overall resources (CPU, memory) are fine. Would adding additional pipelines help here (my guess is no), or do I need a better SAN? (Additional servers are not an option.)


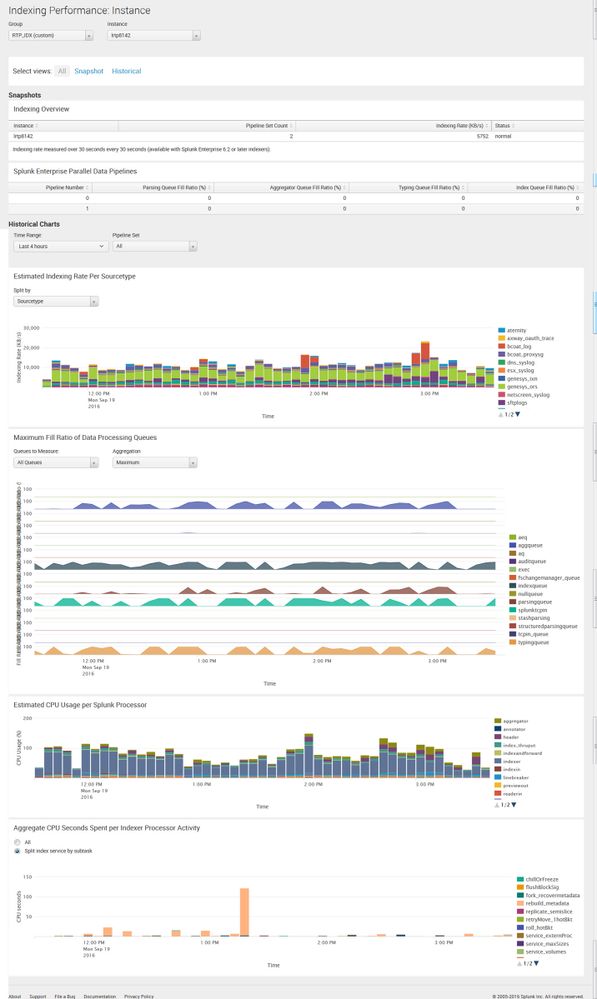

Is the high I/O on your indexers? (I assume so.) You should investigate further: use the DMC (Distributed Management Console) and see which queue is taking the most time.

Most likely it's the indexing queue, which handles the write to disk. In that case, you need to look at faster disks (or, though you say it's not an option, more servers).
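A rough way to read the queue panels: a full queue blocks everything upstream of it, so when several queues are full at once, the most downstream full queue usually points at the slow stage. The sketch below assumes you have already read fill ratios (0.0 to 1.0) off the DMC or `metrics.log`; the snapshot values and the 0.9 threshold are made up for illustration, and the queue names are modeled on Splunk's internal parsing, aggregation, typing, and indexing queues.

```python
# Queues in pipeline order; the consumer of the last queue writes to disk.
PIPELINE_ORDER = ["parsingqueue", "aggqueue", "typingqueue", "indexqueue"]


def find_bottleneck(fill_ratios, threshold=0.9):
    """Return the most downstream queue at or above `threshold`, or None.

    A slow stage fills the queue in front of it, and that backlog then
    propagates upstream, so the last full queue in pipeline order is the
    likely culprit.
    """
    bottleneck = None
    for name in PIPELINE_ORDER:
        if fill_ratios.get(name, 0.0) >= threshold:
            bottleneck = name
    return bottleneck


# Hypothetical snapshot: every queue is backed up, including the index queue.
snapshot = {"parsingqueue": 0.97, "aggqueue": 0.95, "typingqueue": 0.96, "indexqueue": 0.99}
print(find_bottleneck(snapshot))  # indexqueue
```

If the result is the index queue, the stage that is falling behind is the one writing to disk, which is the scenario described above.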

There are some potential areas for tuning you can do, but again it depends where the problems are. Some more details would be helpful.


Saying the same thing another way: increasing pipelines will just try to put more cars on the already backed-up highway. If the system is slow at writing (IOPS), then attacking that with more indexers or faster throughput to disk is the answer.

@jdonn_splunk put Bonnie++ into an app. That might help get a handle on what you're working with, especially if you need to prove to folks that you've been given less-than-adequate storage speed: https://splunkbase.splunk.com/app/3002
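To put the highway analogy in numbers, here is a deliberately simple model (an assumption, not how Splunk actually schedules work): each pipeline could push some amount of data, but all of it lands on the same storage, so total throughput is capped by what the disks can write. The MB/s figures are hypothetical.

```python
def effective_throughput(pipelines, per_pipeline_mb_s, disk_write_mb_s):
    """Indexing throughput (MB/s) under a simple min() model.

    Pipelines add parallelism, but everything is written to the same
    storage, so the total can never exceed disk_write_mb_s.
    """
    return min(pipelines * per_pipeline_mb_s, disk_write_mb_s)


# Made-up numbers: storage tops out at 40 MB/s of sustained writes.
for p in (1, 2, 4):
    print(p, effective_throughput(p, per_pipeline_mb_s=30, disk_write_mb_s=40))
# 1 30
# 2 40
# 4 40
```

Past the point where the disk ceiling is hit, extra pipelines only add more work waiting on the same writes.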


Thanks, great info. How do I show that the increased IO is affecting users? It's a much easier sell if I can show how it is affecting our ability to use Splunk.


Use searches and normalize by events/second. My understanding is that you want at least 50k/second. The Job Inspector should be helpful: http://docs.splunk.com/Documentation/Splunk/6.5.0/Search/ViewsearchjobpropertieswiththeJobInspector
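The Job Inspector reports how many events a search scanned and how long it took, so the normalization is just a division. A minimal sketch, assuming you rerun the same search at a quiet time and during peak I/O; the event counts and durations below are invented, and the 50k/second target is the rule of thumb quoted above.

```python
TARGET_EPS = 50_000  # rule of thumb from this thread, not an official figure


def events_per_second(event_count, duration_s):
    """Normalize a search run to scanned events per second."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return event_count / duration_s


# Hypothetical Job Inspector readings: (events scanned, seconds) for one search.
runs = {"baseline": (12_000_000, 150.0), "peak_io": (12_000_000, 480.0)}
for label, (events, seconds) in runs.items():
    eps = events_per_second(events, seconds)
    status = "OK" if eps >= TARGET_EPS else "below target"
    print(f"{label}: {eps:,.0f} events/s ({status})")
# baseline: 80,000 events/s (OK)
# peak_io: 25,000 events/s (below target)
```

Showing the same search slowing down while disk I/O is saturated is the kind of user-facing evidence the question asks for.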

Does that answer?


Per your comments and the screenshot above, as esix mentioned, it's mostly an issue with writing to disk.

Yes, you could just buy a new SAN that's super fast and more full of awesomeness. That's an expensive option and probably not the first thing most people familiar with Splunk's performance characteristics would suggest.

One option to help Splunk get the IOPS it needs is to pin its LUN(s) to higher-speed storage, if your storage is tiered and has that ability. This is often done on tiered storage for systems that need a little extra boost, like SQL and Splunk.

Another option may be to reduce the load on the SAN by reducing the "other" things running on it, thus freeing up more write IOPS for your Splunk indexers. To determine that, you'll have to look at SAN performance statistics and at what else your SAN is doing; you may need to engage your storage team for this. It may lead to a less expensive "big slow" new SAN, or something like EMC's Isilon, to which you can move some of the pokey loads, which in aggregate may help Splunk get what it needs. It could lead to a new fast SAN, too; dunno, it'll take work to determine.

That same examination of your SAN's performance should also determine whether it's the disks holding you up (probably) or the controller (less likely). If you find it's just the disks, you could probably expand the SAN with some new trays and disks and increase performance quite a bit. If it's the controller, well, we may be back to a new SAN. Your storage team or storage vendor can help with both this and the previous topic. The former may want new hardware because they're a storage team, and the latter may want you to buy new hardware because their job is to sell it, but as long as you keep that in mind you can still get good information out of them.

While you may be constrained by something else, it really IS worth keeping an open mind about new servers: the business case for standalone SSD-based servers for your Splunk indexers is usually very strong. Business folks are all about business cases and ROI, and if it's the right solution for a particular problem, they're often willing to bend a bit. And frankly, "new servers" may not even require new servers. I can easily envision pulling a couple of physical VM hosts out of a cluster and rededicating them to Splunk duty by just adding a dozen ~4 TB SSDs, even if you leave cold buckets on the SAN.
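The "free up write IOPS" idea is simple subtraction once your storage team gives you real numbers. A sketch under stated assumptions: the SAN's sustainable write IOPS and the per-workload demand below are hypothetical placeholders, and the model ignores caching, RAID write penalties, and burstiness.

```python
def splunk_headroom(total_write_iops, other_loads, moved=()):
    """Write IOPS left for Splunk after the non-Splunk loads that stay on the SAN."""
    remaining = sum(iops for name, iops in other_loads.items() if name not in moved)
    return total_write_iops - remaining


# All figures are hypothetical, not measurements.
SAN_WRITE_IOPS = 20_000
other = {"file_shares": 6_000, "backups": 5_000, "vm_datastores": 4_000}

print(splunk_headroom(SAN_WRITE_IOPS, other))  # 5000
print(splunk_headroom(SAN_WRITE_IOPS, other, moved={"file_shares", "backups"}))  # 16000
```

Moving the two "pokey" workloads to cheaper storage in this made-up example more than triples what is left for the indexers.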


This is mostly in the indexing pipeline, which means the write to disk. You can try decreasing the auto load-balancing interval (autoLBFrequency) on your forwarders; spreading data across indexers more often might help lower disk I/O. But without better knowledge of your deployment, it seems like adding indexers might help. What are your daily volumes and number of indexers?
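The forwarder setting lives in `outputs.conf`. As a sketch, the snippet below uses Python's `configparser` to generate an example stanza; the group name, hostnames, port, and the value 15 are hypothetical, and the right interval for your deployment is something to test rather than copy. `autoLBFrequency` is given in seconds, and Splunk's default is 30.

```python
import configparser
import io

conf = configparser.ConfigParser()
conf.optionxform = str  # keep key case; Splunk settings are case-sensitive

conf["tcpout"] = {"defaultGroup": "primary_indexers"}
conf["tcpout:primary_indexers"] = {
    # Placeholder indexers in one load-balanced output group.
    "server": "idx1.example.com:9997, idx2.example.com:9997",
    # Seconds before the forwarder switches to another indexer; a shorter
    # interval spreads data across the indexers more often.
    "autoLBFrequency": "15",
}

buf = io.StringIO()
conf.write(buf)
print(buf.getvalue())
```

Distributing data more evenly only helps if some indexers are hotter than others; if every indexer is saturated on disk writes, the storage itself is still the limit.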


Yes, it's the indexers. I'll get some more info.