- Why is copy-truncate a low-quality log-rotation st...

I've been told that the copy-truncate pattern is a poor choice for log rotation, and that it should only be used when there is no other choice. Why is this?

The copy-truncate pattern has some quality issues because there is no way to ensure all data is retained. There is an inherent race condition between the logging application and the program performing the copy & truncate. Data can be written to the file after the copy and before the truncate. This data will be lost.
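The race described above can be reproduced deterministically. The following is a minimal sketch, not a model of any particular rotation tool: the file names `app.log` and `app.log.1` and the function name are hypothetical, and the "late" write is placed between the copy and the truncate on purpose to show where it ends up.

```python
import os
import shutil
import tempfile


def copy_truncate_with_late_write(lines_before, late_line):
    """Rotate a log by copy-then-truncate, with one write landing in between.

    Returns (rotated_contents, live_contents_after_truncate).
    """
    workdir = tempfile.mkdtemp()
    live = os.path.join(workdir, "app.log")       # hypothetical live log
    rotated = os.path.join(workdir, "app.log.1")  # hypothetical rotated copy

    # The application writes some events before rotation starts.
    with open(live, "a") as f:
        for line in lines_before:
            f.write(line + "\n")

    # Rotation step 1: copy the live file.
    shutil.copyfile(live, rotated)

    # The application keeps logging; this write lands after the copy.
    with open(live, "a") as f:
        f.write(late_line + "\n")

    # Rotation step 2: truncate the live file to zero length.
    with open(live, "r+") as f:
        f.truncate(0)

    with open(rotated) as f:
        rotated_contents = f.read()
    with open(live) as f:
        live_contents = f.read()

    shutil.rmtree(workdir)
    return rotated_contents, live_contents


rotated, live = copy_truncate_with_late_write(["event 1", "event 2"], "event 3")
# "event 3" is in neither file: it missed the copy and was erased by the truncate.
print("event 3" in rotated, "event 3" in live)
```

In a real system the window between the two steps is short, but nothing the rotating program does can close it, because the logging application is not coordinating with it.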

Additionally, copy-truncate requires two extra I/Os for every log-write. Every log-write will need to be later read back, and written out again by the copy operation. Therefore, this pattern will exhaust I/O resources more readily.
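As a rough illustration of that cost, the arithmetic can be written out. This is a back-of-the-envelope sketch under simplifying assumptions: it counts only bytes moved through the file, treats a rename-based rotation as free of data I/O, and ignores caching and compression. The function name and strategy labels are made up for the example.

```python
def rotation_io_bytes(log_bytes, strategy):
    """Approximate bytes of file I/O over the life of one log file."""
    if strategy == "rename":
        # Each byte is written once by the application; rotation moves no data.
        return {"read": 0, "written": log_bytes}
    if strategy == "copytruncate":
        # Each byte is written once, read back by the copy, and written again.
        return {"read": log_bytes, "written": 2 * log_bytes}
    raise ValueError(f"unknown strategy: {strategy}")


one_gib = 1024 ** 3
print(rotation_io_bytes(one_gib, "rename"))
print(rotation_io_bytes(one_gib, "copytruncate"))
```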

With Splunk specifically, copy-truncate requires handling a large number of additional edge-cases, such as encountering the copy in the process of being built (you would want us to recognize this as an already-handled file), and reading from an open logfile during truncation. The latter problem is potentially not solvable in complex situations.

For example, Splunk could be in a situation where it reads the first half of an event (more likely for large events), and then the file is truncated (reduced to zero length) before we can read the second half of an event. Should we send it on as is, potentially delivering a broken half-event to the index? Should we drop it, potentially losing the only half of the data we will ever gain access to?
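To make the dilemma concrete, here is a toy tailing reader. It is only a sketch and is not how Splunk's file monitor is implemented: the `TailReader` class, its "file is smaller than my offset" truncation check, and newline-delimited events are all assumptions chosen for illustration.

```python
import os
import tempfile


class TailReader:
    """Toy line-oriented tailer (illustrative only)."""

    def __init__(self, path):
        self.path = path
        self.offset = 0      # bytes consumed so far
        self.partial = b""   # bytes read that do not yet end in a newline

    def poll(self):
        """Return (complete_events, orphaned_partial_or_None)."""
        orphan = None
        if os.path.getsize(self.path) < self.offset:
            # The file shrank, so assume a rotation truncated it. Whatever is
            # in self.partial is the first half of an event whose second half
            # will never be readable from this file.
            orphan = self.partial or None
            self.partial = b""
            self.offset = 0
        with open(self.path, "rb") as f:
            f.seek(self.offset)
            data = f.read()
        self.offset += len(data)
        # Everything before the last newline is complete; the rest is held back.
        *events, self.partial = (self.partial + data).split(b"\n")
        return events, orphan


def demo():
    fd, path = tempfile.mkstemp()
    os.close(fd)
    reader = TailReader(path)

    # The application has written one full event and half of the next.
    with open(path, "ab") as f:
        f.write(b"event 1\nfirst half of ev")
    first = reader.poll()

    # Rotation truncates the file before the reader sees the second half.
    with open(path, "r+b") as f:
        f.truncate(0)
    second = reader.poll()

    os.remove(path)
    return first, second


first, second = demo()
print(first)
print(second)
```

The second poll hands back the orphaned half-event, and the caller has to choose between forwarding it broken or dropping it. Note also that the size check is itself a heuristic: if the file is truncated and then grows past the old offset before the next poll, this reader would not notice the truncation at all.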

In general, of course, Splunk should be well-behaved to the extent possible in the face of copy-truncate. Also, for applications which log to stderr or other applications which have no support for ever reopening their logfile, there may be no other option for file management than copy-truncate.
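For contrast, an application that can reopen its logfile allows rotation by rename, with no copy and no truncate. The sketch below uses Python's standard `logging.handlers.WatchedFileHandler`, which reopens its file when the path stops pointing at the file it has open (the Python documentation notes it is not appropriate on Windows). The logger name and file names are hypothetical, and this only illustrates the reopen idea; it is not a recommendation specific to any application.

```python
import logging
import logging.handlers
import os
import shutil
import tempfile


def rename_rotation_demo():
    workdir = tempfile.mkdtemp()
    live = os.path.join(workdir, "app.log")       # hypothetical live log
    rotated = os.path.join(workdir, "app.log.1")  # hypothetical rotated name

    handler = logging.handlers.WatchedFileHandler(live)
    logger = logging.getLogger("rename_rotation_demo")
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.addHandler(handler)

    logger.info("before rotation")
    os.rename(live, rotated)       # rotation: a rename, no data is copied
    logger.info("after rotation")  # handler sees the path changed and reopens

    logger.removeHandler(handler)
    handler.close()

    with open(rotated) as f:
        old = f.read()
    with open(live) as f:
        new = f.read()
    shutil.rmtree(workdir)
    return old, new


old, new = rename_rotation_demo()
print(repr(old), repr(new))
```

Every event lands in exactly one file, and a reader that had the old file open can finish reading it under its new name.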

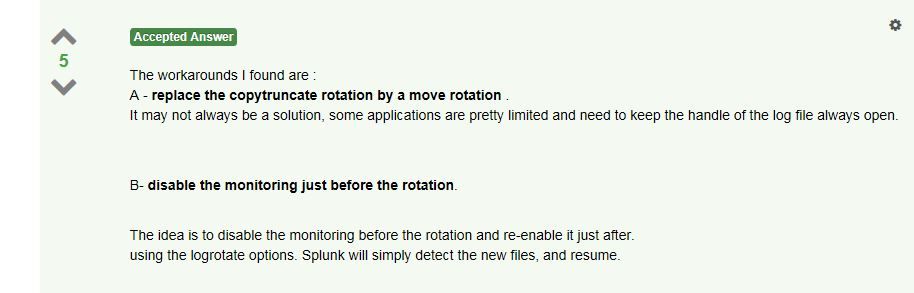

@rjthibod about alternatives - Why copytruncate logrotate does not play well with splunk monitoring

It says -

My viewpoint is that where a workaround can solve a problem with monitoring, Splunk should probably try to solve that problem automatically so that workarounds are not needed. Not to set unrealistic expectations: some types of problems would require redesigns of the Splunk file monitoring component, which could be very expensive and take a long time to become available. However, as a rule, we have continuously added fixes and improvements to handle edge cases like this over the years.

The linked answer is about one such specific situation. I do not know, at the moment, whether we have shipped improvements to better handle that case since 2014.

Can you point to another post / link that demonstrates or explains a more suitable approach for Splunk use cases?

For now, I wrote about this a little more over here: https://answers.splunk.com/answers/49663/log-rotation-best-practices.html#answer-468630

However, the aim of writing these was to build content that I hope to pull into the main Splunk web documentation soon.