- Why does indexer not index file coming from a Wind...

Hello,

I don't understand why a file coming from a Windows-based UF does not get indexed properly. By this I mean that some fields contain newlines that Splunk interprets as event delimiters, turning a single event into multiple events. I can index that same file manually or from a folder input on a Unix filesystem without issue.

I am using Splunk Enterprise 8.0.5 with UF 8.0.5 running on a Windows 10 VM. I am trying to index ServiceNow ticket data contained in an ANSI-encoded CSV file. Scenarios that work and do not work:

- I upload the CSV manually through the "Add Data" wizard, specifying the sourcetype, and it works

- I deposit the CSV in a local folder on the same VM where Splunk Enterprise is installed, configured with the same sourcetype, and it works

- I deposit the CSV in a local folder on the same VM where the UF is installed, configured with the same sourcetype, and it does not work

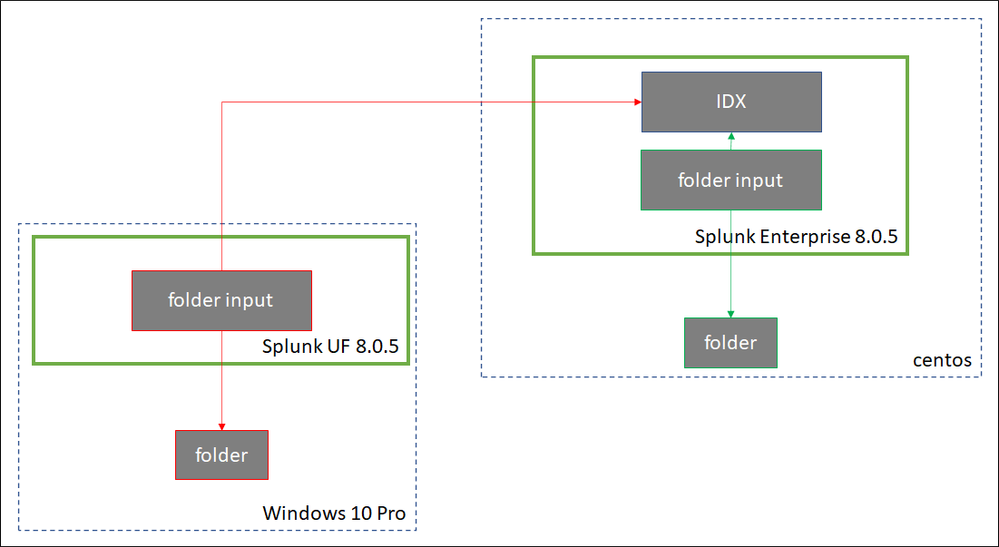

I have no idea why this last scenario does not work. Here is a simple diagram outlining the second and third scenarios (the third one doesn't work and is highlighted in red):

Here is the file I am trying to index (contains one ticket):

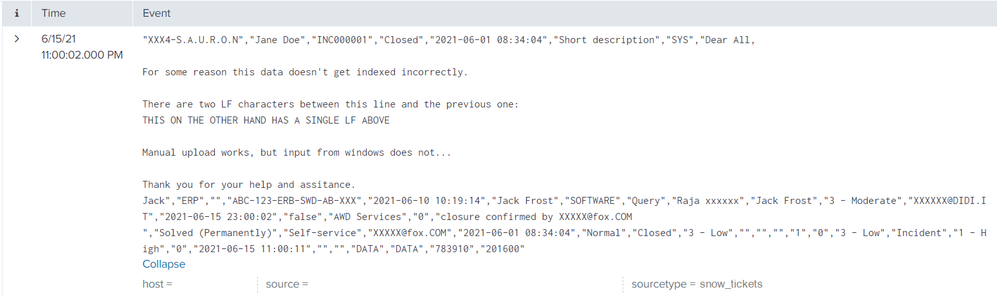

"company","opened_by","number","state","opened_at","short_description","cmdb_ci","description","subcategory","hold_reason","assignment_group","resolved_at","resolved_by","category","u_category","assigned_to","closed_by","priority","sys_updated_by","sys_updated_on","active","business_service","child_incidents","close_notes","close_code","contact_type","sys_created_by","sys_created_on","escalation","incident_state","impact","parent","parent_incident","problem_id","reassignment_count","reopen_count","severity","sys_class_name","urgency","u_steps","closed_at","sys_tags","reopened_by","u_process","u_reference_area","calendar_duration","business_duration"

"XXX4-S.A.U.R.O.N","Jane Doe","INC000001","Closed","2021-06-01 08:34:04","Short description","SYS","Dear All,

For some reason this data doesn't get indexed incorrectly.

There are two LF characters between this line and the previous one:

THIS ON THE OTHER HAND HAS A SINGLE LF ABOVE

Manual upload works, but input from windows does not...

Thank you for your help and assitance.

Jack","ERP","","ABC-123-ERB-SWD-AB-XXX","2021-06-10 10:19:14","Jack Frost","SOFTWARE","Query","Raja xxxxxx","Jack Frost","3 - Moderate","XXXXXX@DIDI.IT","2021-06-15 23:00:02","false","AWD Services","0","closure confirmed by XXXXX@fox.COM

","Solved (Permanently)","Self-service","XXXXX@fox.COM","2021-06-01 08:34:04","Normal","Closed","3 - Low","","","","1","0","3 - Low","Incident","1 - High","0","2021-06-15 11:00:11","","","DATA","DATA","783910","201600"

Here is the props.conf stanza, which has been placed exclusively on the indexer:

[snow_tickets]

LINE_BREAKER = ([\r\n])+

DATETIME_CONFIG =

NO_BINARY_CHECK = true

MAX_EVENTS = 20000

TRUNCATE = 20000

TIME_FORMAT = %Y-%m-%d %H:%M:%S

TZ = Europe/Rome

category = Structured

pulldown_type = 1

disabled = false

INDEXED_EXTRACTIONS = csv

KV_MODE =

SHOULD_LINEMERGE = false

TIMESTAMP_FIELDS = sys_updated_on

CHARSET = MS-ANSI

BREAK_ONLY_BEFORE_DATE =

Here is a screenshot of the data coming from the local folder (works):

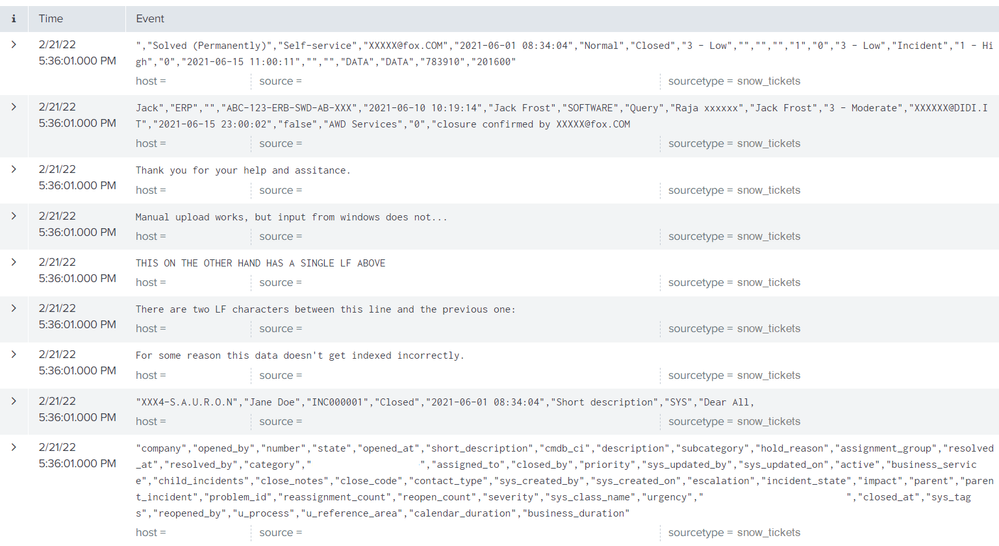

Here is what it looks like when it comes from the Windows UF:

As you can see, it treats a newline as a new event and does not seem to recognise the sourcetype.

Is there any blatantly obvious thing that I've missed? Any push in the right direction would be great!

Thank you and best regards,

Andrew
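
To make the symptom concrete: a quote-aware CSV parser should treat the whole ticket as one record even though the description field contains LF characters. Here is a minimal Python sketch of that expectation. The `SAMPLE` text and the `parse_tickets` helper are hypothetical stand-ins (a shortened version of the export above), not anything Splunk runs internally.

```python
import csv
import io

# Hypothetical, shortened stand-in for the ticket export: one header row and
# one ticket whose "description" field contains embedded LF characters.
SAMPLE = (
    '"number","state","description","priority"\n'
    '"INC000001","Closed","Dear All,\n'
    '\n'
    'There are two LF characters above this line.\n'
    'THIS ONE HAS A SINGLE LF ABOVE","3 - Moderate"\n'
)


def parse_tickets(text):
    """Parse CSV text with a quote-aware reader and return a list of dicts."""
    # newline="" leaves line endings untouched so the csv module can keep
    # the newlines that sit inside quoted fields.
    return list(csv.DictReader(io.StringIO(text, newline="")))


tickets = parse_tickets(SAMPLE)
physical_lines = SAMPLE.splitlines()

print(len(physical_lines))  # physical lines in the file, header included
print(len(tickets))         # logical CSV records, header excluded
print(repr(tickets[0]["description"]))
```

Comparing the number of logical records with the number of events that show up in the index is a quick way to tell whether the multi-line fields were split.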

With structured data such as CSV/JSON, the data can be parsed on the Universal Forwarder (UF), so you would need the props.conf that usually goes on the indexers to be on the UF.

You say it's a CSV file but where are the header fields?

@m_pham Moving props.conf to the UF worked, thank you for the reply! I thought props.conf should only be on the indexer, so I guess the exception is for structured data. As for the header, it's in the post above, first line. I guess there are so many fields it just seems like an event...

It's not widely known until you run into it, and the relevant Docs pages are scattered around at times.

You can read more about it here in the section below:

Well, you have LINE_BREAKER set to ([\r\n]+).

Why shouldn't Splunk break the line at LINE_BREAKER?
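
As a rough illustration of this point, a pattern that matches runs of CR/LF characters has no notion of CSV quoting, so it will cut through a quoted field wherever a newline appears. The Python sketch below only approximates a newline-based breaker with `re.split`. It does not reproduce Splunk's capture-group handling, and the `RECORD` text and `split_on_newlines` helper are hypothetical.

```python
import re

# Rough approximation of a newline-based LINE_BREAKER: split on runs of CR/LF.
# Assumption: this ignores Splunk's capture-group semantics and only shows
# that the pattern is unaware of CSV quoting.
NEWLINE_RUN = re.compile(r"[\r\n]+")

# Hypothetical, shortened record with LF characters inside a quoted field.
RECORD = '"INC000001","Closed","Dear All,\n\nManual upload works.\nJack","ERP"\n'


def split_on_newlines(text):
    """Return the non-empty fragments left after splitting on newline runs."""
    return [part for part in NEWLINE_RUN.split(text) if part]


fragments = split_on_newlines(RECORD)
for fragment in fragments:
    print(repr(fragment))
```

One record comes out as several fragments, which matches the multiple events seen from the UF input. It also fits the resolution reported earlier in the thread, where placing the structured-data props.conf on the UF made the file index as expected.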