Why am I receiving unwanted HB (Heartbeat ?) logs from my Heavy Forwarder?

Hello Splunkers,

Here is my use case: I am cloning some events that arrive at my Heavy Forwarder and then forwarding those cloned events to another Splunk (standalone, free trial) machine.

I am able to receive the logs on my targeted machine with the following configuration :

On my HF (sender / forwarder)

transforms.conf

[srctype-clone]

CLONE_SOURCETYPE = mynewsrctype

REGEX = .*

DEST_KEY = _TCP_ROUTING

FORMAT = tcp_output_conf

outputs.conf

[tcpout:tcp_output_conf]

server = <ip>:<port>

sendCookedData = false

On my Splunk standalone machine (receiver)

inputs.conf

[tcp://15601]

disabled = false

index = whatever_index

sourcetype = mynewsrctype

Based on that I have some questions...

- First, I am receiving the logs, but also some unwanted logs containing only "HB". Do they correspond to heartbeats? Why am I receiving them?

- Should I use splunktcp instead of tcp on my receiver?

- Should I use enableS2SHeartbeat = true on my receiver?

- Should I use sendCookedData = true on my sender?

Thanks a lot for your help !

Regards,

GaetanVP

To be precise, you're not sending the cloned events to external destination. You're indexing them locally (or sending via default output) but sending out the "primary" events.

Also, as @isoutamo already pointed out, you should be using s2s for sending events from one splunk instance to another (especially that you're using HF so you'll be sending parsed events and you'll save some CPU time at destination machine at cost of increased transfer bandwidth).

Of course you can send the data raw but it doesn't make much sense because you have to parse it again (but I can think of a use case where that's actually a desirable thing).

So YMMV

Hello @PickleRick, thanks for your answer. Here are a few points I wanted to discuss!

> To be precise, you're not sending the cloned events to external destination.

Are you sure about that point? I am pretty sure I am sending the cloned events, since I assigned them a new sourcetype and applied some anonymization to them using the new props/transforms mechanism.

Also when I checked the logs in my external destination, I can confirm that the sourcetype I see is "mynewsrctype".
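The anonymization itself is not shown in this thread. As a minimal sketch of what it might look like, assuming a SEDCMD attached to the cloned sourcetype: the class name `mask_ids` and the pattern (masking values shaped like 123-45-6789) are made up for illustration and are not the configuration actually used here.

```ini
# props.conf on the HF (illustrative only, not the real config)
[mynewsrctype]
# Mask anything shaped like 123-45-6789 in the cloned events
SEDCMD-mask_ids = s/\d{3}-\d{2}-\d{4}/XXX-XX-XXXX/g
```

Because the stanza is keyed on the new sourcetype, the events under the original sourcetype would be left untouched.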

> Also, as @isoutamo already pointed out, you should be using s2s for sending events from one splunk instance to another (especially that you're using HF so you'll be sending parsed events and you'll save some CPU time at destination machine at cost of increased transfer bandwidth).
>
> Of course you can send the data raw but it doesn't make much sense because you have to parse it again (but I can think of a use case where that's actually a desirable thing).

Makes sense, I didn't think about the increase of transfer bandwidth, this is very interesting. As you correctly guessed, I prefer to send cooked data !

Thanks for your time,

GaetanVP

Yes I'm sure 🙂

[srctype-clone]

CLONE_SOURCETYPE = mynewsrctype

REGEX = .*

DEST_KEY = _TCP_ROUTING

FORMAT = tcp_output_conf

This transform tells Splunk to:

1) Make a copy of the event, assign it a sourcetype of "mynewsrctype"

2) Rewrite _TCP_ROUTING key _in this event that you're processing_.

So you're gonna have a copy of event with the mynewsrctype pushed to default destination and an event with the old sourcetype pushed to specific output.

At least that's what the docs say happens. (and my use of CLONE_SOURCETYPE should be pretty consistent with the docs).

Hello @PickleRick !

I am a bit afraid to contradict an Ultra Champion but still, let's continue the discussion ! 😋

1) I agree with this first point

2) To me, the _TCP_ROUTING will be changed on the cloned events, not on the original ones

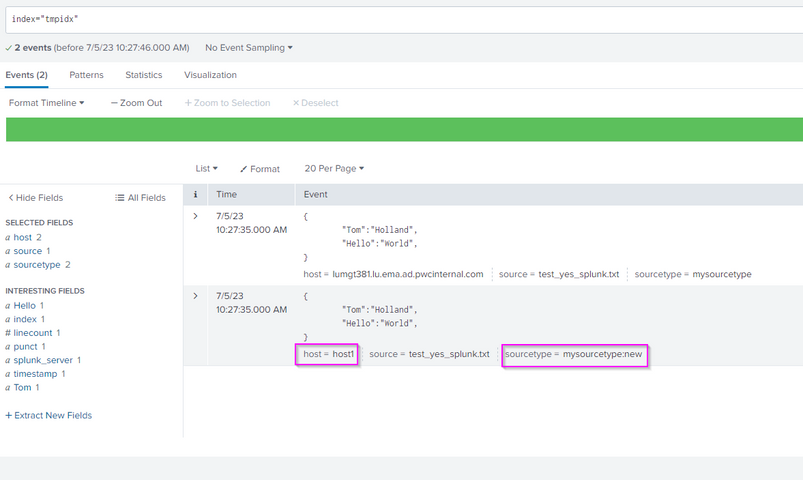

Here is a test I made (on a standalone Splunk Enterprise test VM) :

props.conf

[mysourcetype]

TRANSFORMS-foo-clone = bar-clone

transforms.conf

[bar-clone]

CLONE_SOURCETYPE = mysourcetype:new

REGEX = "Hello":\s*"World"

DEST_KEY = MetaData:Host

FORMAT = host1

Here is the sample of data that I uploaded to Splunk (via the GUI) and assigned the "mysourcetype" sourcetype:

{

"Tom":"Holland",

"Hello":"World",

}

In the end, here is the result:

As you can see, it is the host of the duplicated event (with the "mysourcetype:new") that has been changed, and not the original one.

Let me know if I missed something here !

Thanks for your time,

GaetanVP

That is indeed interesting, and it would be great if you submitted feedback on the docs, because the docs do suggest otherwise. I was also pretty sure it worked otherwise, but I must say that I might have used only CLONE_SOURCETYPE on its own, as the only operation within a transform. This might be the difference here. Apparently, if it is combined with other operations within a single transform, it works a bit counter-intuitively (at least for me).
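For reference, the behaviour observed in the test above can be annotated directly on the original transform. This is only a sketch based on that test, not on documented behaviour. The props.conf stanza name `original_sourcetype` and the class name `clone` are placeholders, since the HF's props.conf is not shown in this thread.

```ini
# props.conf on the HF
# (stanza name is a placeholder for the incoming sourcetype)
[original_sourcetype]
TRANSFORMS-clone = srctype-clone

# transforms.conf on the HF
[srctype-clone]
# Creates a copy of each matching event with the new sourcetype
CLONE_SOURCETYPE = mynewsrctype
REGEX = .*
# In the test above, the DEST_KEY/FORMAT rewrite landed on the clone,
# so the clone would be the event routed to the tcp_output_conf group
DEST_KEY = _TCP_ROUTING
FORMAT = tcp_output_conf
```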

@PickleRick is correct that you are sending the original, not the cloned, sourcetype.

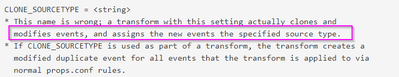

* Specifically, for each event handled by this transform, a near-exact copy is made of the original event, and the transformation is applied to the copy. The original event continues along normal data processing unchanged.

* The <string> used for CLONE_SOURCETYPE selects the source type that is used for the duplicated events.

If you do see mynewsrctype on your remote target, then the description above is not accurate. You should send feedback to the docs team so they can update it if needed.

Hi

When you are forwarding to another Splunk instance, you should use s2s, not tcp, as the protocol. So remove sendCookedData or change its value to true. As you'd expect, the receiving input must be splunktcp, just like on your primary Splunk instance.

r. Ismo

Hello @isoutamo and thanks a lot for your answer.

Would you have some documentation on how to implement an s2s connection? I mean, is it only a matter of using a "splunktcp" stanza in inputs.conf instead of a "tcp" one? Or is there other configuration to consider?

Regards,

GaetanVP

It's just like that. It's Splunk's default protocol. TCP and UDP are pure IP protocols, not Splunk-specific ones.

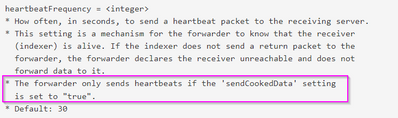

Hello @isoutamo, I still do not understand something...

In my Heavy Forwarder's outputs.conf, sendCookedData = false is clearly specified.

Based on this doc: https://docs.splunk.com/Documentation/Splunk/9.1.0/Admin/Outputsconf

I should not receive any heartbeat on my receiver.

But I was not even sure that these HB logs were really heartbeats...

When I try to use splunktcp inputs, I am back to the following issue that I am currently trying to solve.

Thanks for your time,

GaetanVP

Your outputs.conf should be something like

[tcpout]

defaultGroup = splunk_demo

[tcpout:splunk_demo]

server = 192.168.x.x:9997

This will use the normal s2s protocol between these two servers. This also sets sendCookedData = true (the default).

Then your free instance has normal inputs.conf like

[splunktcp://9997]

disabled = 0

Then it should work. If not, you should run

splunk btool inputs list --debug

on your free instance, and the same for outputs on your HF, to see if there is some setting that disturbs normal S2S traffic.

The only reason to use pure tcp without s2s (i.e. without cooked data) is to manipulate events on the target server too.
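Putting the advice in this thread together with the original cloning use case, the configuration could look roughly like the sketch below. It assumes the HF already forwards everything else to its usual indexers through a default group. The group name `primary_indexers` and the `<primary_ip>` address are placeholders that do not appear in the thread.

```ini
# outputs.conf on the HF (sketch; names and addresses are placeholders)
[tcpout]
# Everything not explicitly routed goes to the usual indexers
defaultGroup = primary_indexers

[tcpout:primary_indexers]
server = <primary_ip>:9997

# Target for events routed with _TCP_ROUTING = tcp_output_conf
[tcpout:tcp_output_conf]
server = <ip>:<port>
# sendCookedData is left at its default (true), so s2s is used

# inputs.conf on the receiving instance
[splunktcp://<port>]
disabled = 0
```

With this layout, the receiver no longer needs the index and sourcetype overrides from the raw tcp stanza, since cooked events carry their own metadata.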