Unable to get SEDCMD to mask SSNs (on Indexer)

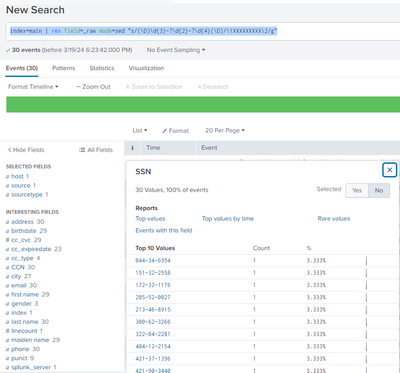

Hello, I am testing using SEDCMD on a single Splunk server architecture.

Below is the current configuration, which is placed in /opt/splunk/etc/system/local/. I am uploading a CSV file that contains (fake) personal data, including SSNs in two formats (xxx-xx-xxxx and xxxxxxxxx). The masking is not working when I upload the CSV file. Can someone point me in the right direction?

props.conf

### CUSTOM ###

[csv]

SEDCMD-redact_ssn = s/\b\d{3}-\d{2}-\d{4}\b/XXXXXXXXX/g

Included below is FAKE individual data pulled from the CSV file for testing:

514302782,f,1986/05/27,Nicholson,Russell,Jacki,3097 Better Street,Kansas City,MO,66215,913-227-6106,jrussell@domain.com,a,345389698201044,232,2010/01/01

505-88-5714,f,1963/09/23,Mcclain,Venson,Lillian,539 Kyle Street,Wood River,NE,68883,308-583-8759,lvenson@domain.com,d,30204861594838,471,2011/12/01
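Before looking at where the setting is applied, it may help to check what the configured expression can match at all. The sketch below runs it against the two sample rows using Python's `re` module as a stand-in for Splunk's regex engine (an assumption; `\b`, `\d` and `{n}` should behave the same way on this input). The names `SSN_PATTERN`, `mask` and `rows` are made up for the illustration.

```python
import re

# Same expression as in the SEDCMD above, without the s/.../.../g wrapper.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

def mask(line: str) -> str:
    """Replace every match with the literal used in the SEDCMD."""
    return SSN_PATTERN.sub("XXXXXXXXX", line)

rows = [
    "514302782,f,1986/05/27,Nicholson,Russell,Jacki,3097 Better Street,Kansas City,MO,66215,913-227-6106,jrussell@domain.com,a,345389698201044,232,2010/01/01",
    "505-88-5714,f,1963/09/23,Mcclain,Venson,Lillian,539 Kyle Street,Wood River,NE,68883,308-583-8759,lvenson@domain.com,d,30204861594838,471,2011/12/01",
]

for row in rows:
    # Show only the first field of each result to keep the output short.
    print(mask(row).split(",")[0])
```

In this sketch only the hyphenated SSN is replaced. The nine-digit form has no hyphens, so this expression would leave it in clear text even if the SEDCMD were being applied.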

The default csv sourcetype has

INDEXED_EXTRACTIONS=csv

It changes how the data is processed. Even if the SEDCMD is applied (of which I'm not sure), the fields are already extracted and since you're only editing _raw, you're not changing already extracted fields.
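The ordering problem described here can be pictured with a toy model. This is not Splunk's actual pipeline, only a Python illustration of "extract the fields first, then edit the raw text"; the order of the two steps is an assumption taken from the reply above, and the row is a shortened copy of one sample line.

```python
import csv
import re

raw = "505-88-5714,f,1963/09/23,Mcclain,Venson,Lillian"  # shortened sample row

# Step 1 (toy stand-in for index-time CSV extraction): split into fields first.
fields = next(csv.reader([raw]))

# Step 2: mask the raw text only, as a SEDCMD acting on _raw would.
masked_raw = re.sub(r"\b\d{3}-\d{2}-\d{4}\b", "XXXXXXXXX", raw)

print(masked_raw)  # the raw text no longer contains the SSN
print(fields[0])   # the field extracted in step 1 still does
```

If the real processing order resembles this, masking `_raw` alone would not be enough for a sourcetype with index-time extractions.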

Ah, OK. So I need to test this a different way and update the SEDCMD setting to reference the new sourcetype.

What's the next easiest method to test? Set up a UF with a file monitor?

No, you can just define another sourcetype and upload the file to your all-in-one instance. The trick will be handling the CSV fields properly. If I remember correctly, with INDEXED_EXTRACTIONS=csv Splunk uses (by default) the first line of the input file to determine the field names. Without it, you need to name the fields explicitly and set the proper FIELD_DELIMITER so that Splunk knows what the fields are (or write a very ugly regex-based extraction pattern).

There is a new MASA diagram on Slack that shows how those pipelines work and which conf files (and parameters) affect those events. https://splunk-usergroups.slack.com/archives/CD9CL5WJ3/p1710515462848799?thread_ts=1710514363.198159...

I just found this, from this admin guide:

To anonymize data with Splunk Enterprise, you must configure a Splunk Enterprise instance as a heavy forwarder and anonymize the incoming data with that instance before sending it to Splunk Enterprise.

Other documents had previously said this can be performed on either the indexer or a heavy forwarder. I wonder if this is why it isn't working?

https://docs.splunk.com/Documentation/Splunk/9.2.0/Data/Anonymizedata

It's possible Splunk doesn't like the \b metacharacter. Try this alternative.

SEDCMD-redact_ssn = s/(\D)\d{3}-?\d{2}-?\d{4}(\D)/\1XXXXXXXXX\2/g

I also modified the regex to preserve the characters before and after the SSN and to make the hyphens optional.

If this reply helps you, Karma would be appreciated.
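One thing worth checking with this variant: `(\D)` has to consume a non-digit character on each side, so an SSN that is the very first field of a line has nothing in front of it to match. A quick sketch in Python (used as a stand-in for Splunk's regex engine, which is an assumption) against shortened copies of the two sample rows, plus one hypothetical row where the SSN sits mid-line:

```python
import re

# Pattern and replacement from the suggested SEDCMD; Python also accepts \1 and \2.
PATTERN = re.compile(r"(\D)\d{3}-?\d{2}-?\d{4}(\D)")
REPLACEMENT = r"\1XXXXXXXXX\2"

def mask_variant(line: str) -> str:
    """Apply the suggested substitution to one line."""
    return PATTERN.sub(REPLACEMENT, line)

samples = [
    "514302782,f,1986/05/27,Nicholson",  # from the question: SSN at start of line
    "505-88-5714,f,1963/09/23,Mcclain",  # from the question: SSN at start of line
    "id=7,505-88-5714,f,1963/09/23",     # hypothetical row: SSN in the middle
]

for line in samples:
    print(mask_variant(line))
```

In this sketch the first two lines come back unchanged and only the hypothetical third line is masked, which points at the position of the SSN in the row rather than at `\b`.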

Thanks for the info!

I deleted the events, updated props.conf, restarted Splunk, then uploaded the CSV again, but it is still not working.

Hi @srseceng ,

how are you ingesting these logs: from a Universal Forwarder or from a Heavy Forwarder?

If from a Heavy Forwarder, the props.conf containing the SEDCMD must be located on the HF.

If you receive these logs from a Universal Forwarder and there isn't any intermediate Heavy Forwarder, the props.conf can be located on the indexers.

In other words, parsing and typing are done in the first full Splunk instance that the data passes through.

Then, check if the regex and the sourcetype are correct.

Ciao.

Giuseppe
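The rule of thumb in this reply can be written down as a small toy model. The role labels and the function name `parsing_host` are illustrative only and do not correspond to any Splunk API; the sketch simply returns the first full instance on a data path, which is where the props.conf with the SEDCMD would need to live according to the reply.

```python
from typing import List, Optional

# Toy model of the rule of thumb above; role labels are illustrative only.
FULL_INSTANCES = {"heavy_forwarder", "indexer"}

def parsing_host(path: List[str]) -> Optional[str]:
    """Return the first full Splunk instance on the data path, if any."""
    for role in path:
        if role in FULL_INSTANCES:
            return role
    return None

print(parsing_host(["universal_forwarder", "indexer"]))
print(parsing_host(["universal_forwarder", "heavy_forwarder", "indexer"]))
print(parsing_host(["indexer"]))  # e.g. a file uploaded directly on an all-in-one server
```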

Because this is a test environment, the logs are being added through the UI's "Add Data" > "Upload" feature. I have a CSV file that contains the logs.

Is this a valid test method?

Have you selected the correct sourcetype (csv) when uploading it?

Yes, it auto-selects "CSV" during import, but I have also manually selected CSV to see if there was a bug there.

Hi @srseceng ,

OK, so you are using Add Data on the same indexer, I suppose.

In this case, the thing to investigate is the regex: what happens when you run the sed regex in the Splunk UI?

Are you sure about the sourcetype?

Did you restart Splunk after the props.conf update?

Sorry for the stupid questions, but "Once you eliminate the impossible, whatever remains, no matter how improbable, must be the truth" (Sir Arthur Conan Doyle)!

Ciao.

Giuseppe

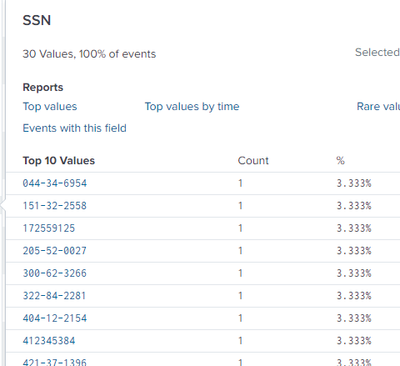

If I run this:

index=main | rex field=_raw mode=sed "s/(\D)\d{3}-?\d{2}-?\d{4}(\D)/\1XXXXXXXXX\2/g"

I get all of the results back, but the SSNs are still in clear text (not redacted).
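A possible explanation for this result: the expression in the search needs a non-digit character before the SSN, and in both sample rows the SSN is the first thing on the line. The sketch below tries a hypothetical variant that uses lookarounds instead of consuming groups, so the start and end of the line are allowed. It is tested here only with Python's `re` module; lookarounds are part of PCRE, but whether this exact expression behaves the same in `rex mode=sed` or SEDCMD has not been verified here.

```python
import re

# Hypothetical variant: the lookarounds assert "no digit on either side"
# without consuming a character, so line start and line end are allowed.
SSN = re.compile(r"(?<!\d)\d{3}-?\d{2}-?\d{4}(?!\d)")

def redact(line: str) -> str:
    """Mask both SSN formats wherever they are not part of a longer digit run."""
    return SSN.sub("XXXXXXXXX", line)

rows = [
    "514302782,f,1986/05/27,Nicholson,Russell,Jacki,3097 Better Street,Kansas City,MO,66215,913-227-6106,jrussell@domain.com,a,345389698201044,232,2010/01/01",
    "505-88-5714,f,1963/09/23,Mcclain,Venson,Lillian,539 Kyle Street,Wood River,NE,68883,308-583-8759,lvenson@domain.com,d,30204861594838,471,2011/12/01",
]

for row in rows:
    print(redact(row))
```

In this sketch both leading SSNs are masked while the phone numbers and the longer card-like numbers are left alone. Note that any other standalone nine-digit value in the data would be masked as well.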