Splunk doesn't format Sysmon JSON correctly

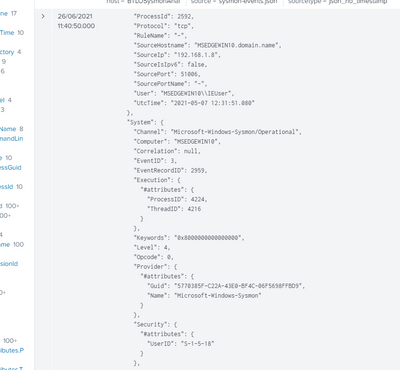

Here is the problem. I have a Sysmon JSON file to examine. Everything looks fine in the "Add Data" preview, but at search time the events are joined and split with no apparent pattern.

After thinking about it for a moment, I noticed that the file has no timestamps and Splunk was complaining about that. I fixed that, but the issue is still there.

The problem looks like this:

Sometimes an event begins correctly but merges with other events before it ends, and other events (like the example above) are missing their beginning.

I'm not using any plug-ins or apps. I just clicked Add Data, selected the file as non-timestamped JSON, and started searching. How would you solve this? The JSON file is downloadable from Blue Team Labs Online.


Check the following:

1) In inputs.conf, which sourcetype are you using?

2) The size of the events. I have many JSON events that are larger than 10,000 bytes each, so Splunk truncates them (by default, to lighten the aggregation/parsing process).

3) If, as in point 2, your events are larger than 10,000 bytes each, set TRUNCATE = 0 in props.conf, in the stanza for the sourcetype you are using:

TRUNCATE = <non-negative integer>
* The default maximum line length, in bytes.
* Although this is in bytes, line length is rounded down when this would otherwise land mid-character for multi-byte characters.
* Set to 0 if you never want truncation (very long lines are, however, often a sign of garbage data).
* Default: 10000

You can see truncation warnings in splunkd.log on the indexer(s). If you have a heavy forwarder (HF), the truncation may happen there, before the data reaches the indexer(s):

WARN LineBreakingProcessor - Truncating line because limit of 10000 bytes has been exceeded with a line length >= 65536 - data_source="/xxxxxxxxxx/xxxxxxxxxx", data_host="xxxxxxxxxx", data_sourcetype="xxxxxxxxxx"

4) If truncation is not the problem, you could try the default "_json" sourcetype, or set

INDEXED_EXTRACTIONS = <CSV|TSV|PSV|W3C|JSON|HEC>

in props.conf (with the value JSON) so that events are parsed correctly.

Try both and see which one works better.
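As a rough sketch of how points 3 and 4 could be combined, a props.conf stanza might look like the one below. The stanza name `sysmon_json_sketch` is a hypothetical sourcetype name used only for illustration, and the line-breaking settings assume the file holds one JSON object per line, which has not been confirmed for this particular file. Treat it as a starting point to test in the "Add Data" preview, not as a verified fix.

```ini
# props.conf (illustrative sketch; adjust to your own sourcetype)
# "sysmon_json_sketch" is a hypothetical sourcetype name.
[sysmon_json_sketch]

# Assumption: one JSON object per line, so break events on newlines
# and do not let Splunk merge lines back together.
SHOULD_LINEMERGE = false
LINE_BREAKER = ([\r\n]+)

# Disable the default 10000-byte truncation for long events.
TRUNCATE = 0

# Parse the JSON fields at index time.
INDEXED_EXTRACTIONS = JSON

# Avoid extracting the same JSON fields a second time at search time.
KV_MODE = none

# Assumption: the events carry no usable timestamp, so use the
# time at which the data is indexed.
DATETIME_CONFIG = CURRENT
```

The stanza has to be on the instance that parses the data, and the sourcetype chosen at upload must match the stanza name. If TRUNCATE = 0 feels too permissive, a large finite value is a more cautious alternative.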
