Scripted input permission denied

Hello. I have a heavy forwarder (HF) and I want it to download a .csv file from another internal server. Right now, I can download it as the splunk user using wget on the CLI, so I know connectivity and permissions are not the issue. I looked at scripted inputs, but I'm not sure that's the right approach, as I can't get it to work. How should I go about this?

I just want to download a csv file and then send it to my indexer tier.

/opt/splunk/etc/apps/my_app/bin/script.sh

/usr/bin/wget -O file.csv 'https://myserver.com/feeds/list?v=csv&f=indicator&tr=1'

exit 0

/opt/splunk/etc/apps/my_app/local/inputs.conf

[script://./bin/script.sh]

index = main

sourcetype = test

interval = 600.0

disabled = 0

splunkd.log

07-10-2019 00:08:18.082 +0000 ERROR ExecProcessor - message from "/opt/splunk/etc/apps/my_app/bin/script.sh" file.csv: Permission denied

I checked and the app is owned by splunk:splunk. The script is 755. I ran the ad-hoc command below as the splunk user and it downloaded the file just fine:

/opt/splunk/bin/splunk cmd ../etc/apps/my_app/bin/script.sh

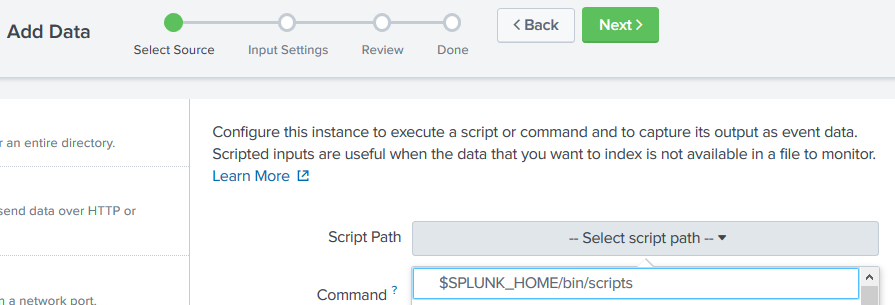

I tried adding the input through the HF's GUI (Settings > Data Inputs > Scripts > Add new), but my app and script are not showing up in the dropdown...

Hi DEAD_BEEF,

It's not the script that is the issue here; it's the output file file.csv and the location where the script is trying to create it. file.csv is a relative path, so it is created in whatever working directory the script happens to run from. Set the output file to a full path that you are sure the splunk user can write to.

Hope this helps ...

cheers, MuS
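To see which directory a relative path like file.csv would land in, a small diagnostic can be dropped into the script temporarily. This is only a sketch: the function name describe_target is made up for illustration, and it writes to stderr on the assumption that, as with the wget message in splunkd.log above, stderr from the script ends up in the ExecProcessor log rather than in the index.

```shell
#!/bin/sh
# Hypothetical helper: report the current user, the working directory that a
# relative path such as file.csv would resolve against, and whether that
# directory is writable by the current user.
describe_target() {
    target_dir=$(pwd)
    if [ -w "$target_dir" ]; then
        echo "user=$(id -un) cwd=$target_dir writable=yes"
    else
        echo "user=$(id -un) cwd=$target_dir writable=no"
    fi
}

# Send the report to stderr so it stays out of the script's stdout.
describe_target >&2
```

If the report shows writable=no, that matches the "Permission denied" error, and switching to a full path the splunk user owns is the fix.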

Also note that if you are going to call the script from the UI (e.g., as a scripted alert action), it will only be accessible from its own app space (my_app here). If you want it to be accessible to all app spaces from the UI, place the script in $SPLUNK_HOME/bin/scripts and restart the instance.

Some things to try:

Try using $SPLUNK_HOME to set the path to your script in inputs.conf:

[script://$SPLUNK_HOME/etc/apps/my_app/bin/script.sh]

If still failing, try adding a wrapper script to call the other script to help with logging/debugging.

#wrapper-script.sh

SCRIPT_DIR="$SPLUNK_HOME/etc/apps/my_app/bin"

LOG_FILE="$SPLUNK_HOME/var/log/splunk/wrapper-script.log"

# execute script and log standard out and standard error
# (the file redirect must come before 2>&1 so that both streams reach the log)

"$SCRIPT_DIR/script.sh" > "$LOG_FILE" 2>&1
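When a wrapper is meant to capture both streams in one log file, the order of the redirections matters. The sketch below uses a stand-in function (emit, invented for this example) instead of script.sh, and temporary files instead of the real log path, to show the difference.

```shell
#!/bin/sh
# Stand-in for script.sh: writes one line to each stream.
emit() {
    echo "line on stdout"
    echo "line on stderr" >&2
}

wrong_log=$(mktemp)
right_log=$(mktemp)

# 2>&1 first: stderr is pointed at the current stdout (terminal or splunkd),
# and only afterwards is stdout moved to the file. The file gets stdout only.
emit 2>&1 > "$wrong_log"

# File first, then 2>&1: stderr follows stdout into the file.
emit > "$right_log" 2>&1

echo "2>&1 before the file captured $(wc -l < "$wrong_log" | tr -d ' ') line(s)"
echo "2>&1 after the file captured $(wc -l < "$right_log" | tr -d ' ') line(s)"
```

The first form logs one line and lets the stderr line escape; the second logs both.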

Good point. -O file.csv should probably have a specific path before the file name (where the file should be written), unless it is preceded by a cd into some directory.

Is there a way I can adjust the script to have splunk read the stream and index it, rather than save the file locally then index it?

Everything that a script run by Splunk writes to stdout will be indexed. Simply make your script write to stdout and you will have the output indexed.

cheers, MuS

Hi, I am sending to stdout now using what @rob_jordan said (as I didn't know how). I don't see data in the main index, but I am seeing this log entry:

07-10-2019 02:07:56.729 +0000 ERROR ExecProcessor - message from "/opt/splunk/etc/apps/my_app/bin/script.sh" 2019-07-10 02:07:56 (8.61 MB/s) - written to stdout [4011976]

It might also be worth reading this: https://docs.splunk.com/Documentation/Splunk/latest/AdvancedDev/ScriptWriting

cheers, MuS

Okay, I got it. Outputting it to /dev/null caused it to send stdout to the trash. I removed that, but left the wget -O- ... and it started indexing immediately.
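For anyone hitting the same thing, here is a minimal sketch of why the redirect target matters. The emit function is a made-up stand-in for the wget call (payload on stdout, status chatter on stderr); the captured variables show what a reader of stdout would receive in each case.

```shell
#!/bin/sh
# Stand-in for the wget call: payload on stdout, status message on stderr.
emit() {
    echo "indicator,value"
    echo "status message" >&2
}

# Discarding stdout throws away the payload itself.
stdout_discarded=$(emit > /dev/null)

# Discarding stderr keeps the payload and drops only the status message.
stderr_discarded=$(emit 2> /dev/null)

echo "with '> /dev/null':  [$stdout_discarded]"
echo "with '2> /dev/null': [$stderr_discarded]"
```

The first capture is empty, so there would be nothing to index; the second still contains the CSV line.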

Do you pass any timestamp with the output? Try searching All time in main.

I checked All time with index=main sourcetype=test and found nothing... Also, there are no timestamps in this data. I assumed Splunk would use the index time for all the events in the file.
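If the feed itself carries no timestamps, one option is to have the script add the fetch time to each line before it reaches stdout, so every event carries an explicit time. This is a sketch only: stamp_lines is a hypothetical helper, the sample rows are invented, and it assumes the sourcetype is set up to read a leading ISO 8601 UTC timestamp. Note that the prefix also changes the CSV layout, header line included.

```shell
#!/bin/sh
# Hypothetical helper: prefix every line read from stdin with the current
# UTC time in ISO 8601 form and write the result to stdout.
stamp_lines() {
    now=$(date -u +%Y-%m-%dT%H:%M:%SZ)
    # The "|| [ -n "$line" ]" part keeps a final line that lacks a newline.
    while IFS= read -r line || [ -n "$line" ]; do
        printf '%s %s\n' "$now" "$line"
    done
}

# Demo with two made-up rows; in the real script the input would come from
# the wget pipeline instead of printf.
printf 'a,1\nb,2\n' | stamp_lines
```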

If I just want splunk to read the stream and then send it to indexers rather than download a log file and then send it, how would I adjust the script?

Splunk can index whatever is returned to standard out as a scripted input.

wget -O - 'https://myserver.com/feeds/list?v=csv&f=indicator&tr=1' 2> /dev/null

or, if you want standard error merged into standard out (so that it is indexed as well):

wget -O - 'https://myserver.com/feeds/list?v=csv&f=indicator&tr=1' 2>&1
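A slightly more defensive version of the same idea might look like the sketch below. The function name fetch_feed is invented for illustration; -q and -O - are standard wget options (quiet mode, and write the document to stdout). With -q, wget's own status lines should no longer show up on stderr, and a failed download leaves a single message on stderr instead of sending partial noise to the index.

```shell
#!/bin/sh
# Hypothetical helper: stream a URL to stdout so the scripted input can
# index it, and report failures on stderr with a non-zero exit status.
fetch_feed() {
    url=$1
    # -q silences wget's status output; -O - writes the body to stdout.
    if ! wget -q -O - "$url"; then
        echo "fetch_feed: download failed for $url" >&2
        return 1
    fi
}

# Usage inside script.sh (left commented out so the sketch has no side effects):
# fetch_feed 'https://myserver.com/feeds/list?v=csv&f=indicator&tr=1'
```

Keeping stderr separate from stdout here means only the CSV body is a candidate for indexing, while errors stay visible to whoever reads the script's stderr.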

@rob_jordan I changed the script to just be the one-liner that you posted (as I didn't know how to send data to stdout). Now it shows this, and there is no data in index=main:

07-10-2019 02:07:56.729 +0000 ERROR ExecProcessor - message from "/opt/splunk/etc/apps/my_app/bin/script.sh" 2019-07-10 02:07:56 (8.61 MB/s) - written to stdout [4011976]

Don't write to a file with -O; everything that goes to stdout will be indexed by Splunk when running scripted/modular inputs.

cheers, MuS

So I put a full path /var/log/file.txt and now the file is saved on the HF, but nothing was indexed...

Can you check the permissions on script.sh? Does it have execute permissions?

-rwxr-xr-x. 1 splunk splunk 217 Jul 9 23:35 script.sh