Re: Logs from rsyslog server stopped indexing

My setup: firewall (FW), WAF, and web-proxy logs are pushed to my rsyslog forwarder, which has a Universal Forwarder (UF) installed to send them on to my indexers.

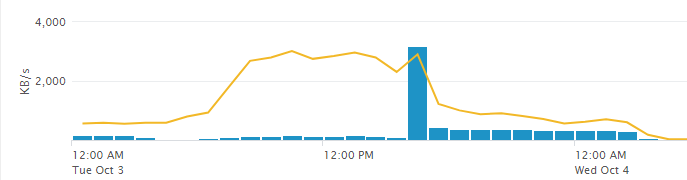

The logs coming from the rsyslog server stopped mysteriously around 3 am a few nights ago. The UF installed on that server is still sending its metrics logs, but no firewall logs. I can't figure out what the issue is. What's even weirder is that the logs didn't all stop at once but tapered off over the course of a few hours. This new deployment had been running for about 4-5 weeks, and the logs had been coming in consistently for the last few weeks.

There was a sharp increase in log volume on the day it happened. After that, volume dropped to almost nothing, with only the UF metrics being indexed and no other logs.

• Host OS: Red Hat Linux 7.3

• Syslog software used: rsyslogd 7.4.7

• Splunk Software used: Splunk Universal Forwarder 6.6.3 for Linux

• Configuration changes to receive syslog data from the sources were made in /etc/rsyslog.d/rsyslog-splunk.conf.

• Log rotation for the syslog data was configured in /etc/logrotate.d/rsyslog-splunk.

Any ideas?

The issue was related to logrotate not being configured properly, so there was no space left on the syslog server.
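A full disk like this can be spotted quickly from the shell. The following is a minimal sketch, not the poster's actual procedure: the function names `fs_usage_pct` and `check_fs` and the 90% default threshold are assumptions made for illustration.

```shell
# Print the usage percentage (digits only) of the filesystem holding the given path.
# `df -P` forces the POSIX one-line-per-filesystem layout, where column 5 is "Capacity".
fs_usage_pct() {
  df -P "$1" | awk 'NR == 2 { gsub(/%/, "", $5); print $5 }'
}

# Report whether the filesystem holding a path has reached a usage threshold.
# The 90% default is an arbitrary example value, not a recommendation from this thread.
check_fs() {
  path=$1
  limit=${2:-90}
  pct=$(fs_usage_pct "$path")
  if [ "$pct" -ge "$limit" ]; then
    echo "WARN: $path is ${pct}% full"
  else
    echo "OK: $path is ${pct}% full"
  fi
}
```

Run it against whichever directory rsyslog writes to, for example `check_fs /var/log` if that is where the syslog files live. If the check warns, `logrotate -d /etc/logrotate.d/rsyslog-splunk` does a dry run of the rotation config mentioned in the question and prints what logrotate would do without changing any files.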

Hi shaktik,

A quick question: when you say the logs "stopped a few nights ago", do you mean that you have logs up to 30 September and nothing since 1 October?

If so, there is probably an error in date/time parsing: you are still receiving logs, but events from 1 October (01/10) are being indexed as 10 January.
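To make the ambiguity concrete, here is a small sketch that reads the same string both ways. The helper names `as_day_first` and `as_month_first` are made up for illustration, and the year is only an example.

```shell
# Interpret an A/B/YYYY date string as day/month/year and print it as YYYY-MM-DD.
as_day_first() {
  echo "$1" | awk -F/ '{ printf "%s-%s-%s\n", $3, $2, $1 }'
}

# Interpret the same string as month/day/year and print it as YYYY-MM-DD.
as_month_first() {
  echo "$1" | awk -F/ '{ printf "%s-%s-%s\n", $3, $1, $2 }'
}

as_day_first 01/10/2017     # prints 2017-10-01 (1 October)
as_month_first 01/10/2017   # prints 2017-01-10 (10 January)
```

In Splunk, the usual guard against this is an explicit TIME_FORMAT for the sourcetype in props.conf, so the timestamp is not guessed.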

Bye.

Giuseppe

Sorry for the confusion, but the logs stopped and have not started back up again. I have added a picture showing when the logs stopped coming in, for more clarity.

Hi Shaktik!

I would suggest the first thing to check is your UF configuration, specifically limits.conf.

By default, the universal forwarder is tuned to have low impact and low footprint on the host and network.

For a syslog server like this, those defaults will actually get in your way, as this is a high-volume server.

So first, you will want to check:

./splunk btool limits list thruput --debug

Specifically, you will want to ensure your maxKBps is not the UF default of 256, as you will need much more thruput to move this data.

Here is a sample from a full Splunk instance (i.e. a heavy forwarder, HF), where you can see there is no limit by default:

[splunker@n00bserver bin]$ ./splunk btool limits list thruput --debug

/home/splunker/splunk/etc/system/default/limits.conf [thruput]

/home/splunker/splunk/etc/system/default/limits.conf maxKBps = 0

/home/splunker/splunk/etc/system/default/limits.conf max_mem_usage_mb = 200
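The same check can be scripted. This is a rough sketch that pulls the effective maxKBps out of btool output shaped like the sample above. The function names are hypothetical, and it assumes the file path in the first column contains no spaces.

```shell
# Read `btool limits list thruput --debug` output on stdin and print the
# effective maxKBps value. Expects lines of the form "<conf path> maxKBps = <n>".
effective_maxkbps() {
  awk '$2 == "maxKBps" && $3 == "=" { print $4; exit }'
}

# Describe what a given value means for the forwarder.
describe_maxkbps() {
  if [ "$1" = "0" ]; then
    echo "maxKBps=0: no thruput limit"
  else
    echo "maxKBps=$1: output capped at $1 KB/s"
  fi
}

# To change the limit on the UF, a [thruput] stanza goes in a local limits.conf,
# for example $SPLUNK_HOME/etc/system/local/limits.conf:
#   [thruput]
#   maxKBps = 0
# followed by a restart of the forwarder.
```

On the forwarder this would be used as `describe_maxkbps "$(./splunk btool limits list thruput --debug | effective_maxkbps)"`. Whether to remove the cap entirely (0) or just raise it depends on how much network and indexer load the site can tolerate.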

Might also be a good idea to check the internal logs for queue blocking:

index=_internal source=*metrics.log blocked=true

Alternatively, go to the UF and grep for "blocked=true" in the metrics.log file at $SPLUNK_HOME/var/log/splunk/metrics.log. This only really matters if the queues are blocking constantly.
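For the grep route, a sketch along these lines tallies blocked events per queue, which makes it easier to tell constant blocking from an occasional blip. The function name is hypothetical, and it assumes metrics.log queue lines carry a comma-terminated `name=<queue>` field alongside `blocked=true`.

```shell
# Count "blocked=true" events per queue name in a metrics.log file.
# Assumes each blocked line contains a field like "name=parsingqueue," (comma-terminated).
count_blocked_queues() {
  grep 'blocked=true' "$1" | grep -o 'name=[^,]*' | sort | uniq -c | sort -rn
}
```

Typical use would be `count_blocked_queues "$SPLUNK_HOME/var/log/splunk/metrics.log"`. Empty output means no blocked events were logged in that file.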

Another check just to be sure your inputs are ok is to check the status of the files you are monitoring:

./splunk list inputstatus

This will let you confirm the status of the files you are monitoring, in case rolling or truncation caused any issues.

If you find that it is indeed thruput/blocking, then you may also want to consider adding more pipelines to this forwarder (the parallelIngestionPipelines setting in server.conf) to help it move through the files on your syslog server.

These are just general checks to start with. Let me know if any of them turn up anything useful.