Issue Parsing Windows DNS Logs

Hoping someone can help here....

We are currently running DNS services on our Windows Active Directory servers (we do not currently have tools/tech in place to stream or otherwise capture this data on the wire --- roadmap item).

We are also running on Splunk Cloud with a Splunk HF (installed on a dedicated stand-alone system) & Splunk UF (installed on the Active Directory server(s) with DNS services running). So the data flows as follows:

Splunk UF (AD Server) -> Splunk HF (dedicated box) -> Splunk Cloud

Using this approach, I am able to successfully get the data into Splunk Cloud. My issue revolves around parsing the necessary fields. I am most concerned about getting the DNS entry itself (as part of the initial query) as well as the IP address returned in the DNS response. Below I have included the raw data, the inputs.conf, props.conf, and transforms.conf. Please let me know what I am missing, as I am at a loss at this point.

========

=======

======DNS Query Raw Data======

8/9/2021 7:19:32 AM 1750 PACKET 00000200616CA100 UDP Rcv ::1 1bf5 Q [0001 D NOERROR] A (27)vm3-proxy-pta-NCUS-CHI01P-2(9)connector(3)his(10)msappproxy(3)net(0)

UDP question info at 00000200616CA100

Socket = 828

Remote addr ::1, port 62839

Time Query=229843, Queued=0, Expire=0

Buf length = 0x0fa0 (4000)

Msg length = 0x004a (74)

Message:

XID 0x1bf5

Flags 0x0100

QR 0 (QUESTION)

OPCODE 0 (QUERY)

AA 0

TC 0

RD 1

RA 0

Z 0

CD 0

AD 0

RCODE 0 (NOERROR)

QCOUNT 1

ACOUNT 0

NSCOUNT 0

ARCOUNT 0

QUESTION SECTION:

Offset = 0x000c, RR count = 0

QTYPE A (1)

QCLASS 1

ANSWER SECTION:

empty

AUTHORITY SECTION:

empty

ADDITIONAL SECTION:

empty

======DNS Response Raw Data======

8/9/2021 7:19:10 AM 1750 PACKET 000002006188FCC0 UDP Snd ::1 196c R Q [8081 DR NOERROR] A (27)vm3-proxy-pta-NCUS-CHI01P-2(9)connector(3)his(10)msappproxy(3)net(0)

UDP response info at 000002006188FCC0

Socket = 828

Remote addr ::1, port 58618

Time Query=229821, Queued=229822, Expire=229825

Buf length = 0x0200 (512)

Msg length = 0x00bb (187)

Message:

XID 0x196c

Flags 0x8180

QR 1 (RESPONSE)

OPCODE 0 (QUERY)

AA 0

TC 0

RD 1

RA 1

Z 0

CD 0

AD 0

RCODE 0 (NOERROR)

QCOUNT 1

ACOUNT 2

NSCOUNT 0

ARCOUNT 0

QUESTION SECTION:

Offset = 0x000c, RR count = 0

QTYPE A (1)

QCLASS 1

ANSWER SECTION:

Offset = 0x004a, RR count = 0

TYPE CNAME (5)

CLASS 1

TTL 241

DLEN 85

DATA Offset = 0x00ab, RR count = 1

TYPE A (1)

CLASS 1

TTL 7

DLEN 4

DATA 20.80.38.248

AUTHORITY SECTION:

empty

ADDITIONAL SECTION:

Empty

======UF inputs.conf======

[monitor://c:\windows\system32\dns\dns.log]

disabled = 0

index = dns

sourcetype = windows:dns

======UF props.conf======

[windows:dns]

SHOULD_LINEMERGE = True

BREAK_ONLY_BEFORE_DATE = True

EXTRACT-Domain = (?i) .*? \.(?P<Domain>[-a-zA-Z0-9@:%_\+.~#?;//=]{2,256}\.[a-z]{2,6})

EXTRACT-src=(?i) [Rcv|Snd] (?P<source_address>\d+\.\d+\.\d+\.\d+)

EXTRACT-Threat_ID,Context,Int_packet_ID,proto,mode,Xid,type,Opcode,Flags_Hex,char_code,ResponseCode,question_type = .+?[AM|PM]\s+(?<Threat_ID>\w+)\s+(?<Context>\w+)\s+(?<Int_packet_ID>\w+)\s+(?<proto>\w+)\s+(?<mode>\w+)\s+\d+\.\d+\.\d+\.\d+\s+(?<Xid>\w+)\s(?<type>(?:R)?)\s+(?<Opcode>\w+)\s+\[(?<Flags_Hex>\w+)\s(?<char_codes>.+?)(?<ResponseCode>[A-Z]+)\]\s+(?<question_type>\w+)\s

EXTRACT-Authoritative_Answer,TrunCation,Recursion_Desired,Recursion_Available = (?m) .+?Message:\W.+\W.+\W.+\W.+\W.+AA\s+(?<Authoritative_Answer>\d)\W.+TC\s+(?<TrunCation>\d)\W.+RD\s+(?<Recursion_Desired>\d)\W.+RA\s+(?<Recursion_Available>\d)

SEDCMD-win_dns = s/\(\d+\)/./g

======HF inputs.conf======

[splunktcp://:5143]

connection_host = x.x.x.x (masking IP)

index = dns

disabled = 0

======HF props.conf======

[windows:dns]

EXTRACT-Domain = (?i) .*? \.(?<Domain>[-a-zA-Z0-9@:%_\+.~#?;//=]{2,256}\.[a-z]{2,6})

EXTRACT-windows_dns_000001 = (?<thread_id>[0-9A-Fa-f]{4}) (?<Context>[^\s]+)\s+(?<internal_packet_id>[0-9A-Fa-f]+) (?<protocol>UDP|TCP) (?<direction_flag>Snd|Rcv) (?<client_ip>[0-9\.]+)\s+(?<xid>[0-9A-Fa-f]+) (?<type>[R\s]{1}) (?<opcode>[A-Z\?]{1}) \[(?<flags>[0-9A-Fa-f]+) (?<flagAuthoritativeAnswer>[A\s]{1})(?<flagTrucatedResponse>[T\s]{1})(?<flagRecursionDesire>[D\s]{1})(?<flagRecursionAvailable>[R\s]{1})\s+(?<response_code>[^\]]+)\]\s+(?<query_type>[^\s]+)\s+(?<query_name>[^/]+)

EXTRACT-windows_dns_000010 = ([a-zA-Z0-9\-\_]+)\([0-9]+\)(?<tld>[a-zA-Z0-9\-\_]+)\(0\)$

EXTRACT-windows_dns_000020 = \([0-9]+\)(?<domain>[a-zA-Z0-9\-\_]+\([0-9]+\)[a-zA-Z0-9\-\_]+)\(0\)$

EXTRACT-windows_dns_000030 = \s\([0-9]+\)(?<hostname>[a-zA-Z0-9\-\_]+)\(0\)$

EVAL-domain = replace(domain, "([\(0-9\)]+)", ".")

EVAL-query_domain = ltrim(replace(query_name, "(\([\d]+\))", "."),".")

EVAL-type_msg = case(type="R", "Response", isnull(type), "Query")

EVAL-opcode_msg = case(opcode="Q", "Standard Query", opcode="N", "Notify", opcode="U", "Update", opcode="?", "Unknown")

EVAL-direction = case(direction_flag="Snd", "Send", direction_flag="Rcv", "Received")

EVAL-decID = tonumber(xid, 16)

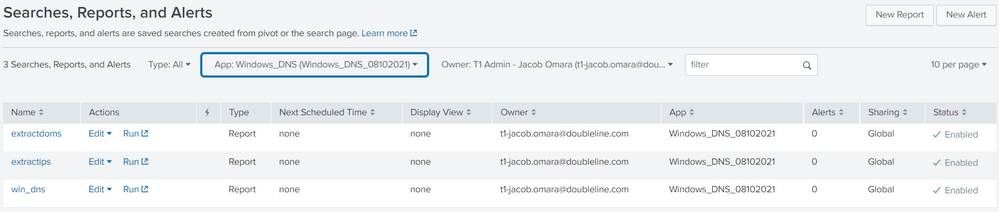

REPORT-win_dns = dns_string_lengths, dns_strings

REPORT-extractdoms = extractdoms

REPORT-extractips = extractips

======HF transforms.conf======

[dns_string_lengths]

REGEX = \((\d+)\)

FORMAT = strings_len::$1

MV_ADD = true

REPEAT_MATCH = true

[dns_strings]

REGEX = \([0-9]+\)([a-zA-Z0-9\-\_]+)\([0-9]+\)

FORMAT = strings::$1

MV_ADD = true

REPEAT_MATCH = true

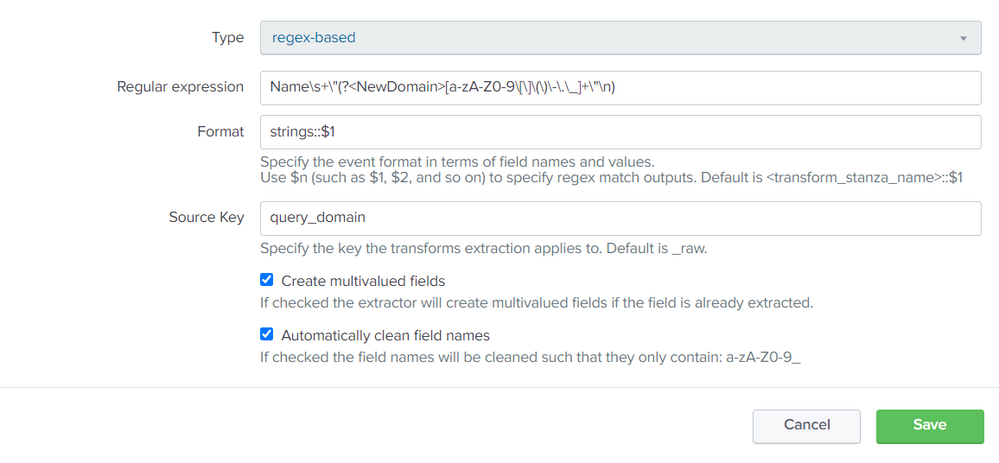

[extractdoms]

SOURCE_KEY = query_domain

REGEX = Name\s+\"(?<NewDomain>[a-zA-Z0-9\[\]\(\)\-\.\_]+\"\n)

FORMAT = strings::$1

MV_ADD = true

REPEAT_MATCH = true

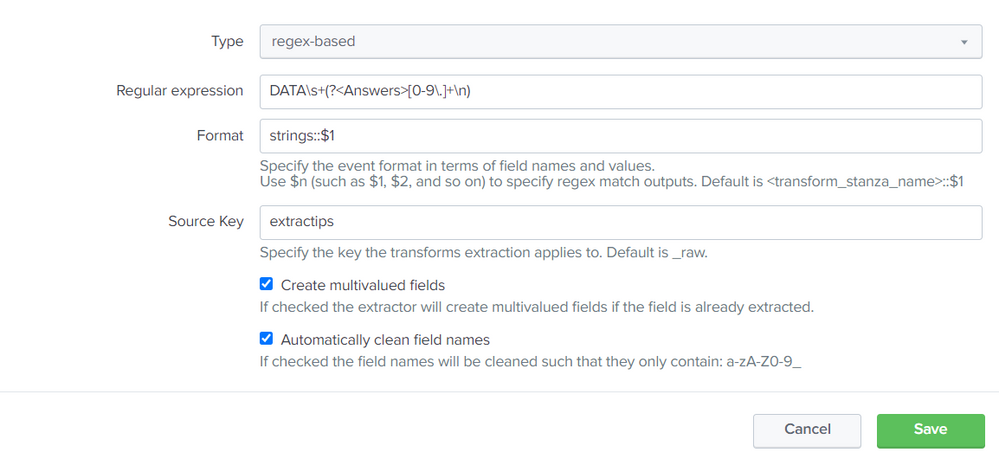

[extractips]

REGEX = DATA\s+(?<Answers>[0-9\.]+\n)

FORMAT = strings::$1

MV_ADD = true

REPEAT_MATCH = true
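
A note on the header extractions above, offered as a sketch rather than a verified fix: both sample events show `::1` after `Rcv`/`Snd`, while the UF pattern requires `\d+\.\d+\.\d+\.\d+` and the HF pattern uses `[0-9\.]+` for `client_ip`, so neither would be expected to match these particular lines. In addition, `[Rcv|Snd]` is a character class that matches one character, not the alternation `(?:Rcv|Snd)`. The Python below uses the `re` module (not Splunk's PCRE engine), and the `HEADER` pattern, its group names, and `decode_qname` are hypothetical simplifications, not the thread's configuration.

```python
import re

# Header lines copied from the two sample events in this post.
QUERY = ("8/9/2021 7:19:32 AM 1750 PACKET 00000200616CA100 UDP Rcv ::1 1bf5 Q "
         "[0001 D NOERROR] A (27)vm3-proxy-pta-NCUS-CHI01P-2(9)connector(3)his"
         "(10)msappproxy(3)net(0)")
RESPONSE = ("8/9/2021 7:19:10 AM 1750 PACKET 000002006188FCC0 UDP Snd ::1 196c R Q "
            "[8081 DR NOERROR] A (27)vm3-proxy-pta-NCUS-CHI01P-2(9)connector(3)his"
            "(10)msappproxy(3)net(0)")

# IPv4-only address pattern, as in the UF extraction: cannot match "::1".
IPV4_ONLY = re.compile(r"(?:Rcv|Snd)\s+(?P<client_ip>\d+\.\d+\.\d+\.\d+)")

# Hypothetical header pattern that accepts IPv4 or IPv6 remote addresses.
HEADER = re.compile(
    r"(?P<direction>Rcv|Snd)\s+(?P<client_ip>[0-9A-Fa-f:.]+)\s+"
    r"(?P<xid>[0-9A-Fa-f]+)\s+(?P<type>R?)\s*(?P<opcode>[A-Z?])\s+"
    r"\[(?P<flags>[0-9A-Fa-f]+)\s+(?P<flag_chars>[ATDR ]*?)\s*(?P<rcode>[A-Z]+)\]\s+"
    r"(?P<qtype>\S+)\s+(?P<qname>\S+)"
)


def decode_qname(qname: str) -> str:
    """Turn '(3)his(3)net(0)' style labels into 'his.net'."""
    return re.sub(r"\(\d+\)", ".", qname).strip(".")


for line in (QUERY, RESPONSE):
    m = HEADER.search(line)
    print(IPV4_ONLY.search(line) is None,      # IPv4-only pattern misses the line
          m["direction"], m["client_ip"], m["xid"],
          repr(m["type"]), m["rcode"], decode_qname(m["qname"]))
```

If the pattern behaves the same way under Splunk's regex engine, the `type` group is empty for queries and `R` for responses, which is what the `EVAL-type_msg` line is trying to distinguish.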

No, we ended up going with Splunk Stream. I cannot advocate for that approach enough as parsing the native logs is a mess. Even if I could make it work, it is all happening at search time and does not account for the fact that the log formatting could change over time. Happy to share what we did if you are interested.

Hi, I would be very interested to see how you ended up onboarding and your sourcetype for these logs. Have you mapped to the Network_Resolution datamodel?

We are also looking at onboarding windows DNS logs but the information out there is lacking.

The short of it is that we gave up on the parsing and decided to leverage "Splunk Stream" for DNS. We have had zero issues with the approach to date and have gotten the data we are looking for in terms of the query itself as well as the resulting response. The fields you want to collect are configurable which means less data to ingest into Splunk (vs the parsing/filtering that had to be done at search time).

Happy to discuss further, so let me know.

Why can't you install the UF on the servers and use the Splunk_TA_windows to send the data straight to the Splunk Cloud indexers?

Example inputs.conf configuration:

[WinEventLog://DNS Server]

disabled = false

You'll need the TA on the indexer cluster and search head/search head cluster too of course.

First of all, thank you.

Couple of things:

1. I am trying to limit the number of internet-facing firewall rules I need to create to send data externally (in part this is why we chose to use the HF --- and why I am forwarding logs from the UF ---> HF ----> Splunk Cloud).

2. We have an inputs.conf already on the UF which is pointing to the DNS logs on the local DNS server --- as well as an outputs.conf pointing to the on-premise HF (which has no indexing enabled but is a Splunk enterprise install).

3. I have the Windows TA installed in Splunk Cloud already, so I believe we are in alignment there already.

Question

If I understand you correctly, you are saying that I should:

1. Install the Splunk Windows TA onto the same server where my existing UF resides, correct?

2. Place my existing inputs.conf (one monitoring my local DNS logs) into the Splunk TA application directory, correct?

3. Given what I initially mentioned as to my reason for an HF, how might I still accomplish this with an HF in play? Can I still install the Splunk Windows TA on the UF and get the same result? Do I need to install the TA onto both the UF and HF?

@qcjacobo2577 wrote:

Question

If I understand you correctly, you are saying that I should:

1. Install the Splunk Windows TA onto the same server where my existing UF resides, correct?

2. Place my existing inputs.conf (one monitoring my local DNS logs) into the Splunk TA application directory, correct?

3. Given what I initially mentioned as to my reason for an HF, how might I still accomplish this with an HF in play? Can I still install the Splunk Windows TA on the UF and get the same result? Do I need to install the TA onto both the UF and HF?

For #1: Yes, you'd need to install the Splunk_TA_windows on the UF of every DNS server whose DNS logs you want to collect and send to the HF that forwards to Splunk Cloud. Since this will be considered a non-default Windows event log, you'd need to import the DNS logs to the Windows Event Viewer - see documentation here. Technically you don't even need an HF for this anymore if you don't plan on having any parsing configs - look at this for that: https://docs.splunk.com/Documentation/Splunk/8.2.1/Forwarding/Configureanintermediateforwarder

For #2: You can send your local DNS logs by importing them to the Windows Event Viewer and have the Splunk_TA_windows inputs.conf ingest that - again, documentation here.

For #3: Like I said, you don't need the HF to parse the logs if you are using the Splunk_TA_windows - you just need an intermediate forwarder to send it to Splunk Cloud. Splunk Cloud indexers would need the Splunk_TA_windows to parse the data. Your search head(s) would need the TA too for field extractions.

In my opinion, it's much simpler to use the Splunk Base TA for this as you don't need to create custom field extractions like you did.

Totally understand where you are going with this...however...

When you say "Since this will be considered a non-default Windows event log, you'd need to import the DNS logs to the Windows Event Viewer", in my case we are talking about DNS debug logging (https://nxlog.co/documentation/nxlog-user-guide/windows-dns-server.html#dns_windows_filebased_loggin...) and not DNS logs exposed in the Windows Event logs. DNS debug logs are a flat file stored in the location you specify during the initial configuration (there is no corresponding .evtx file to extract from or import into). DNS auditing (what I believe you are referring to) only captures audit events --- https://docs.microsoft.com/en-us/previous-versions/windows/it-pro/windows-server-2012-r2-and-2012/dn... While these are useful, they do not provide the DNS query/response data I am seeking in this case. We also looked at DNS Analytical logs --- also stored in the Windows Event Viewer --- but these are really purpose-built for debugging specific scenarios --- not meant to be always on --- and do not actually present messages in the event log until debugging is disabled.

So, unless you are aware of something I am not, we are still talking about a flat file. Can the Windows TA parse this? I know there used to be a Windows DNS specific TA but that was deprecated for Splunk Cloud.

I am thinking of using Splunk Stream but am not sure as to the performance impact on my DCs.

Thoughts?

Gotcha, in that case ignore my initial suggestion. I would suggest taking some sample data to a standalone instance and configuring the field extraction in the GUI to see what it comes up with in the back end - you have quite a few extractions to begin with, and a mix of props and transforms.

Otherwise, I would have to let someone with more knowledge of field extraction try to help you on this one.

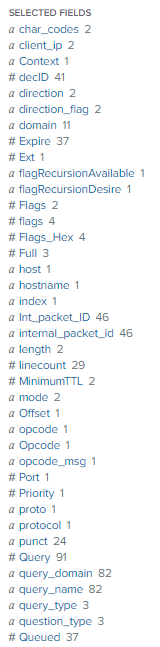

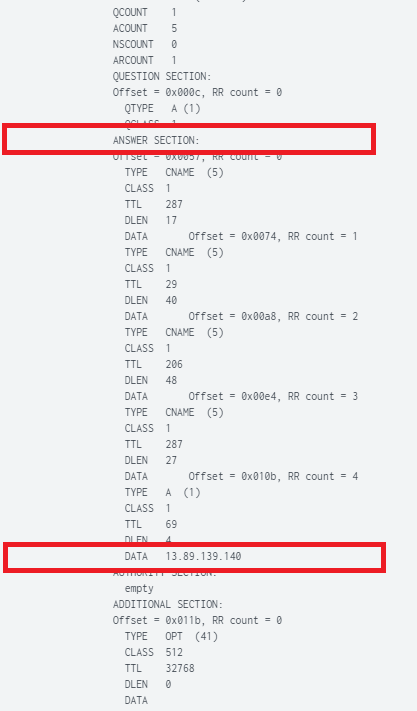

I was able to get most of the fields parsed properly without issue. As someone stated previously, most of these had to be performed at search time --- which is not a problem per se. The only 2 issues I seem to be having are:

1. The size of the data itself is quite large. If I am only able to perform field extraction at search time --- and we are talking about Splunk Cloud --- is there a way I can reduce my log ingestion so as to lessen the impact on my ingest licensing? Can I perform some line suppression within my UF or HF?

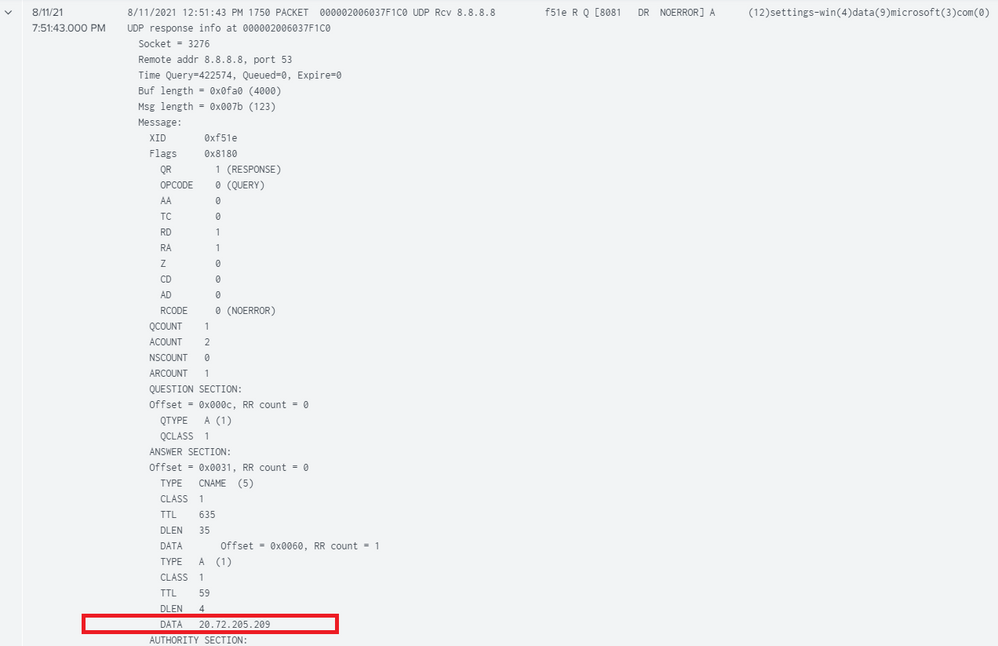

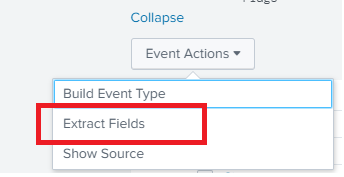

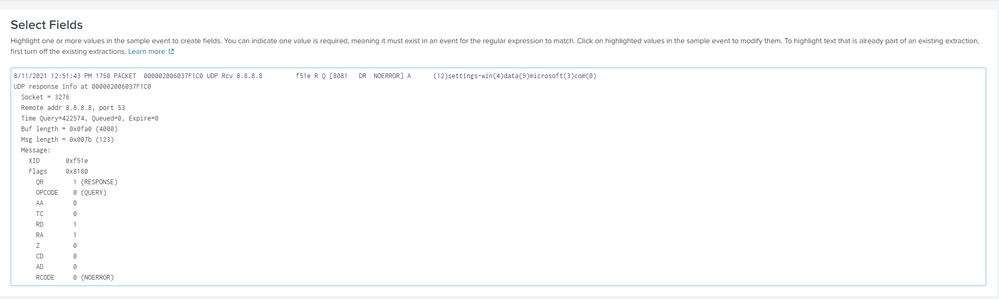

2. I am trying to extract the DATA field in the DNS response without much luck. Doing that through the UI presents an issue (I will show what I mean in the embedded images below):

===

Here is my search:

Here is the result I am targeting for parsing (homing in on the "DATA" field boxed in red):

I am not seeing that field when I select "Extract Fields".

How do I expose the data field in the UI such that I can even attempt to extract for parsing?
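
For what it is worth, the answer address sits on a `DATA` line inside the multi-line detail, so a line-anchored pattern can be tried outside the UI first. The sketch below is Python `re`, not Splunk configuration; `ANSWER_IP` and `answer_ips` are hypothetical names, the sample lines are copied from the response event earlier in the thread, and their indentation is assumed. Note that the thread's `extractips` stanza keeps the trailing `\n` inside its capture group and its `FORMAT` line names the field `strings`, so testing the pattern in isolation may help narrow things down.

```python
import re

# Answer section of the sample response; indentation is an assumption.
RESPONSE_DETAIL = """\
    ANSWER SECTION:
    Offset = 0x004a, RR count = 0
    TYPE   CNAME  (5)
    CLASS  1
    TTL    241
    DLEN   85
    DATA   Offset = 0x00ab, RR count = 1
    TYPE   A  (1)
    CLASS  1
    TTL    7
    DLEN   4
    DATA   20.80.38.248
    AUTHORITY SECTION:
      empty
"""

# Match only DATA lines whose value looks like a dotted IPv4 address.
# The newline stays outside the capture group.
ANSWER_IP = re.compile(r"^\s*DATA\s+(\d{1,3}(?:\.\d{1,3}){3})\s*$", re.MULTILINE)


def answer_ips(event: str) -> list[str]:
    """Return every IPv4 answer found on a DATA line of the event."""
    return ANSWER_IP.findall(event)


print(answer_ips(RESPONSE_DETAIL))
```

The `DATA   Offset = ...` line belonging to the CNAME record is skipped because its value is not an address; IPv6 (AAAA) answers would need a wider pattern.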

If it's not working in the GUI - try modifying the configurations in the back end (props.conf/transforms.conf). Since you are using Splunk Cloud, I assume you are uploading the app via self service app install - this may trigger a search head restart.

https://docs.splunk.com/Documentation/Splunk/latest/Admin/propsconf

https://docs.splunk.com/Documentation/Splunk/latest/Admin/transformsconf

I don't have CLI access to Splunk Cloud to make any changes to the "back-end". My understanding via support is that this setup is pretty standard. How do I go about getting the access you suggested?

The other option I took was to try and create the props.conf and transforms.conf from the article mentioned here: https://community.splunk.com/t5/All-Apps-and-Add-ons/How-to-parse-the-full-Windows-DNS-Trace-logs/m-...

The difference is that I took all of the props.conf and transforms.conf entries and placed them in the "Fields" configuration within Splunk Cloud. I could not figure out where to put SEDCMD (not obvious in the UI). You can see all of this visually in one of my posts in this case.

I just need to be able to:

1. Parse the IP address field mentioned previously from the raw log. Do you know how to do that? Everything else is parsing.

2. Reduce the log volume before it is indexed. There are a large number of fields I simply do not need as they do not add any value from a security perspective.
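
To make the second point concrete: in Splunk, trimming of this kind would have to happen at index time on the HF (for example with a `SEDCMD-` setting, or a transform that routes unwanted events to `nullQueue`), not in the Cloud "Fields" UI. The Python below is not Splunk configuration; it only illustrates which parts of an event carry the query name and the answer, and roughly how much smaller an event becomes if the rest is dropped. The `trim_event` function, the `ANSWER_IP` pattern, and the `answers=` suffix are all made up for illustration, and the sample event is abbreviated.

```python
import re

# Hypothetical pattern for answer lines: DATA followed by a dotted IPv4 address.
ANSWER_IP = re.compile(r"^\s*DATA\s+(\d{1,3}(?:\.\d{1,3}){3})\s*$", re.MULTILINE)


def trim_event(event: str) -> str:
    """Keep the one-line packet summary plus any answer addresses."""
    lines = event.splitlines()
    header = lines[0].rstrip() if lines else ""
    answers = ANSWER_IP.findall(event)
    return f"{header} answers={','.join(answers)}" if answers else header


# Abbreviated response event; the query name is shortened for readability.
EVENT = "\n".join([
    "8/9/2021 7:19:10 AM 1750 PACKET 000002006188FCC0 UDP Snd ::1 196c R Q "
    "[8081 DR NOERROR] A (3)his(10)msappproxy(3)net(0)",
    "UDP response info at 000002006188FCC0",
    "  Socket = 828",
    "  Remote addr ::1, port 58618",
    "  Msg length = 0x00bb (187)",
    "    DLEN   4",
    "    DATA   20.80.38.248",
])

trimmed = trim_event(EVENT)
print(trimmed)
print(f"{len(EVENT)} -> {len(trimmed)} characters")
```

On real events the detail block is far longer than in this abbreviated sample, so the relative saving would likely be larger, but that depends on the actual log contents.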

@qcjacobo2577 If your original parsing issue at the HF level has been resolved, I would advise closing this thread (accept the solution if it is working) and opening a new one for the extraction issues.

Can you deploy a copy of your UF props.conf to the HF, restart it, and see how it goes? A UF cannot do parsing, so when you have an HF in line, the props settings for line breaking and timestamp extraction should be deployed to the HF. I haven't verified your UF props.conf; however, the following should work for parsing when deployed to the HF. You might need to set TZ (time zone) as well.

## props.conf to be deployed to the HF

[windows:dns]

SHOULD_LINEMERGE=false

LINE_BREAKER=([\r\n]+)\d+\/\d+\/\d+\s+\d+:\d+:\d+

NO_BINARY_CHECK=true

TIME_PREFIX=^

TIME_FORMAT=%m/%d/%Y %H:%M:%S %p
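
As a rough way to sanity-check the two regex-driven settings above outside Splunk, the Python sketch below emulates the event boundary with a lookahead on the timestamp and parses the leading timestamp with `strptime`. It is an approximation: Splunk's `LINE_BREAKER` semantics and timestamp parser are not identical to Python's, the sample text is abbreviated and partly made up (the second event is set to PM on purpose), and `split_events` and `event_time` are hypothetical helpers. One thing it does show is that in Python the AM/PM marker only affects the hour when it is paired with the 12-hour directive `%I`; whether Splunk treats `%H` together with `%p` the same way is not confirmed here, so it may be worth checking `_time` on afternoon events.

```python
import re
from datetime import datetime

# Abbreviated sample: two events, each a header line plus detail lines.
RAW = (
    "8/9/2021 7:19:10 AM 1750 PACKET 000002006188FCC0 UDP Snd ::1 196c R Q "
    "[8081 DR NOERROR] A (3)net(0)\r\n"
    "UDP response info at 000002006188FCC0\r\n"
    "  Socket = 828\r\n"
    "8/9/2021 7:19:32 PM 1750 PACKET 00000200616CA100 UDP Rcv ::1 1bf5 Q "
    "[0001 D NOERROR] A (3)net(0)\r\n"
    "UDP question info at 00000200616CA100\r\n"
)

# Break only on newlines that are followed by a date and time.
EVENT_BOUNDARY = re.compile(r"[\r\n]+(?=\d+/\d+/\d+\s+\d+:\d+:\d+)")


def split_events(raw: str) -> list[str]:
    """Split raw log text into multi-line events, one per timestamped header."""
    return [e for e in EVENT_BOUNDARY.split(raw.strip()) if e]


def event_time(event: str) -> datetime:
    """Parse the leading 'M/D/YYYY H:MM:SS AM|PM' stamp using a 12-hour clock."""
    stamp = " ".join(event.split()[:3])
    return datetime.strptime(stamp, "%m/%d/%Y %I:%M:%S %p")


events = split_events(RAW)
for e in events:
    print(event_time(e).isoformat(), "|", len(e.splitlines()), "lines")
```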

An upvote would be appreciated, and please accept the solution if this reply helps!

Just so I am clear, you are saying:

1. Take all of the content currently contained within my UF props.conf and move it to my HF props.conf (which means I will no longer have a props.conf file on my UF), correct?

2. If the answer to #1 is "Yes", do I merge the UF props.conf content with my existing HF props.conf content or replace it?

3. You are also referencing a new HF props.conf file as well. What am I doing with that content? Adding it to the above?

@qcjacobo2577 Technically, the UF props.conf has nothing in it that actually takes effect on a UF. It's all mixed up: EXTRACT-* are search-time settings and should go to the SH; SEDCMD is index-time and should go to the HF/indexers; SHOULD_LINEMERGE and BREAK_ONLY_* are parsing-related and should go to the HF. I highly doubt it works for your parsing requirement.

I would say try the props.conf that I provided on the HF. Yes, you can merge it with the existing HF props.conf you have.
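
The split described above can be written down as a small lookup, which may help when sorting a mixed props.conf into what goes to the HF and what goes to the search head. This is only an illustrative sketch: `phase_for` and the two tuples are hypothetical, the list is not exhaustive, and `EVAL-`, `TRANSFORMS-`, `LINE_BREAKER`, `TIME_PREFIX`, and `TIME_FORMAT` are added here on the assumption that they follow the same search-time versus parse-time division.

```python
# props.conf setting names (or prefixes) grouped by where they take effect.
SEARCH_TIME = ("EXTRACT-", "REPORT-", "EVAL-")
PARSE_TIME = (
    "LINE_BREAKER", "SHOULD_LINEMERGE", "BREAK_ONLY_BEFORE_DATE",
    "TIME_PREFIX", "TIME_FORMAT", "SEDCMD-", "TRANSFORMS-",
)


def phase_for(setting_line: str) -> str:
    """Classify one 'NAME = value' line from props.conf by processing phase."""
    name = setting_line.split("=", 1)[0].strip()
    if name.startswith(SEARCH_TIME):
        return "search time (search head)"
    if name.startswith(PARSE_TIME):
        return "parsing/index time (HF or indexers)"
    return "unclassified"


for line in (
    "SHOULD_LINEMERGE = True",
    "BREAK_ONLY_BEFORE_DATE = True",
    r"SEDCMD-win_dns = s/\(\d+\)/./g",
    "EXTRACT-Domain = ...",
    "REPORT-extractips = extractips",
):
    print(f"{line.split('=', 1)[0].strip():<24} {phase_for(line)}")
```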

@qcjacobo2577 I have just seen your HF props.conf and transforms.conf. They are not related to parsing and do not work on the HF. EXTRACT-* and REPORT-* are search-time settings and should be deployed to the search head.

I have completely removed the props.conf from UF.

Now I have the following:

- An inputs.conf file on the UF as shown below:

[monitor://c:\windows\system32\dns\dns.log]

disabled = 0

index = dns

sourcetype = windows:dns

- An outputs.conf file on the UF (not shown as it is not relevant to this discussion).

- A modified props.conf on the HF to reflect the following (as you recommended):

[windows:dns]

SHOULD_LINEMERGE=false

LINE_BREAKER=([\r\n]+)\d+\/\d+\/\d+\s+\d+:\d+:\d+

NO_BINARY_CHECK=true

TIME_PREFIX=^

TIME_FORMAT=%m/%d/%Y %H:%M:%S %p

- Within Splunk Cloud:

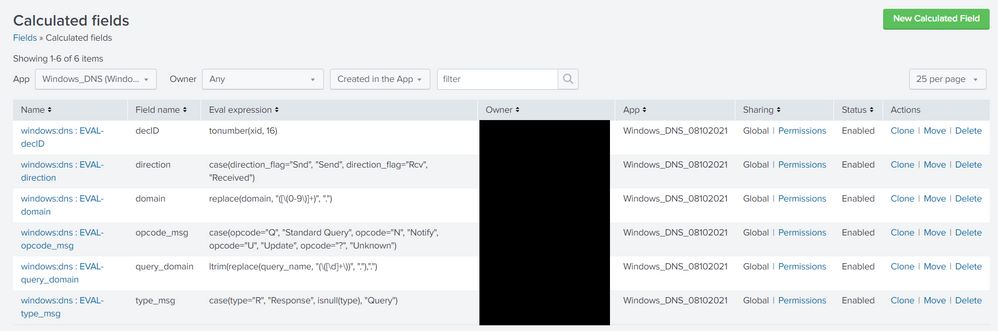

- I have the following configuration for "calculated fields".

- The following for "field extractions".

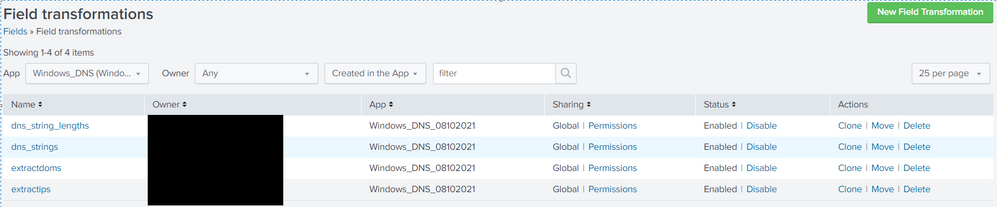

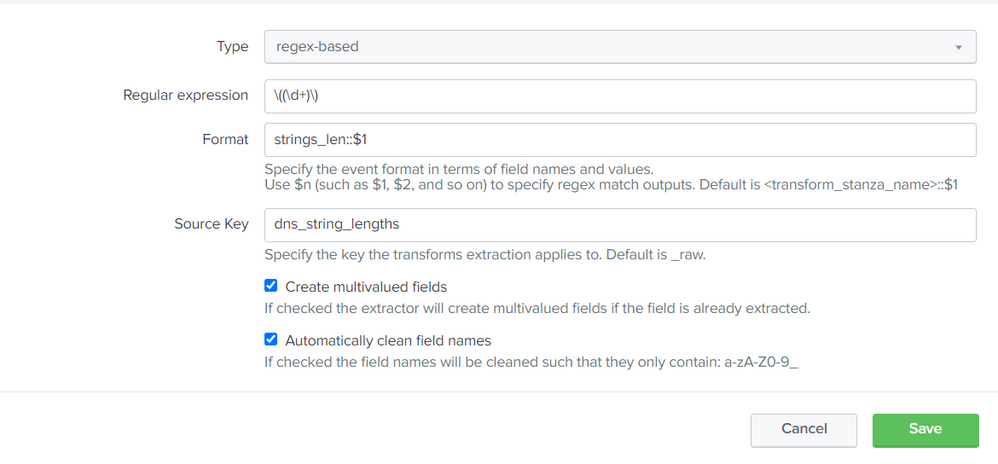

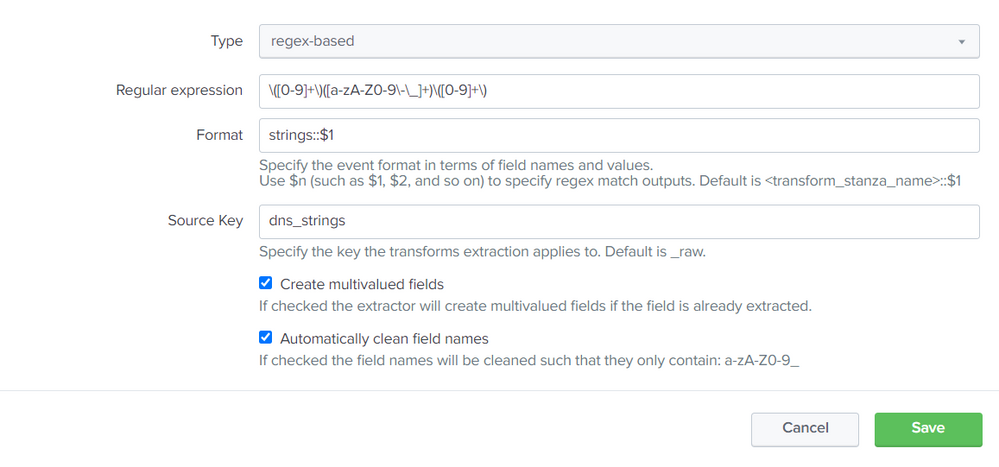

- The following for field transformations:

- The following reports:

This results in the following fields being parsed:

AND

I am, however, not able to parse the following:

Please advise.
