
Ingesting and 'Transforming' AWS SQS Messages

Hello,

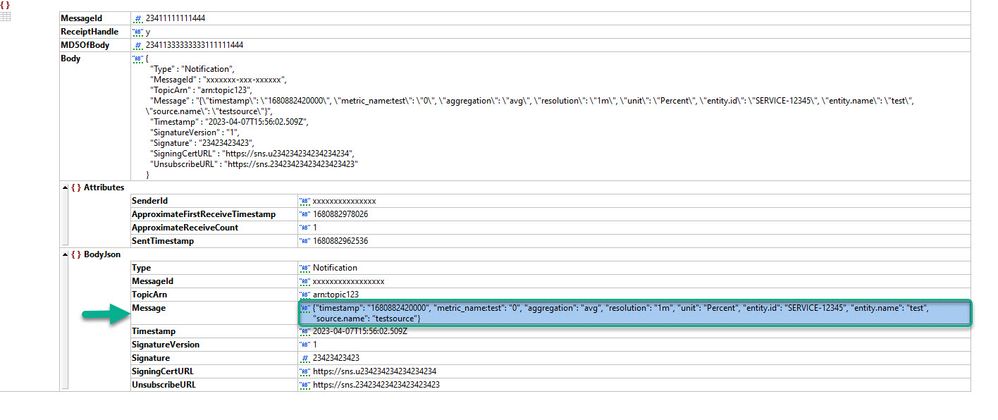

We are trying to ingest JSON-based messages from an AWS SQS queue that is subscribed to an SNS topic. When ingesting the messages, we find extra JSON wrapped around the actual message we want. Most of this wrapper comes from AWS: SNS puts the payload inside a notification envelope (Type, TopicArn, Signature, and so on), and SQS adds its own message metadata (MessageId, ReceiptHandle, Attributes). The message we actually want is at the JSON path BodyJson.Message. Can we configure the Splunk TA to pull the messages off the queue but apply some kind of path extraction or transform so that only BodyJson.Message is ingested? In the screenshot above, we only want the message in the green rectangle, but it is buried in all the other JSON.

Here is the full JSON for the message shown in the screenshot above:

```json
{
  "MessageId": 23411111111444,
  "ReceiptHandle": "y",
  "MD5OfBody": 23411333333333111111444,
  "Body": "{\n \"Type\" : \"Notification\",\n \"MessageId\" : \"xxxxxxx-xxx-xxxxxx\",\n \"TopicArn\" : \"arn:topic123\",\n \"Message\" : \"{\\\"timestamp\\\": \\\"1680882420000\\\", \\\"metric_name:test\\\": \\\"0\\\", \\\"aggregation\\\": \\\"avg\\\", \\\"resolution\\\": \\\"1m\\\", \\\"unit\\\": \\\"Percent\\\", \\\"entity.id\\\": \\\"SERVICE-12345\\\", \\\"entity.name\\\": \\\"test\\\", \\\"source.name\\\": \\\"testsource\\\"}\",\n \"Timestamp\" : \"2023-04-07T15:56:02.509Z\",\n \"SignatureVersion\" : \"1\",\n \"Signature\" : \"23423423423\",\n \"SigningCertURL\" : \"https://sns.u234234234234234234\",\n \"UnsubscribeURL\" : \"https://sns.23423423423423423423\"\n}",
  "Attributes": {
    "SenderId": "xxxxxxxxxxxxxxx",
    "ApproximateFirstReceiveTimestamp": "1680882978026",
    "ApproximateReceiveCount": "1",
    "SentTimestamp": "1680882962536"
  },
  "BodyJson": {
    "Type": "Notification",
    "MessageId": "xxxxxxxxxxxxxxxxx",
    "TopicArn": "arn:aws:sns:us-east-1:996142040734:APP-4498-dev-PerfEngDynatraceAPIClient-DynatraceMetricsSNSTopic-qFolXGcy2Ufh",
    "Message": "{\"timestamp\": \"1680882420000\", \"metric_name:test\": \"0\", \"aggregation\": \"avg\", \"resolution\": \"1m\", \"unit\": \"Percent\", \"entity.id\": \"SERVICE-12345\", \"entity.name\": \"test\", \"source.name\": \"testsource\"}",
    "Timestamp": "2023-04-07T15:56:02.509Z",
    "SignatureVersion": "1",
    "Signature": 23423423423,
    "SigningCertURL": "https://sns.u234234234234234234",
    "UnsubscribeURL": "https://sns.23423423423423423423"
  }
}
```
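Part of why the message looks "buried" is that the value at BodyJson.Message is not a nested object but a JSON-encoded string, so it has to be decoded a second time. A minimal Python sketch, run outside Splunk purely for illustration, using an abbreviated copy of the event above (only the fields on that path are kept):

```python
import json

# Abbreviated copy of the event above: only the fields on the BodyJson.Message path are kept.
raw_event = r'''
{
  "MessageId": 23411111111444,
  "BodyJson": {
    "Type": "Notification",
    "Message": "{\"timestamp\": \"1680882420000\", \"unit\": \"Percent\", \"entity.id\": \"SERVICE-12345\", \"source.name\": \"testsource\"}"
  }
}
'''


def extract_message(event_text: str) -> dict:
    """Follow BodyJson.Message and decode the JSON string stored there."""
    outer = json.loads(event_text)             # first decode: the whole SQS event
    inner_text = outer["BodyJson"]["Message"]  # still a JSON-encoded string, not an object
    return json.loads(inner_text)              # second decode: the metric record itself


metric = extract_message(raw_event)
print(metric["entity.id"], metric["timestamp"], metric["unit"])
```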


Thank you very much, tscroggins. We will try your suggestion. Hopefully being on Splunk SaaS won't prevent us from doing this.

Thank you


You'll likely need to contact Splunk Support to implement INGEST_EVAL. If the schema and field order of the outer and inner JSON never change, you can instead use a combination of SEDCMD and a regular (regex-based) transform:

```
# props.conf
[aws:sqs]
MAX_TIMESTAMP_LOOKAHEAD = 13
SEDCMD-unescape = s/\\//g
TIME_FORMAT = %s%3Q
TIME_PREFIX = "Message"\s*:\s*"\{\\"timestamp\\"\s*:\s*\\"
TRANSFORMS-copy_message_to_raw = copy_message_to_raw

# transforms.conf
[copy_message_to_raw]
DEST_KEY = _raw
FORMAT = $1
REGEX = "Message"\s*:\s*"([^}]+\})
```

Everything above can be implemented through the user interface.
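The effect of the SEDCMD and the transform can be approximated outside Splunk with a short Python sketch. Python's `re` stands in for Splunk's PCRE engine (the pattern only uses features both support), the sample `raw` is a hypothetical, abbreviated one-line excerpt of the event from the question, and the sketch applies SEDCMD first only for illustration. On this sample, running the transform first and SEDCMD second gives the same string.

```python
import re

# Hypothetical one-line excerpt of _raw around BodyJson.Message (abbreviated from the question's event).
raw = r'"BodyJson": {"Type": "Notification", "Message": "{\"timestamp\": \"1680882420000\", \"unit\": \"Percent\", \"entity.id\": \"SERVICE-12345\"}", "SignatureVersion": "1"}'

# REGEX from [copy_message_to_raw]; FORMAT = $1 means capture group 1 becomes the new _raw.
PATTERN = r'"Message"\s*:\s*"([^}]+\})'


def sedcmd_unescape(text: str) -> str:
    # Mirrors SEDCMD-unescape = s/\\//g: delete every backslash.
    return re.sub(r"\\", "", text)


def copy_message_to_raw(text: str) -> str:
    # Return capture group 1, or the text unchanged when the pattern does not match.
    match = re.search(PATTERN, text)
    return match.group(1) if match else text


new_raw = copy_message_to_raw(sedcmd_unescape(raw))
# Same steps in the opposite order, to show the result does not depend on it for this sample.
swapped = sedcmd_unescape(copy_message_to_raw(raw))
print(new_raw)
print(new_raw == swapped)
```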

Note that the SEDCMD expression aggressively removes every backslash in the event. In my test environment (Splunk Enterprise 9.0.4.1), the usual patterns for stripping backslashes end up adding backslashes instead. For example:

```
\" => s/\x5C"/"/g => \\"
```

Splunk's treatment of backslashes in SEDCMD and SPL regular expression commands has always been finicky. Strict adherence to C-style escape sequences in SPL strings and no special handling in SEDCMD would be preferred, but I think they're doing their best to balance the user experience.
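To make "aggressively" concrete, the sketch below compares deleting every backslash with rewriting only the two-character sequence `\"`. It is plain Python string handling, not Splunk's SEDCMD engine, so it does not reproduce the Splunk backslash quirk shown above; the `body` value is a hypothetical fragment shaped like the Body field. Deleting all backslashes flattens the nested JSON but also turns each `\n` escape into a bare `n`, while the targeted rewrite keeps `\n` and leaves the nested `\\\"` sequences only partly rewritten.

```python
import re

# Hypothetical fragment shaped like the Body field: a JSON string containing
# \n escapes and a nested, double-escaped JSON string.
body = r'{\n \"Type\" : \"Notification\",\n \"Message\" : \"{\\\"unit\\\": \\\"Percent\\\"}\"\n}'

# Like SEDCMD-unescape: every backslash is deleted, so \n collapses to a bare "n".
aggressive = re.sub(r"\\", "", body)

# Targeted: only the two-character sequence \" becomes "; other escapes such as \n are kept.
targeted = body.replace('\\"', '"')

print(aggressive)
print(targeted)
```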


Thank you again!


Hi,

You can do this pretty easily with an INGEST_EVAL transform:

```
# props.conf
[aws:sqs]
TRANSFORMS-copy_bodyjson_message_to_raw = copy_bodyjson_message_to_raw

# transforms.conf
[copy_bodyjson_message_to_raw]
INGEST_EVAL = _raw=json_extract(_raw, "BodyJson.Message"), _time=strptime(json_extract(_raw, "timestamp"), "%s%3Q")
```

The example transform also extracts the timestamp from the inner JSON message.

If other events also use sourcetype = aws:sqs, you can use a source stanza in props.conf instead of a source type stanza, referencing the SQS input by name:

```
[source::<your_sqs_source_name>]
TRANSFORMS-copy_bodyjson_message_to_raw = copy_bodyjson_message_to_raw
```

If you need to retain the original event, you can clone the event into a new source type and modify _raw on the cloned event:

```
# props.conf
[source::<your_sqs_source_name>]
TRANSFORMS-clone_service_metric = clone_service_metric

[aws:sqs:service_metric]
TRANSFORMS-copy_bodyjson_message_to_raw = copy_bodyjson_message_to_raw

# transforms.conf
[clone_service_metric]
REGEX = .
CLONE_SOURCETYPE = aws:sqs:service_metric

[copy_bodyjson_message_to_raw]
INGEST_EVAL = _raw=json_extract(_raw, "BodyJson.Message"), _time=strptime(json_extract(_raw, "timestamp"), "%s%3Q")
```
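The clone flow can be pictured with a small conceptual Python model; it is not how Splunk implements cloning internally. The `Event` class and the `aws:sqs` source type on the original event are hypothetical stand-ins. The original event passes through unchanged, and only the copy, whose source type is now `aws:sqs:service_metric`, has its `_raw` replaced.

```python
import json
import re
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Event:
    sourcetype: str
    raw: str


def copy_bodyjson_message_to_raw(event: Event) -> Event:
    # Applied only to the clone, because only [aws:sqs:service_metric] references it.
    return replace(event, raw=json.loads(event.raw)["BodyJson"]["Message"])


def clone_service_metric(event: Event) -> list[Event]:
    # REGEX = . matches any character except a newline, so in practice every event is cloned.
    if not re.search(r".", event.raw):
        return [event]
    clone = replace(event, sourcetype="aws:sqs:service_metric")
    # The original is kept as-is; the clone goes through its own source type's transforms.
    return [event, copy_bodyjson_message_to_raw(clone)]


original = Event("aws:sqs", json.dumps({"BodyJson": {"Message": '{"unit": "Percent"}'}}))
events = clone_service_metric(original)
for e in events:
    print(e.sourcetype, e.raw)
```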