Indexed Extractions not working properly

Hi all,

We collect JSON data from a log file with a universal forwarder.

Most of the time the events are indexed correctly with the fields already extracted, but for a few events the fields are not extracted automatically.

If I reindex the same events, the indexed extraction works fine.

I did not find any entries in splunkd.log indicating that the extraction failed.

The following props.conf is on the universal forwarder and the heavy forwarder (maybe someone could explain which parameters are needed on the UF and which on the HF):

[svbz_swapp_task_activity_log]

The following props.conf is on the search head:

[svbz_swapp_task_activity_log]

KV_MODE=none

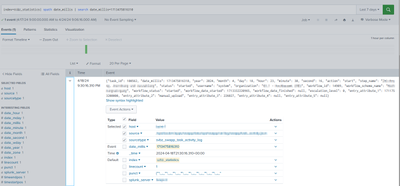

The first time, when it was indexed automatically, it looked like this:

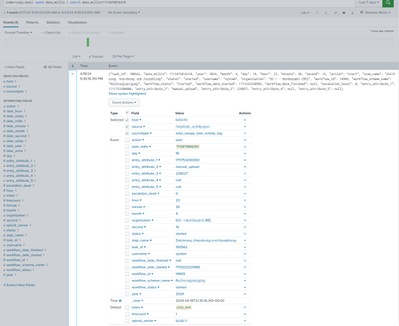

When I reindex the same event to another index, it looks fine:

In the last 7 days it worked correctly for about 32000 events, but for 168 events the automatic field extraction did not work.

Here is an example event:

{"task_id": 100562, "date_millis": 1713475816310, "year": 2024, "month": 4, "day": 18, "hour": 23, "minute": 30, "second": 16, "action": "start", "step_name": "XXX", "status": "started", "username": "system", "organization": "XXX", "workflow_id": 14909, "workflow_scheme_name": "XXX", "workflow_status": "started", "workflow_date_started": 1713332220965, "workflow_date_finished": null, "escalation_level": 0, "entry_attribute_1": 1711753200000, "entry_attribute_2": "manual_upload", "entry_attribute_3": 226027, "entry_attribute_4": null, "entry_attribute_5": null}

Does anyone have an idea why it sometimes works and sometimes does not?

If I now change KV_MODE on the search head, the fields are shown correctly for these 168 events, but for all other events the fields are extracted twice. Using spath with the same field names extracts them only once.

What is the best workaround for already indexed events to get proper search results?

Thanks and kind regards

Kathrin

Hey @nunz10 / @klowke_svbz,

I do not understand the need to extract all the fields at index time for JSON data. The AUTO_KV_JSON parameter is true by default, which means the fields are extracted automatically at search time. Unless there's a specific use case, I would not suggest using INDEXED_EXTRACTIONS = json.

And yes, changing KV_MODE will extract the fields twice at search time: once because of AUTO_KV_JSON and once because of the KV_MODE setting. Also, if KV_MODE is set to json, you will not be able to disable AUTO_KV_JSON.

Following is the document - https://docs.splunk.com/Documentation/Splunk/latest/Admin/propsconf#:~:text=pattern.%0A*%20Default%3...
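For reference, the search-time approach described here might look like the following props.conf on the search head. This is only a sketch: it assumes the sourcetype name from the original post and that INDEXED_EXTRACTIONS is not set for this sourcetype on the forwarders. Otherwise, events that already carry indexed fields would show each value twice.

```ini
# props.conf on the search head (sketch, not a tested configuration)
[svbz_swapp_task_activity_log]
# Extract fields from the JSON event at search time.
KV_MODE = json
```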

Thanks,

Tejas.

---

If the above solution helps, an upvote is appreciated..!!

Hi Tejas,

From my point of view it should be faster when the fields are already correctly extracted at index time. I just do not understand why it sometimes works correctly and at other times does not. So I still have no solution.

Extracting the fields at search time might be another option, but the index-time option should work as well.

If I understood the documentation correctly, the AUTO_KV_JSON parameter is only used if KV_MODE is set to 'auto' or 'auto_escaped'. I get the fields twice because they were already extracted at index time.
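As a rough sketch of the index-time setup discussed in this thread: for structured data, INDEXED_EXTRACTIONS is applied where the file is read, which here is the universal forwarder. The search head then turns off search-time key/value extraction so the indexed fields do not show up twice. The forwarder stanza contents are an assumption for illustration, since the original forwarder props.conf is not shown in full.

```ini
# props.conf on the universal forwarder (assumed contents, for illustration)
[svbz_swapp_task_activity_log]
# Parse the JSON at index time and create indexed fields.
INDEXED_EXTRACTIONS = json

# props.conf on the search head (as given in the original post)
[svbz_swapp_task_activity_log]
# Disable search-time key/value extraction to avoid duplicate field values.
KV_MODE = none
```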

My workaround is also not really good for performance, but at the moment I still use the spath command for each field I need to extract in the search, and then use the results in dashboards with loadjob.

Kind regards

Kathrin

I doubt that it's faster if you extract those fields at index time. When you (or Splunk) create indexed fields for every JSON event, the result is huge .tsidx files. Usually this means that your searches are slower, because the peers need to do a lot more I/O than with non-indexed fields.

Can you share your use case with some sample data? We can probably help you with it.

IMHO, using KV data instead of JSON can give you a much better-performing Splunk environment.

Same issue here. Did you find the root cause for this? Also, what workaround did you finally use?