Are you a member of the Splunk Community?

- Find Answers

- :

- Splunk Administration

- :

- Getting Data In

- :

- Re: Index time field extraction not working with b...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am doing index time field extraction for structured files. files are pipe delimited.

I am using following source type configuration-

[header]

INDEXED_EXTRACTIONS = psv

DATETIME_CONFIG =

LINE_BREAKER = ([\r\n]+)

FIELD_DELIMITER = |

NO_BINARY_CHECK = true

category = Structured

pulldown_type = 1

disabled = false

it work with all events except event where there is some special character in field like \"

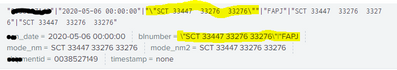

here blnumber should be \"SCT 33447 3344733276\" but its getting addition text from other field and "|" delimiter not worked.

I have tried using SEDCMD-mask_sc_raw = s/\\"/'/g at time of indexing

also tried transform

[mask_sc_meta]

SOURCE_KEY = _meta

DEST_KEY = _meta

REGEX = (.*)\\"(.*)

FORMAT = $1'$2

WRITE_META = false

But still issue with this extraction.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

sample text:

XXmentid|XX_date|blnumber|node|mode_nm|mode_nm2

"020202"|"2020-05-06 00:00:00"|"\"SCT 33447 33276 33276\""|"FAPJ"|"SCT 33447 33276 33276"|"SCT 33447 33276 33276"

[psv_test]

LINE_BREAKER = ([\r\n]+)

NO_BINARY_CHECK = true

SEDCMD-trim = s/\\"//g

SHOULD_LINEMERGE = false

category = Custom

description = psv

pulldown_type = true

PREAMBLE_REGEX = XX.*

disabled = false

DATETIME_CONFIG =

TRANSFORMS-psv = psv

[psv]

REGEX = (?<XXmentid>[^\|]+)\|(?<XX_date>[^\|]+)\|(?<blnumber>[^\|]+)\|(?<node>[^\|]+)\|(?<mode_nm>[^\|]+)\|(?<mode_nm2>[^\|]+)

FORMAT = XXmentid::$1 XX_date::$2 blnumber::$3 node::$4 mode_nm::$5 mode_nm2::$6

WRITE_META = true

For index time field extraction, INDEXED_EXTRACTIONS ignores SEDCMD.

All of the structured data expansions(e.g. FIELD_DELIMITER) in props.conf were no good.

so, I am going to extract on transforms.conf . but if you want index time field extraction,

I can't use DELIM and FIELD.

This setting works fine. the set of these REGEX,FORMAT and WRITE_META can make indexed fields.

It was a good learning experience. Thank you @yogip86

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

sample text:

XXmentid|XX_date|blnumber|node|mode_nm|mode_nm2

"020202"|"2020-05-06 00:00:00"|"\"SCT 33447 33276 33276\""|"FAPJ"|"SCT 33447 33276 33276"|"SCT 33447 33276 33276"

[psv_test]

LINE_BREAKER = ([\r\n]+)

NO_BINARY_CHECK = true

SEDCMD-trim = s/\\"//g

SHOULD_LINEMERGE = false

category = Custom

description = psv

pulldown_type = true

PREAMBLE_REGEX = XX.*

disabled = false

DATETIME_CONFIG =

TRANSFORMS-psv = psv

[psv]

REGEX = (?<XXmentid>[^\|]+)\|(?<XX_date>[^\|]+)\|(?<blnumber>[^\|]+)\|(?<node>[^\|]+)\|(?<mode_nm>[^\|]+)\|(?<mode_nm2>[^\|]+)

FORMAT = XXmentid::$1 XX_date::$2 blnumber::$3 node::$4 mode_nm::$5 mode_nm2::$6

WRITE_META = true

For index time field extraction, INDEXED_EXTRACTIONS ignores SEDCMD.

All of the structured data expansions(e.g. FIELD_DELIMITER) in props.conf were no good.

so, I am going to extract on transforms.conf . but if you want index time field extraction,

I can't use DELIM and FIELD.

This setting works fine. the set of these REGEX,FORMAT and WRITE_META can make indexed fields.

It was a good learning experience. Thank you @yogip86

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@to4kawa Thanks for quick response. It was really helpful. !!!!

One thing wanted to check is there limit in REGEX.

I have around 180 variables in file when i keep names in REGEX length become large and its not able to extract. Hence i used f2,f2 and so on..

[psv]

REGEX = (?<f1>[^\|]+)\|(?<f2>[^\|]+)\|(?<f3>[^\|]+)\|(?<f4>[^\|]+)\|(?<f5>[^\|]+)\|(?<f6>[^\|]+)\|(?<f7>[^\|]+)\|(?<f8>[^\|]+)\|(?<f9>[^\|]+)\|(?<f10>[^\|]+)\|(?<f11>[^\|]+)\|(?<f12>[^\|]+)\|(?<f13>[^\|]+)\|(?<f14>[^\|]+)\|(?<f15>[^\|]+)\|(?<f16>[^\|]+)\|(?<f17>[^\|]+)\|(?<f18>[^\|]+)\|(?<f19>[^\|]+)\|(?<f20>[^\|]+)\|(?<f21>[^\|]+)\|(?<f22>[^\|]+)\|(?<f23>[^\|]+)\|(?<f24>[^\|]+)\|(?<f25>[^\|]+)\|(?<f26>[^\|]+)\|(?<f27>[^\|]+)\|(?<f28>[^\|]+)\|(?<f29>[^\|]+)\|(?<f30>[^\|]+) and so on...

Thanks again!!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

regex101.com

180columns is 721steps.

DEPTH_LIMIT = <integer>

* Only set in transforms.conf for REPORT and TRANSFORMS field extractions. For EXTRACT type field extractions, set this in props.conf. * Optional. Limits the amount of resources that are spent by PCRE when running patterns that do not match. * Use this to limit the depth of nested backtracking in an internal PCRE function, match(). If set too low, PCRE might fail to correctly match a pattern.

* Default: 1000

If this REGEX violates this setting ,please modify it.

making regex query sample:

| makeresults count=180

| streamstats count

| eval pre="(?<field".count.">[^\|]+)\|"

| stats list(eval(if(count>=100,pre,NULL))) as list_1 list(eval(if(count<100,pre,NULL))) as list_2

| eval test=mvappend(list_2,list_1)

| eval regex=mvjoin(test,"")

| fields regex

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@to4kawa Thanks for this information