Hunk: Why am I unable to retrieve results for a da...

I have exactly the same issue as https://answers.splunk.com/answers/320535/post.html.

I tried the regex provided in the solution there, but it still doesn't work.

To elaborate: we have data in directories in the following format:

/.../../buildlogs/time_slot=201701070930/......

This example holds the data for 2017 Jan 7th, 9:30 AM.
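To make the directory convention concrete, here is a small Python sketch (not Hunk code) that pulls the time_slot value out of a path with the same pattern used for vix.input.1.et.regex and reads it as yyyyMMddHHmm in GMT. The helper name `folder_time` is hypothetical, and so is the example path, since the real prefix and file names are elided in the post.

```python
import re
from datetime import datetime, timezone

# Same pattern as vix.input.1.et.regex / vix.input.1.lt.regex.
TIME_SLOT_RE = re.compile(r".*?/time_slot=(\d+)/.*")


def folder_time(path: str) -> datetime:
    """Hypothetical helper: return the time encoded in a time_slot directory (GMT)."""
    m = TIME_SLOT_RE.match(path)
    if m is None:
        raise ValueError(f"no time_slot directory in {path!r}")
    # Java-style yyyyMMddHHmm (et.format / lt.format) is %Y%m%d%H%M in Python.
    return datetime.strptime(m.group(1), "%Y%m%d%H%M").replace(tzinfo=timezone.utc)


# Hypothetical path: the real prefix and file names are elided in the post.
print(folder_time("hdfs://xyz/buildlogs/time_slot=201701070930/part-00000"))
# → 2017-01-07 09:30:00+00:00
```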

Here is an excerpt from my indexes.conf:

```
vix.provider = abc
vix.input.1.splitter.hive.tablename = logger
vix.input.1.splitter.hive.fileformat = orc
vix.input.1.splitter.hive.dbname = logsdb
vix.input.1.path = hdfs://xyz/buildlogs/...
vix.input.1.splitter.hive.columnnames = contents,project
vix.input.1.splitter.hive.columntypes = string:string
vix.input.1.required.fields = timestamp
vix.input.1.ignore = (.+_SUCCESS|.+_temporary.*|.+_DYN0.+)
vix.input.1.accept = .+$
vix.input.1.et.format = yyyyMMddHHmm
vix.input.1.et.regex = .*?/time_slot=(\d+)/.*
vix.input.1.lt.format = yyyyMMddHHmm
vix.input.1.lt.regex = .*?/time_slot=(\d+)/.*
vix.input.1.et.timezone = GMT
vix.input.1.lt.timezone = GMT
vix.input.1.lt.offset = 1800
vix.input.1.et.offset = 0
```
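A minimal Python sketch of how the et/lt settings above are assumed to be used for time-based pruning: the regex captures the time_slot value, it is parsed with the yyyyMMddHHmm format in GMT, et.offset and lt.offset seconds are added to get a 30-minute window, and a directory is only read if that window overlaps the search range. This is an illustration under those assumptions, not Hunk's implementation; the half-open overlap test, the "scan when the regex does not match" fallback, and the function names are my own.

```python
import re
from datetime import datetime, timedelta, timezone

TIME_SLOT_RE = re.compile(r".*?/time_slot=(\d+)/.*")  # et.regex / lt.regex
ET_OFFSET_S = 0       # vix.input.1.et.offset
LT_OFFSET_S = 1800    # vix.input.1.lt.offset (30 minutes)


def partition_window(path):
    """Return (earliest, latest) for a path, or None if the regex does not match."""
    m = TIME_SLOT_RE.match(path)
    if m is None:
        return None
    t = datetime.strptime(m.group(1), "%Y%m%d%H%M").replace(tzinfo=timezone.utc)
    return t + timedelta(seconds=ET_OFFSET_S), t + timedelta(seconds=LT_OFFSET_S)


def should_scan(path, search_earliest, search_latest):
    """Assumed pruning rule: scan a path only if its window overlaps the search range."""
    window = partition_window(path)
    if window is None:
        return True  # assumption: no time info, so the path cannot be pruned
    et, lt = window
    return et < search_latest and search_earliest < lt


# Usage: a search over 2017-01-07 (GMT) with hypothetical paths.
day = datetime(2017, 1, 7, tzinfo=timezone.utc)
for p in ("hdfs://xyz/buildlogs/time_slot=201701070930/f",
          "hdfs://xyz/buildlogs/time_slot=201701080930/f"):
    print(p, should_scan(p, day, day + timedelta(days=1)))
```

Under this model the Jan 7th directory is selected for a Jan 7th search, so path pruning alone would not explain empty results; the event timestamps themselves also have to fall inside the search range.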

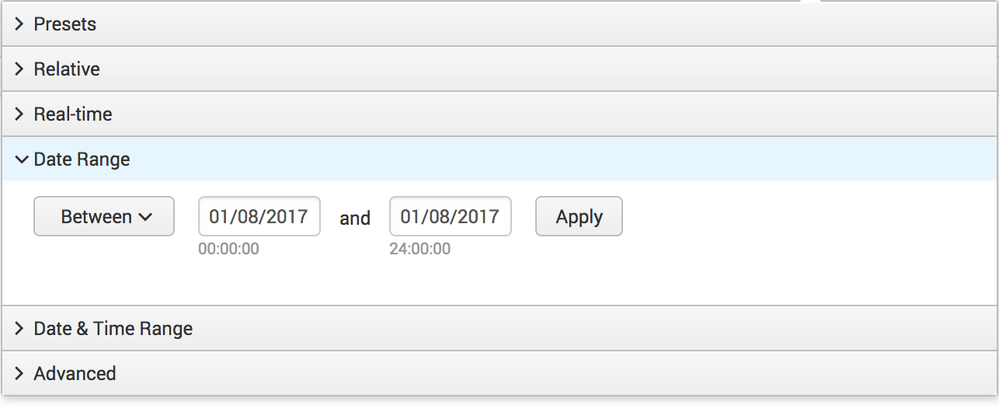

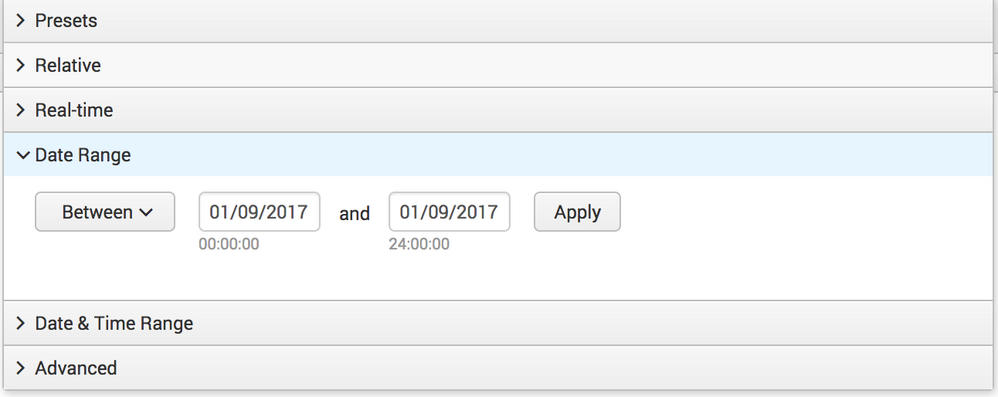

If I search with a date range covering any day other than today, I get no results.

However, if I search with today's date range, I get valid results.

I verified that data from previous days is present in Hive.

Can someone help?

What finally worked for me was strptime.

I am pasting the solution below.

I added the following line to my props.conf:

```
eval-_time=strptime(myTimeField,"%Y%m%d%H%M")
```

Now each event's _time is set from myTimeField, and the events are available from search.
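For reference, the props.conf line parses myTimeField as yyyyMMddHHmm and stores the resulting epoch seconds in _time. A rough Python equivalent is below, assuming myTimeField holds a string like the time_slot value (for example 201701070930). The helper name `to_epoch` is hypothetical, and it treats the value as UTC, whereas Splunk's strptime may apply the search-time timezone because the format string has no zone.

```python
from datetime import datetime, timezone


def to_epoch(my_time_field: str) -> float:
    """Rough analogue of strptime(myTimeField, "%Y%m%d%H%M") in Splunk eval.

    Assumption: the value is interpreted as UTC; Splunk may instead apply
    the search-time timezone because the format string has no zone.
    """
    dt = datetime.strptime(my_time_field, "%Y%m%d%H%M").replace(tzinfo=timezone.utc)
    return dt.timestamp()  # epoch seconds, the unit _time is stored in


print(to_epoch("201701070930"))  # → 1483781400.0
```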

Thanks to Luan from Splunk for helping us out.

More info on this: all the data returned from a search has CURRENT_TIME as its timestamp. Hence, if I search over the current day alone, all the data in Hive gets returned regardless of which day it belongs to, and any other search returns no data.
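A tiny Python model of this symptom, with hypothetical events and a hypothetical search time: if every event's _time is the time of the search, a time-range filter keeps all of them for today and none for any earlier day, whichever time_slot folder they came from.

```python
from datetime import datetime, timedelta


def search(events, earliest, latest):
    """Keep events whose _time falls in [earliest, latest), like a time-range picker."""
    return [e for e in events if earliest <= e["_time"] < latest]


now = datetime(2017, 1, 9, 14, 0)  # hypothetical time of the search
# The symptom: every event carries the search time as _time,
# whichever time_slot folder (day) it actually came from.
events = [{"_time": now, "time_slot": s}
          for s in ("201701070930", "201701081000", "201701091200")]

today = datetime(2017, 1, 9)
yesterday = today - timedelta(days=1)
print(len(search(events, today, today + timedelta(days=1))))  # → 3
print(len(search(events, yesterday, today)))                  # → 0
```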

Ideally, I would like the timestamp to be extracted from my Hive field start_time, which holds the actual timestamp of the log. But if I set it up that way, any Splunk search with a date range would have to go through every single partition in Hive, which is incredibly slow. This is because, in our case, a partition such as /.../../buildlogs/time_slot=201701070930/...... is not guaranteed to contain only logs from those 30 minutes.
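The reasoning about start_time can be illustrated in Python with hypothetical data: if a time_slot folder can hold logs whose start_time falls outside its 30-minute window, pruning folders by their folder time misses matches, so a correct search on start_time has to open every partition. The partition contents and function names below are invented for illustration.

```python
from datetime import datetime, timedelta

SLOT = timedelta(minutes=30)  # width of one time_slot folder

# Hypothetical data: folder time -> start_time values of the logs stored there.
# The 09:30 folder holds a log that actually started at 08:55.
PARTITIONS = {
    datetime(2017, 1, 7, 9, 30): [datetime(2017, 1, 7, 9, 41), datetime(2017, 1, 7, 8, 55)],
    datetime(2017, 1, 7, 10, 0): [datetime(2017, 1, 7, 10, 12)],
}


def pruned_matches(earliest, latest):
    """Open only folders whose 30-minute window overlaps the range, then filter on start_time."""
    return [s for folder, starts in PARTITIONS.items()
            if folder < latest and earliest < folder + SLOT
            for s in starts if earliest <= s < latest]


def full_scan_matches(earliest, latest):
    """Open every folder and filter purely on start_time."""
    return [s for starts in PARTITIONS.values() for s in starts if earliest <= s < latest]


lo, hi = datetime(2017, 1, 7, 8, 30), datetime(2017, 1, 7, 9, 0)
print(pruned_matches(lo, hi))     # → []
print(full_scan_matches(lo, hi))  # → [datetime.datetime(2017, 1, 7, 8, 55)]
```

If _time is instead taken from the folder, the time filter and the folder pruning use the same value, so nothing is skipped incorrectly; the cost is that _time can differ from start_time by the folder-versus-log drift.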

In practice, what I would like is for each log's timestamp to be taken from the folder it is in.

For example, all logs under time_slot=201701070930 would get that value (2017 Jan 7th, 9:30 AM) as their timestamp. A log's own timestamp and its folder timestamp are usually within an hour of each other, so the difference doesn't matter much.

The start_time field from Hive will still be processed and visible in the search results anyway.

The last answer on this page touches on this:

https://answers.splunk.com/answers/235458/how-to-set-time-from-hive-field.html

But that answer takes 'yourTimeField' from Hive, whereas I would like the time to be extracted from the folder path using the regex described above.

Can anyone help me with this?