How to parse escaped json at index time?

(edited to give a more accurate example)

I have an input that is json, but one of its fields contains escaped json.

The real data is a much more complex version of the example below (many fields and nesting, both outside of message and inside the message string), so this isn't just a field extraction of one particular field. I need to tell Splunk to extract the message string (removing the escaping) and then parse that as json.

{
  "message": "{\"foo\": \"bar\", \"baz\": {\"a\": \"b\", \"c\": \"d\"}}"
}

I can extract this at search time with rex and spath, but would prefer to do so at index time.

parsing this message with jq .message -r |jq . gives:

{
  "foo": "bar",
  "baz": {
    "a": "b",
    "c": "d"
  }
}

what I ideally want is to have it look like:

{
  "message": {
    "foo": "bar",
    "baz": {
      "a": "b",
      "c": "d"
    }
  }
}


I faced a similar issue when using an SQS queue:

{"user":"abc","friend":"def"}
{"user":"def","friend":"abc"}

{"MessageId": "...", "ReceiptHandle": "...", "MD5OfBody": "...", "Body": "{\"user\":\"abc\",\"friend\":\"def\"}\n{\"user\":\"def\",\"friend\":\"abc\"}", "Attributes": {"SenderId": "...", "ApproximateFirstReceiveTimestamp": "...", "ApproximateReceiveCount": "1", "SentTimestamp": "..."}}

{"MessageId": "...", "ReceiptHandle": "...", "MD5OfBody": "...", "Body": {"user":"abc","friend":"def"}, "Attributes": {"SenderId": "...", "ApproximateFirstReceiveTimestamp": "...", "ApproximateReceiveCount": "1", "SentTimestamp": "..."}}
{"MessageId": "...", "ReceiptHandle": "...", "MD5OfBody": "...", "Body": {"user":"def","friend":"abc"}, "Attributes": {"SenderId": "...", "ApproximateFirstReceiveTimestamp": "...", "ApproximateReceiveCount": "1", "SentTimestamp": "..."}}
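For what it's worth, the SQS case (a Body string holding several newline-separated json documents) could be handled by a small preprocessing step before the data reaches Splunk. The sketch below is only an illustration in Python, not a Splunk feature; the function name `split_body` and the trimmed-down sample envelope are made up for the example.

```python
import json


def split_body(sqs_message: str) -> list:
    """Return one event per JSON line found in the message Body."""
    envelope = json.loads(sqs_message)   # parse the outer SQS envelope
    body = envelope.pop("Body")          # Body is a string of newline-separated JSON
    events = []
    for line in body.splitlines():
        if line.strip():                 # skip empty lines
            # copy the envelope fields and attach the parsed Body
            events.append({**envelope, "Body": json.loads(line)})
    return events


# Hypothetical sample, shortened from the message shown above
sample = json.dumps({
    "MessageId": "...",
    "Body": '{"user":"abc","friend":"def"}\n{"user":"def","friend":"abc"}',
    "Attributes": {"ApproximateReceiveCount": "1"},
})

for event in split_body(sample):
    print(json.dumps(event))
```

Each output line is then plain json with a structured Body, so no special handling is needed at index time.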


This is another issue. In order to get more attention to your question, post it as a new thread.


You should repair this on the outside so that it is valid JSON before splunk eats it. That is the TRUE solution. Be aware that the problem is NOT the escapes, but rather the unquoted fields (i.e. 'message'). In the meantime, you can fix it in SPL like this:

| makeresults
| eval _raw="{
\"message\": \"{\\\"foo\\\": \\\"bar\\\"}\"
}"
| rex mode=sed "s/([\r\n]+\s*)(\w+)/\1\"\2\"/g"
| kv


sorry, my sample should have included quotes around "message" (perils of typing examples in rather than cut-n-paste actual samples)

The original is fully valid json, but with one of the json fields being text that (once it's unescaped) is also valid json

so, if you were to use the jq tool to parse the original

jq -r .message would return the string {"foo": "bar"}, which happens to also be valid json, so you could then do a second pass like

jq -r .message | jq -r .foo would return the string bar

but

jq .message.foo will fail, as message is a string, not a structure.

the actual contents of message are much more complex (a full nested json structure that has (almost) all the interesting fields that we care about)

I was hoping that there was some way to define a sourcetype parser that would do the json parsing, and then allow me to specify to do the parsing again on the contents of a particular field (like I do with the jq example above)
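To make the two-pass idea concrete (this is what the jq pipeline does), here is a minimal sketch in Python. It only illustrates the desired transformation, for example as a step run before the data reaches Splunk; it is not a Splunk sourcetype setting. The function name `unwrap_field` is invented for the example, and the `message` key comes from the sample in this thread.

```python
import json


def unwrap_field(raw_event: str, field: str = "message") -> dict:
    """Parse an event, then parse one string field as JSON in a second pass."""
    event = json.loads(raw_event)              # first pass: outer JSON
    inner = event.get(field)
    if isinstance(inner, str):
        try:
            event[field] = json.loads(inner)   # second pass: embedded JSON
        except json.JSONDecodeError:
            pass                               # not JSON after all; keep the string
    return event


raw = r'{"message": "{\"foo\": \"bar\", \"baz\": {\"a\": \"b\", \"c\": \"d\"}}"}'
event = unwrap_field(raw)
print(json.dumps(event, indent=2))
print(event["message"]["baz"]["c"])
```

Because the unescaping is done by a real json parser rather than by text replacement, quotes or backslashes inside the embedded values are handled correctly.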


You can do search-time or index-time field extractions with any ordering/layering that you might like to do using transforms.conf and props.conf. Just dig through the docs and make it happen.


Sorry, mate, but this is counter-productive. It's as if someone asked how to write hello world in C and you responded "Sure, you can write anything in C, just learn it and make it happen". Sounds kinda silly, doesn't it?


in such a case, you need to modify the event before indexing.

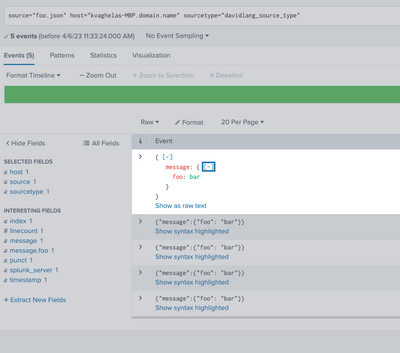

With the below event, I have created a source type that will do the same for you.

foo.json

{"message": "{\"foo\": \"bar\"}"}
{"message": "{\"foo\": \"bar\"}"}
{"message": "{\"foo\": \"bar\"}"}
{"message": "{\"foo\": \"bar\"}"}
{"message": "{\"foo\": \"bar\"}"}

props.conf

[davidlang_source_type]
DATETIME_CONFIG = CURRENT
LINE_BREAKER = ([\r\n]+)
NO_BINARY_CHECK = true
# Strip the opening quote of the message value
SEDCMD-a = s/{\"message\":\s\"/{"message":/g
# Strip the closing quote of the message value at the end of the event
SEDCMD-b = s/\"}$/}/g
# Unescape the remaining \" sequences
SEDCMD-c = s/\\"/"/g
SHOULD_LINEMERGE = false
category = Custom
disabled = false
pulldown_type = true
INDEXED_EXTRACTIONS = json

Note: Just use this trick on your original event.

I hope this will help you.

Thanks

KV



I wouldn't be 100% sure if those are indexed extractions. You have auto-kv in action since the json is interpreted in the search window. You can verify it by searching for indexed values from those "subfields". I'd be tempted to say that they aren't indexed. As far as I understand from the Masa diagrams, indexed extractions happen _before_ transforms are applied so your SEDCMD's won't influence them.

BTW, fiddling with simple text-replacement over a json structure is... risky.


not as elegant as I was hoping. As PickleRick notes, doing a sed on the entire message is risky: consider quotes embedded in a text field anywhere in the message, which would break the message.
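A small Python sketch of that failure mode, assuming the three SEDCMD substitutions behave like plain regex/text replacements (the helper names here are made up for the illustration). The replacements work on the simple sample but produce invalid json as soon as a value inside the embedded document contains a quote, while a real two-pass json parse still succeeds.

```python
import json
import re


def sed_style_unescape(line: str) -> str:
    """Mimic the three SEDCMD substitutions with plain text replacement."""
    line = re.sub(r'\{"message":\s"', '{"message":', line)  # like SEDCMD-a
    line = re.sub(r'"\}$', '}', line)                        # like SEDCMD-b
    return line.replace('\\"', '"')                          # like SEDCMD-c


def is_valid_json(text: str) -> bool:
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False


def wrap(inner: dict) -> str:
    """Build an event whose message field holds the inner object as an escaped string."""
    return json.dumps({"message": json.dumps(inner)})


simple = wrap({"foo": "bar"})        # same shape as the foo.json sample
tricky = wrap({"foo": 'say "hi"'})   # a value with embedded quotes

print(is_valid_json(sed_style_unescape(simple)))   # text replacement is fine here
print(is_valid_json(sed_style_unescape(tricky)))   # text replacement breaks the event
print(json.loads(json.loads(tricky)["message"]))   # two-pass parsing still works
```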


You can try it in search as well, like:

YOUR_SEARCH
| spath
| rename Body as _raw | spath | fields Message BodyJson* | rename Message as _raw | spath | table BodyJson* *

KV


trying to do this at index time, not just search time


I believe that it's already doing a json extraction of the fields in the message, I just need to do a second extraction of the contents of one of those fields. If you were to ask for the contents of message, you would get well formatted json.


I have found that heavy index time extraction speeds up searches compared to search time extraction (less data needs to be fetched due to the increased accuracy of the searches)

I'd rather spend cpu as the data is arriving rather than as users are waiting for results (it significantly improves the perceived performance, even if it takes more total CPU, which I question)


It's not that easy. Indexed extractions (and indexed fields in general) have their uses in some cases, but it's good to know when and where to use them. Just making fields indexed "because my search is slow" is often not the way to go - it often means that your search is not well-written. There are also other ways of improving search performance.


agreed, but when the data you are likely to be searching on can appear in multiple places in the raw message, being able to extract the field at index time so that at search time you are only retrieving the messages that have the search term in the right place can be a huge win.

(I'm 'new member' with the account for my current company, but have been running Splunk since 2006, so very aware of the trade-offs, but thanks for raising the concern)


OK. It's just that I've seen the "it's slow, let's create indexed fields!" approach too many times 😉

But back to the topic - indexed extractions happen at the beginning of event processing, so by default you can't modify your event with transforms (even if you managed to write a "safe" regex for manipulating that json; I always advise a great deal of caution when dealing with structured data by means of simple text hacking). See https://docs.splunk.com/Documentation/Splunk/9.0.4/Forwarding/Routeandfilterdatad#Caveats_for_routin...

The only very ugly idea I could have (but I've never tried it and don't know if it's possible at all) would be to modify the event with a transform and then reroute it back to earlier queue (I'm not sure which at the moment; it's a bit unclear to me how far back you'd need to move the event). Apart from the fact that I don't know if it would work at all (or if routing works well only on index queue vs. null queue), even if it worked you'd have to make sure that you don't get into a routing loop and I have no clear idea here how to do it.

So you can see that it's not that easy, unfortunately.


OK, plot thickens. "Normal" rerouting won't work since props/transforms are _not_ processed for indexed extractions.


Since this is a really interesting problem, I gave it some more thought. I'm still not sure if it is possible at all, but the only way it could possibly work is if you did it like this (I don't have the time to verify it, which is a pity, because the problem itself is nice):

- Ingest the data initially with a sourcetype of stA where you don't do indexed extractions.

- Apply a transform to that sourcetype to de-escape the contents of the embedded json (unfortunately, as I wrote earlier, it might be a bit risky since you can only operate on the raw string; you don't have parsed fields available).

- Apply CLONE_SOURCETYPE to cast the event to sourcetype stB.

- Route the original stA sourcetype event to nullQueue.

- Route the stB sourcetype back to structuredparsingQueue.

The trick is that after the cloning step your stB event would not have been structured-parsed, so it should normally hit the transform redirecting it to another queue (I still have no idea if it's possible to push the event that far back, mind you), but after indexed extraction it wouldn't process that transform again (so we'd avoid looping).

But again - while this is the only way it could possibly work if it's at all possible, I'm aware that there are weak spots in this (mostly the last step is highly questionable - I'd be tempted to say that Splunk will not push the event that far back in the queue).


Ugh. First question is why would you want to do index-time extractions, especially if you have many fields. It will inflate your index sizes.

Secondly, indexed extractions are relatively early in the queue whereas transforms are near the end of it. So you might find it complicated, if it is possible at all - probably you'd need ingest-evals with json_extract(). But that would be very ugly. And might be CPU-intensive.