How to monitor and index tar.gz files in Splunk?

I have a tar.gz file and I want to monitor it continuously. I tried to index it in Splunk Enterprise via Settings > Data Inputs > Files & Directories, but when I run a search, Splunk returns no results.

What are the steps to continuously monitor tar.gz files and index them in Splunk? Do I need to write a script that automatically decompresses the tar.gz file so Splunk can index it? Thanks.

If you are trying to monitor a file on a universal forwarder (i.e. tar.gz on a remote system), you can use the GUI to create a forwarder data/file input.

Settings --> Data Inputs --> Forwarded Inputs --> Files & Directories

Once that is complete, go to Forwarder Management and enable the app by editing it and checking the box. The deployment takes a few minutes, and results should start coming back shortly thereafter.

If it doesn't start indexing the data, and you have direct access to the file location, try moving the files out of the monitored location (e.g. from /log to /opt) and back again. The move should trigger indexing.

Hi, thank you for your answer. I'm using a heavy forwarder to monitor those compressed logs.

My comment above works for me.

I've already done this, but still no logs are being indexed.

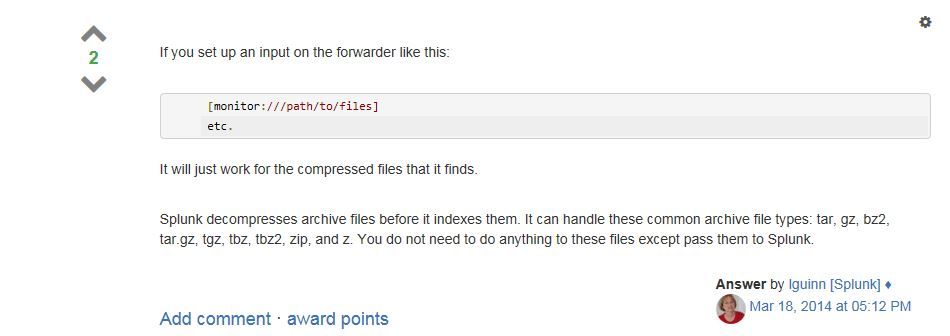

According to the most recent docs, Splunk does index compressed files:

http://docs.splunk.com/Documentation/Splunk/6.5.0/Data/Monitorfilesanddirectories

How Splunk Enterprise monitors archive files

Archive files (such as a .tar or .zip file) are decompressed before being indexed. The following types of archive files are supported:

- .tar
- .gz
- .bz2
- .tar.gz and .tgz
- .tbz and .tbz2
- .zip
- .z

If you add new data to an existing archive file, the entire file is reindexed, not just the new data. This can result in event duplication.

I use the universal forwarder to monitor compressed files. I haven't tried it with the GUI, though.

Normally Splunk should log an error message in $SPLUNK_HOME/var/log/splunk/splunkd.log when it is not reading a file. You can force it with a ./splunk restart, or try a ./splunk add oneshot and check splunkd.log to see what happens.

Also, what kind of files are in the .tar.gz? Maybe there is something inside that Splunk can't read.
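
One way to follow up on the splunkd.log suggestion is to filter the log for warnings and errors that mention the archive. This is only a minimal Python sketch: it assumes the log is plain text with the level (WARN or ERROR) appearing as a separate word on each line, and the helper name `find_input_problems` is hypothetical, not part of Splunk.

```python
import re
from pathlib import Path

# Match WARN or ERROR as whole words anywhere on the line (assumed log layout).
LEVEL_RE = re.compile(r"\b(WARN|ERROR)\b")


def find_input_problems(log_path, needle):
    """Return WARN/ERROR lines from log_path that mention needle."""
    hits = []
    # errors="replace" keeps the scan going if the log has odd bytes in it.
    with Path(log_path).open(encoding="utf-8", errors="replace") as log:
        for line in log:
            if needle in line and LEVEL_RE.search(line):
                hits.append(line.rstrip("\n"))
    return hits
```

Calling `find_input_problems` with the path to splunkd.log and the archive's file name would return only the lines worth reading first.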

Inside the .tar.gz is a log file.
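
To confirm what the archive really contains, a short Python sketch like the one below could list its members and guess whether each one is text. The function name `describe_archive` is hypothetical, and the NUL-byte check is a rough heuristic chosen for illustration, not the test Splunk itself applies.

```python
import tarfile


def describe_archive(archive_path, sample_bytes=512):
    """List regular files in a tar archive and guess whether each is text."""
    report = []
    # Mode "r:*" lets tarfile detect gzip, bzip2, or no compression.
    with tarfile.open(archive_path, mode="r:*") as archive:
        for member in archive.getmembers():
            if not member.isfile():
                continue  # skip directories, links, and devices
            handle = archive.extractfile(member)
            sample = handle.read(sample_bytes) if handle else b""
            # Heuristic (an assumption): a NUL byte near the start
            # suggests binary content rather than a text log.
            looks_text = b"\x00" not in sample
            report.append((member.name, member.size, looks_text))
    return report
```

Each tuple in the result holds the member name, its size in bytes, and the text guess, which makes an unexpected binary member easy to spot.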

Check your sourcetype as well. Does it match the data format?

How do you monitor compressed files without using the GUI? Is it done in inputs.conf?

Yes, you would use inputs.conf.

Here is what I do, in inputs.conf under $SPLUNK_HOME/etc/system/local:

    [batch:///var/nfs/SAT_SplunkLogs/weblogic/twc_media4/*.zip]
    move_policy = sinkhole
    host_segment = 5
    sourcetype = wls_managedserver
    index = twc

Keep in mind the batch option on the first line. This will ERASE the zip file when Splunk finishes indexing. If you don't want that, change batch to monitor and delete the move_policy line.

Also, you must restart Splunk for any changes in inputs.conf to take effect.
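
The batch-to-monitor change described here can be sketched as a small text rewrite. The Python below is illustrative only: `batch_to_monitor` is a hypothetical helper, and it assumes the stanza uses plain `key = value` lines like the example in this thread.

```python
def batch_to_monitor(stanza_text):
    """Rewrite batch:// stanzas as monitor:// and drop move_policy lines."""
    converted = []
    for line in stanza_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("[batch://"):
            # Keep the path, change only the input type.
            line = line.replace("[batch://", "[monitor://", 1)
        elif stripped.split("=", 1)[0].strip() == "move_policy":
            continue  # move_policy belongs to batch inputs, so drop it
        converted.append(line)
    return "\n".join(converted)


# The stanza posted earlier in this thread.
example = """[batch:///var/nfs/SAT_SplunkLogs/weblogic/twc_media4/*.zip]
move_policy = sinkhole
host_segment=5
sourcetype=wls_managedserver
index=twc"""

print(batch_to_monitor(example))
```

The printed stanza starts with `[monitor:///var/nfs/SAT_SplunkLogs/weblogic/twc_media4/*.zip]` and no longer contains `move_policy`, so the zip files are left in place after indexing.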

Yes it is.

Splunk won't index compressed files because they look like binaries. A script is one idea. Or you could have Splunk monitor the files before they are tarred.

If this reply helps you, Karma would be appreciated.

I downvoted this post because the answer provided is incorrect.

I downvoted this post because Splunk does index compressed files; it just processes them sequentially rather than in parallel. CPU is the key constraint if you are going to decompress a lot of files (more than 50k, say).

In my case, Splunk Enterprise did not index the compressed file, so we created a bash script to uncompress the data and then proceeded with the indexing.
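
The bash script itself is not shown in the thread. As a rough illustration of the same workaround, here is a Python sketch that extracts each archive once into a directory that Splunk is assumed to monitor already. The function name `extract_new_archives` and the one-subdirectory-per-archive layout are hypothetical choices, not the poster's actual script.

```python
import shutil
import tarfile
from pathlib import Path


def extract_new_archives(source_dir, target_dir):
    """Extract each *.tar.gz in source_dir once into target_dir/<archive name>/."""
    source, target = Path(source_dir), Path(target_dir)
    extracted = []
    for archive_path in sorted(source.glob("*.tar.gz")):
        dest = target / archive_path.name[: -len(".tar.gz")]
        if dest.exists():
            continue  # already extracted on an earlier run
        dest.mkdir(parents=True)
        with tarfile.open(archive_path, mode="r:gz") as archive:
            for member in archive.getmembers():
                out_path = (dest / member.name).resolve()
                # Copy regular files only, and refuse member paths
                # that would land outside the destination directory.
                if not member.isfile() or dest.resolve() not in out_path.parents:
                    continue
                out_path.parent.mkdir(parents=True, exist_ok=True)
                with archive.extractfile(member) as src, open(out_path, "wb") as out:
                    shutil.copyfileobj(src, out)
        extracted.append(dest)
    return extracted
```

Run on a schedule, this leaves the original archives untouched and only writes new plain files, so a regular monitor input on the target directory would pick them up.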

I downvoted this post because Splunk can index compressed files.

I downvoted this post because this answer is incorrect. Splunk is capable of monitoring compressed files. There must be some other issue here.

http://docs.splunk.com/Documentation/Splunk/latest/Data/Monitorfilesanddirectories