- Re: Question - Ensure Splunk Free won't exceed dai...


Greetings,

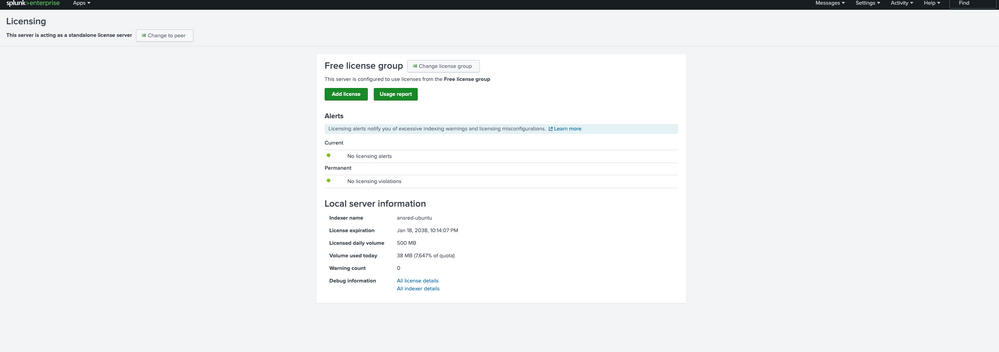

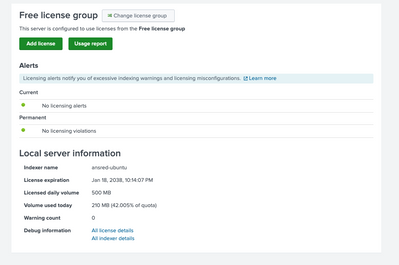

I have a working Splunk Free instance running on Ubuntu.

It is for a home lab setup.

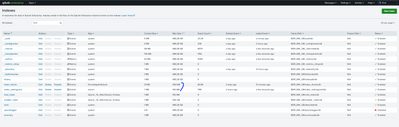

I have connected two syslog servers plus apps via API (Local Inputs).

My question is how to make Splunk Free release disk space and keep the data ingested from those syslog servers and apps below the 500 MB limit.

For example, if one of the syslog servers keeps sending data, could it have its own limit, with Splunk deleting older data to make room for new data, so that the 500 MB limit is never triggered?


You can set the index limits to whatever storage size you have - it doesn't affect your license usage.

For example, if you have 20TB of storage space, you can hold 20TB worth of data without any issues, as long as you don't ingest more than your licensing limit each day.

So you can ingest 500MB per day and not remove any of it until you've hit your 20TB storage limit, and that will not generate a licensing warning.

Ingesting data is one thing, and that's how Splunk is licensed. And data storage is another thing. You're not limited in any way on your storage size. It's the ingestion that's limited by your licensing terms.


Managing your storage, including deleting old data to make room for new data, will not keep you below your ingest quota. The 500MB (or other) ingest limit applies to the volume of data indexed per day, measured before compression. It makes no difference what else is on that disk.

There are two ways to stay below quota: a) limit the data sent to Splunk; and b) have Splunk throw away unneeded data.

I'll leave the first option up to you since you know how the data is coming in to Splunk.

The second option involves using props and transforms to filter out unwanted data. Regular expressions are defined and any event matching one of the expressions is sent to the "null queue" (discarded). See https://docs.splunk.com/Documentation/Splunk/8.2.7/Forwarding/Routeandfilterdatad#Filter_event_data_... for details.
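As a rough sketch of what that filtering could look like, the two stanzas below discard every event of one sourcetype that matches a regular expression. The `syslog` sourcetype, the `drop_info` transform name, and the regex itself are assumptions made for illustration; all three would need adjusting to the actual data.

```ini
# props.conf (e.g. $SPLUNK_HOME/etc/system/local/props.conf)
# Assumption: the incoming events use the "syslog" sourcetype.
[syslog]
TRANSFORMS-drop_info = drop_info

# transforms.conf (same directory)
# "drop_info" is a hypothetical name; the regex is only an example pattern.
[drop_info]
REGEX = (?i)\b(info|debug)\b
DEST_KEY = queue
FORMAT = nullQueue
```

Events routed to the null queue are discarded before indexing, so they should not count toward the daily quota. A restart of Splunk is typically needed after editing these files.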

If this reply helps you, Karma would be appreciated.


Remember, though, that Splunk's ingestion process does not keep state information: you can't pass information from one event to another. Each event is processed separately, so you can't implement a "threshold" on Splunk input. You can filter out events that match predefined criteria _applying to a single event_, but you can't start filtering events once you have received too much data, or too many events of a given type, or based on any other "group criteria".


Thanks for sharing that.

So to confirm, there is no plugin or script I can use on the Linux machine to rotate data and set a prune schedule so that the storage does not hit the 500 MB free limit?

Thanks


Do NOT attempt to manage storage outside of Splunk. That will result in much sadness.

There are settings in indexes.conf to control how much storage space Splunk is allowed to use. None of them have anything to do with your daily ingest limit. Storage and ingest are two different things. Even if you limit Splunk to 100MB of disk space, it's still possible to ingest more than 500MB of data - Splunk will simply delete older data to make room for newer data.
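For illustration only, a per-index storage cap might look like the fragment below. The index name `lab_syslog` is hypothetical; the two settings would be added to the stanza of an index that already exists, and the values are examples rather than recommendations.

```ini
# indexes.conf (e.g. $SPLUNK_HOME/etc/system/local/indexes.conf)
# "lab_syslog" is a hypothetical index name; use the stanza of an existing index.
[lab_syslog]
# Cap the total size of the index (hot, warm, and cold buckets) at roughly 100 MB.
maxTotalDataSizeMB = 100
# Also retire data older than 30 days (value in seconds: 30 * 86400).
frozenTimePeriodInSecs = 2592000
```

Whichever limit is reached first triggers retirement, and unless an archive destination is configured the retired data is deleted. Splunk retires whole buckets, so the actual size on disk only approximates the cap. Neither setting changes how much data is counted against the license.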

If this reply helps you, Karma would be appreciated.


Good to know.

Storage:

I just found the doc https://docs.splunk.com/Documentation/Splunk/9.0.0/Indexer/Setaretirementandarchivingpolicy on editing indexes.conf to delete older data. I will see if that works with the free license setup.

Ingestion rate:

I will try to limit ingestion at the source, so that the servers send only error data, rather than info-level data, to the data inputs.

Thanks!


Long story short - Splunk will retire old data when an index hits its limits, but that will not affect the ingest size (and thus license consumption) in any way.

So if you have just one index in your Splunk installation and set it to a 10MB size limit, it should not grow above that (let's not dig into raw data vs. index data, acceleration idx files and so on; for the sake of this argument let's assume that 10MB means exactly 10MB of data and forget the whole bucket mechanics). But Splunk will ingest whatever you throw at it. It will happily exceed your licensing limit but will only remember the last 10MB worth of data, since you set it up that way.


Yes, that makes sense now. I will limit the two indexes I have to a maximum size each and make sure the sources themselves do not send enough data to exceed the daily limit.

Two indexes, with maximum sizes set to 100 MB and 300 MB.

Thank you!! @PickleRick




Sounds good. I just tweaked how much the servers send to the Splunk Free instance. I will monitor it as needed to make sure it won't hit the daily limit.

The setup should be in good shape (for a free Splunk instance).

Thanks both @PickleRick and @richgalloway you both helped here!! 👏


I see. Thanks for sharing the two options available to me.

I will look into the second option, as it seems useful both for staying within the Splunk Free limit and for preventing Splunk from overfilling the disk on the machine itself.