How to configure Splunk to index NetApp CIFS logs in XML format?

I am having issues configuring Splunk to index NetApp CIFS logs in XML format.

Here is an example of 3 events:

<Events xmlns="http://www.netapp.com/schemas/ONTAP/2007/AuditLog">

<Event><System><Provider Name="Netapp-Security-Auditing"/><EventID>4656</EventID><EventName>Open Object</EventName><Version>101.3</Version><Source>CIFS</Source><Level>0</Level><Opcode>0</Opcode><Keywords>0x8020000000000000</Keywords><Result>Audit Success</Result><TimeCreated SystemTime="2016-03-24T17:00:18.275811000Z"/><Correlation/><Channel>Security</Channel><Computer>4cf616e5-deec-11e5-9347-00a0988f86b6/e64ece12-df28-11e5-9348-00a0988f86b6</Computer><Security/></System><EventData><Data Name="SubjectIP" IPVersion="4">10.10.10.10</Data><Data Name="SubjectUnix" Uid="0" Gid="1" Local="false"></Data><Data Name="SubjectUserSid">S-9-9-99-9999999999-999999999-9999999999-9999</Data><Data Name="SubjectUserIsLocal">false</Data><Data Name="SubjectDomainName">DOMAIN</Data><Data Name="SubjectUserName">admin</Data><Data Name="ObjectServer">Security</Data><Data Name="ObjectType">Directory</Data><Data Name="HandleID">0000000000041f;00;00000040;5e1fd3f6</Data><Data Name="ObjectName">(name);/</Data><Data Name="AccessList">%%4423 %%1541 </Data><Data Name="AccessMask">10080</Data><Data Name="DesiredAccess">Read Attributes; Synchronize; </Data><Data Name="Attributes">Open a directory; </Data></EventData></Event>

<Event><System><Provider Name="Netapp-Security-Auditing"/><EventID>4656</EventID><EventName>Open Object</EventName><Version>101.3</Version><Source>CIFS</Source><Level>0</Level><Opcode>0</Opcode><Keywords>0x8020000000000000</Keywords><Result>Audit Success</Result><TimeCreated SystemTime="2016-03-24T17:00:18.276812000Z"/><Correlation/><Channel>Security</Channel><Computer>4cf616e5-deec-11e5-9347-00a0988f86b6/e64ece12-df28-11e5-9348-00a0988f86b6</Computer><Security/></System><EventData><Data Name="SubjectIP" IPVersion="4">10.10.10.10</Data><Data Name="SubjectUnix" Uid="0" Gid="1" Local="false"></Data><Data Name="SubjectUserSid">S-9-9-99-9999999999-999999999-9999999999-9999</Data><Data Name="SubjectUserIsLocal">false</Data><Data Name="SubjectDomainName">DOMAIN</Data><Data Name="SubjectUserName">admin</Data><Data Name="ObjectServer">Security</Data><Data Name="ObjectType">Directory</Data><Data Name="HandleID">0000000000041f;00;00000040;5e1fd3f6</Data><Data Name="ObjectName">(name);/</Data><Data Name="AccessList">%%4423 %%1541 </Data><Data Name="AccessMask">10080</Data><Data Name="DesiredAccess">Read Attributes; Synchronize; </Data><Data Name="Attributes"></Data></EventData></Event>

<Event><System><Provider Name="Netapp-Security-Auditing"/><EventID>4656</EventID><EventName>Open Object</EventName><Version>101.3</Version><Source>CIFS</Source><Level>0</Level><Opcode>0</Opcode><Keywords>0x8020000000000000</Keywords><Result>Audit Success</Result><TimeCreated SystemTime="2016-03-24T17:00:18.561808000Z"/><Correlation/><Channel>Security</Channel><Computer>4cf616e5-deec-11e5-9347-00a0988f86b6/e64ece12-df28-11e5-9348-00a0988f86b6</Computer><Security/></System><EventData><Data Name="SubjectIP" IPVersion="4">10.10.10.10</Data><Data Name="SubjectUnix" Uid="0" Gid="1" Local="false"></Data><Data Name="SubjectUserSid">S-9-9-99-9999999999-999999999-9999999999-9999</Data><Data Name="SubjectUserIsLocal">false</Data><Data Name="SubjectDomainName">DOMAIN</Data><Data Name="SubjectUserName">admin</Data><Data Name="ObjectServer">Security</Data><Data Name="ObjectType">Directory</Data><Data Name="HandleID">0000000000041f;00;00000040;5e1fd3f6</Data><Data Name="ObjectName">(name);/</Data><Data Name="AccessList">%%4423 %%1541 </Data><Data Name="AccessMask">10080</Data><Data Name="DesiredAccess">Read Attributes; Synchronize; </Data><Data Name="Attributes">Open a directory; </Data></EventData></Event>

I've attempted to create a props.conf with KV_MODE = xml, but haven't had any success yet.

Any assistance would be appreciated.

Thanks.

How are you getting the files out of NetApp? Are you using a forwarder or is the Filer sending the files to a Heavy Forwarder?

NetApp filers create a log volume that we expose as a CIFS share. We monitor these shares with a Heavy Forwarder.

Hi, could you please share exactly how you were monitoring the share?

In particular, how was it mounted, and how did you configure the UF to see the share/file?

Was it just the normal "Add Data" workflow, monitoring a local file (which was actually the mount)?

Thanks

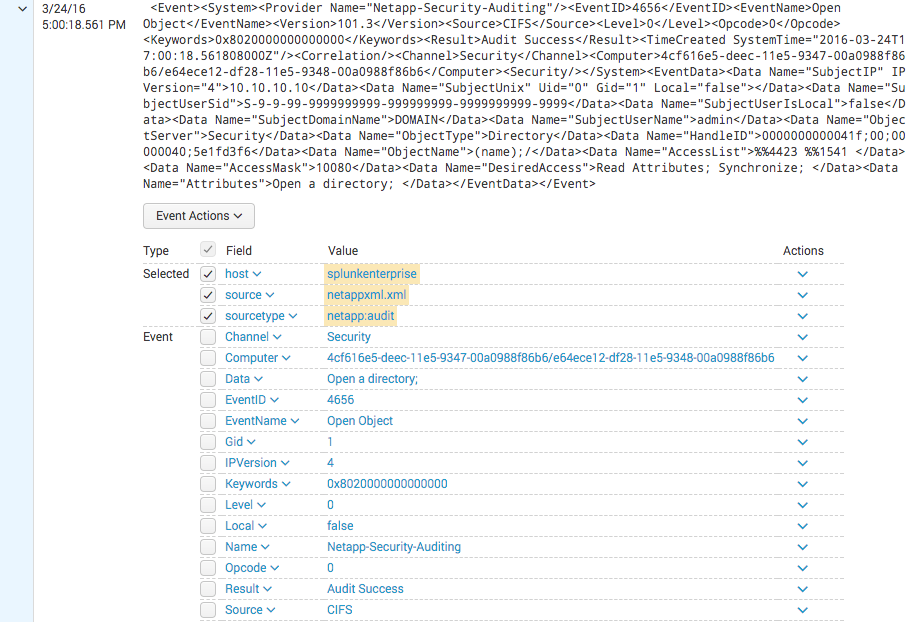

As noted in the comments above, the key is to tell Splunk where to find the timestamp, and that will cause the events to break properly. In props.conf:

TIME_PREFIX = <TimeCreated SystemTime=

Otherwise, I was able to quickly get XML field extraction working by piping to xmlkv. That should be the same end result as kv_mode=xml. Here's a screenshot:

Yeah, that works partially. Is there a way to automatically extract all data in fields of this type:

<Data Name="fieldname">value</Data>

Those do not get extracted when piping through xmlkv; only the value of the last "Data" field gets extracted, as you can see in your screenshot.
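One way to reason about the `<Data Name="fieldname">value</Data>` case is a regular expression that captures the `Name` attribute as the field name and the element text as the value. The Python sketch below only illustrates that pattern outside of Splunk; it is not Splunk configuration. The function name `extract_data_fields` is hypothetical, and the sample event is abbreviated from the one in the question. A similar pattern could plausibly be adapted for a search-time regex extraction, but that is an assumption and is untested here.

```python
import re

# Group 1 is the Name attribute, group 2 is the element text.
# [^>]* skips any extra attributes such as IPVersion="4".
DATA_RE = re.compile(r'<Data Name="([^"]+)"[^>]*>([^<]*)</Data>')


def extract_data_fields(event_xml: str) -> dict:
    """Return a {field name: value} mapping for every <Data> element."""
    return {name: value.strip() for name, value in DATA_RE.findall(event_xml)}


# Abbreviated sample based on the events posted in the question.
sample = (
    '<Event><EventData>'
    '<Data Name="SubjectIP" IPVersion="4">10.10.10.10</Data>'
    '<Data Name="SubjectUnix" Uid="0" Gid="1" Local="false"></Data>'
    '<Data Name="SubjectUserName">admin</Data>'
    '<Data Name="DesiredAccess">Read Attributes; Synchronize; </Data>'
    '</EventData></Event>'
)

fields = extract_data_fields(sample)
for name, value in fields.items():
    print(f"{name}={value!r}")
```

Because every `<Data>` element is matched separately, each `Name` becomes its own field instead of only the last one surviving. Empty elements such as `SubjectUnix` come back as empty strings.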

I am able to get XML field extraction, but it is delayed by around 3 hours, and the file keeps getting re-indexed (the seekptr checksum does not match). Here are my props:

[ontap]

TIME_PREFIX = SystemTime=\"

SHOULD_LINEMERGE = false

LINE_BREAKER = ()

MUST_BREAK_AFTER = \

KV_MODE = xml

Make sure you have global permissions on the TA or SA you are setting this in. Here is my metadata/local.meta:

[]

access = read : [ * ], write : [ admin, yourothergroup, yourotherothergroup]

export = system

Is anyone getting a re-indexed file issue which leads to a long delay in making the data searchable? Since the files are large, I am assuming the re-indexing is causing the delay in search due to time/field extraction.

Here is what I am getting in splunkd.log on the UF:

ERROR TailReader - File will not be read, seekptr checksum did not match (file=\fileshare\audit_logs$\audit_last.xml). Last time we saw this initcrc, filename was different. You may wish to use larger initCrcLen for this sourcetype, or a CRC salt on this source. Consult the documentation or file a support case online at http://www.splunk.com/page/submit_issue for more info.

When I use crcSalt = I get the re-indexing issue, which leads to the delayed search results

From splunkd.log on UF

tailReader - ...continuing.

05-24-2016 15:50:58.127 -0400 INFO TailReader - Continuing...

05-24-2016 15:51:18.703 -0400 INFO WatchedFile - Checksum for seekptr didn't match, will re-read entire file='\fileshare\audit_logs$\audit_last.xml'.

05-24-2016 15:51:18.704 -0400 INFO WatchedFile - Will begin reading at offset=0 for file='\fileshare\audit_logs$\audit_last.xml'.

05-24-2016 15:51:19.205 -0400 INFO WatchedFile - Checksum for seekptr didn't match, will re-read entire file='\fileshare\audit_logs$\audit_last.xml'.

05-24-2016 15:51:24.208 -0400 INFO TailReader - Could not send data to output queue (parsingQueue), retrying...

05-24-2016 15:51:36.997 -0400 INFO TailReader - Could not send data to output queue (parsingQueue), retrying...

05-24-2016 15:52:26.751 -0400 INFO TailReader - Continuing...

05-24-2016 15:52:26.751 -0400 INFO TailReader - ...continuing.

I am actually having the same issue with Splunk ingesting the logs multiple times.

For time travelers: my guess for the duplicate logs is that XML insertion does not happen at the end of the file, which throws off Splunk's mechanism for detecting changes and causes it to re-index the whole file. I mitigated this by not monitoring the file currently being written to; instead, the file is rotated every 15 minutes and only the rotated files are monitored. Nothing is written to the rotated files once they are created, which avoids the problem. Hope it helps.
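To make that guess concrete, here is a small Python sketch of a simplified resume check. It assumes a tailing reader that remembers an offset plus a checksum of the bytes just before it; this is a toy model, not Splunk's actual implementation. The names `checkpoint`, `can_resume`, and `WINDOW` are hypothetical, the events are placeholders, and the closing `</Events>` tag at the end of the live file is an assumption (the sample in the question only shows the opening tag).

```python
import zlib

WINDOW = 256  # number of bytes checked before the saved offset (assumed value)


def checkpoint(data: bytes) -> tuple:
    """Remember how far we read and a CRC of the bytes just before that point."""
    offset = len(data)
    return offset, zlib.crc32(data[max(0, offset - WINDOW):offset])


def can_resume(data: bytes, saved: tuple) -> bool:
    """True if the bytes just before the saved offset are unchanged."""
    offset, crc = saved
    if len(data) < offset:
        return False  # file shrank, so the saved offset is meaningless
    return zlib.crc32(data[max(0, offset - WINDOW):offset]) == crc


header = b'<Events xmlns="http://www.netapp.com/schemas/ONTAP/2007/AuditLog">\n'
footer = b'</Events>\n'            # assumed closing tag of the live file
event1 = b'<Event>first</Event>\n'   # placeholder events
event2 = b'<Event>second</Event>\n'

# Case 1: a plain append-only log. New data lands after the saved offset.
plain_before = header + event1
saved_plain = checkpoint(plain_before)
appended = plain_before + event2

# Case 2: a live XML file. The new event is inserted before the closing tag,
# so the bytes in front of the saved offset are no longer the same.
xml_before = header + event1 + footer
saved_xml = checkpoint(xml_before)
rewritten = header + event1 + event2 + footer

print("append-only log can resume:", can_resume(appended, saved_plain))
print("live XML file can resume:  ", can_resume(rewritten, saved_xml))
```

In this model a rotated file never changes after it is created, so its check keeps passing, which is consistent with the workaround described above.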

We ended up doing something similar. Our logs roll after they reach 512MB & we only have Splunk ingest the rolled files.

I am able to successfully get Splunk to identify individual events using the following props.conf:

[NetApp:Audit]

KV_MODE = xml

TIME_PREFIX = <TimeCreated SystemTime=

MAX_TIMESTAMP_LOOKAHEAD=300

SHOULD_LINEMERGE=true

BREAK_ONLY_BEFORE=^<Event>

Field extraction of the xml is still not working. This file is on our Search Head & Indexers. Is there further configuration needed for field extraction of XML?

Thanks
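As a rough illustration of what the event-breaking and timestamp settings above are meant to achieve, the Python sketch below splits a raw file into `<Event>` elements and parses the `SystemTime` attribute of each. It is not how Splunk processes the data internally; the function names `split_events` and `event_time` are hypothetical, and the sample is abbreviated from the events in the question. Note that the timestamps carry nine fractional digits, which Python's `datetime` cannot hold, so the sketch truncates to microseconds.

```python
import re
from datetime import datetime, timezone

# The same prefix the props.conf above points at, with the opening quote included.
TIME_RE = re.compile(r'<TimeCreated SystemTime="([^"]+)"')
EVENT_RE = re.compile(r'<Event>.*?</Event>', re.S)


def split_events(raw: str) -> list:
    """Return each complete <Event>...</Event> element as its own string."""
    return EVENT_RE.findall(raw)


def event_time(event_xml: str) -> datetime:
    """Parse SystemTime as UTC, truncating nanoseconds to microseconds."""
    stamp = TIME_RE.search(event_xml).group(1)
    date_part, _, frac = stamp.rstrip("Z").partition(".")
    parsed = datetime.strptime(date_part, "%Y-%m-%dT%H:%M:%S")
    micros = int(frac[:6].ljust(6, "0")) if frac else 0
    return parsed.replace(microsecond=micros, tzinfo=timezone.utc)


# Abbreviated sample based on the events posted in the question.
raw = (
    '<Events xmlns="http://www.netapp.com/schemas/ONTAP/2007/AuditLog">\n'
    '<Event><System><EventID>4656</EventID>'
    '<TimeCreated SystemTime="2016-03-24T17:00:18.275811000Z"/></System></Event>\n'
    '<Event><System><EventID>4656</EventID>'
    '<TimeCreated SystemTime="2016-03-24T17:00:18.276812000Z"/></System></Event>\n'
)

events = split_events(raw)
times = [event_time(e) for e in events]
for t in times:
    print(t.isoformat())
```

The opening `<Events ...>` wrapper is not matched as an event because the pattern requires the exact tag `<Event>`.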

Has anyone had success getting the NetApp CIFS logs parsed correctly? I tried manually uploading one of these XML files to a standalone sandbox server and was still not able to get all the fields parsed correctly. I believe the ONTAP 9 documentation says the logs can be written in either XML or EVTX file format. Would these logs be parsed better if they were written in EVTX format instead of XML?

I ended up using XML and parsing the logs with custom props and transforms.