- How do I export a large dataset from the REST API?

Hi,

I'm reading the documentation at http://docs.splunk.com/Documentation/Splunk/7.2.0/RESTREF/RESTsearch#search.2Fjobs but I'm having problems getting all the search results that I need.

The logic that I thought would work is:

- Create an asynchronous search job

- Regularly poll the job until it is done

- Fetch the resultCount from the job

- Fetch paginated results

I believe that there is some setting in limits.conf that restricts how many results you can pull at a time. By default, it's set to 50k and I'm using Splunk Cloud, so I can't touch this. That's why I'm fetching pages of results.
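
The paging step in this plan could look roughly like the sketch below. It is a minimal Python illustration, not a tested client: `page_ranges` and `fetch_page` are hypothetical helper names, `base_url` and `auth_header` are placeholders supplied by the caller, and it assumes the job's results are read from `search/jobs/{sid}/results` using the `offset` and `count` parameters. It only shows the paging arithmetic; it does not lift any cap the job itself applies to the results it stores.

```python
import json
import urllib.parse
import urllib.request


def page_ranges(result_count, page_size):
    """Yield (offset, count) pairs that together cover result_count rows."""
    if page_size <= 0:
        raise ValueError("page_size must be positive")
    for offset in range(0, result_count, page_size):
        # The last page may be shorter than page_size.
        yield offset, min(page_size, result_count - offset)


def fetch_page(base_url, sid, offset, count, auth_header):
    """Fetch one page of results for search job `sid` as a list of dicts.

    base_url (e.g. "https://SPLUNK_URL:8089") and auth_header are
    placeholders; this function is not called in this sketch.
    """
    query = urllib.parse.urlencode(
        {"output_mode": "json", "offset": offset, "count": count}
    )
    url = f"{base_url}/services/search/jobs/{sid}/results?{query}"
    request = urllib.request.Request(url, headers={"Authorization": auth_header})
    with urllib.request.urlopen(request) as response:
        return json.load(response)["results"]
```

For example, a resultCount of 120000 with a page size of 50000 gives three pages: two full pages and a final page of 20000 rows.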

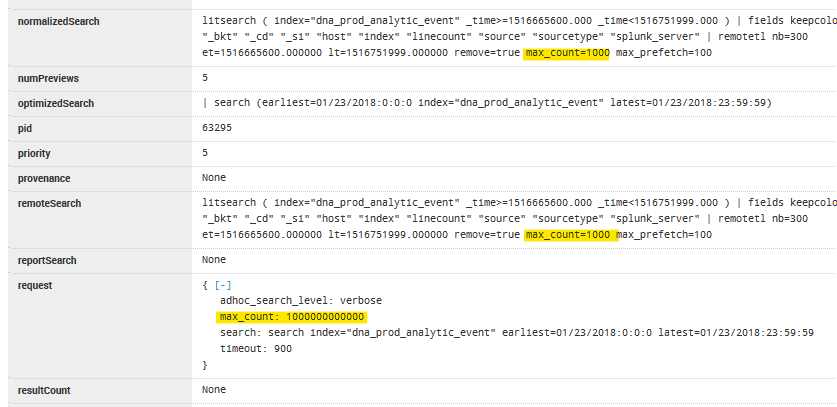

However, no matter what I set max_count to in the search request, Splunk normalizes this request to 1000. When I call the API to get the number of results in the data-set, it says that there are 1000.

Here is a screenshot from inspecting the job:

What is the best way to use the API to get a large dataset out of Splunk?

In my opinion, the easiest way to export is with curl, e.g.:

    curl -k -u USERNAME:PASSWORD https://SPLUNK_URL:8089/services/search/jobs/export \
        --data-urlencode search='search index="my-index" | table field1, field2' \
        -d output_mode=csv \
        -d earliest_time='-y@y' \
        -d latest_time='@y' \
        -o output-file.csv

This writes the previous year's results to the file output-file.csv. The time range is set only through earliest_time and latest_time, because time modifiers written inside the search string (such as earliest=0 latest=now) would take precedence over them.

Here's my PowerShell way of getting large result sets through REST:

https://github.com/dstaulcu/SplunkTools/blob/master/Splunk-SearchLargeJobs-Example.ps1

It seems that the export endpoint streams results instead of saving them, which allows much larger result sets.

The answer to the question is, therefore, not to use the search/jobs endpoint to create a search job and fetch the results later. Instead, use search/jobs/export to stream everything over the wire.
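
For anyone who would rather do the streaming from a script than from curl, here is a rough Python sketch using only the standard library. `build_export_request` and `export_to_file` are hypothetical helper names, the host and credentials are placeholders, and Basic authentication is an assumption. Unlike `curl -k`, this sketch leaves TLS certificate verification switched on.

```python
import base64
import shutil
import urllib.parse
import urllib.request


def build_export_request(base_url, username, password, search,
                         earliest="-y@y", latest="@y", output_mode="csv"):
    """Build a POST request for the search/jobs/export endpoint."""
    body = urllib.parse.urlencode({
        "search": search,
        "output_mode": output_mode,
        "earliest_time": earliest,
        "latest_time": latest,
    }).encode("utf-8")
    # Basic auth is assumed here; a token header could be used instead.
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        f"{base_url}/services/search/jobs/export",
        data=body,
        headers={"Authorization": f"Basic {token}"},
        method="POST",
    )


def export_to_file(request, path, chunk_size=64 * 1024):
    """Copy the response body to `path` in chunks, never holding it all in memory."""
    with urllib.request.urlopen(request) as response, open(path, "wb") as out:
        shutil.copyfileobj(response, out, chunk_size)
```

Usage would be something like `export_to_file(build_export_request("https://SPLUNK_URL:8089", "USERNAME", "PASSWORD", 'search index="my-index" | table field1, field2'), "output-file.csv")`. Copying in fixed-size chunks is what keeps memory use flat regardless of how large the result set is.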

Andrew, we're getting XML parse errors from the jobs.export API via the Python SDK, whereas jobs.oneshot completes the same query (albeit too slowly for our application). Is there an alternative, fast method to export query results as a single XML or JSON file?
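
One thing that might be worth trying, offered as a sketch rather than a confirmed fix: request `output_mode=json` from the export endpoint and parse the stream line by line instead of as one document. The code below assumes the stream is one JSON object per line with `preview` and `result` keys; that shape is an assumption to check against your own output, and `iter_export_results` is a hypothetical helper name.

```python
import json


def iter_export_results(lines):
    """Yield the `result` dict from each line of a JSON export stream.

    Assumes one JSON object per line. Blank lines, preview rows, and
    objects without a `result` key are skipped.
    """
    for raw in lines:
        if isinstance(raw, bytes):
            raw = raw.decode("utf-8")
        raw = raw.strip()
        if not raw:
            continue
        event = json.loads(raw)
        if event.get("preview"):
            # Preview rows are partial results, so they are not emitted.
            continue
        if "result" in event:
            yield event["result"]
```

Any iterable of lines works as input, including an open file or an HTTP response object that yields lines, so the rows can be collected into a single JSON file afterwards if one file is what you need.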