How can we avoid indexing delays?

We occasionally see indexing delays of up to 15 minutes. It's rare, but it happens. When it does, the indexing queues on three of our eight indexers are at 80 to 100 percent capacity. We see moderate bursts of data at those times, but nothing major.

These eight indexers use Hitachi G1500 arrays with FMD (flash memory drives).

How can we better understand these situations and, ideally, minimize the delays?
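One way to put numbers on these delays is to compare each event's index time with its event timestamp, split by the indexer that stored it. This is a sketch that assumes a busy index named `main` (substitute your own) and a one-hour window. The lag it measures also includes forwarder-side buffering and any clock skew between hosts, not only time spent in the indexers' queues.

```spl
index=main earliest=-60m
| eval lag_sec = _indextime - _time
| timechart span=1m max(lag_sec) BY splunk_server
```

If the lag spikes only on the same three indexers whose queues fill up, that suggests the problem sits on those indexers rather than on the data sources or forwarders.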

---

The issue is likely the load-balancing settings in outputs.conf on your forwarders.

If you have `autoLBVolume` set, try disabling it. That setting causes a forwarder to switch to another indexer only after it has sent a certain amount of data.

A better approach, especially when some indexers receive more data than others as you describe, is to use `autoLBFrequency`. This setting forces the forwarder to switch to another indexer after a specified interval (in seconds) rather than after a given amount of data. You can typically get a more even distribution with this setting and a shorter interval.

See the documentation here for more info:

https://docs.splunk.com/Documentation/Splunk/8.0.3/Admin/Outputsconf
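To check whether forwarder connections are actually spread across all eight indexers, a rough approach is to look at the `tcpin_connections` metrics each indexer writes to its own metrics.log. This is a sketch. It assumes the indexers' `_internal` data is searchable from your search head and that the `sourceIp` and `kb` fields are present in the `tcpin_connections` events of your version.

```spl
index=_internal source=*metrics.log* group=tcpin_connections earliest=-4h
| stats dc(sourceIp) AS forwarders, sum(kb) AS received_kb BY host
| sort - received_kb
```

Here `host` is the receiving indexer. If three indexers show far more `received_kb` than the rest, forwarders are probably staying connected to them too long, which is the situation `autoLBFrequency` is meant to address.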

---

Also, how is data distributed across your indexers? If some forwarders are sticking to those three indexers, their queues can fill up and cause delays.
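A quick way to compare how many events each indexer has stored is `tstats` over the indexed metadata, split by `splunk_server`. This is a minimal sketch that assumes `index=*` and a 24-hour window are acceptable in your environment; narrow both if the search is slow.

```spl
| tstats count WHERE index=* earliest=-24h BY splunk_server
| eventstats sum(count) AS total
| eval pct_of_events = round(100 * count / total, 1)
| sort - count
```

With eight indexers and an even distribution, each `splunk_server` would hold roughly 12.5 percent of the events.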

---

How can I check it?

---

The Monitoring Console has views for this. There are also dashboards in the Alerts for Splunk Admins app that help visualize data across the indexing tier using the metrics.log file.
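As a starting point on the metrics.log side, the sketch below computes how full each pipeline queue was on each indexer. It relies on the `group=queue` events in metrics.log, which carry `current_size_kb` and `max_size_kb`. The queue names listed are the standard parsing-to-indexing chain; if you run multiple ingestion pipelines, you may also want to split by the `ingest_pipe` field.

```spl
index=_internal source=*metrics.log* group=queue earliest=-24h
    (name=parsingqueue OR name=aggqueue OR name=typingqueue OR name=indexqueue)
| eval fill_pct = round(100 * current_size_kb / max_size_kb, 1)
| stats perc95(fill_pct) AS p95_fill_pct, max(fill_pct) AS max_fill_pct BY host, name
| sort - p95_fill_pct
```

As a rule of thumb, the most downstream queue that is consistently full points at the slow stage. A full `indexqueue` usually means the indexer is waiting on storage, while a full `aggqueue` or `typingqueue` with a healthy `indexqueue` suggests parsing work such as line breaking and timestamp extraction.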

---

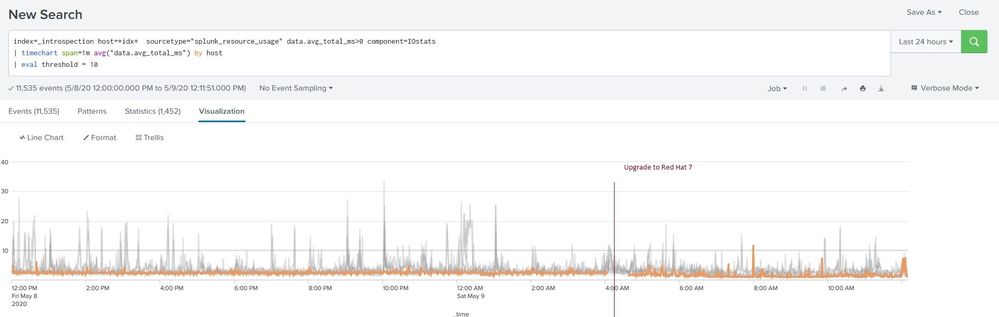

We got the following query from our Sales Engineer:

```spl
index=_introspection host=host_name earliest=-24h sourcetype="splunk_resource_usage" data.avg_total_ms>0 component=IOstats
| timechart span=1m avg("data.avg_total_ms") by host
| eval threshold = 10
```

We have eight indexers, and we can easily see that some have only a couple of milliseconds of latency throughout the day, some move above and below the 10 ms threshold, and two consistently reach 30 to 40 ms during the day.

Is 10 ms the right threshold? If we consistently exceed it, does that mean we have a hardware issue?
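One way to make the 10 ms line more concrete is to count, per indexer, how many one-minute intervals exceed it, using the same introspection data as the query above. This is a sketch. The 10 ms value is simply the threshold from that query, not an official limit, and because IOStats events are reported per mount point, you may want to add `data.mount_point` to the `BY` clauses to isolate the hot/warm volume.

```spl
index=_introspection sourcetype=splunk_resource_usage component=IOStats earliest=-24h data.avg_total_ms>0
| bin _time span=1m
| stats avg(data.avg_total_ms) AS avg_ms BY _time, host
| eval over_threshold = if(avg_ms > 10, 1, 0)
| stats count AS minutes, sum(over_threshold) AS minutes_over_10ms, max(avg_ms) AS peak_ms BY host
| eval pct_over = round(100 * minutes_over_10ms / minutes, 1)
| sort - pct_over
```

Comparing `pct_over` across the eight indexers shows whether the two slow ones are consistently worse or only spike at certain times of day.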

---

The issue could be due to how the data is sourcetyped.

Try checking the Data Quality dashboard in the Monitoring Console.

It is available under Monitoring Console --> Indexing --> Data Inputs --> Data Quality.

It shows issues related to line breaking, timestamping, and aggregation.

Fix those issues, then check whether the indexing delays continue.
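To see directly which sourcetypes generate these warnings, one option is to search splunkd.log for the components that report them. This is a sketch. The component names below are the ones typically logged for line-breaking, timestamp, and aggregation problems, and the `data_sourcetype` field is an assumption based on how those messages are usually formatted; if it is not extracted in your environment, group by `component` alone.

```spl
index=_internal sourcetype=splunkd log_level=WARN earliest=-24h
    (component=LineBreakingProcessor OR component=DateParserVerbose OR component=AggregatorMiningProcessor)
| stats count BY component, data_sourcetype
| sort - count
```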