Help with a free disk space query

Hello Splunkers,

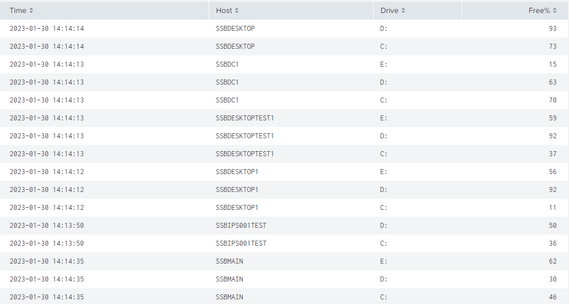

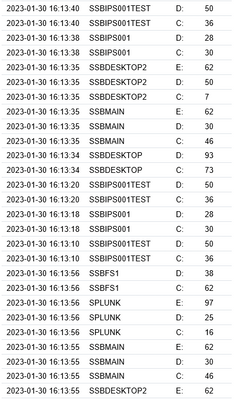

I am trying to gather the free disk space of all servers and build a report and alert on it. So far my SPL outputs the Time, Host, Drive and % Free, but the results come back as a long list spanning many pages.

What I'd like to do is two-fold. First, I want one result per drive, so each drive on a host appears exactly once. Second, I'd like to set up an alert for low disk space. Here's my SPL so far:

(index=main) sourcetype=perfmon:LogicalDisk instance!=_Total instance!=Harddisk* | eval FreePct-Other=case( match (instance, "C:"), null(), match(instance,"D:"), null(),true(),storage_free_percent), FreeMB-Other=case( match (instance, "C:"), null(), match(instance,"D:"), null(), true(),Free_Megabytes), FreePct-{instance}=storage_free_percent,FreeMB-{instance}=Free_Megabytes| search counter="% Free Space" | eval Time=strftime (_time,"%Y-%m-%d %H:%M:%S") | table Time, host, instance, Value | eval Value=round(Value,0) | rename Value AS "Free%" | rename instance AS "Drive" | rename host AS "Host"

The result is:

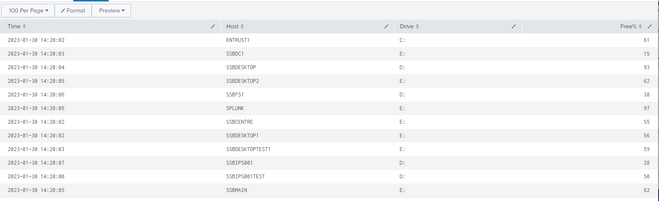

I've gotten it to list one drive per host, but I can't seem to manipulate the SPL to show me all drives.

(index=main) sourcetype=perfmon:LogicalDisk instance!=_Total instance!=Harddisk* | eval FreePct-Other=case( match (instance, "C:"), null(), match(instance,"D:"), null(),true(),storage_free_percent), FreeMB-Other=case( match (instance, "C:"), null(), match(instance,"D:"), null(), true(),Free_Megabytes), FreePct-{instance}=storage_free_percent,FreeMB-{instance}=Free_Megabytes| search counter="% Free Space" | stats latest(_time) as _time, latest(instance) as instance, latest(Value) as Value by host | eval Time=strftime (_time,"%Y-%m-%d %H:%M:%S") | table Time, host, instance, Value | eval Value=round(Value,0) | rename Value AS "Free%" | rename instance AS "Drive" | rename host AS "Host"
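
A possible explanation for the single drive: the query above groups only `by host`, so `stats` returns one row per host and `latest(instance)` keeps whichever drive reported most recently. A minimal sketch of one way around this, assuming the same index, sourcetype and field names as the query above, is to group by both host and instance. The `FreePct-...` / `FreeMB-...` eval step is left out here only because none of those fields appear in the final table; this has not been run against the poster's data.

```spl
index=main sourcetype=perfmon:LogicalDisk counter="% Free Space" instance!=_Total instance!=Harddisk*
| stats latest(_time) as _time, latest(Value) as Value by host, instance
| eval Time=strftime(_time, "%Y-%m-%d %H:%M:%S"), Value=round(Value, 0)
| table Time, host, instance, Value
| rename Value AS "Free%"
| rename instance AS "Drive"
| rename host AS "Host"
```

With `by host, instance`, each host/drive pair should get its own row carrying its most recent reading, rather than one row per host.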

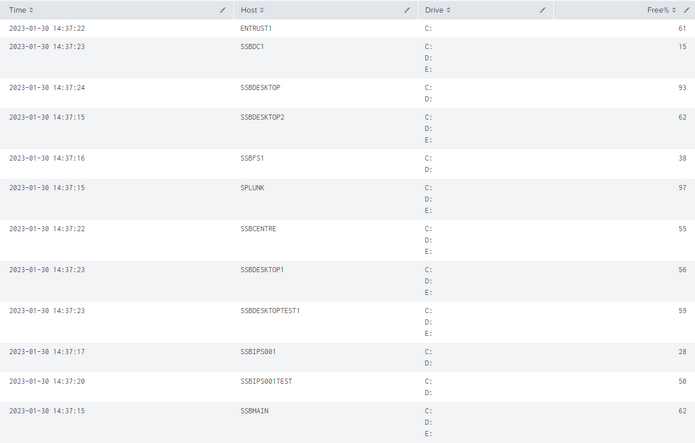

Making more headway, but not 100% there. I tried "values" instead of "latest" for instance in the stats command and got all the drive letters tied to each host, but I can't seem to get the values themselves to populate:

(index=main) sourcetype=perfmon:LogicalDisk instance!=_Total instance!=Harddisk* | eval FreePct-Other=case( match (instance, "C:"), null(), match(instance,"D:"), null(),true(),storage_free_percent), FreeMB-Other=case( match (instance, "C:"), null(), match(instance,"D:"), null(), true(),Free_Megabytes), FreePct-{instance}=storage_free_percent,FreeMB-{instance}=Free_Megabytes| search counter="% Free Space" | stats latest(_time) as _time, values(instance) as instance, latest(Value) as Value by host | eval Time=strftime (_time,"%Y-%m-%d %H:%M:%S") | table Time, host, instance, Value | eval Value=round(Value,0) | rename Value AS "Free%" | rename instance AS "Drive" | rename host AS "Host"
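
The likely reason the values do not populate: `values(instance)` returns a multivalue list of every drive on the host, while `latest(Value)` still returns a single number per host, so there is nothing to pair each drive letter with. If one row per host is the goal, a sketch using `chart` to pivot the drives into columns might look like the following. It assumes the same index, sourcetype and fields as the query above and is untested against this data; `limit=0` is there so that hosts with many drives are not folded into an OTHER column.

```spl
index=main sourcetype=perfmon:LogicalDisk counter="% Free Space" instance!=_Total instance!=Harddisk*
| eval Value=round(Value, 0)
| chart limit=0 latest(Value) over host by instance
| rename host AS "Host"
```

This should give one row per host with a column per drive letter (C:, D:, and so on), each holding the latest rounded % free for that drive. Columns stay empty for drives a host does not have.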

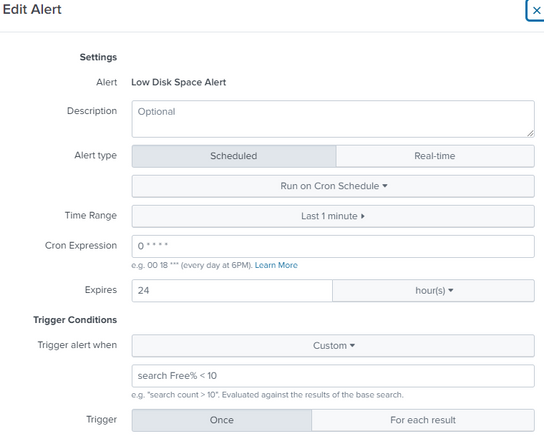

Alright, now I have the alert set up and it works, but the e-mail it sends includes all results from the 1-minute window, with lots of duplicates. I need a way for the alert to say which host had low disk space. I have a custom trigger condition of Free% < 10, so the e-mail contains a long list of repeated hosts, and the one below 10 is somewhere in there (SSBDESKTOP2 in this instance). I just need to get it narrowed down:
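
One way to narrow it down, sketched here under the assumption that the index, sourcetype and field names match the earlier queries, is to move the threshold into the search itself so that only low-disk rows are returned. The alert's trigger condition can then be set to "Number of Results is greater than 0" instead of a custom condition. This is an untested sketch, not a confirmed fix.

```spl
index=main sourcetype=perfmon:LogicalDisk counter="% Free Space" instance!=_Total instance!=Harddisk*
| stats latest(_time) as _time, latest(Value) as Value by host, instance
| where Value < 10
| eval Time=strftime(_time, "%Y-%m-%d %H:%M:%S"), Value=round(Value, 0)
| table Time, host, instance, Value
| rename Value AS "Free%"
| rename instance AS "Drive"
| rename host AS "Host"
```

Grouping `by host, instance` collapses the duplicates to one row per drive, and the `where` clause runs before rounding so that a reading such as 9.6 is not rounded up to 10 and missed. The e-mail would then list only the hosts and drives under the 10% threshold.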