Forwarding mirror copies of logs to multiple indexer groups [Splunk UF] - Why are the logs not being received properly?

Hi There,

I have a universal forwarder that is installed on a Syslog Server and is reading all the logs received on the Syslog Server and forwarding them onwards to a single indexer.

Network Devices --> Syslog Server (UF Deployed) --> Single x Indexer

However, I now want to configure the UF to also forward mirror copies of some specific log paths to a second indexer group.

Network Devices --> Syslog Server (UF Deployed) --> (all log sources) Single x Indexer

--> (some log sources) Multiple x Indexers

This has been set up by defining two output groups in outputs.conf and then adding _TCP_ROUTING to the monitor stanzas to specify which log paths are forwarded to both indexer groups.

# outputs.conf

# BASE SETTINGS

[tcpout]

defaultGroup = groupA

forceTimebasedAutoLB = true

maxQueueSize = 7MB

useACK = true

forwardedindex.2.whitelist = (_audit|_introspection|_internal)

[tcpout:groupA]

server = x.x.x.x:9999

sslVersions = TLS1.2

[tcpout:groupB]

server = x.x.x.x:9997, x.x.x.x:9997, x.x.x.x:9997, x.x.x.x:9997

# inputs.conf

[monitor:///logs/x_x/.../*.log]

disabled = false

index = x

sourcetype = x

_TCP_ROUTING = groupA, groupB

ignoreOlderThan = 2h

The issue is that the logs are not being received properly in groupB (multiple indexers). Have I misconfigured anything above, or is there a way to check what the issue might be?

Kind regards
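
One quick sanity check for a setup like the one in this post is to confirm that every group named in a _TCP_ROUTING setting has a matching [tcpout:<group>] stanza in outputs.conf. The Python sketch below does this with the standard-library configparser, which only approximates how Splunk reads .conf files (it does not merge default/local directories or apps). The helper names load_conf and undefined_routing_groups are made up for this illustration.

```python
import configparser


def load_conf(text):
    """Parse Splunk-style .conf text (approximation, not Splunk's own parser)."""
    parser = configparser.ConfigParser(
        interpolation=None,      # values may contain '%'
        strict=False,            # tolerate repeated stanzas or keys
        delimiters=("=",),       # ':' appears inside values such as host:port
        comment_prefixes=("#",),
    )
    parser.optionxform = str     # keep key case, e.g. _TCP_ROUTING
    parser.read_string(text)
    return parser


def undefined_routing_groups(inputs_text, outputs_text):
    """Map each input stanza to the _TCP_ROUTING groups that have no
    [tcpout:<group>] stanza in outputs.conf."""
    outputs = load_conf(outputs_text)
    defined = {
        name.split(":", 1)[1]
        for name in outputs.sections()
        if name.startswith("tcpout:")
    }
    inputs = load_conf(inputs_text)
    problems = {}
    for stanza in inputs.sections():
        routing = inputs[stanza].get("_TCP_ROUTING")
        if routing is None:
            continue
        groups = [g.strip() for g in routing.split(",") if g.strip()]
        missing = [g for g in groups if g not in defined]
        if missing:
            problems[stanza] = missing
    return problems


if __name__ == "__main__":
    outputs_conf = (
        "[tcpout]\ndefaultGroup = groupA\n"
        "[tcpout:groupA]\nserver = x.x.x.x:9999\n"
        "[tcpout:groupB]\nserver = x.x.x.x:9997, x.x.x.x:9997\n"
    )
    inputs_conf = (
        "[monitor:///logs/x_x/.../*.log]\n"
        "_TCP_ROUTING = groupA, groupB\n"
    )
    print(undefined_routing_groups(inputs_conf, outputs_conf))
```

An empty result only means the group names line up; it says nothing about whether the indexers in each group are reachable or keeping up.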

Hi @ahmadgul21,

Yes, it's possible, but if you index your logs twice, you also pay for the license twice!

Anyway, see https://docs.splunk.com/Documentation/Splunk/latest/Forwarding/Routeandfilterdatad#Filter_and_route_... to understand how to do this.

Ciao.

Giuseppe

Hi @gcusello ,

I've configured it as per the article you mentioned, but I've used only outputs.conf and inputs.conf, because the article describes the props.conf and transforms.conf method for heavy forwarders.

Since I have a UF, I've just defined the groups in outputs.conf and updated the specific monitor stanza with _TCP_ROUTING to forward to both groups. Is this the correct approach?

This configuration is in place, but the issue is that not all the logs are being received by the second group of indexers. Is there any way I can check, at the UF level, what data it is sending to which group and whether there are any errors?

Both indexer groups are separate Splunk deployments. I have access to the UF to make changes.

Hi @ahmadgul21,

_TCP_ROUTING in inputs.conf stanzas is useful for selectively sending data to one group of indexers or another.

If you want to send all the data to all the indexer groups, you only need to configure outputs.conf.

For deploying configuration updates to forwarders, I suggest using a Deployment Server, so direct access to the UFs isn't needed.

Ciao.

Giuseppe

Apologies for not clarifying: all data is sent to one indexer group, but some data is sent to both indexer groups, which is why I tried to use _TCP_ROUTING in inputs.conf.

The default group is groupA (one indexer), so all data is sent to this group. For specific data, however, I've added _TCP_ROUTING = groupA, groupB so that it is also sent to groupB (multiple indexers).

groupA and groupB are two different Splunk deployments, so I am sending data from this Syslog Server to two separate Splunk deployments.

Hi @gcusello ,

Thank you for confirming the approach.

Yes, there is an issue: right after I configured _TCP_ROUTING for the specific log sources (log paths), groupB did not receive any events from them, even though the log files on the Syslog Server were being updated. The events only arrived in groupB after some time. Further, as an example, I have 50 different hosts sending logs to one log path on the Syslog Server, which is forwarded to both groupA and groupB; groupB received logs from only 5 of them, even though all 50 log files were being updated.

Summary of issues:

1. How to identify the reason for the delay in logs being received in groupB (configured via _TCP_ROUTING)

2. Is there a chance for logs to be missing? How to identify if this is the case?

Steps taken to identify whether logs are being sent:

I've tried reading the metrics.log file on the UF to see whether any data is being sent to groupB. It showed that right after the configuration was applied no logs were being sent, but after some time some logs were. A sample snippet is pasted below:

tail /opt/splunkforwarder/var/log/splunk/metrics.log | grep groupB

09-01-2022 13:59:32.194 +0500 INFO Metrics - group=queue, name=tcpout_groupB, max_size=7340032, current_size=2322132, largest_size=2322132, smallest_size=2322132

09-01-2022 13:59:32.194 +0500 INFO Metrics - group=tcpout_connections, name=groupB:x.x.x.x:9997:0, sourcePort=8089, destIp=x.x.x.x, destPort=9997, _tcp_Bps=3967.13, _tcp_KBps=3.87, _tcp_avg_thruput=3.87, _tcp_Kprocessed=116, _tcp_eps=0.10, kb=116.22, max_ackq_size=22020096, current_ackq_size=118360

I also found the entries pasted below in the health.log file, which suggest the issue might be load-related. I'm not sure about this one, though; could you confirm?

tail /opt/splunkforwarder/var/log/splunk/health.log

09-01-2022 13:46:35.112 +0500 INFO HealthChangeReporter - feature="TailReader-0" indicator="data_out_rate" previous_color=green color=yellow due_to_threshold_value=1 measured_value=1 reason="The monitor input cannot produce data because splunkd's processing queues are full. This will be caused by inadequate indexing or forwarding rate, or a sudden burst of incoming data."

09-01-2022 13:46:40.112 +0500 INFO HealthChangeReporter - feature="BatchReader-0" indicator="data_out_rate" previous_color=yellow color=red due_to_threshold_value=2 measured_value=2 reason="The monitor input cannot produce data because splunkd's processing queues are full. This will be caused by inadequate indexing or forwarding rate, or a sudden burst of incoming data."

09-01-2022 13:56:34.898 +0500 INFO HealthChangeReporter - feature="TailReader-0" indicator="data_out_rate" previous_color=red color=green measured_value=0
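
To read the group=queue line from metrics.log quoted in this post, it can help to turn current_size and max_size into a fill percentage. The Python sketch below is one way to do that. It assumes the key=value layout seen in the quoted lines, and the names parse_metrics_line and queue_fill_percent are hypothetical helpers, not part of any Splunk tooling.

```python
import re

# key=value pairs; values are either quoted strings or run up to a comma/space
KV_PATTERN = re.compile(r'(\w+)=("[^"]*"|[^,\s]+)')


def parse_metrics_line(line):
    """Return the key=value fields of one metrics.log line as a dict of strings."""
    return {key: value.strip('"') for key, value in KV_PATTERN.findall(line)}


def queue_fill_percent(fields):
    """Fill level of a queue as a percentage of its maximum size."""
    return round(100.0 * float(fields["current_size"]) / float(fields["max_size"]), 2)


if __name__ == "__main__":
    # The tcpout_groupB queue line quoted in the post
    line = (
        "09-01-2022 13:59:32.194 +0500 INFO Metrics - group=queue, "
        "name=tcpout_groupB, max_size=7340032, current_size=2322132, "
        "largest_size=2322132, smallest_size=2322132"
    )
    fields = parse_metrics_line(line)
    print(fields["name"], queue_fill_percent(fields))
```

A single sample says little on its own; following the same queue across many lines of metrics.log shows whether it stays near its maximum, which would point to the output side not draining.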

Hi @ahmadgul21,

Answering your questions:

1)

There isn't any general reason for a delay other than the server being overloaded at certain moments. You should identify when the delays occur and check whether CPU or network was overloaded at those times.

You can do this using the Monitoring Console on Splunk servers (heavy forwarders), and by collecting the operating system logs (e.g. with the Splunk_TA_nix add-on) on universal forwarders.

2)

Logs should never be lost, but check whether queues are building up on your hosts. You can see this by running the following search:

index=_internal source=*metrics.log sourcetype=splunkd group=queue

| eval name=case(name=="aggqueue","2 - Aggregation Queue",

name=="indexqueue", "4 - Indexing Queue",

name=="parsingqueue", "1 - Parsing Queue",

name=="typingqueue", "3 - Typing Queue",

name=="splunktcpin", "0 - TCP In Queue",

name=="tcpin_cooked_pqueue", "0 - TCP In Queue")

| eval max=if(isnotnull(max_size_kb),max_size_kb,max_size)

| eval curr=if(isnotnull(current_size_kb),current_size_kb,current_size)

| eval fill_perc=round((curr/max)*100,2)

| bin _time span=1m

| stats Median(fill_perc) AS "fill_percentage" by host, _time, name

| where (fill_percentage>70 AND name!="4 - Indexing Queue") OR (fill_percentage>70 AND name="4 - Indexing Queue")

Ciao.

Giuseppe
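
For readers who want to see what the search in this reply computes, here is a rough Python equivalent of its core: compute a fill percentage per sample, group by host, minute and queue name, take the median, and keep groups above 70%. This is a sketch of the idea rather than a replacement for the search. The function name busy_queues, the host name uf01 and the parsing-queue sample values are invented for illustration.

```python
from collections import defaultdict
from statistics import median


def busy_queues(samples, threshold=70.0):
    """samples: iterable of (host, minute, queue_name, current_size, max_size).

    Returns {(host, minute, queue_name): median fill %} for every group whose
    median fill percentage is above the threshold.
    """
    buckets = defaultdict(list)
    for host, minute, name, current, maximum in samples:
        if maximum <= 0:
            continue  # skip malformed samples instead of dividing by zero
        buckets[(host, minute, name)].append(round(100.0 * current / maximum, 2))
    result = {}
    for key, fills in buckets.items():
        mid = median(fills)
        if mid > threshold:
            result[key] = mid
    return result


if __name__ == "__main__":
    samples = [
        # invented parsing-queue samples, chosen only to exceed the threshold
        ("uf01", "13:59", "parsingqueue", 96, 100),
        ("uf01", "13:59", "parsingqueue", 98, 100),
        # sizes taken from the tcpout_groupB line quoted earlier in the thread
        ("uf01", "13:59", "tcpout_groupB", 2322132, 7340032),
    ]
    print(busy_queues(samples))
```

Note that the search's case() expression only names the parsing, aggregation, typing, indexing and TCP-in queues, so a forwarder's tcpout_<group> queues would need their own entry there to show up in the results.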

Hi @gcusello Thank you for the answer and the SPL Query - it is much appreciated.

I have one more question - related to useACK = true

[tcpout]

defaultGroup = groupA

useACK = true

[tcpout:groupA]

server = x.x.x.x:9999

sslVersions = TLS1.2

[tcpout:groupB]

server = x.x.x.x:9997, x.x.x.x:9997, x.x.x.x:9997, x.x.x.x:9997

Can I set useACK = false for groupB? My suspicion is that, because the groupB indexers are under heavy load and in a different network, the acknowledgements are delayed, so data to groupB is not sent in real time because the forwarder is waiting for them.

What would you recommend regarding this configuration: setting useACK = false for groupB?

Thank you.
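
One way to test the suspicion about acknowledgements is to look at the ack queue numbers in the tcpout_connections line from metrics.log posted earlier in the thread. In that line max_ackq_size is exactly three times the 7MB maxQueueSize, and current_ackq_size is a small fraction of it. The Python sketch below just redoes that arithmetic; size_to_bytes is a hypothetical helper that handles only the KB/MB/GB suffixes, and the reading of the numbers is an interpretation of a single sample, not a diagnosis.

```python
UNITS = {"KB": 1024, "MB": 1024 ** 2, "GB": 1024 ** 3}


def size_to_bytes(value):
    """Convert a size such as '7MB' to bytes.

    Only KB/MB/GB suffixes are handled; a bare number (which outputs.conf
    treats as an event count) is rejected here on purpose.
    """
    text = value.strip().upper()
    for suffix, factor in UNITS.items():
        if text.endswith(suffix):
            return int(text[: -len(suffix)]) * factor
    raise ValueError(f"unsupported size: {value!r}")


# maxQueueSize from the posted outputs.conf
max_queue = size_to_bytes("7MB")

# max_ackq_size in the posted metrics.log line is 3 x maxQueueSize
ack_window = 3 * max_queue

# current_ackq_size from the same metrics.log line
current_ackq = 118360
ack_fill = 100.0 * current_ackq / ack_window

if __name__ == "__main__":
    print(max_queue, ack_window, round(ack_fill, 2))
```

If the ack queue were regularly close to its maximum, that would support the idea that the forwarder is stalled waiting on acknowledgements; a queue that stays nearly empty points elsewhere.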

Hi @ahmadgul21,

Yes, you could, but I don't recommend it: it's always better to have acks!

If you have delays, account for them in your searches.

Ciao.

Giuseppe

Hi @gcusello ,

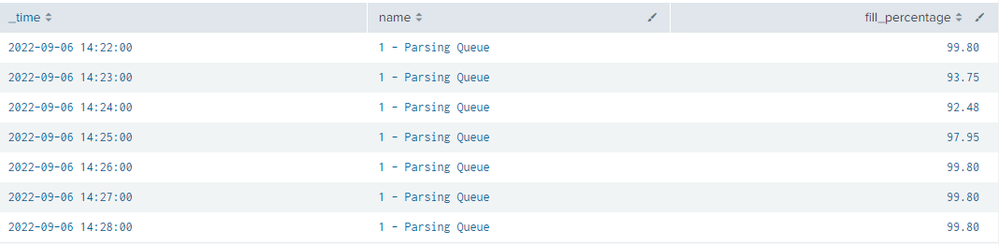

I was able to finally run the search you provided, can you help me understand the snippet from the output pasted below,

It seems that there is a lot of queues in the parsing queue - fill percentage is on average around 96% approx.

Kind regards

Hi @ahmadgul21,

This means you need to tune some of your hosts, because queues are building up.

Ciao.

Giuseppe

Hi @gcusello ,

I have been trying to figure out how to tune the servers and whether there are any other challenges.

What I've found is that even though useACK=true is set in outputs.conf, logs are still going missing in Splunk. I noticed this when Splunk had received no logs for a specific day; when I checked the Syslog Server, the logs for that day were there, but they had never arrived in Splunk.

When you said to tune the hosts: there is only one host (the Syslog Server with the UF) sending logs to Splunk, and its settings are as follows:

limits.conf

[thruput]

maxKBps = 0

outputs.conf

[tcpout]

forceTimebasedAutoLB = true

maxQueueSize = 7MB

useACK = true

The host has about 64 GB RAM.

Can you advise, if possible, which settings to tune on the host (limits.conf etc.), and what could cause logs to go missing even though useACK=true? (Could it be that the ACK from the indexers is delayed so long that the queue fills up and older data is dropped from the queue before it is sent to the indexers?)

Kind regards

Hi @ahmadgul21,

The mandatory parameter to set is maxKBps = 0, which means there is no limit on how fast the UF sends data to the indexers.

With this configuration, do you still have queues?

Ciao.

Giuseppe

Hi @gcusello ,

Yes, maxKBps = 0 has been configured from the start. Even so, we have observed delays and queues, as I shared in an earlier post.

So I'm not sure where else to look to resolve this issue. The only area I could think of was the delay of the ACK from the indexers to the Syslog Server (UF), since useACK=true is configured.

Kind regards

Hi @ahmadgul21,

I don't think the ack is the problem. Having checked the configuration, I suspect the problem is in the infrastructure: storage or network.

Ciao.

Giuseppe