An hour of latency when a large amount of data is dumped into a file. How can I avoid or reduce latency in this case? Details are below.

What else can I do to reduce indexing latency in the following case?

I am seeing large indexing latency for a brief period every day.

I have a single source file that logs about 0.5 MB of data every 5 minutes. However, once per day, about 5000 MB of data is added to the same file within a span of 15 minutes.

The issue occurs because the Splunk forwarder cannot process all 5000 MB of data within 15 minutes. I am seeing a few blocked-queue warnings, and our parsing queue is clogged (more than 90% full) for almost an hour.

What have I tried already?

- Increased the throughput limit from 256 KBps to 1024 KBps.

This helped a little, but I still see large latency. Throughput is maxed out for the duration of the issue, and I am not sure how much further I can raise this limit.

- Added two pipelines to process events on the forwarder.

This does not help, because each source file can be processed by only a single pipeline. A source is not load balanced across multiple pipelines when its events are processed.
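For a rough sense of scale, here is a back-of-envelope sketch of how long a throughput-capped forwarder would need to ship the daily burst. It assumes 1 MB = 1024 KB, that the cap is the only bottleneck, and that the regular 0.5 MB per 5 minutes is negligible. The function names are made up for illustration.

```python
# Back-of-envelope estimate only: assumes the throughput cap is the sole
# bottleneck and ignores the small steady-state traffic.

BURST_MB = 5000            # daily burst size, from the post
BURST_WINDOW_S = 15 * 60   # the burst is written within 15 minutes


def drain_minutes(burst_mb: float, limit_kbps: float) -> float:
    """Minutes needed to forward burst_mb at limit_kbps (KB per second)."""
    return burst_mb * 1024 / limit_kbps / 60


def required_kbps(burst_mb: float, window_s: float) -> float:
    """Sustained KBps needed to forward burst_mb within window_s seconds."""
    return burst_mb * 1024 / window_s


if __name__ == "__main__":
    for limit in (256, 1024):
        print(f"{limit} KBps -> {drain_minutes(BURST_MB, limit):.0f} min")
    print(f"needed to keep up: {required_kbps(BURST_MB, BURST_WINDOW_S):.0f} KBps")
```

Under these assumptions, 1024 KBps needs about 83 minutes to drain 5000 MB, the same order of magnitude as the queue congestion described above. Keeping pace with the 15-minute burst would take a cap of several thousand KBps.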

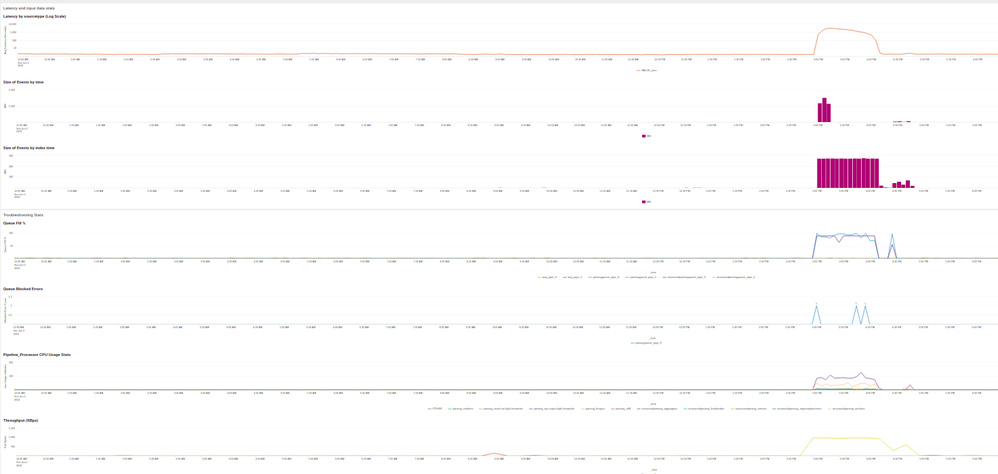

I have collected a few time charts into a dashboard to troubleshoot this further; they are shown in the attached image.

The time charts in the image are:

Timechart 1: Latency in events

Timechart 2: Sum of Size of events { len(_raw) }

Timechart 3: Sum of Size of events by _indextime instead of _time (Shows the size of events that were indexed during a period)

Timechart 4: Queue Fill Percentage

Timechart 5: Queue blocked count per 5 minutes

Timechart 6: Pipeline processors CPU Usage

Timechart 7: Throughput (KBps)

Unless you have a reason to throttle your forwarder, you may want to set maxKBps=0 (unlimited).
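As a minimal sketch, this setting goes in the `[thruput]` stanza of `limits.conf` on the forwarder. Which copy of `limits.conf` to edit (for example a local one or one inside a deployed app) depends on how the forwarder is managed, so treat the placement as an assumption.

```ini
# limits.conf on the forwarder (exact file location depends on your deployment)
[thruput]
# 0 removes the throughput cap; any positive value is a limit in KB per second
maxKBps = 0
```

The forwarder typically needs a restart for the change to take effect.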