
- Re: Bucket rolling criteria


Hi All,

I have a few concerns regarding bucket rolling criteria; my question is mostly about hot buckets.

So we have 2 types of index

1. Default

2. Local or customized index

So when I check the log retention of default index

Hot

it shows 90 days

Maxbucketcreate=auto

Maxdbsize=auto

And we don't define anything for the local indexes.

While checking, we figured out that for one particular index we only have 55 days of logs in our hot bucket, and the log consumption for this index is about 12-14 GB per day.

For the other local indexes we can see more than 104 days of logs.

My concern is: which retention policy is Splunk following to roll buckets for a local index?

1. The 90-day period (which is not happening here)

2. When the hot bucket is full on a per-day basis (if Splunk is following this, then how much data can an index store per day, how many hot buckets do we have for a local index, and how much data can each bucket contain?)

Hope I'm not being confusing.

Thanks


Hi @debjit_k ,

Yes, maxTotalDataSizeMB defines the total storage of hot+warm+cold.

When an index exceeds this value, the oldest buckets are automatically discarded regardless of the retention period. For this reason I suggest paying close attention to the capacity plan, to avoid discarding data inside the retention period that you might need (e.g. for regulatory requirements).

If you need to keep this data after the retention period or when the max size is reached, you should create a script that saves the data to another location offline.

Anyway, it's possible to restore this frozen data as thawed.

To know how much log data you index daily you can use the Monitoring Console; anyway, the algorithm to calculate storage occupation is the one I described in my previous answer:

daily_index_rate * 0.5 * retention_period_in_days,

even if the best way is using the calculator I already shared.

Always use a safety factor, because a bucket is not deleted immediately but only when its latest event exceeds the retention period.
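The rule of thumb above can be written as a short calculation. This is only a sketch: the function name and the `safety_factor` parameter are hypothetical, the 0.5 ratio is the compression assumed in the formula, and the sample inputs are illustrative rather than measured values.

```python
def estimate_storage_gb(daily_index_rate_gb, retention_days,
                        compression=0.5, safety_factor=1.0):
    """Rough on-disk estimate: daily rate * compression * retention days.

    safety_factor is a hypothetical margin (e.g. 1.2 for 20% headroom),
    because buckets are removed only when their latest event has aged out.
    """
    return daily_index_rate_gb * compression * retention_days * safety_factor


if __name__ == "__main__":
    # Illustrative inputs: 13 GB/day kept for 90 days.
    print(estimate_storage_gb(13, 90))
    # Same inputs with 20% headroom.
    print(estimate_storage_gb(13, 90, safety_factor=1.2))
```

Comparing the result with maxTotalDataSizeMB (converted to GB) gives a first indication of whether the size limit or the retention period is likely to be reached first.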

Tell me if I can help you more; otherwise, please accept one answer for the other people in the Community.

Ciao and happy splunking

Giuseppe

P.S.: Karma Points are appreciated 😉


Hi @debjit_k,

a bucket will roll when it exceeds the default rolling period of 90 days, when it exceeds the max bucket size, or when the number of hot buckets exceeds the max.

In indexes.conf you can find all the default values and the parameters to change those values:

- maxWarmDBCount

- maxTotalDataSizeMB

- rotatePeriodInSecs

- frozenTimePeriodInSecs

- maxDataSize

- maxHotBuckets

- etc...

Ciao.

Giuseppe


Hi @gcusello

Yes, you are correct. What I figured out is:

- maxTotalDataSizeMB=auto

- maxDataSize=auto

- maxHotBuckets=auto

Everything is set to auto, so my question is: what value does auto mean? I have a 64-bit machine.

Thanks


Hi @debjit_k,

I can help you more only if you define which requirements you want, but once you have defined them, you won't need my help!

Anyway, tell me if I can help you more.

Ciao.

Giuseppe


Hi @gcusello

I have a few questions to ask.

Case 1

Suppose:

Total hot buckets = 3

Max data a bucket can hold is 750 MB

i.e. roughly 2.2 GB in total

My question is: suppose an index is fed 12 GB of data per day, so it is certain that it will roll buckets from hot -> warm -> cold.

So up to 2.2 GB will be in hot buckets and the remaining 10 GB will move to warm buckets? Or will it roll previous data to warm to make space for new data?

Case 2

I want to keep 90 days of data for an index in hot buckets and my daily consumption is about 12-14 GB, so how many buckets do I need to define, or do I need to change any other setting on the indexer so that the bucket size increases automatically?

Thanks


Hi @debjit_k,

question 1:

the path is always:

- hot bucket

- warm bucket

- cold bucket

a bucket cannot pass directly from hot to cold.

In your case, many hot buckets will surely roll to warm each day.
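A rough count of how many hot buckets fill up per day can be sketched as follows. It assumes the 750 MB bucket size and the 12 GB/day figure mentioned earlier in the thread, and it treats the 12 GB as on-disk size, which is a simplification (compressed data would fill fewer buckets). The function name is hypothetical.

```python
def buckets_filled_per_day(daily_volume_gb, max_bucket_mb=750):
    """Approximate number of buckets that reach max size in one day."""
    daily_volume_mb = daily_volume_gb * 1024  # 1 GB = 1024 MB
    return daily_volume_mb / max_bucket_mb


if __name__ == "__main__":
    per_day = buckets_filled_per_day(12)
    print(f"about {per_day:.1f} buckets per day")
```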

When will they roll?

- when they reach the max size for a bucket,

- or when you reach the max number of hot buckets,

- or when the earliest event in a bucket exceeds the hot bucket duration.
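The three conditions in the list above can be modelled as a single check. This is a simplified illustration of the reply, not Splunk's actual implementation; the class, function, and parameter names are hypothetical, and the default limits are the values quoted elsewhere in this thread (750 MB, 3 hot buckets, 90 days).

```python
from dataclasses import dataclass

SECONDS_PER_DAY = 86400


@dataclass
class HotBucket:
    size_mb: float
    earliest_event_age_secs: int  # age of the oldest event in the bucket


def should_roll_to_warm(bucket, hot_bucket_count,
                        max_bucket_mb=750, max_hot_buckets=3,
                        max_hot_age_secs=90 * SECONDS_PER_DAY):
    """Return True if any of the three roll conditions is met."""
    too_big = bucket.size_mb >= max_bucket_mb
    too_many = hot_bucket_count > max_hot_buckets
    too_old = bucket.earliest_event_age_secs > max_hot_age_secs
    return too_big or too_many or too_old


if __name__ == "__main__":
    full = HotBucket(size_mb=750, earliest_event_age_secs=3600)
    fresh = HotBucket(size_mb=100, earliest_event_age_secs=3600)
    print(should_roll_to_warm(full, hot_bucket_count=3))   # True
    print(should_roll_to_warm(fresh, hot_bucket_count=3))  # False
```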

I never configured the hot bucket duration, and it's better that they pass to warm as soon as possible, for this reason:

hot and warm buckets are in the same storage, so the storage performance is the same for both, but the index files (tsidx) of hot buckets are continuously rebuilt, whereas warm buckets are complete and closed.

For this reason, warm buckets respond better than hot buckets.

I hope I was clear.

Ciao.

Giuseppe


Hi @gcusello

Yes, you are correct.

So if data is in a warm bucket, can we see it from the search head?

For one index only 55 days are searchable online, and for the rest it's almost 110 days.

Is there any way I can see 110 days of data in online search for the index that has only 55 days?

Thanks


Hi @debjit_k,

you have online data in hot, warm and cold buckets!

Offline data is what has been frozen (archived); it becomes searchable again only once it is restored as thawed buckets.

Ciao.

Giuseppe


Hi @gcusello

That's a new thing I learned today.

One question: for one index I can see logs for just 55 days. How do I fix this? I want at least 90 days of logs to be searchable online.

thanks


Hi @debjit_k,

check the retention and the max size for that index; these are the only relevant parameters if events older than 55 days were discarded.

In particular, check frozenTimePeriodInSecs: when the latest event in a bucket exceeds this time, the bucket can be discarded or copied to another folder, and it goes offline. You can bring the bucket online again by copying it into the thawed folder.

Ciao.

Giuseppe


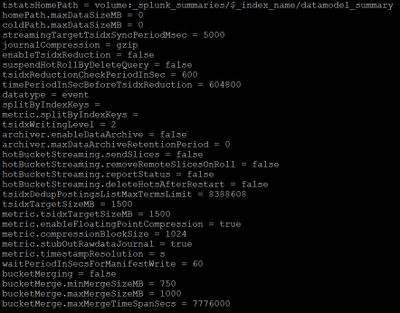

Hi @gcusello

When I checked the settings of the indexer I could see:

for hot buckets

maxDataSize=auto (i.e. equivalent to 750 MB for one bucket)

maxHotSpanSecs=90 days

for warm buckets

maxWarmDBCount=300

for frozen buckets

frozenTimePeriodInSecs=188697600 (i.e. 6 years)

When I check how many buckets I have using SPL:

hot buckets = 3

warm buckets = 300

cold buckets = 366

And when I check the status of the buckets for that x index, it shows full for everything.

Kindly correct me if I'm wrong:

the log ingestion is high, so it takes up a huge amount of space, and when the cold bucket is full it moves the data to the thawed bucket, which is offline storage.

If I'm correct, then I want to know whether there is any way to increase the size of the warm and cold buckets so that I can store at least 90 days of data.
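The bucket counts above allow a quick upper-bound estimate of the index size. The sketch assumes every bucket is close to the 750 MB maximum, which will not hold exactly, and compares the result with the documented maxTotalDataSizeMB default of 500000; the function name is hypothetical.

```python
def approx_index_size_mb(hot, warm, cold, bucket_mb=750):
    """Upper-bound size estimate: bucket count * max bucket size."""
    return (hot + warm + cold) * bucket_mb


if __name__ == "__main__":
    size_mb = approx_index_size_mb(hot=3, warm=300, cold=366)
    limit_mb = 500000  # documented default of maxTotalDataSizeMB
    print(size_mb, "MB estimated;", "limit", limit_mb, "MB")
```

With these counts the estimate is 501750 MB, close to the 500000 MB default. That would be consistent with the index reaching its size limit before the retention period, but the actual on-disk sizes should be checked before drawing that conclusion.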

Attaching the snip

thanks


Hi @debjit_k,

as you can see, the hot and warm bucket settings are at their default values, and I suggest leaving them as they are.

About the cold buckets: you now have 366, but they will grow because you have 6 years of retention, and I don't know your max space.

Anyway, if you want 90 days for online searches, you don't need this retention and you could reduce frozenTimePeriodInSecs to a lower value (e.g. 120-180 days). This value is very important because it influences the storage needed.
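Since frozenTimePeriodInSecs is expressed in seconds, a small helper makes the conversion from days explicit. The helper names are hypothetical; the values are the ones mentioned in this thread.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86400


def days_to_secs(days):
    """Convert a retention period in days to seconds."""
    return days * SECONDS_PER_DAY


def secs_to_days(secs):
    """Convert a retention period in seconds to days."""
    return secs / SECONDS_PER_DAY


if __name__ == "__main__":
    for days in (90, 120, 180):
        print(days, "days =", days_to_secs(days), "seconds")
    # The current setting from the thread, expressed in days.
    print(secs_to_days(188697600), "days")
```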

In the end (or at the beginning!) I suggest doing capacity planning to identify the requirements for your storage, and then using this storage calculator: http://splunk-sizing.appspot.com/

Ciao.

Giuseppe


Hi @gcusello

Thank you for your time. I'm very new to Splunk and I'm still learning.

I know I'm repeating the same thing.

As you mentioned earlier, cold buckets can be searched online, but I can't see any data older than 55 days for one particular index. Is there any way I can see data older than 55 days, given that cold buckets can be searched online?

thanks


Hi @debjit_k,

sure, no problem explaining again!

As I said, cold data is searchable online until it is deleted or frozen;

if you don't see it, it means that you don't have it anymore.

This is possible if your system discarded it.

The reason for discarding can be that you reached the max size for your index or that you exceeded the retention period.

This occurs if you have a configuration for one or both of these parameters: you could have multiple settings for these parameters in multiple indexes.conf files.

You should search for these parameters in all your indexes.conf files; maybe there's more than one definition in your apps.

You can find them using the btool command:

/opt/splunk/bin/splunk cmd btool indexes list --debug | grep frozenTimePeriodInSecs

or

/opt/splunk/bin/splunk cmd btool indexes list --debug | grep maxTotalDataSizeMB

Ciao.

Giuseppe


Hi @gcusello

Thank you, I learned something new today.

Yes, I checked and found that maxdatasizeMB=0

and frozenTimePeriodInSecs=188697600, which is the default, i.e. 6 years. One custom index has a different frozen time, but it is not the index I'm talking about.

I also checked the other custom indexes. The situation is the same, but there I can see 100+ days of data. That is not happening with the index whose log ingestion is high, even though all indexes are configured the same way.

I'm wondering why there is a discrepancy where the data is being dropped after 55 days.

thanks


Hi @debjit_k,

sorry, but I don't have other hints:

you checked configurations and data;

over the next few days, see whether the 55-day limit remains or not: if it remains, something is discarding older buckets; otherwise you simply don't have older events.

Ciao.

Giuseppe


Hi @gcusello

Please, no need to be sorry; thank you for everything.

If I find something or if I get stuck anywhere, I will come back here and update you.

By any chance, do you have a LinkedIn account? If you don't mind, we can connect there as well.

thanks


Hi @debjit_k ,

ok, let me know if you need other information related to this question; anyway, if one answer solves your need, please accept it for the other people in the Community, or tell me how we can help you.

Ciao and happy splunking

Giuseppe

P.S.: Karma Points are appreciated by all the Contributors;-)


Hi @gcusello

I have a few questions on my mind; it would be great if you could address them.

1. What is the use of maxTotalDataSizeMB, and what will happen if it is set to zero?

2. If my log ingestion is high, then data will move from hot to warm to cold once hot is full and the 300 warm buckets are full. My current retention policy is set to 6 years, which means my number of cold buckets will increase. Correct me if my understanding is wrong.

Thanks


Hi @debjit_k,

As you can read at https://docs.splunk.com/Documentation/Splunk/latest/admin/Indexesconf

maxTotalDataSizeMB = <nonnegative integer>

* The maximum size of an index, in megabytes.

* If an index grows larger than the maximum size, splunkd freezes the oldest data in the index.

* This setting applies only to hot, warm, and cold buckets. It does not apply to thawed buckets.

* CAUTION: The 'maxTotalDataSizeMB' size limit can be reached before the time limit defined in 'frozenTimePeriodInSecs' due to the way bucket time spans are calculated. When the 'maxTotalDataSizeMB' limit is reached, the buckets are rolled to frozen. As the default policy for frozen data is deletion, unintended data loss could occur.

* Splunkd ignores this setting on remote storage enabled indexes.

* Highest legal value is 4294967295

* Default: 500000

I don't think it's possible to set it to zero.

About the second question: if you have a high data flow, your buckets will remain in the hot state only briefly and you'll have many warm buckets. This isn't a problem for performance, only for the storage you need.

As I said, you should do a detailed capacity plan and define the requirements for your storage.

With a 6-year retention period you'll have a large storage footprint, so pay attention to the capacity plan, especially if you have a large input flow. Just a quick calculation:

if you index 100 GB/day and you have 6 years of retention, you need storage of around:

100 GB / 2 * 365 * 6 = 109,500 GB (about 109.5 TB)

Do you really need to have all this data online?

If you have storage to spare (and to back up), you can do it, but I suggest thinking about this carefully!

Ciao.

Giuseppe


Hi @gcusello

Yeah, you are right, I should do a proper capacity plan for my storage.

One thing: I was going through the indexes.conf file and found that my maxTotalDataSizeMB=500000, i.e. equivalent to 500 GB (so this defines the total storage of hot+warm+cold), and if that number is exceeded then Splunk will delete the data automatically.

Kindly correct me if I'm wrong.

If I'm right, can you please suggest a query to find out how many GB of hot+warm+cold buckets are being consumed by index x?

Thanks