Getting error on search head regarding replication

If the indexer or indexer cluster is not down, this message generally indicates high queue utilization in Splunk.

If you have the DMC set up:

Settings > Monitoring Console > Indexing > Performance, which offers two options: Deployment and Instance.

If you have a cluster, check the Deployment view first to see what is busy.

Then check the Instance view for the instance that is indexing heavily or has high Splunk queue fill.

Panel: Median Fill Ratio of Data Processing Queues

Change the dropdowns to all queues and maximum.

If you don't have the DMC set up, this search shows every queue that is more than 80% full. Find your indexer by host name:

```
index=_internal group=queue
| eval percfull=round(((current_size_kb/max_size_kb)*100),2)
| search percfull>80
| dedup host, name
| eventstats dc(host) as hostCount
| table _time host name hostCount current_size_kb max_size_kb percfull
| rename _time as InfoTime host as Host name as QueueName current_size_kb as CurrentSize max_size_kb as MaxSize percfull as Perc%
| sort - Perc%
| convert ctime(InfoTime)
```
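To make the fill-ratio logic explicit, here is a rough Python equivalent of that pipeline. It is a sketch, assuming the queue events have already been extracted into dicts using the same field names as the SPL (host, name, current_size_kb, max_size_kb) and arrive newest-first, as Splunk returns them. The function name `queues_over_threshold` and the sample hosts and sizes are hypothetical, for illustration only.

```python
# Hypothetical input shape: dicts with the metrics.log fields the SPL uses,
# ordered newest-first like Splunk search results.

def queues_over_threshold(events, threshold=80.0):
    """Approximate: eval percfull | search percfull>80 | dedup | eventstats | sort."""
    # eval percfull=round(((current_size_kb/max_size_kb)*100),2)
    # search percfull>80
    hot = []
    for ev in events:
        percfull = round(ev["current_size_kb"] / ev["max_size_kb"] * 100, 2)
        if percfull > threshold:
            hot.append({**ev, "percfull": percfull})  # copy; input untouched

    # dedup host, name: keep only the first (newest) event for each pair.
    seen = set()
    rows = []
    for ev in hot:
        key = (ev["host"], ev["name"])
        if key not in seen:
            seen.add(key)
            rows.append(ev)

    # eventstats dc(host) as hostCount: distinct hosts across remaining rows.
    host_count = len({ev["host"] for ev in rows})
    for ev in rows:
        ev["hostCount"] = host_count

    # sort - Perc%: fullest queue first.
    return sorted(rows, key=lambda ev: ev["percfull"], reverse=True)


# Illustrative values only (hypothetical hosts and sizes).
sample = [
    {"host": "idx-a.example", "name": "indexqueue", "current_size_kb": 480, "max_size_kb": 500},
    {"host": "idx-a.example", "name": "indexqueue", "current_size_kb": 450, "max_size_kb": 500},
    {"host": "idx-b.example", "name": "parsingqueue", "current_size_kb": 460, "max_size_kb": 500},
    {"host": "idx-b.example", "name": "typingqueue", "current_size_kb": 10, "max_size_kb": 500},
]
for row in queues_over_threshold(sample):
    print(row["host"], row["name"], row["hostCount"], row["percfull"])
```

The older indexqueue sample on idx-a is dropped by the dedup step, and the nearly empty typingqueue never passes the 80% filter, which is why the search returns one row per busy queue.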

| InfoTime | Host | QueueName | hostCount | CurrentSize | MaxSize | Perc% |
|---|---|---|---|---|---|---|
| 07/26/2018 12:18:07.228 | hesplfwd002.xxx.com | execprocessorinternalq | 14 | 4095 | 500 | 819.00 |

I got this result for one of the hosts. Is this the issue?

The execprocessor queue is at 819%. Is hesplfwd002 an indexer? A full execprocessor queue would not typically stop indexing, but you can restart that instance anyway.
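The 819% comes straight from the search's fill-ratio formula applied to the reported row; a value above 100% just means the sampled current size exceeded the configured maximum:

$$
\text{Perc\%} = \frac{\text{CurrentSize}}{\text{MaxSize}} \times 100 = \frac{4095}{500} \times 100 = 819.00
$$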

For file operation errors in the indexer cluster (typically caused by antivirus or account permissions), I also suggest this search:

```
index=_internal (log_level=ERROR OR log_level=WARN) (host=myindexers) (component=PeriodicReapingTimeout OR component=BucketMover OR component=BucketReplicator OR component=CMRepJob OR component=DatabaseDirectoryManager OR component=DispatchCommandProcessor OR component=HttpClientRequest OR component=HttpListener OR component=IniFile OR component=ProcessRunner OR component=TPool OR component=TcpInputProc OR component=TcpOutputFd OR component=IndexerService OR component=S2SFileReceiver)
| stats values(host) dc(host) latest(_raw) count by punct,component
| fields - punct
```

This returns the most recent error of each type, along with the components to check for issues. If this points to anything, you can raise the logging level for that component to see what is failing.
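The stats step groups error events by their punctuation pattern (punct) and component. A rough Python equivalent shows what each output row contains: the hosts reporting the error, the number of distinct hosts, the most recent raw event, and a count. It assumes a hypothetical input shape (dicts with `_time` as epoch seconds, `host`, `punct`, `component`, `_raw`), and `summarize_errors` is a hypothetical helper name.

```python
from collections import defaultdict

# Hypothetical input: error/warning events already filtered by log_level and
# component, each a dict with _time (epoch seconds), host, punct, component, _raw.

def summarize_errors(events):
    """Approximate: stats values(host) dc(host) <newest _raw> count by punct,component | fields - punct."""
    groups = defaultdict(lambda: {"hosts": set(), "count": 0,
                                  "latest_time": None, "latest_raw": None})
    for ev in events:
        g = groups[(ev["punct"], ev["component"])]
        g["hosts"].add(ev["host"])
        g["count"] += 1
        # Keep the chronologically newest raw event for this error type.
        if g["latest_time"] is None or ev["_time"] > g["latest_time"]:
            g["latest_time"] = ev["_time"]
            g["latest_raw"] = ev["_raw"]

    rows = []
    for (_punct, component), g in groups.items():  # fields - punct drops punct
        rows.append({
            "component": component,
            "hosts": sorted(g["hosts"]),     # values(host)
            "host_count": len(g["hosts"]),   # dc(host)
            "latest_raw": g["latest_raw"],   # newest raw event
            "count": g["count"],
        })
    return rows
```

Grouping on punct as well as component keeps different message shapes from the same component apart, so one noisy error does not hide another.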

If it is an indexer in a cluster, restart it from the CLI:

```
splunk offline
```

This will take some time. Then run:

```
splunk start
```

Finally, check bucket status on the Cluster Master to see which server has copies failing.

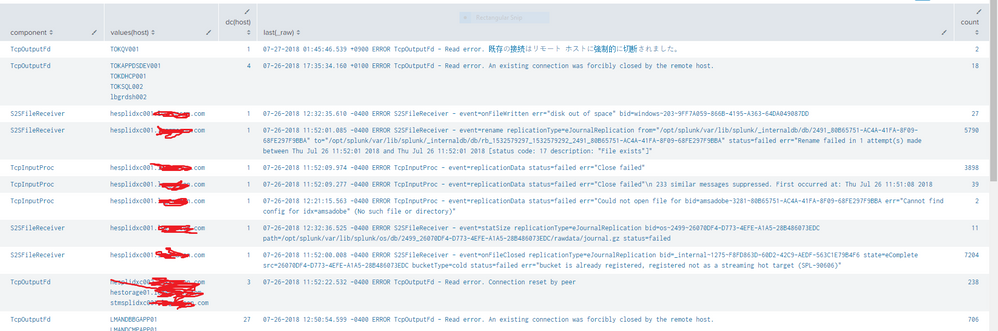

This hesplfwd002 is actually a heavy forwarder. I ran the search you gave and got the details shown in the attached screenshot. The only thing I changed in the search is host=*. The problem is with the indexer hesplidxc001.xxx.com; how do I get it back up?

Did you restart that instance yet? I don't see a screenshot here.

Please see the question; I attached the screenshot there. Yes, I have restarted it.

I can see in the 3rd row that the indexer is running out of disk space.
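For context, Splunk pauses indexing when free space on a partition holding its indexes drops below the `minFreeSpace` setting in the `[diskUsage]` stanza of server.conf, documented as 5000 MB by default. As a quick check outside Splunk, a minimal sketch using the standard library's `shutil.disk_usage` can report usage for that partition. The helper names and the 5000 MB threshold are assumptions; adjust the threshold if your server.conf overrides it.

```python
import shutil

# Assumption: server.conf [diskUsage] minFreeSpace is left at its documented
# default of 5000 MB; change MIN_FREE_MB if your configuration differs.
MIN_FREE_MB = 5000


def disk_report(path):
    """Return (used_percent, free_mb) for the filesystem that holds `path`."""
    usage = shutil.disk_usage(path)
    # used/total; `df` may show a slightly different figure because of
    # blocks reserved for root.
    used_percent = round(usage.used / usage.total * 100, 1)
    free_mb = usage.free // (1024 * 1024)
    return used_percent, free_mb


def below_min_free(path, min_free_mb=MIN_FREE_MB):
    """True when free space on `path`'s filesystem is under the threshold."""
    _, free_mb = disk_report(path)
    return free_mb < min_free_mb
```

Run it on the indexer itself against the mount where the indexes live (for example the `$SPLUNK_DB` location); if `below_min_free` returns True, freeing or adding space on that mount is the first fix.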

@vrmandadi,

As mentioned in the error, please check that connectivity between your peers is not blocked by a firewall, that the Splunk service on 10.22.12.xxx is up and running, and that port 8080 is not blocked.
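One quick way to test the reachability part of this advice is a plain TCP connect to the peer's port from the other peers and from the cluster master. This minimal sketch (`port_reachable` is a hypothetical helper name) uses only `socket.create_connection`. A successful connect shows that nothing blocks the port and something is listening on it, but not that the listener is Splunk's replication port.

```python
import socket


def port_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, unreachable hosts and DNS errors.
        return False
```

Call `port_reachable(peer_address, 8080)` with the peer's full IP address, which is masked as 10.22.12.xxx in this thread. A False result from one machine but True from another points at a firewall rule rather than the Splunk service.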

I checked the peer 10.22.12.xxx:8080 on the cluster master; its status is Pending and Searchable is NO. I tried restarting the peer, but it still shows the same thing. I checked the disk usage and found that /apps is at 100%. Is that the issue, and if so, how can we solve it?