Dear Splunk community,

I'm relatively new to Splunk clustering. I have been using Splunk in a standalone environment for three years and have now switched to a cluster to get the benefit of high availability (HA).

My new cluster works as expected, with search factor 2 and replication factor 3. The cluster has one search head, one master node and three peer nodes.

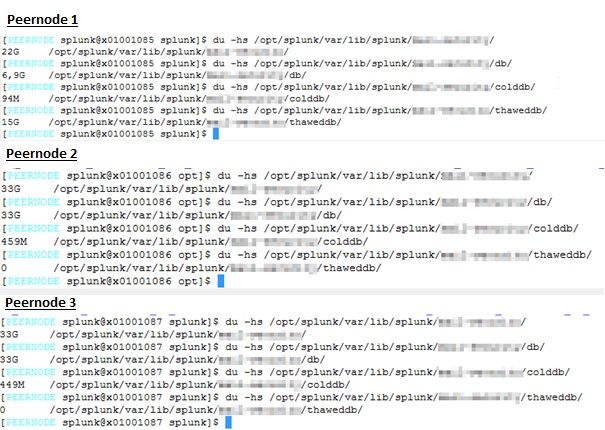

Now I've noticed that disk usage differs between the peer nodes. My understanding was that with replication factor 3, each peer node holds the same data, so disk usage should be similar. Can someone explain this behaviour, or could there be an issue with my cluster configuration? Attached you can find three screenshots showing the disk usage of one of my indices.

Best regards

seilemor


If one of the indexers with a searchable copy fails, Splunk starts a procedure to restore the search factor it lost: it copies the tsidx files from the remaining peer that has them to the peer that holds only the raw data.

Moreover, if both peers with searchable copies fail, you can still recover, because the remaining peer has the raw data, and Splunk can rebuild the tsidx files from that raw data so that everything becomes searchable again.

Note that rebuilding tsidx files is time- and resource-intensive, but you would still have all your data once it finishes.
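The recovery behaviour described above can be modelled for a single bucket as follows. This is a minimal Python sketch, not Splunk code: the `Copy` enum, the `fixup_plan` function and the peer names `idx1` to `idx3` are hypothetical. The real fix-up process is coordinated by the master node and also tries to restore the replication factor, which this model deliberately ignores.

```python
from enum import Enum


class Copy(Enum):
    """Hypothetical state of one bucket copy on one peer."""
    SEARCHABLE = "rawdata + tsidx"
    RAW_ONLY = "rawdata only"


def fixup_plan(copies: dict, failed: set, search_factor: int) -> list:
    """Sketch of the steps needed to restore the search factor for one bucket.

    `copies` maps peer name -> Copy; `failed` holds the peers that went down.
    Only the search factor is modelled; replication-factor repair is ignored.
    """
    alive = {peer: c for peer, c in copies.items() if peer not in failed}
    if not alive:
        return ["bucket lost: no surviving copy"]
    searchable = [p for p, c in alive.items() if c is Copy.SEARCHABLE]
    raw_only = [p for p, c in alive.items() if c is Copy.RAW_ONLY]
    missing = max(search_factor - len(searchable), 0)

    actions = []
    for target in raw_only[:missing]:
        if searchable:
            # A searchable copy survives: its tsidx files can be copied over.
            actions.append(f"copy tsidx from {searchable[0]} to {target}")
        else:
            # No searchable copy left: rebuild tsidx from the raw data (slow).
            actions.append(f"rebuild tsidx on {target} from rawdata")
    return actions


# RF=3, SF=2 on three peers: two searchable copies and one raw-only copy.
bucket = {"idx1": Copy.SEARCHABLE, "idx2": Copy.SEARCHABLE, "idx3": Copy.RAW_ONLY}
print(fixup_plan(bucket, failed={"idx1"}, search_factor=2))
print(fixup_plan(bucket, failed={"idx1", "idx2"}, search_factor=2))
```

In the second case only one searchable copy can be recovered, because `idx3` is the only surviving peer; in this model, a search factor of 2 cannot be met again on three peers until a failed peer returns.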


Thanks, everyone. My question has been answered. I stopped Splunk on one of the two servers that hold the index (IDX) files. Now the index files also appear on the third machine, and disk usage is similar across all peers.


Hi,

Thank you for your response. What will happen if one of the two systems that hold the IDX files goes down? Will the third machine, which holds only the _raw data, generate the IDX files too?

I've checked the size of some buckets across the cluster, comparing the IDX files with the raw files, and found exactly what you describe. Without IDX files, one example bucket is 99 MB; with IDX files, the same bucket is 480 MB.
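The bucket sizes quoted above show how much of a searchable copy is taken up by the index files. A short Python calculation using only those two figures:

```python
# Sizes of the same example bucket, as reported in this post (MB).
raw_only_mb = 99      # copy with rawdata only
searchable_mb = 480   # copy with rawdata plus index (tsidx) files

index_mb = searchable_mb - raw_only_mb   # space used by the index files
index_share = index_mb / searchable_mb   # fraction of the searchable copy
ratio = searchable_mb / raw_only_mb      # searchable vs. raw-only copy size

print(f"index files: {index_mb} MB ({index_share:.0%} of the searchable copy)")
print(f"a searchable copy is {ratio:.1f}x the size of a raw-only copy")
```

For this bucket the index files account for roughly four fifths of a searchable copy, so a peer holding only raw-data copies uses far less disk than a peer holding searchable copies.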

Regards

seilemor


Hi,

You are correct that each peer should have one copy of each bucket (I mean just the _raw data), as Splunk spreads the copies across the peers.

What you are not considering is that most of the space occupied by a bucket is not the _raw data itself, but the index structures that the indexing process creates to make the data searchable. In your case the search factor is only 2, which means two of your peers hold the two searchable copies, as they should. The remaining peer does not receive a searchable copy because it does not need one: the search factor is already met with two copies.
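To see why this produces uneven disk usage, here is a toy Python model of three peers with a replication factor of 3 (every peer gets a copy) and a search factor of 2. The function `disk_usage` and its round-robin `rotate` option are hypothetical: in a real cluster Splunk decides where the searchable copies go, and the bucket sizes (99 MB raw-only, 480 MB searchable) are simply the example figures reported in this thread.

```python
RAW_MB, SEARCHABLE_MB = 99, 480  # example bucket sizes reported in this thread


def disk_usage(peers, n_buckets, search_factor, rotate):
    """Toy model: every peer stores a copy of every bucket (RF == number of peers).

    The first `search_factor` peers in each bucket's placement order get a
    searchable copy; the rest get a raw-only copy. With rotate=False the same
    peers always come first; with rotate=True the order shifts per bucket.
    """
    usage = {peer: 0 for peer in peers}
    for b in range(n_buckets):
        start = b % len(peers) if rotate else 0
        order = peers[start:] + peers[:start]
        for position, peer in enumerate(order):
            usage[peer] += SEARCHABLE_MB if position < search_factor else RAW_MB
    return usage


peers = ["idx1", "idx2", "idx3"]
print(disk_usage(peers, n_buckets=3, search_factor=2, rotate=False))
print(disk_usage(peers, n_buckets=3, search_factor=2, rotate=True))
```

In the first case the third peer holds only raw data and uses about a fifth of the disk of the other two, similar to the imbalance described in the question. In the second case usage evens out, even though every bucket still has exactly two searchable copies.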

Check your monitoring console to make sure it is working properly!


I agree with tiagofbmm: index (tsidx) files can take up around 50% of your stored data. Since you set a search factor of 2, only 2 of the 3 copies have the tsidx files.
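The storage cost of a given replication factor and search factor can be estimated with a commonly cited rule of thumb from Splunk's capacity-planning documentation: compressed rawdata takes roughly 15% of the incoming data volume and the index files roughly 35%. Actual ratios vary widely with the data (the bucket measured earlier in this thread is far more index-heavy), so treat the default ratios below as assumptions. The function name, its parameters and the example workload are hypothetical.

```python
def cluster_storage_gb(daily_ingest_gb, retention_days, replication_factor,
                       search_factor, raw_ratio=0.15, index_ratio=0.35):
    """Rough total disk estimate for an indexer cluster (rule-of-thumb ratios).

    Every one of the RF copies stores the compressed rawdata; only the SF
    searchable copies also store the index (tsidx) files.
    """
    ingested = daily_ingest_gb * retention_days
    return ingested * (replication_factor * raw_ratio + search_factor * index_ratio)


# Hypothetical workload: 10 GB/day kept for 90 days, on 3 peers.
total = cluster_storage_gb(10, 90, replication_factor=3, search_factor=2)
print(f"cluster total: {total:.0f} GB, about {total / 3:.0f} GB per peer if evenly spread")
```

Raising the search factor to 3 in this sketch adds another 35% of the ingested volume (1350 GB instead of 1035 GB), which corresponds to the index files the raw-only peer is not storing.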


Hi,

Can you try adding the `forceTimebasedAutoLB = true` parameter to your outputs.conf?

Also, do all indexers have the same storage capacity?