
- need help indexing a simple XML file


I work with a file delivery system that relies on an XML "index" file that acts as a sort of manifest of the files available for download in a given data set. I need to index these XML files so we can search and report on them in Splunk. While the files are fairly simple in construction, I am having trouble getting them indexed cleanly.

Here is a sample of an xml file:

<?xml version="1.0" encoding="UTF-8"?>

<DSIF>

<Heading>

<GenDate>20191014T201231</GenDate>

<Root>\\UMECHUJX\cbm_navy\E-2D\</Root>

</Heading>

<Record>

<Path>HFP/IAVAS</Path>

<Size>14394144</Size>

<Hash>438b87856704c7c3bb5b3927d6d5959aaf997e8777e1ede40d35ff425eb45116</Hash>

<Date>20190825T195424</Date>

<Name>windows10.0-kb4500641.exe</Name>

</Record>

<Record>

<Path>ENG/ALL</Path>

<Size>1458278573</Size>

<Hash>b214cf5847fdd3244fae60036c6c9aad056e5114bd8320dd460cd902bd44a456</Hash>

<Date>20190906T143311</Date>

<Name>E2D_ALE_System_V091_Point_Release_20190822.zip</Name>

</Record>

<Record>

<Path>HFP/Files</Path>

<Size>569985537</Size>

<Hash>dc51058df85791c0786e6f41eba315fd044f0fc911a70763748f3ec9ee95d272</Hash>

<Date>20190919T013754</Date>

<Name>1.02.02_version_update.zip</Name>

</Record>

Here is my props.conf stanza:

[jkcsindex]

DATETIME_CONFIG = NONE

KV_MODE = xml

category = Structured

description = JKCS Index file

disabled = false

pulldown_type = true

SHOULD_LINEMERGE = false

NO_BINARY_CHECK = true

LINE_BREAKER = (\s*)</Record>(\s*)<Record>(\s*)

REPORT-jkcsxml = jkcsxml

TRANSFORMS-nullIndexHeader = nullIndexHeader

From the transforms.conf, here is the nullIndexHeader stanza to remove the header and extra tags:

[nullIndexHeader]

REGEX = (?m)^(<\?xml)|(\<DSIF\>)|(\<Heading\>)|(\<\/GenDate\>)|(\<\/Root\>)|(\<\/Heading\>)|(\<\/Record\>)|(\<\/DSIF\>)

DEST_KEY = queue

FORMAT = nullQueue

And here is the transforms.conf stanza to break out the xml tags:

[jkcsxml]

REGEX = <([^>]+)>([^<]*)</\1\>

FORMAT = $1::$2

MV_ADD = true

REPEAT_MATCH = false

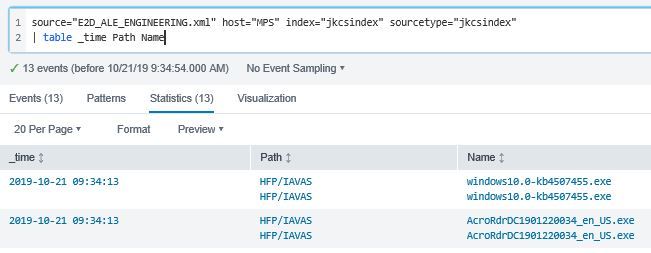

So my main problem is that after all of this, when I try to output a simple table, all of the results get doubled, like this:

Why is that? Where is this duplication coming from? When I do a simple search to show the raw events, only one of each record is listed. I lot of this is cobbled together from other answers that have been posted here, and some of it I don't entirely understand. I've been fighting regular expressions all last week just to get the fields extracted (because what works for me at regex101.com doesn't seem to apply in the LINE BREAKER in the Add Data GUI), but I can't figure out this doubling of the results in the table.

Help greatly appreciated!


From what it looks like, it seems you only care about the data between the <Record></Record> tags, correct?

If this is the case, you could do something along these lines.

The configuration below trims the unwanted header and footer using SEDCMD (the regex may need to be altered if your data differs from the sample you provided). It includes a line breaker that breaks on each new record, and it takes the event timestamp from the Date field inside each record. If that is not the correct date, you can simply set DATETIME_CONFIG = CURRENT (or your desired value) and remove the other time properties. I have set KV_MODE to none because the fields are being extracted manually.

props.conf

[your:sourcetype]

SEDCMD-0_remove_header = s/(<\?xml[^\>]+\>\s+\S+\s+\S+\s+\S+\s+\S+\s+\S+)//g

SEDCMD-1_remove_footer = s/(\<\/DSIF\>)//g

SHOULD_LINEMERGE = false

LINE_BREAKER = ([\s\n\r]+)(<Record>)

TIME_FORMAT = %Y%m%dT%H%M%S

TIME_PREFIX = <Date>

MAX_TIMESTAMP_LOOKAHEAD = 15

KV_MODE = none

CHARSET = UTF-8

REPORT-0_fields = your_custom_fields

transforms.conf

[your_custom_fields]

REGEX = \s+\<([^\>]+)\>([^\<\n\r]+)\<\/

FORMAT = $1::$2

This configuration will index the data as follows

<Record>

<Path>HFP/IAVAS</Path>

<Size>14394144</Size>

<Hash>438b87856704c7c3bb5b3927d6d5959aaf997e8777e1ede40d35ff425eb45116</Hash>

<Date>20190825T195424</Date>

<Name>windows10.0-kb4500641.exe</Name>

</Record>

<Record>

<Path>ENG/ALL</Path>

<Size>1458278573</Size>

<Hash>b214cf5847fdd3244fae60036c6c9aad056e5114bd8320dd460cd902bd44a456</Hash>

<Date>20190906T143311</Date>

<Name>E2D_ALE_System_V091_Point_Release_20190822.zip</Name>

</Record>

<Record>

<Path>HFP/Files</Path>

<Size>569985537</Size>

<Hash>dc51058df85791c0786e6f41eba315fd044f0fc911a70763748f3ec9ee95d272</Hash>

<Date>20190919T013754</Date>

<Name>1.02.02_version_update.zip</Name>

</Record>

Note: the starting tags (the XML declaration and the <DSIF> and <Heading> elements) are not being indexed. Use this configuration only if that is your desired outcome.
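If you want to sanity-check the regexes outside Splunk, here is a rough Python sketch of what the configuration above does. It is not Splunk's actual pipeline: the helper names (`break_events`, `extract_fields`) are made up for illustration, the sample is shortened (Hash elements left out), and it assumes the SEDCMD substitutions run on each event after line breaking and that empty leftovers can be skipped. Python's `re` module is also not the same engine Splunk uses, so treat it as an approximation.

```python
import re

# Shortened copy of the sample file from the question (Hash elements omitted).
RAW = r"""<?xml version="1.0" encoding="UTF-8"?>
<DSIF>
<Heading>
<GenDate>20191014T201231</GenDate>
<Root>\\UMECHUJX\cbm_navy\E-2D\</Root>
</Heading>
<Record>
<Path>HFP/IAVAS</Path>
<Size>14394144</Size>
<Date>20190825T195424</Date>
<Name>windows10.0-kb4500641.exe</Name>
</Record>
<Record>
<Path>ENG/ALL</Path>
<Size>1458278573</Size>
<Date>20190906T143311</Date>
<Name>E2D_ALE_System_V091_Point_Release_20190822.zip</Name>
</Record>
</DSIF>
"""

# Patterns copied from the props.conf / transforms.conf stanzas above.
LINE_BREAKER = re.compile(r"([\s\n\r]+)(<Record>)")
SED_HEADER = re.compile(r"(<\?xml[^\>]+\>\s+\S+\s+\S+\s+\S+\s+\S+\s+\S+)")
SED_FOOTER = re.compile(r"(\<\/DSIF\>)")
FIELD_REGEX = re.compile(r"\s+\<([^\>]+)\>([^\<\n\r]+)\<\/")


def break_events(raw):
    """Split raw text the way LINE_BREAKER is documented to work:
    the first capture group is discarded, the text before it ends one
    event, and the text after it starts the next."""
    chunks, start = [], 0
    for m in LINE_BREAKER.finditer(raw):
        chunks.append(raw[start:m.start(1)])
        start = m.end(1)
    chunks.append(raw[start:])
    return chunks


def extract_fields(event):
    """Apply the REPORT regex with FORMAT = $1::$2 (tag name :: value)."""
    return {m.group(1): m.group(2) for m in FIELD_REGEX.finditer(event)}


events = []
for chunk in break_events(RAW):
    # Emulate the two SEDCMD substitutions on each chunk.
    chunk = SED_FOOTER.sub("", SED_HEADER.sub("", chunk)).strip()
    if chunk:  # assumption of this sketch: skip chunks left empty
        events.append(chunk)

for event in events:
    print(extract_fields(event))
```

Each remaining event starts with `<Record>` because that tag sits in the second capture group, not the first, so it is kept rather than discarded.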


Sorry for the radio silence, got pulled away to other things.

That is exactly what I need. I will test that out right now.


That worked perfectly, thank you very much!

The timestamp in the field is just the modification date of the file in that record. The date/time I really need assigned to each 'event' in Splunk is the GenDate value from the top of the file, but I couldn't figure out a way to use it for each event. So for now, I'm just going with CURRENT. The data is only good for about 24 hours, until the process is run again.


I had a similar problem, but with JSON. Can you try setting KV_MODE to none? Something similar to this question https://answers.splunk.com/answers/626871/double-field-extraction-for-the-json-data.html


With KV_MODE set to none, my LINE_BREAKER regex no longer works (never understood why it worked, anyway).


Looking at another question about LINE_BREAKER on XML, I think yours should be something like (\<Record\>).
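For anyone comparing the LINE_BREAKER patterns mentioned in this thread, the thing to keep in mind is that Splunk discards whatever the first capture group matches: the text before it ends one event and the text after it starts the next. Below is a small Python sketch of that rule, not Splunk itself. The `break_events` helper and the two-record sample are made up for illustration, and the sketch drops empty chunks for readability.

```python
import re


def break_events(raw, line_breaker):
    """Emulate LINE_BREAKER: the first capture group is thrown away,
    everything before it closes an event, everything after opens the next."""
    pattern = re.compile(line_breaker)
    chunks, start = [], 0
    for m in pattern.finditer(raw):
        chunks.append(raw[start:m.start(1)])
        start = m.end(1)
    chunks.append(raw[start:])
    return [c for c in chunks if c]  # sketch only: drop empty chunks


# Hypothetical two-record sample in the same shape as the question's data.
RAW = (
    "<Record>\n<Name>a.zip</Name>\n</Record>\n"
    "<Record>\n<Name>b.zip</Name>\n</Record>\n"
)

# 1) (\<Record\>): the tag itself is the first group, so it is discarded.
tag_only = break_events(RAW, r"(\<Record\>)")

# 2) ([\s\n\r]+)(<Record>): only the whitespace is discarded, the tag is kept.
whitespace_first = break_events(RAW, r"([\s\n\r]+)(<Record>)")

# 3) Pattern from the question: the first group is the whitespace *before*
#    </Record>, so the break lands ahead of the closing tag.
original = break_events(RAW, r"(\s*)</Record>(\s*)<Record>(\s*)")

for label, events in [
    ("tag only", tag_only),
    ("whitespace first", whitespace_first),
    ("original", original),
]:
    print(label)
    for event in events:
        print("  ", repr(event))
```

In this sketch the first pattern yields events without their opening `<Record>` tag, the second keeps each record intact, and the third leaves the closing `</Record>` of one record at the start of the next event.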