Are you a member of the Splunk Community?

- Find Answers

- :

- Using Splunk

- :

- Dashboards & Visualizations

- :

- Re: How do you sort by a specific order by the val...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

How do you sort by a specific order by the values of a field?

Hello,

I'm struggling with sorting bar chart columns based on a time value.

I have the following in my search:

| chart last(duration) as duration over run_id by task_id

and I get the following table:

run_id task_1 task_2 task_3 task_4

1 14.55000000 1.60000000 11.55000000 1.78333333

2 13.93333333 2.73333333 13.55000000 1.91666667

in the stacked chart visualization the tasks are showing from top to bottom in the order of the tasks column (first task_1 then task_2, etc).

current bar char stacked:

----------------

| | <- task_1

| | <- task_2

| | <- task_3

| | <- task_4

i want to sort the task_id colums based on a value (start time) which i have on the initial search (pre charting) for each of the tasks.

bar char stacked i want to have:

---------------

| | <- task with highest start time

| | <- task_2

| | <- task_3

| | <- task with lowest start time

is it possible to do that?

Thank you!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @D_D ,

The chart command while creating the columns would by default sort the field task_id as it has been mentioned in the by clause.

I think you can create a dummy field which is a string joining the start time and task_id and use that field in the by clause of the chart command. The column names can be renamed using foreach.

| eval dummy_sort = start_time."_".task_id

| chart last(duration) as duration over run_id by dummy_sort

| foreach *task_* [rename <<FIELD>> to task_<<MATCHSEG2>>]

There may be a simpler way to do this.

Thanks.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Thank you, aprrcaite you reply.

i think it might work the issue is the column names aren't task_1, task_2, etc... it was an example to simplify thinks, they have different name for each column, can i run over them with foreach loop and regex like (*_*) where first asterisk is the "starttime" and the second is the "task name"?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, you can use that. The foreach would look something like this then:

| foreach *_* [rename <<FIELD>> to <<MATCHSEG2>>]

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, it works.

When i put it in the XML i get "Uencoded <" error.

how can i escape it?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

< would be <

> would be >

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Found a corner case where it doesn't work.

when i use "dummy sort" i get alot of values with 0, then the foreach loops get them instead the actual values.

it happens when there is more then one value of "run_id"

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You are getting 0 in the column names or the field values?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In the fields values,

for each run_id i get:

(num of tasks) * (num of run id).

table size is:

run_id rows and (num of tasks) * (num of run id) columns

for each run_id row there is (num of tasks) fields with value, the rest are 0.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you please share screenshots of the result obtained in table as well as the chart?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes,

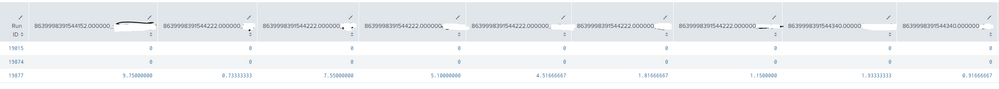

before foreach operation:

table (this has more columns on the right side)

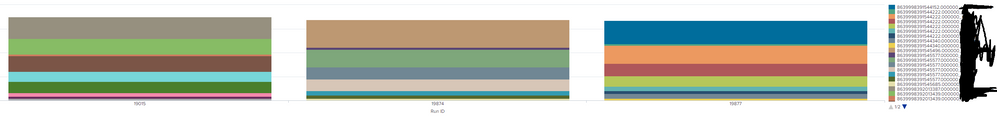

chart:

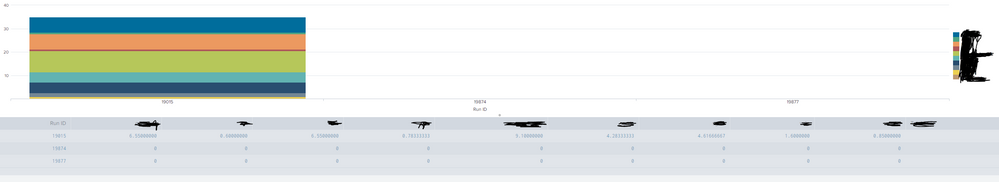

after foreach operation (table + chart):

this is all the columns in the tables.

the deleted part in the pictures is the task name.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

In the data, is it expected that same task_id can have different start time depending for the same run_id?

So for eg, task_id "task_1" has maybe started twice for run_id "run_1". This will create two different field values like time1_task_1 and time2_task_2 in the dummy_sort field. However, on column rename both would have name

If so, then the solution may be creating the problem.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

To your question "In the data, is it expected that same task_id can have different start time depending for the same run_id?"

The answer is yes.

Is there a way to overcome this issue?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In the original query that you posted in the question, you have been taking the latest duration for every task_id and run_id in the chart.

If we go according to that, then, it seems we need to take into account the starttime of the task_id for the latest duration obtained.

I think that would also solve our problem of having duplicate task_ids.

For that the dummy_sort can be calculated after we have got the events having last duration for the task_id and run_id:

| eventstats last(duration) as last_duration by run_id, task_id

| where duration = last_duration

| eval dummy_sort = starttime."_".task_id

| chart last(last_duration) as duration over run_id by dummy_sort

| foreach *_*

[ rename <<FIELD>> to <<MATCHSEG2>>

]

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

i added "limit=0" to the chart command to avoid "OTHER", but i get the same results as before.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you,

This is also works.

It still takes the columns from left to right,

right now:

bar char stacked i have:

---------------

| | <- task with lowest start time

| | <- task_2

| | <- task_3

| | <- task with highest start time

can i reverse it somehow?

bar char stacked i want to have:

---------------

| | <- task with highest start time

| | <- task_2

| | <- task_3

| | <- task with lowest start time