Incomplete JSON ingested.

Hi,

I am using the REST API Modular Input add-on to monitor an Elasticsearch instance via the stats API endpoint. The output is in JSON format and averages about 1200 lines.

I am using Heavy Forwarders with the following settings:

inputs.conf

[rest://elastic-stats]
source = elastic-stats
auth_type = none
endpoint = http://localhost:9200/_nodes/stats
http_method = GET
index = main
index_error_response_codes = 0
polling_interval = 60
request_timeout = 55
response_type = json
sequential_mode = 0
sourcetype = elastic-stats
streaming_request = 0

props.conf

[elastic-stats]
SHOULD_LINEMERGE = false
LINE_BREAKER = {"cluster_name":
category = json_no_timestamp
pulldown_type = 1
disabled = false

The JSON output of the Elasticsearch API endpoint contains:

Total characters: 26793
Total words: 2230
Total lines: 1229
Size: 27.36 KB

But only the first 10000 characters get indexed.

Please assist & thank you in advance.


Hi andrei1bc!

By default, props.conf sets TRUNCATE = 10000

You can confirm this with the following btool command:

./splunk btool props list elastic-stats --debug

which will show you the fully merged config for this sourcetype.

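To fix this, add TRUNCATE = 40000 and/or an appropriate MAX_EVENTS value to your local version of props.conf (note that MAX_EVENTS only takes effect when SHOULD_LINEMERGE = true, so with your settings TRUNCATE is the one that matters).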

I chose 40K based on your character analysis above.
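As a rough way to sanity-check a figure like this, the payload can be measured in bytes (the warning Splunk logs for truncation reports the limit in bytes) and padded with some headroom. The sketch below is only an illustration: the function names, the 1.5x headroom factor, and the rounding step are assumptions, not anything Splunk prescribes.

```python
import math


def would_truncate(payload: str, limit: int = 10000) -> bool:
    """Return True if the UTF-8 encoded payload is longer than the byte limit."""
    return len(payload.encode("utf-8")) > limit


def suggest_truncate(payload: str, headroom: float = 1.5, step: int = 1000) -> int:
    """Suggest a TRUNCATE value: payload size in bytes times a headroom
    factor, rounded up to the next multiple of `step`.
    Both `headroom` and `step` are arbitrary illustrative defaults."""
    size_bytes = len(payload.encode("utf-8"))
    return math.ceil(size_bytes * headroom / step) * step


if __name__ == "__main__":
    # Stand-in for a 26793-character ASCII response like the one described.
    sample = "x" * 26793
    print(would_truncate(sample))
    print(suggest_truncate(sample))
```

If the response size grows with the cluster (more nodes, more indices), it is worth re-measuring from time to time rather than relying on a one-off number.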

You can also monitor for issues with truncation in the Monitoring Console under Settings > Monitoring Console > Indexing > Inputs > Data Quality or by searching index=_internal for events like:

09-14-2017 10:43:30.132 -0400 WARN LineBreakingProcessor - Truncating line because limit of 10000 bytes has been exceeded with a line length >= 10994 - data_source="json.txt", data_host="n00bserver.n00blab.local", data_sourcetype="someSourcetype"
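If you export such warnings and want to summarize them outside Splunk, the fields can be pulled out with a regular expression. This is a minimal sketch that assumes the message layout matches the sample line above; the function name and the returned dictionary keys are made up for illustration.

```python
import re

# Pattern written against the sample warning shown above; other Splunk
# versions may word the message differently.
TRUNCATION_RE = re.compile(
    r"limit of (?P<limit>\d+) bytes has been exceeded "
    r"with a line length >= (?P<length>\d+)"
    r'.*?data_source="(?P<source>[^"]*)"'
    r'.*?data_sourcetype="(?P<sourcetype>[^"]*)"'
)


def parse_truncation_warning(line):
    """Return a dict with limit, length, source and sourcetype,
    or None if the line is not a truncation warning."""
    match = TRUNCATION_RE.search(line)
    if match is None:
        return None
    info = match.groupdict()
    info["limit"] = int(info["limit"])
    info["length"] = int(info["length"])
    return info


if __name__ == "__main__":
    sample = (
        "09-14-2017 10:43:30.132 -0400 WARN LineBreakingProcessor - "
        "Truncating line because limit of 10000 bytes has been exceeded "
        'with a line length >= 10994 - data_source="json.txt", '
        'data_host="n00bserver.n00blab.local", '
        'data_sourcetype="someSourcetype"'
    )
    print(parse_truncation_warning(sample))
```

Grouping the parsed results by sourcetype gives a quick list of which sourcetypes need a larger TRUNCATE.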


You do not need to use props.conf or TRUNCATE settings.

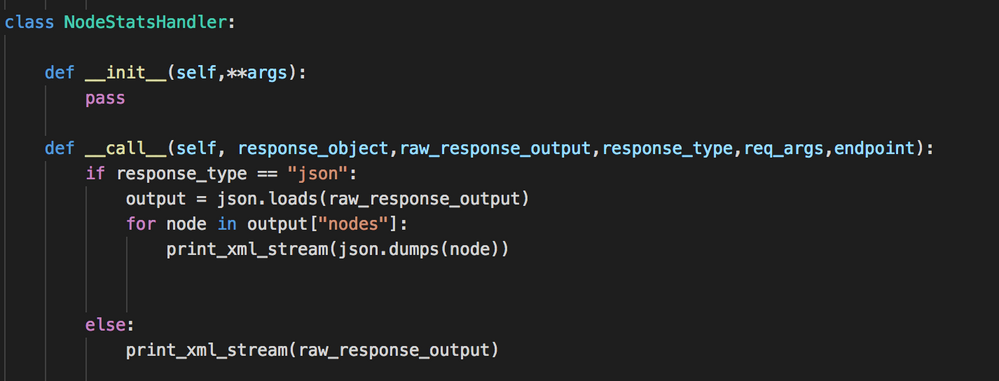

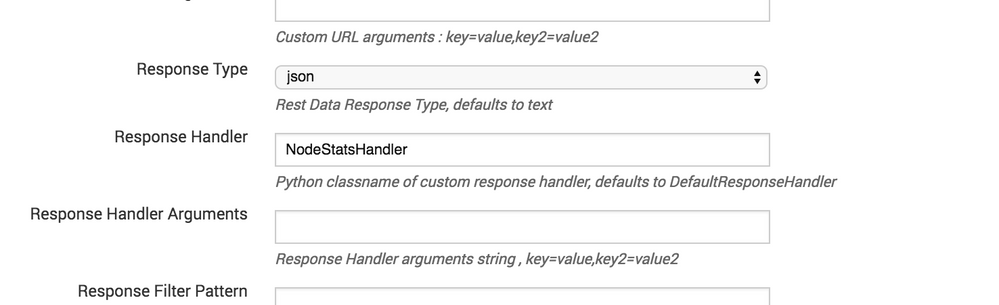

A better solution is to declare a custom response handler that splits the raw JSON response into individually indexed events.

There are several examples in rest_ta/bin/responsehandlers.py

Here is a pseudo example for your use case
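As an illustration only (this is not code from the original reply), a handler along these lines might split the `_nodes/stats` response into one event per node. It assumes the response has a top-level `cluster_name` and a `nodes` object keyed by node ID, and that the `__call__` signature mirrors the handlers bundled in rest_ta/bin/responsehandlers.py. The class name, the `split_node_stats` helper, and the `emit` parameter are hypothetical; `emit` stands in for whatever output helper the bundled handlers use.

```python
import json


def split_node_stats(raw_response_output):
    """Return one JSON string per node from a _nodes/stats response."""
    payload = json.loads(raw_response_output)
    cluster = payload.get("cluster_name")
    events = []
    for node_id, stats in payload.get("nodes", {}).items():
        # Carry the cluster name and node ID on every event so each
        # event can be searched on its own.
        event = {"cluster_name": cluster, "node_id": node_id}
        event.update(stats)
        events.append(json.dumps(event))
    return events


class ElasticNodeStatsHandler:
    """Hypothetical handler; the signature is assumed, check the
    examples shipped with the add-on before using it."""

    def __init__(self, emit=print, **args):
        # `emit` is a placeholder for the add-on's own output function.
        self.emit = emit

    def __call__(self, response_object, raw_response_output,
                 response_type, req_args, endpoint):
        if response_type != "json":
            # Pass anything that is not JSON through unchanged.
            self.emit(raw_response_output)
            return
        for event in split_node_stats(raw_response_output):
            self.emit(event)
```

Per-node events are smaller than the full response, but a single node's stats can still be large, so a per-event size check against TRUNCATE may still be needed.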


Yeah, the handlers are rad, especially when needing to break out arrays and massage the JSON! Only thing I'd like better is jq in the pipeline.

Looks like the LINE_BREAKER had them set up easily enough though. Most customers end up going that route regardless of me showing them the handler. The coding can scare them away. Not all admins have the chops or want to deal with the handlers... but if you do, they are a great option to have!

09-14-2017 10:43:30.132 -0400 WARN LineBreakingProcessor - Truncating line because limit of 10000 bytes has been exceeded with a line length >= 10994 - data_source="json.txt", data_host="n00bserver.n00blab.local", data_sourcetype="someSourcetype"

How did you go about solving this?


Hi @MichaelBs,

I’m a Community Moderator in the Splunk Community.

This question was posted 7 years ago, so it might not get the attention you need for your question to be answered. We recommend that you post a new question so that your issue can get the visibility it deserves. To increase your chances of getting help from the community, follow these guidelines in the Splunk Answers User Manual when creating your post.

Thank you!


Added to props.conf:

TRUNCATE = 20000 (maximum line length, in bytes)

MAX_EVENTS = 50000 (maximum number of lines per event)


pfff .. every time .. i post a question, then find the answer 2 min later...