Why aren't the alerts working?

Hi team!

I have about 50 alerts, none of them are working, and I want to know why.

I should be getting about 5 alerts per minute, but I am getting 0.

I receive no emails and see no triggered alerts.

Please help. It should be simple.

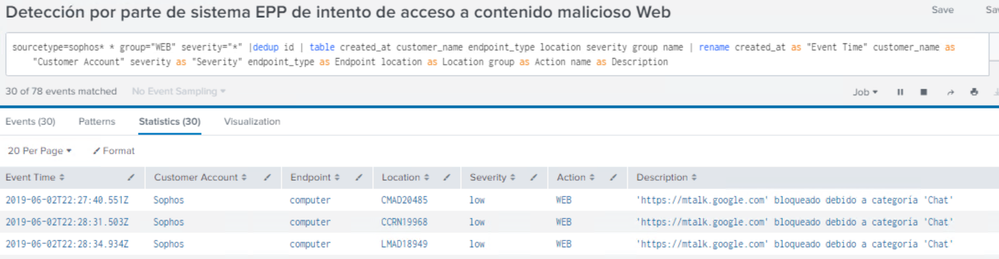

This is an alert example.

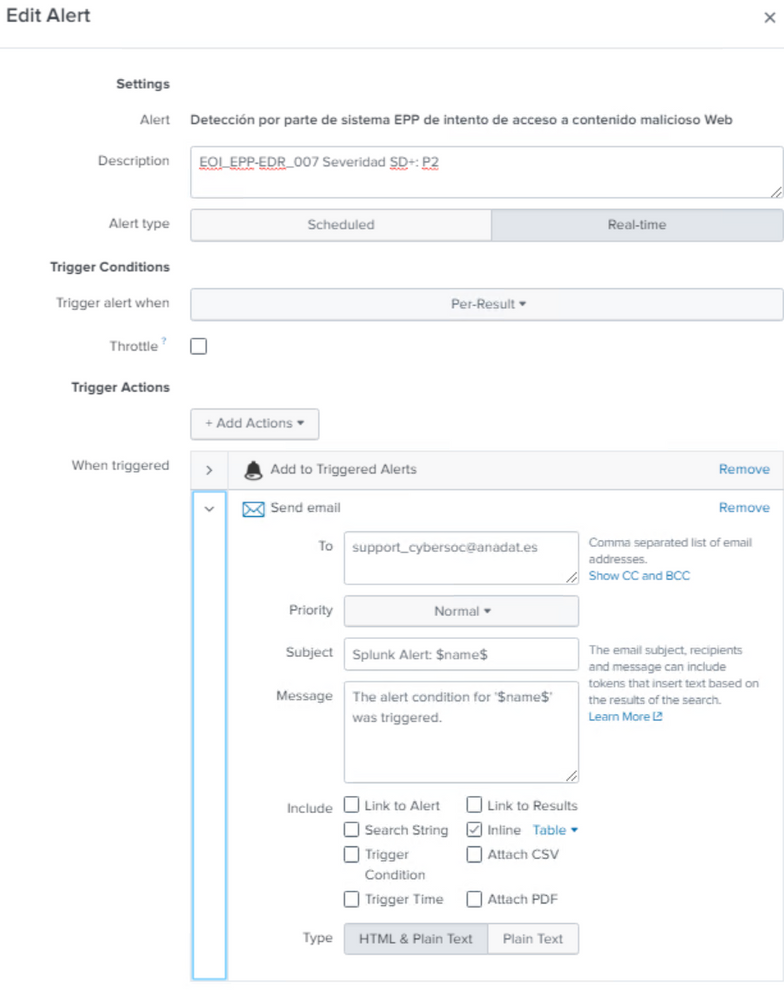

And this is the alert configuration.

Hi. You need to tell Splunk the name of your email server. You put the directives in alert_actions.conf, with the mail server given as host:port:

    [email]
    from = someuser@mycompany.com
    mailserver = mymailserver.mycompany.com:25
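As a quick sanity check outside Splunk, something like the sketch below could confirm that the configured mail server actually answers. It assumes the `mailserver` value has the form `host[:port]` and falls back to port 25 when no port is given; the function names `split_mailserver` and `smtp_reachable` are made up for this example and are not part of Splunk.

```python
import smtplib


def split_mailserver(value, default_port=25):
    """Split a 'host[:port]' string into a (host, port) tuple."""
    host, sep, port = value.strip().rpartition(":")
    if not sep:
        # No colon present, so the whole value is the host name.
        return value.strip(), default_port
    return host, int(port)


def smtp_reachable(value, timeout=5.0):
    """Return True if an SMTP server at host[:port] answers a NOOP with 250."""
    host, port = split_mailserver(value)
    try:
        with smtplib.SMTP(host, port, timeout=timeout) as server:
            code, _ = server.noop()
            return code == 250
    except (OSError, smtplib.SMTPException):
        # Covers DNS failures, refused connections, timeouts and SMTP errors.
        return False


# Parsing only; no network traffic happens here.
print(split_mailserver("mymailserver.mycompany.com:25"))
```

Calling `smtp_reachable("mymailserver.mycompany.com:25")` from the Splunk host would then show whether the server is reachable from that machine. It does not prove that Splunk itself can send mail, only that the address and port respond.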

Yes, I did that days ago...

And it works sometimes, but not now.

Even if the email action configuration is wrong, why can't I see any triggered alerts?

Look for errors in /opt/splunk/var/log/splunk/python.log

It's hard to tell whether it was working and has now stopped, or whether the problem is intermittent.

Is it possible that you are overwhelming the email server, effectively a DDoS? For example, corporate Gmail has sending limits, e.g. https://support.google.com/mail/answer/22839?hl=en
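If grepping by hand is awkward, a small script along these lines could pull out the suspicious lines. This is only a sketch: it assumes the default install path mentioned above, and the keywords `ERROR` and `WARN` are a guess at what is worth looking for, not an official list.

```python
from pathlib import Path


def find_error_lines(log_path, keywords=("ERROR", "WARN")):
    """Return (line_number, text) pairs for lines containing any keyword."""
    matches = []
    with open(log_path, "r", encoding="utf-8", errors="replace") as handle:
        for number, line in enumerate(handle, start=1):
            if any(keyword in line for keyword in keywords):
                matches.append((number, line.rstrip("\n")))
    return matches


# Assumed default location; adjust for your own install.
log_file = Path("/opt/splunk/var/log/splunk/python.log")
if log_file.exists():
    for number, text in find_error_lines(log_file):
        print(f"{number}: {text}")
else:
    print(f"{log_file} not found; adjust the path for your install")
```

An empty result only means none of the chosen keywords appear in that one file, so it does not rule out a problem logged elsewhere.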

Hi burwell,

I was able to fix it. I had about 30 real-time alerts.

When I disabled all of them except one, it worked perfectly...

Do you know why? Do I have to change something in limits.conf?
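One possible explanation, offered as an assumption rather than a confirmed diagnosis: each real-time search holds a search slot for as long as it runs, and Splunk caps concurrent searches based on CPU count. The sketch below reproduces the arithmetic as I understand it from the limits.conf documentation, using the settings `base_max_searches`, `max_searches_per_cpu`, `max_rt_search_multiplier` and `max_searches_perc`. The default values and the 4-CPU host are assumptions for illustration, so check them against your own version and hardware.

```python
def search_concurrency(cpu_count, base_max_searches=6, max_searches_per_cpu=1,
                       max_rt_search_multiplier=1, max_searches_perc=50):
    """Estimate concurrent search limits from limits.conf-style settings.

    Default arguments are assumed defaults and may differ between versions.
    """
    # Overall cap on concurrent historical searches.
    max_hist = max_searches_per_cpu * cpu_count + base_max_searches
    # Real-time searches get a multiple of the historical cap.
    max_rt = max_rt_search_multiplier * max_hist
    # The scheduler (which runs alerts) only gets a percentage of the cap.
    scheduled_hist = max_searches_perc * max_hist // 100
    scheduled_rt = max_rt_search_multiplier * scheduled_hist
    return {
        "max_hist": max_hist,
        "max_rt": max_rt,
        "scheduled_hist": scheduled_hist,
        "scheduled_rt": scheduled_rt,
    }


# Hypothetical 4-CPU search head with the assumed defaults.
limits = search_concurrency(cpu_count=4)
print(limits)
print("30 real-time alerts fit:", 30 <= limits["scheduled_rt"])
```

Under these assumptions only a handful of real-time scheduled searches can run at once, so 30 real-time alerts would not all get a slot. Converting most of them to scheduled (for example, every minute) alerts is usually a safer direction than raising the limits, but treat that as a suggestion to verify rather than a fix.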

The number of emails you send shouldn't be an issue. I have used systems that send large volumes of email.

Without seeing errors/logs, it's hard to know.

Which error log do I have to check?

/opt/splunk/var/log/splunk/python.log is clean. There are no errors there.