- Deployment Planning - Suggestions needed!


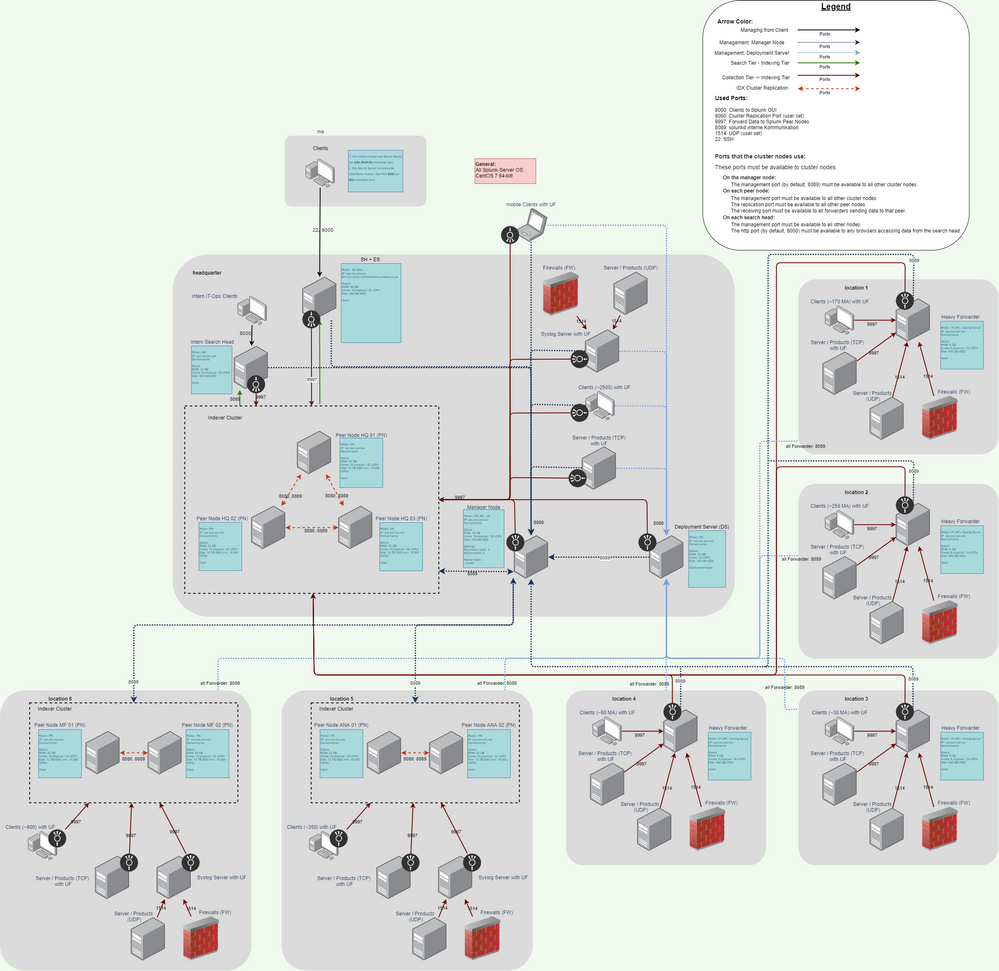

I'm trying to plan what is, for me, a large deployment: connecting several sites to a headquarters. Each site has between 200 and 900 clients that need to send data to the indexers. At two locations the bandwidth is relatively poor, so the idea is to set up a local cluster of two indexers at each of those locations. At the headquarters we'd set up a cluster of three indexers for all the other locations that don't have their own cluster, and of course for the local data that pours in.

Location:

Within each location I thought of forwarders on the servers (obviously) and then a heavy/intermediate forwarder which collects all those logs and sends them to the indexer cluster at the headquarters.

In the locations that need their own cluster, we'd set up a two-server cluster.

Headquarters:

There we'd set up a three-server cluster and a master node, two search heads (one for the technical part and one for Enterprise Security), and a deployment server to distribute apps to all the connected clients in all locations. The search heads should send their requests not only to the headquarters cluster but to all locations. I've read that it is possible to have a search head search across multiple clusters. The clusters don't talk to each other, so there is no data exchange between the headquarters cluster and the two other clusters.

For better understanding, since this is complex to explain, I've created a draw.io architecture view. My questions about the feasibility of this architecture are as follows:

- Do we need a master node for each cluster, or can one master node manage multiple clusters? This assumes that the locations with their own cluster don't sync with the headquarters and only process requests from the search heads.

- Is there a minimum bandwidth that should be provided? The Splunk docs say 1 Gbit/s, but I think 10-50 Mbit/s should be enough for this kind of deployment?

- Is it generally better to just build a larger central cluster with more servers at the headquarters, or which option performs better? My fear is to set all of this up and then fall on my face because I didn't scale the servers correctly.

- Is one deployment server for all locations and all servers feasible, or should I also set up a deployment server at least in the locations that have their own cluster?

All specs should be in the draw.io view. I hope you can answer my questions. I know it's a lot, but I'm afraid I may scale it wrong and then, months into the setup, notice what is wrong.

Thanks a lot for your tips and suggestions!

Question: do locations with their own cluster need their own master node?


Hi @avoelk,

First of all, this isn't really a question for the Community but for an expert Certified Architect or (better!) Splunk Professional Services, who can analyze your situation and requirements in depth, because it's very difficult to give you a definite answer!

Anyway, here are some pointers for your questions:

- if you have local indexer clusters that don't send their logs to the central cluster, your ES works only on a subset of your data;

- if the local indexer clusters send all their logs to the central cluster, you don't need local clusters; instead you could use two (or more) Heavy Forwarders in each local network;

- you could configure each local cluster with ES and send only alerts to the central cluster; this way you optimize your bandwidth, but you lose the correlations between data from different clusters;

- if the local clusters aren't connected to the central cluster, you need a Master Node in each cluster.

My main hint is to increase the bandwidth of the local networks and put two (or more) Heavy Forwarders in each one to work as concentrators that locally filter and compress data and optimize bandwidth usage.

Then use one central cluster to manage all the data.

About the minimum bandwidth: you can manage the bandwidth usage by configuring its maximum value on the Heavy Forwarders.
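To make the bandwidth point a bit more concrete, here is a minimal Python sketch of the arithmetic. It is only an illustration under stated assumptions: the helper name `link_budget` and the 50% `share` of the link reserved for Splunk traffic are made up for this example, kilobytes are counted as 1000 bytes, and compression and protocol overhead are ignored. The resulting cap is the kind of value you would consider for the forwarder throughput limit (in Splunk this is typically `maxKBps` in the `[thruput]` stanza of `limits.conf`; please verify against the docs for your version).

```python
def link_budget(link_mbit_per_s: float, share: float = 0.5) -> dict:
    """Rough capacity estimate for a WAN link that is shared with other traffic.

    link_mbit_per_s: nominal link speed in Mbit/s (e.g. 10 or 50).
    share: fraction of the link granted to log forwarding (assumed, not measured).
    """
    if not 0 < share <= 1:
        raise ValueError("share must be in (0, 1]")
    # Mbit/s -> bytes/s, then keep only the share reserved for forwarding.
    bytes_per_s = link_mbit_per_s * 1_000_000 / 8 * share
    return {
        # Candidate throughput cap in KB/s (1 KB = 1000 bytes here).
        "cap_kb_per_s": int(bytes_per_s // 1000),
        # Upper bound on data that fits through the link in 24 hours.
        "max_gb_per_day": bytes_per_s * 86_400 / 1_000_000_000,
    }


if __name__ == "__main__":
    for mbit in (10, 50):
        budget = link_budget(mbit)
        print(
            f"{mbit} Mbit/s link: cap {budget['cap_kb_per_s']} KB/s, "
            f"at most {budget['max_gb_per_day']:.0f} GB/day"
        )
```

With these assumptions a 10 Mbit/s link gives a cap of 625 KB/s and at most about 54 GB per day, and a 50 Mbit/s link gives 3125 KB/s and about 270 GB per day. If the expected daily volume of a site is close to or above that bound, the link (or the filtering on the Heavy Forwarders) needs another look.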

About Deployment Servers: you don't need a cluster for this role, and a single server is enough to manage one perimeter.

About the number of DSs: since you have so many clients in each local network, I think the best configuration is one DS in each one; this way you also reduce the number of firewall routes to open.

Take my hints with the appropriate level of caution, because they are general and made without any view of your data!

Ciao.

Giuseppe


Hello Giuseppe and thanks for your feedback,

I'll think about your answers and will try to consult a Splunk consultant about the architecture in depth.

Thanks a lot