zero'ing counter problem (and associated graph spike explosion)

Hi Splunk gurus.

I have a query problem that's been challenging me for a while.

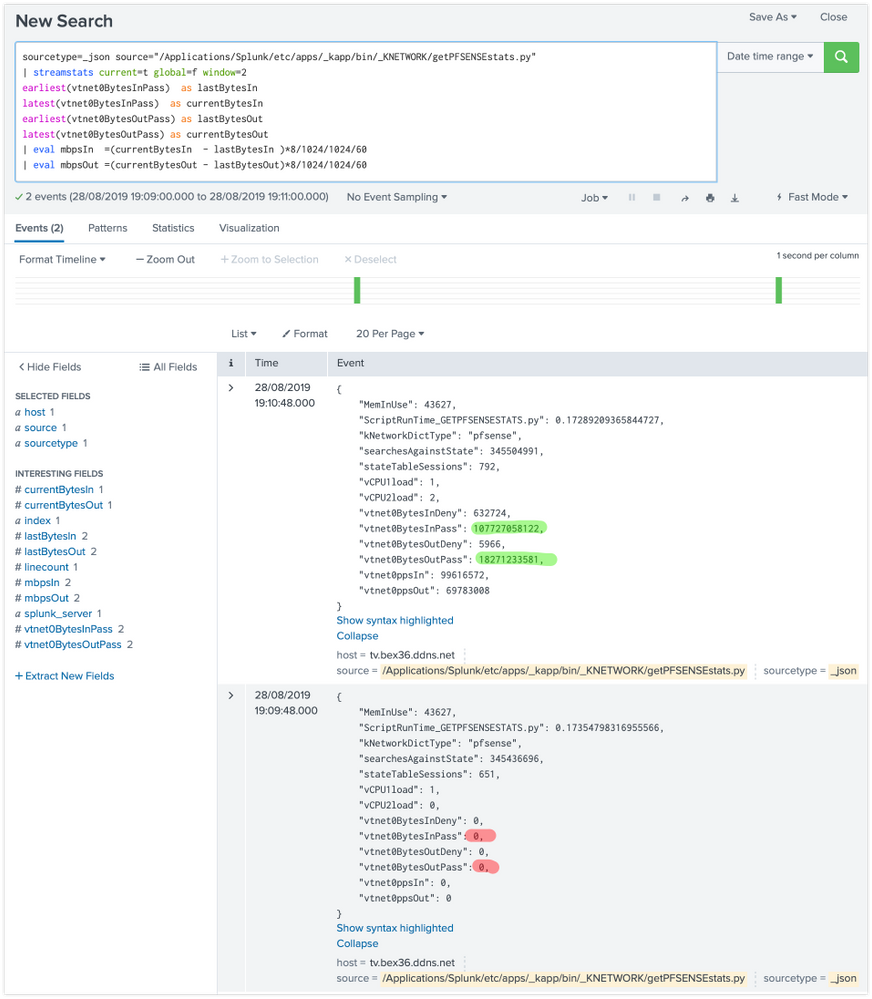

When my polling breaks, or when counters reset to zero for whatever reason (e.g. the device I'm polling is rebooted), I get a situation like this (red shading = condition when broken, green = when polling resumes properly):

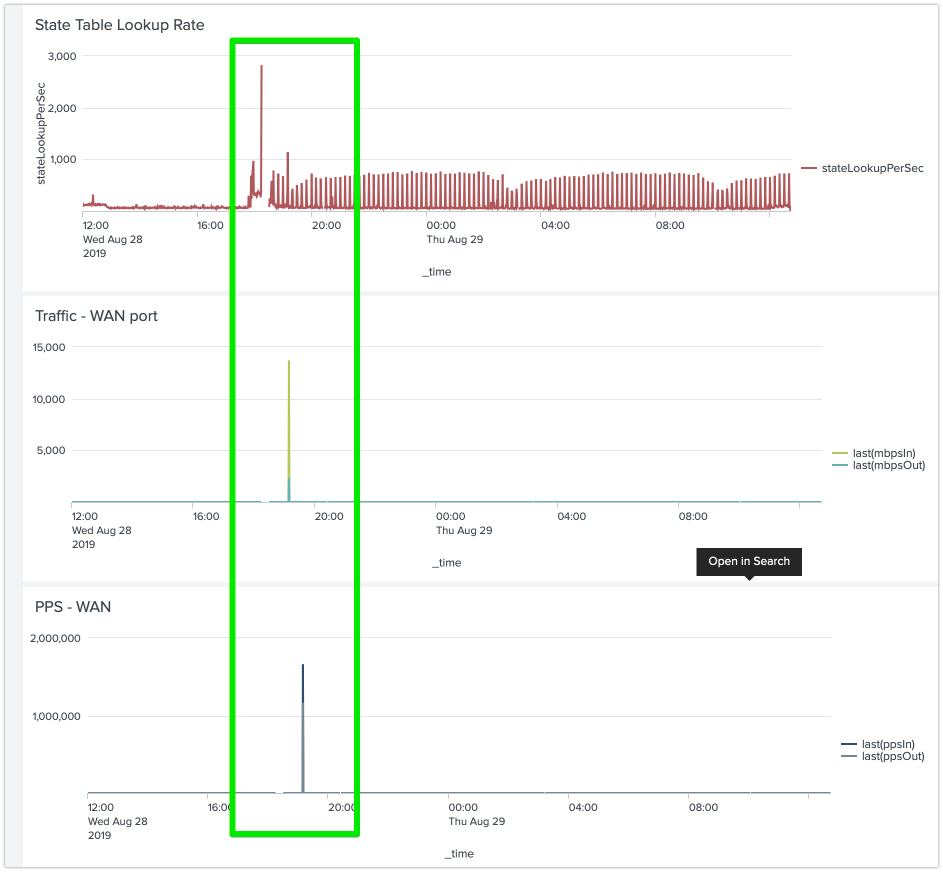

So I basically get a HUGE spike in my graphs, which destroys the fidelity of everything else on the Y-axis scale. Like so:

Any ideas how I can solve this condition at the Splunk search / SPL layer? I don't believe I'll ever be able to fix it at the device layer, so I need the dashboards to handle the condition and work around it somehow. I'm sure I'm not the first to hit this problem, so I didn't want to re-invent the wheel (a quick search of the forums didn't help).

Here's my SPL for anyone who wants to copy/paste it to give me a hand!

Thanks all!

sourcetype=_json source="/Applications/Splunk/etc/apps/_kapp/bin/_KNETWORK/getPFSENSEstats.py"

| streamstats current=t global=f window=2

earliest(vtnet0BytesInPass) as lastBytesIn

latest(vtnet0BytesInPass) as currentBytesIn

earliest(vtnet0BytesOutPass) as lastBytesOut

latest(vtnet0BytesOutPass) as currentBytesOut

| eval mbpsIn =(currentBytesIn - lastBytesIn )*8/1024/1024/60

| eval mbpsOut =(currentBytesOut - lastBytesOut)*8/1024/1024/60


One way to solve it would be to force certain values depending upon conditions:

| makeresults

| eval data="2019-04-06:12:01:00 200;

2019-04-06:12:02:00 205;

2019-04-06:12:03:00 210;

2019-04-06:12:04:00 203;

2019-04-06:12:05:00 204;

2019-04-06:12:06:00 9000;

2019-04-06:12:07:00 0;

2019-04-06:12:08:00 0;

2019-04-06:12:09:00 200"

| makemv data delim=";"

| mvexpand data

| rex field=data "(\s|\n?)(?<data>.*)"

| makemv data delim=" "

| eval _time=strptime(mvindex(data,0),"%Y-%m-%d:%H:%M:%S"),val=mvindex(data,1)

| autoregress val p=1

| eval newval=if(val-val_p1>100 AND val_p1>0,0,val)

| fields _time newval

This solution creates dummy time series data for illustration purposes.

It uses autoregress to allow comparisons with nearby rows. You'll have to find the right threshold (I chose 100) to trigger it to assign zero. You can expose the underlying fields by removing the last line. Try using autoregress with p=1-2 or p=1-3 to fine-tune the amount of lookback, depending on the shape of your data.

You can also look for a ratio over a certain comfort level:

| autoregress val p=1

| eval ratio=abs(val/val_p1)

| eval newval=if(ratio>10,0,val)
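Applied to the fields from the original query, the same row-to-row comparison might look like the sketch below. It is an adaptation, not a tested fix. It assumes a reset shows up as the counter going down between two polls, so it swaps the fixed threshold for a sign check and blanks the sample with null() instead of forcing it to zero. `deltaIn` is a made-up field name. The `sort 0 _time` is there because autoregress compares each row with the previous row in result order, and a normal search returns the newest events first.

```spl
sourcetype=_json source="/Applications/Splunk/etc/apps/_kapp/bin/_KNETWORK/getPFSENSEstats.py"
| sort 0 _time
| autoregress vtnet0BytesInPass p=1
| eval deltaIn=vtnet0BytesInPass - vtnet0BytesInPass_p1
| eval mbpsIn=if(isnull(deltaIn) OR deltaIn<0, null(), deltaIn*8/1024/1024/60)
```

The same pattern would apply to vtnet0BytesOutPass. Note that this only covers resets. After a gap in polling the delta spans more than 60 seconds, so the fixed /60 would still overstate the rate for that one sample.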


@keiran_harris,

There are probably two ways to overcome the situation:

- Remove the zero values from the search result before the streamstats

- Prefill the zero values with the latest non-zero value of the specific type

Or are you looking for an entirely different solution?
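Both options can be tried on dummy data first. A rough sketch of the prefill option, where `n`, `val` and `diff` are placeholder names and the sample values are made up: zeros are turned into nulls, filldown copies the last non-null value forward, and delta then computes the row-to-row difference.

```spl
| makeresults count=6
| streamstats count as n
| eval _time=_time + n*60
| eval val=case(n==1,200, n==2,205, n==3,0, n==4,0, n==5,210, n==6,215)
| eval val=if(val==0, null(), val)
| filldown val
| delta val as diff
```

With the zeros carried forward as 205, `diff` never sees the drop to zero or the jump back up. For the first option, the `eval` and `filldown` lines could be replaced with `| where val>0`, which drops the zero rows entirely. Either way the rows need to be in ascending time order, which real search results are not by default.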

What goes around comes around. If it helps, hit it with Karma 🙂