unusual activity

Hi

I have an issue that Splunk might be able to help solve.

Here is the scenario:

I need to find unusual send and receive patterns in a huge log file. Here is an example:

00:00:01.000 S-001

00:00:01.000 S-002

00:00:01.000 S-003

00:00:01.000 S-004

00:00:01.000 S-005

00:00:01.000 R-005

00:00:01.000 S-006

00:00:01.000 R-006

00:00:01.000 S-007

00:00:01.000 S-008

00:00:01.000 R-008

00:00:01.000 R-007

00:00:01.000 S-009

00:00:01.000 S-010

00:00:01.000 S-011

00:00:01.000 S-012

00:00:01.000 S-013

00:00:01.000 R-009

00:00:01.000 R-010

00:00:01.000 R-011

00:00:01.000 R-012

00:00:01.000 R-013

00:00:01.000 S-014

00:00:01.000 R-014

00:00:01.000 R-001

00:00:01.000 R-002

00:00:01.000 R-003

00:00:01.000 R-004

The red lines need to be detected and shown on a chart.

FYI 1: Duration is not a good way to find them, because some of them occur at exactly the same time.

FYI 2: The real ids are not sequential as I wrote above; they look like 98734543 or 53434444.

Any ideas?

Thanks,

What defines an unusual pattern and a normal pattern?

The usual pattern looks like the green lines: each Send is followed by its Receive.

In the red lines, however, several Sends arrive in a row, and after a while the related Receives appear in a row.

Whenever I see this behavior in the log, it means something bad happened.

You could determine the concurrency of the requests (sends minus receives) and look for spikes, i.e. points where you are getting more sends than receives.

| makeresults

| eval _raw="00:00:01.000 R-000

00:00:01.000 S-001

00:00:01.000 S-002

00:00:01.000 S-003

00:00:01.000 S-004

00:00:01.000 S-005

00:00:01.000 R-005

00:00:01.000 S-006

00:00:01.000 R-006

00:00:01.000 S-007

00:00:01.000 S-008

00:00:01.000 R-008

00:00:01.000 R-007

00:00:01.000 S-009

00:00:01.000 S-010

00:00:01.000 S-011

00:00:01.000 S-012

00:00:01.000 S-013

00:00:01.000 R-009

00:00:01.000 R-010

00:00:01.000 R-011

00:00:01.000 R-012

00:00:01.000 R-013

00:00:01.000 S-014

00:00:01.000 R-014

00:00:01.000 R-001

00:00:01.000 R-002

00:00:01.000 R-003

00:00:01.000 R-004"

| multikv noheader=t

| rename Column_1 as _time, Column_2 as event

| table _time event

| eval eventid=mvindex(split(event,"-"),1)

| eval event=substr(event,1,1)

| streamstats count(eval(event="S")) as sends count(eval(event="R")) as receives

| eval concurrent=sends-receives

| eventstats min(concurrent) as startingconcurrency

| eval concurrent=concurrent-startingconcurrency

| streamstats count(eval(event="S")) as spike reset_before="("match(event,\"R\")")"

I threw in a receive for id 000 to cover the case where the events you are looking at start while there are still previous sends without their corresponding receives.

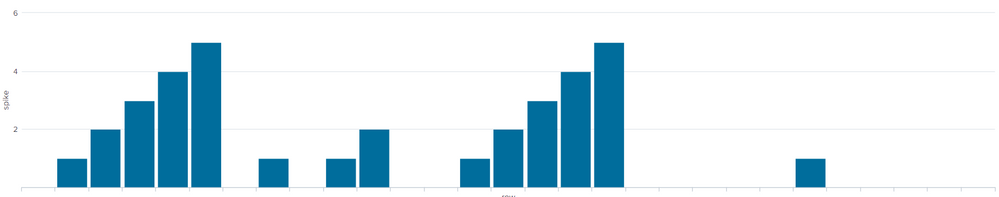

This dummy data generates a chart like this

You could set a threshold at, say, 3 or 4, depending on how large you expect an unusual spike to be.

You may also need to start with a reverse, since Splunk is likely to process the events going backwards in time (newest first). To be fair, that might be what you want anyway, in which case you may need to reverse the logic around sends and receives.
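
For anyone who wants to sanity-check the logic outside Splunk, here is a rough Python sketch of the same idea: a running sends-minus-receives count, plus a counter of consecutive sends that resets on every receive. The function name and the threshold of 4 are illustrative assumptions, not part of the search above, and the events are assumed to be in chronological order already.

```python
# Dummy data from the thread, in chronological order ("S" = send, "R" = receive).
SAMPLE = (
    "R-000 S-001 S-002 S-003 S-004 S-005 R-005 S-006 R-006 S-007 S-008 "
    "R-008 R-007 S-009 S-010 S-011 S-012 S-013 R-009 R-010 R-011 R-012 "
    "R-013 S-014 R-014 R-001 R-002 R-003 R-004"
).split()


def concurrency_and_spikes(events):
    """Return (concurrent, spikes), one value per event.

    concurrent: running sends minus receives, shifted so the minimum is 0
                (the same role as startingconcurrency in the search).
    spikes:     number of consecutive sends seen so far, reset to 0 by a receive.
    """
    sends = receives = run = 0
    raw, spikes = [], []
    for ev in events:
        if ev[0] == "S":
            sends += 1
            run += 1
        else:
            receives += 1
            run = 0  # a receive ends the current run of sends
        raw.append(sends - receives)
        spikes.append(run)
    start = min(raw)
    return [c - start for c in raw], spikes


concurrent, spikes = concurrency_and_spikes(SAMPLE)

# Hypothetical threshold: flag sends that are at least the 4th in a row.
THRESHOLD = 4
flagged = [ev for ev, s in zip(SAMPLE, spikes) if s >= THRESHOLD]
print(flagged)
```

With the dummy data this flags the tail end of the two long runs of sends, which is where the spike counter crosses the threshold.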

@ITWhisperer does it match the S-R pairs by id, or does it just find continuous Sends?

That was looking for continuous sends. If you want to take the ids into account, you could count, for each event id, the number of other sends seen before its corresponding receive arrives.

| makeresults

| eval _raw="00:00:01.000 R-000

00:00:01.000 S-001

00:00:01.000 S-002

00:00:01.000 S-003

00:00:01.000 S-004

00:00:01.000 S-005

00:00:01.000 R-005

00:00:01.000 S-006

00:00:01.000 R-006

00:00:01.000 S-007

00:00:01.000 S-008

00:00:01.000 R-008

00:00:01.000 R-007

00:00:01.000 S-009

00:00:01.000 S-010

00:00:01.000 S-011

00:00:01.000 S-012

00:00:01.000 S-013

00:00:01.000 R-009

00:00:01.000 R-010

00:00:01.000 R-011

00:00:01.000 R-012

00:00:01.000 R-013

00:00:01.000 S-014

00:00:01.000 R-014

00:00:01.000 R-001

00:00:01.000 R-002

00:00:01.000 R-003

00:00:01.000 R-004"

| multikv noheader=t

| rename Column_1 as _time, Column_2 as event

| table _time event

| eval eventid=mvindex(split(event,"-"),1)

| eval event=substr(event,1,1)

| streamstats count(eval(event="S")) as sends count(eval(event="R")) as receives

| eval concurrent=sends-receives

| eventstats min(concurrent) as startingconcurrency

| eval concurrent=concurrent-startingconcurrency

| eval sendswhenreceived=if(event="R",sends,null())

| eventstats values(sendswhenreceived) as sendswhenreceived by eventid

| eval sendsuntilreceived=sendswhenreceived-sends

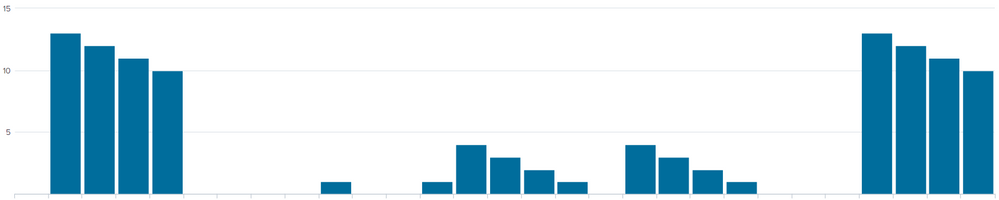

| eventstats max(sendsuntilreceived) as sendsuntilreceived by eventidYou could get a chart like this

Note that I show both the send and receive for the affected events.
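
As a cross-check of the per-id logic, here is a minimal Python sketch that counts, for each id, how many later sends were seen before its receive arrived. The function name is an assumption for illustration. Unlike the search, the sketch simply skips a receive whose send was never seen (such as id 000), rather than reporting 0 for it.

```python
SAMPLE = (
    "R-000 S-001 S-002 S-003 S-004 S-005 R-005 S-006 R-006 S-007 S-008 "
    "R-008 R-007 S-009 S-010 S-011 S-012 S-013 R-009 R-010 R-011 R-012 "
    "R-013 S-014 R-014 R-001 R-002 R-003 R-004"
).split()


def sends_until_received(events):
    """Map each id to the number of other sends seen between its S and its R."""
    sends = 0
    sends_at_send = {}  # id -> running send count when this id was sent
    result = {}
    for ev in events:
        kind, event_id = ev.split("-", 1)
        if kind == "S":
            sends += 1
            sends_at_send[event_id] = sends
        elif event_id in sends_at_send:
            # running send count at the receive minus the count at the send
            result[event_id] = sends - sends_at_send[event_id]
        # a receive with no earlier send in the window is ignored here
    return result


waiting = sends_until_received(SAMPLE)
for event_id, n in sorted(waiting.items(), key=lambda kv: -kv[1])[:4]:
    print(event_id, n)
```

Ids whose receive follows immediately get 0, while ids 001 to 004 get the largest values because every other send happens before they are answered.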

If I understand correctly, the proper sequence should be S,R,S,R,S,R with subsequent sequence numbers.

I'd approach it a bit differently albeit with a streamstats as well.

<your search>

| eval eventid=mvindex(split(event,"-"),1)

| eval event=substr(event,1,1)

| streamstats count as seq

| stats list(event) as events list(seq) as seq by eventid

| eval isok=case(mvjoin(events,"")!="SR","Bad",mvindex(seq,1)-mvindex(seq,0)=1,"OK",1=1,"Bad"

You could also add a check for whether the S-R pairs themselves appear in the proper order.
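
The rule being tested here can be sketched in Python as follows: an id is "OK" only if it has exactly one S followed by one R, and the two sit on adjacent lines. The function name and the short sample are assumptions made up for illustration.

```python
def classify_pairs(events):
    """Return {id: "OK" | "Bad"} using the S-then-R-on-adjacent-lines rule."""
    by_id = {}  # id -> (list of kinds, list of sequence numbers)
    for seq, ev in enumerate(events, start=1):
        kind, event_id = ev.split("-", 1)
        kinds, seqs = by_id.setdefault(event_id, ([], []))
        kinds.append(kind)
        seqs.append(seq)

    verdict = {}
    for event_id, (kinds, seqs) in by_id.items():
        # exactly "SR", and the receive is the very next event after the send
        ok = "".join(kinds) == "SR" and seqs[1] - seqs[0] == 1
        verdict[event_id] = "OK" if ok else "Bad"
    return verdict


# Hypothetical mini-sample: 001 is answered late, 000 has no send at all.
sample = ["R-000", "S-001", "S-002", "R-002", "R-001", "S-003", "R-003"]
print(classify_pairs(sample))
```

Here the sequence numbers are integers from the start, so the subtraction needs no conversion.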

@PickleRick the last line is missing a ")".

When I add the ")", it returns another error:

Might have missed a parenthesis here or there 😉 (was typing on my tablet while walking the dog :D)

Interesting though that it treats sequence numbers as strings.

You can cast them to numbers using tonumber():

tonumber(mvindex(seq,1))-tonumber(mvindex(seq,0))