
non english words length function not working as expected

Hi Splunk Gurus... As you can see below, the len() function does not work as expected on non-English words. I checked old posts and the documentation, but no luck. Any suggestions, please? Thanks.

| makeresults

| eval _raw="இடும்பைக்கு"

| eval length=len(_raw) | table _raw length

This produces:

| _raw | length |

| இடும்பைக்கு | 11 |

(The word இடும்பைக்கு is actually 6 characters, not 11.)

Sekar

PS - If this or any post helped you in any way, pls consider upvoting, thanks for reading !


Hi All, https://docs.splunk.com/Documentation/Community/latest/community/SplunkIdeas

How Ideas are reviewed and prioritized

Due to our large and active community of Splunkers, the number of enhancement requests we receive can be voluminous. Splunk Ideas allows us to see which ideas are being requested most across different types of customers and end-user personas.

When we determine which ideas to triage we look at total vote count across a variety of cohorts, which include but are not limited to:

- Number of total votes (this is the number displayed in the "Vote" box for an idea)

- Number of unique customers requesting an idea ("customers" refers to organizations, not employees)

- Number of votes by customer size or industry. For example: large, small, financial services, government, and so forth.

- Number of votes by customer geography. For example: Americas, Europe, Asia, and so forth.

- Number of votes by end-user persona. For example: admin, SOC analyst, business analyst, and so forth.

- Number of votes from special audiences. For example: Splunk Trust, Design Partners, and so forth.

Could some long-time Splunkers please upvote the idea so that Splunk will review it? Thanks.

Best Regards,

Sekar


I tried to raise a bug report, but was asked to raise it as an idea instead, so here it is:

https://ideas.splunk.com/ideas/EID-I-2176

Could you please upvote it so that Splunk will resolve it soon? Thanks.

Sekar


Your account manager can speak directly to Splunk engineers and product managers to review your support case. This certainly looks like a bug as @PickleRick pointed out. At the very least, it should result in product documentation being updated to reflect product behavior with respect to multibyte characters.


Sure, @tscroggins. I spoke with my account manager and wrote to a Splunk account manager (or sales manager, I am not sure). He said he would look into it and reply within a day. Three days have passed and I am still waiting. Let's see. Thanks a lot for your help.

(As you can see on my YouTube channel "siemnewbies", I have been working on this for more than half a year, but it has been good learning.)

Sekar


Hi All..

Good news: I created a bug with Splunk Support, and the Support team is working with the Dev team.

One more small issue..

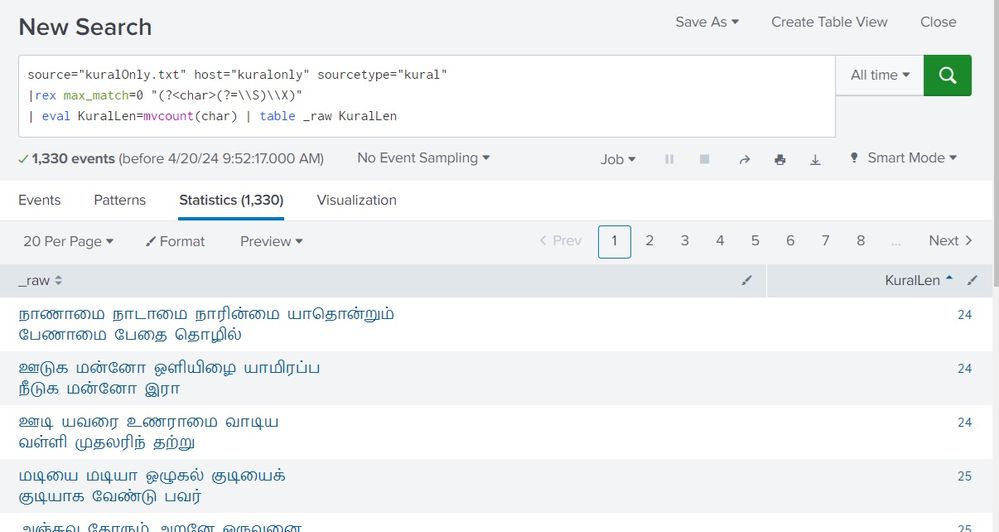

source="kuralOnly.txt" host="kuralonly" sourcetype="kural"

|rex max_match=0 "(?<char>(?=\\S)\\X)"

| eval KuralLen=mvcount(char) | table _raw KuralLen

This SPL works, but the newline character also seems to be counted. Please refer to the image: the kural

நாணாமை நாடாமை நாரின்மை யாதொன்றும் பேணாமை பேதை தொழில்

has only 23 characters, but the SPL reports 24, so I think the newline is being counted as well.

Could you please suggest how to resolve this? Thanks.

Sekar


Hi @inventsekar,

I can't reproduce the issue with an ASCII newline:

| makeresults

| eval _raw="நாணாமை நாடாமை நாரின்மை யாதொன்றும் பேணாமை பேதை தொழில்".urldecode("%0A")

| rex max_match=0 "(?<char>(?=\\S)\\X)"

| eval len=mvcount(char)

``` len == 23 ```

What characters are present in char? If you have whitespace characters not included in the class [^\r\n\t\f\v ] (the final character is a space), you may need to replace \S with the class form and include e.g. Unicode newlines:

| rex max_match=0 "(?<char>(?=[^\\r\\n\\t\\f\\v \\x0b\\x85])\\X)"

I don't know if it's perfect, but it works with this:

| makeresults

| eval _raw="நாணாமை நாடாமை நாரின்மை யாதொன்றும் பேணாமை பேதை தொழில்".urldecode("%E2%80%A8")

| rex max_match=0 "(?<char>(?=[^\\r\\n\\t\\f\\v \\x0b\\x85])\\X)"

| eval len=mvcount(char)

``` len == 23 ```

Edit:

This is simpler and lets PCRE decide what a Unicode newline is:

| rex max_match=0 "\\R|(?<char>(?=\\S)\\X)"

| eval len=mvcount(char)

But given that optimization, we can just remove the positive lookahead completely for a faster regex:

| rex max_match=0 "\\R|\\s|(?<char>\\X)"

| eval len=mvcount(char)
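To answer "what characters are present in char?" outside Splunk, one option is to dump every code point of the raw event with its Unicode category. The following is only a sketch using Python's standard unicodedata module. The helper name describe_chars and the sample string are assumptions for illustration and are not taken from the original event.

```python
import unicodedata

def describe_chars(text):
    """Return (code point, general category, name) for each code point in text."""
    rows = []
    for ch in text:
        code = "U+%04X" % ord(ch)
        # Control characters such as "\n" have no Unicode name, so supply a fallback.
        name = unicodedata.name(ch, "<unnamed>")
        rows.append((code, unicodedata.category(ch), name))
    return rows

# Hypothetical tail of an event: a letter plus virama, then two kinds of newline.
sample = "\u0bb2\u0bcd" + "\n" + "\u2028"
for row in describe_chars(sample):
    print(row)
```

A trailing U+2028 (category Zl) or U+0085 would show up here even though it is invisible in most editors, which is the kind of character the class-based regex above is meant to exclude.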


I would insist on treating it as a bug.

https://docs.splunk.com/Documentation/Splunk/latest/SearchReference/CommonEvalFunctions

says explicitly

| len(<str>) | Returns the count of the number of characters, not bytes, in the string. |


Other UTF-8 solutions also count 11 characters, so it may not be a bug if the len() function counts UTF-8 code points.

The string translates to this table split by code points, using https://en.wiktionary.org/wiki/Appendix:Unicode/Tamil as a reference for Tamil characters:

| URL-encoded UTF-8 | Binary-decoded | Unicode Code Point | Character |

| %E0%AE%87 | 00001011 10000111 | U+0B87 | இ |

| %E0%AE%9F | 00001011 10011111 | U+0B9F | ட |

| %E0%AF%81 | 00001011 11000001 | U+0BC1 | ு |

| %E0%AE%AE | 00001011 10101110 | U+0BAE | ம |

| %E0%AF%8D | 00001011 11001101 | U+0BCD | ◌் |

| %E0%AE%AA | 00001011 10101010 | U+0BAA | ப |

| %E0%AF%88 | 00001011 11001000 | U+0BC8 | ை |

| %E0%AE%95 | 00001011 10010101 | U+0B95 | க |

| %E0%AF%8D | 00001011 11001101 | U+0BCD | ◌் |

| %E0%AE%95 | 00001011 10010101 | U+0B95 | க |

| %E0%AF%81 | 00001011 11000001 | U+0BC1 | ு |

If we ignore the Unicode mark category, we count 6 characters.

Splunk's implementation of len() would need to be modified to ignore mark characters. Would that produce the correct result across all languages, or would Splunk need to normalize the code points first? Whether a bug or an idea, Splunk would need to address it in a language agnostic way.
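The table and the three possible counts (bytes, code points, non-mark code points) can be reproduced outside Splunk. This is a minimal sketch in Python, assuming the standard unicodedata and urllib modules. The function name code_point_rows is made up for illustration, and the word is spelled out as escapes so the code points are unambiguous.

```python
import unicodedata
from urllib.parse import quote

def code_point_rows(text):
    """Return (URL-encoded UTF-8, code point, general category) per code point."""
    return [
        (quote(ch), "U+%04X" % ord(ch), unicodedata.category(ch))
        for ch in text
    ]

# இடும்பைக்கு written as explicit code points.
word = "\u0b87\u0b9f\u0bc1\u0bae\u0bcd\u0baa\u0bc8\u0b95\u0bcd\u0b95\u0bc1"

rows = code_point_rows(word)
# Keep only code points whose general category is not a mark (Mn, Mc, Me).
non_marks = [row for row in rows if not row[2].startswith("M")]

print(len(word.encode("utf-8")))  # bytes
print(len(rows))                  # code points
print(len(non_marks))             # code points outside the mark categories
```

For this word the three numbers are 33, 11, and 6, which matches the observation that len() appears to count code points rather than bytes or user-perceived characters.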


(I've been a bit fascinated by this off and on today.)

I hadn't done this earlier, but a simple rex command can be used to decompose the field value into its component code points:

| makeresults

| eval _raw="இடும்பைக்கு"

| rex max_match=0 "(?<tmp>.)"

| _raw | _time | tmp |

| இடும்பைக்கு | 2024-01-07 18:04:18 | இ ட ு ம ் ப ை க ் க ு |

Determining the Unicode category requires a lookup against a Unicode database, a subset of which I've attached as tamil_unicode_block.csv converted to pdf. The general_category field determines whether a code point is a mark (M*):

| makeresults

| eval _raw="இடும்பைக்கு"

| rex max_match=0 "(?<char>.)"

| lookup tamil_unicode_block.csv char output general_category

| eval length=mvcount(mvfilter(NOT match(general_category, "^M")))

| _raw | _time | char | general_category | length |

| இடும்பைக்கு | 2024-01-07 21:41:41 | இ ட ு ம ் ப ை க ் க ு | Lo Lo Mc Lo Mn Lo Mc Lo Mn Lo Mc | 6 |

I don't know if this is the correct way to count Unicode "characters," but libraries do use the Unicode character database (see https://www.unicode.org/reports/tr44/) to determine the general category of code points. Splunk would have access to this functionality via e.g. libicu.


Hi @tscroggins and all,

I tried to download tamil_unicode_block.csv, but gave up after 20 minutes.

I created tamil_unicode_block.csv myself from your PDF file and uploaded it to Splunk.

But the rex-based counting still does not work as I expected. Could you please help me count the characters? Thanks.

sample event -

இடும்பைக்கு இடும்பை படுப்பர் இடும்பைக்கு

இடும்பை படாஅ தவர்

Background: my idea is to index the Tamil-language Thirukkural in Splunk and run some analytics on it.

Each event is two lines containing exactly seven words.

Onboarding details are available in a YouTube video on the @siemnewbies channel. (I won't post the link here, as it may look like marketing.) I run this channel, which focuses on Splunk and SIEM newbies.

Best Regards,

Sekar


Hi @inventsekar,

There's a much simpler solution! The regular expression \X token will match any Unicode grapheme. Combined with a lookahead to match only non-whitespace characters, we can extract and count each grapheme:

| makeresults

| eval _raw="இடும்பைக்கு இடும்பை படுப்பர் இடும்பைக்கு
இடும்பை படாஅ தவர்"

| rex max_match=0 "(?<char>(?=\\S)\\X)"

| eval length=mvcount(char)

length = 31

| makeresults

| eval _raw="இடும்பைக்கு"

| rex max_match=0 "(?<char>(?=\\S)\\X)"

| eval length=mvcount(char)

length = 6

We can condense that to a single eval expression:

| makeresults

| eval _raw="இடும்பைக்கு இடும்பை படுப்பர் இடும்பைக்கு
இடும்பை படாஅ தவர்"

| eval length=len(replace(replace(_raw, "(?=\\S)\\X", "x"), "\\s", ""))

length = 31

You can then use the eval expression in a macro definition and call the macro directly:

| makeresults

| eval _raw="இடும்பைக்கு இடும்பை படுப்பர் இடும்பைக்கு
இடும்பை படாஅ தவர்"

| eval length=`num_graphemes(_raw)`

To count whitespace characters as well, remove (?=\S) from the regular expression, along with the outer replace that strips whitespace:

| makeresults

| eval _raw="இடும்பைக்கு இடும்பை படுப்பர் இடும்பைக்கு
இடும்பை படாஅ தவர்"

| eval length=len(replace(_raw, "\\X", "x"))

length = 37

Your new macro would then count each Unicode grapheme, including whitespace characters.
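The same two counts can be cross-checked outside Splunk. Python's standard re module has no \X token, so the sketch below swaps in a rough approximation: each base code point is grouped with the combining marks (category M*) that follow it. This is not the full Unicode grapheme cluster algorithm that \X implements, and the function names are made up for illustration.

```python
import unicodedata

def approx_graphemes(text):
    """Group each base code point with the combining marks that follow it."""
    clusters = []
    for ch in text:
        if clusters and unicodedata.category(ch).startswith("M"):
            clusters[-1] += ch  # attach the mark to the preceding base
        else:
            clusters.append(ch)
    return clusters

def count_graphemes(text, include_whitespace=False):
    """Count approximate graphemes, optionally skipping whitespace clusters."""
    clusters = approx_graphemes(text)
    if include_whitespace:
        return len(clusters)
    return sum(1 for cluster in clusters if not cluster.isspace())

kural = "இடும்பைக்கு இடும்பை படுப்பர் இடும்பைக்கு\nஇடும்பை படாஅ தவர்"
print(count_graphemes(kural))
print(count_graphemes(kural, include_whitespace=True))
```

For this sample the approximation agrees with the rex results above (31 without whitespace, 37 with it). It can diverge from \X on other input, for example on "\r\n" pairs or emoji sequences.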


Hi @tscroggins

May I know how this works, please?

(?<char>(?=\\S)\\X)

Google told me that \\X matches a Unicode character. Nice. But may I know whether you know this from Perl, Python regex, or somewhere else?

And may I know what the "?=\\S" does, please?

Sekar


The "\\" sequence is a double escape.

It is used because the regular expression is provided here as a string parameter to a command.

In SPL, strings can contain some special characters, which can be escaped with a backslash. Since the backslash is used to escape other characters, it needs to be escaped itself. So if you type in "\\", it effectively becomes a string consisting of a single backslash. Therefore you have to be careful when testing regexes with the rex command and later moving those regexes to config files such as props/transforms, since in props/transforms you usually don't have to escape the regexes (unless you put them as string arguments for functions called using INGEST_EVAL).

So - to sum up - your

(?<char>(?=\\S)\\X)

put as a string argument will be effectively a

(?<char>(?=\S)\X)

regex when unescaped and called as a regex.

And this one you can of course test on regex101.com 🙂
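The same double-escape effect exists in most languages with quoted string literals. The sketch below uses Python purely as an analogy. Its escaping rules are similar to, but not identical with, SPL's, and the pattern is a simplified stand-in because Python's re module lacks \X.

```python
import re

# Written with doubled backslashes, as in a quoted SPL string argument.
escaped = "(?=\\S)\\w"
# Written as a raw string: this is what the regex engine actually receives.
unescaped = r"(?=\S)\w"

print(escaped == unescaped)        # the two spellings are the same pattern
print(len("\\S"))                  # one backslash plus "S"
print(re.findall(escaped, "a b"))  # the engine sees \S and \w, not \\S and \\w
```

The string-literal layer removes one level of backslashes before the regex engine ever sees the pattern, which is why the doubled form is needed only where the regex is passed as a quoted string.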


Hi @inventsekar,

Note that I use \\ to escape backslashes in Splunk strings, but Splunk isn't strict about escape characters in regular expressions. You may see examples with and without C-style escape sequences. The actual expression is:

(?<char>(?=\S)\X)

(?=...) is a positive lookahead group, and \S matches any non-whitespace character. The expression (?=\S) means "continue only if the next character isn't a whitespace character"; the lookahead itself consumes nothing.

The expression (?=\S)\X therefore matches any Unicode grapheme, \X, that doesn't start with a whitespace character.

You may prefer this expression to match any Unicode character that is also a word character, \w, which would exclude European-style punctuation:

(?<char>(?=\w)\X)

Splunk supports Perl-compatible Regular Expressions (PCRE). Note that Splunk uses the PCRE2 library internally but does not support PCRE2 functionality.
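The lookahead behaviour is easy to try in any PCRE-like engine. Here is a small sketch in Python, with the caveat that Python's re module is not PCRE and has no \X, so "." (with DOTALL) stands in for it. The sample text is made up.

```python
import re

text = "ab, c!\n"

# (?=\S) checks the next character without consuming it; "." then consumes it.
non_space = re.findall(r"(?=\S).", text, flags=re.DOTALL)

# (?=\w) is stricter: punctuation fails the lookahead as well.
word_only = re.findall(r"(?=\w).", text, flags=re.DOTALL)

print(non_space)
print(word_only)
```

With DOTALL, "." alone would also match the space and the newline. The lookahead is what filters them out, and switching \S to \w additionally drops the comma and the exclamation mark.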


Hi @inventsekar,

The PDF appears to have modified the code points! I prefer to use SPL because it doesn't usually require elevated privileges; however, it might be simpler to use an external lookup script. The lookup command treats fields containing only whitespace as empty/null, so the lookup will only identify non-whitespace characters.

We'll need to create a script and a transform, which I've encapsulated in an app:

$SPLUNK_HOME/etc/apps/TA-ucd/bin/ucd_category_lookup.py (this file should be readable and executable by the Splunk user, i.e. have at least mode 0500)

#!/usr/bin/env python
import csv
import sys
import unicodedata


def main():
    if len(sys.argv) != 3:
        print("Usage: python ucd_category_lookup.py [char] [category]")
        sys.exit(1)

    # Field names are passed on the command line via external_cmd.
    charfield = sys.argv[1]
    categoryfield = sys.argv[2]

    # Lookup rows arrive as CSV on stdin and are returned as CSV on stdout.
    r = csv.DictReader(sys.stdin)
    w = csv.DictWriter(sys.stdout, fieldnames=r.fieldnames)
    w.writeheader()

    for result in r:
        # Only look up rows that have a non-empty character value.
        if result[charfield]:
            result[categoryfield] = unicodedata.category(result[charfield])
        w.writerow(result)


main()

$SPLUNK_HOME/etc/apps/TA-ucd/default/transforms.conf

[ucd_category_lookup]
external_cmd = ucd_category_lookup.py char category
fields_list = char, category
python.version = python3

$SPLUNK_HOME/etc/apps/TA-ucd/metadata/default.meta

[]
access = read : [ * ], write : [ admin, power ]
export = system

With the app in place, we count 31 non-whitespace characters using the lookup:

| makeresults

| eval _raw="இடும்பைக்கு இடும்பை படுப்பர் இடும்பைக்கு
இடும்பை படாஅ தவர்"

| rex max_match=0 "(?<char>.)"

| lookup ucd_category_lookup char output category

| eval length=mvcount(mvfilter(NOT match(category, "^M")))

Since this doesn't depend on a language-specific lookup, it should work with text from the Kural or any other source with characters or glyphs represented by Unicode code points.

We can add any logic we'd like to an external lookup script, including counting characters of specific categories directly:

| makeresults

| eval _raw="இடும்பைக்கு இடும்பை படுப்பர் இடும்பைக்கு
இடும்பை படாஅ தவர்"

| lookup ucd_count_chars_lookup _raw output count

If you'd like to try this approach, I can help with the script, but you may enjoy exploring it yourself first.
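For readers who want a starting point, a counting lookup along those lines might look like the sketch below. It is not the script from this thread: the file name ucd_count_chars_lookup.py, the function names, and the choice to count code points that are neither marks nor whitespace are all assumptions. It would also need its own transforms.conf stanza, presumably modeled on the ucd_category_lookup stanza, with _raw and count as the fields.

```python
import csv
import io
import sys
import unicodedata

def count_chars(text):
    """Count code points that are neither combining marks nor whitespace."""
    return sum(
        1 for ch in text
        if not ch.isspace() and not unicodedata.category(ch).startswith("M")
    )

def run_lookup(infile, outfile, textfield, countfield):
    """Read lookup rows as CSV, fill in the count column, write CSV back."""
    reader = csv.DictReader(infile)
    writer = csv.DictWriter(outfile, fieldnames=reader.fieldnames)
    writer.writeheader()
    for row in reader:
        if row[textfield]:
            row[countfield] = count_chars(row[textfield])
        writer.writerow(row)

# Hypothetical entry point: field names arrive as two command-line arguments.
if __name__ == "__main__" and len(sys.argv) == 3:
    run_lookup(sys.stdin, sys.stdout, sys.argv[1], sys.argv[2])
```

Keeping the counting logic in count_chars, separate from the CSV plumbing, makes it possible to test the function with io.StringIO objects before installing anything in Splunk.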


Excellent, @tscroggins! (If the community allowed it, I would have added more than one upvote. Thanks a ton!)

(I should start focusing on Python more. Python really solves "big issues" just like that.)

Sekar


Normalization may not help for Tamil, which doesn't appear to have canonically equivalent composed forms of most characters in Unicode. I.e., the string இடும்பைக்கு can only (?) be represented in Unicode using 11 code points.
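This can be checked with any Unicode normalization library. A minimal sketch using Python's standard unicodedata module follows, with a Latin "e" plus combining acute accent added as a contrasting case where normalization does change the count.

```python
import unicodedata

# இடும்பைக்கு written as explicit code points.
word = "\u0b87\u0b9f\u0bc1\u0bae\u0bcd\u0baa\u0bc8\u0b95\u0bcd\u0b95\u0bc1"

for form in ("NFC", "NFD"):
    print(form, len(unicodedata.normalize(form, word)))

# Contrast: "e" + COMBINING ACUTE ACCENT composes to a single code point in NFC.
latin = "e\u0301"
print(len(latin), len(unicodedata.normalize("NFC", latin)))
```

Both forms leave the Tamil word at 11 code points, while the Latin pair shrinks from 2 to 1. That suggests normalizing before calling len() would fix some scripts but not this one.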


OK. So it calls for a docs clarification at least. I can understand that it can be difficult, if not next to impossible, to count characters reliably in the case of such combined ones. But the ambiguous wording of the description should indeed be clarified. OTOH, I wonder how TRUNCATE would behave if it hit the middle of such a "multicharacter" character.
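The sketch below does not show what Splunk's TRUNCATE actually does, which this thread leaves open. It only illustrates, in Python, the two ways a byte-limit cut can land badly on this word: inside a UTF-8 sequence, or between a base letter and its vowel sign. The function names are made up for illustration.

```python
# இடும்பைக்கு written as explicit code points.
word = "\u0b87\u0b9f\u0bc1\u0bae\u0bcd\u0baa\u0bc8\u0b95\u0bcd\u0b95\u0bc1"
data = word.encode("utf-8")  # 11 code points, 3 bytes each

def truncate_bytes(raw, limit):
    """Cut at a byte limit and silently drop an incomplete trailing sequence."""
    return raw[:limit].decode("utf-8", errors="ignore")

def splits_code_point(raw, limit):
    """Report whether a cut at `limit` bytes lands inside a UTF-8 sequence."""
    try:
        raw[:limit].decode("utf-8")
        return False
    except UnicodeDecodeError:
        return True

print(splits_code_point(data, 8))  # the cut lands inside the third code point
print(truncate_bytes(data, 8))     # only the two whole code points survive
print(splits_code_point(data, 6))  # a clean cut at a code point boundary...
print(truncate_bytes(data, 6))     # ...that still separates ட from its vowel sign
```

A cut at 6 bytes yields valid UTF-8 but turns the grapheme டு into a bare ட, so a truncation that respects code points can still change the text.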


Yes, @PickleRick, the docs need a bit more detail.

I see the docs have not been updated yet (screenshot attached), even after my idea request (https://ideas.splunk.com/ideas/EID-I-2176) and my bug report to Splunk. (I spent a few hours on multiple conference calls with Splunk Support, but with no fruitful results.)

(New readers, could you please spend a minute and upvote idea 2176, so at least I can tell my friends that I found a bug in Splunk and suggested an idea worth 100 upvotes 😉)

OK, agreed that it's not a showstopper for Splunk.

- I have submitted the docs feedback just now.

- Next steps: for around 3 or 4 months I worked on creating an app (following @tscroggins's superb suggestions), but I got stuck at the app packaging stage.

- I am working on this "small task" again now and will update you all on the progress soon. Thanks.

Sekar


This was a fun thread! I upvoted https://ideas.splunk.com/ideas/EID-I-2176.