
- knowledge bundle


I haven't deleted that information, but I would guess it would get replicated again.

Check here for more information on distributed search knowledge bundles and how to limit or mount them: http://docs.splunk.com/Documentation/Splunk/5.0.2/Deploy/Whatisdistributedsearch#What_search_heads_s...


You may want to look at reducing the bundle size on the search heads first by using a replication blacklist. Just deleting the bundles will only temporarily resolve disk usage problems, because they will get replicated again. A blacklist limits what is sent to the search peers (indexers) in the knowledge bundle.

http://docs.splunk.com/Documentation/Splunk/6.2.3/DistSearch/Limittheknowledgebundlesize

Since bin directories, jar files, and lookup files do not need to be replicated to search peers, you could blacklist them in distsearch.conf.

On each search head, edit:

$SPLUNK_HOME/etc/system/local/distsearch.conf

[replicationBlacklist]

The ellipsis wildcard ... recurses through directories and subdirectories to match.

noBinDir = .../bin/*

jarAndLookups = (jar|lookups)
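To get a feel for which paths patterns like these catch, here is a minimal Python sketch. It assumes the semantics the distsearch.conf spec describes for these patterns: they are regex-based, '...' matches anything (including '/'), '*' matches anything except a directory separator, and '.' is a literal dot. It also assumes a pattern must match the whole path relative to $SPLUNK_HOME/etc; Splunk's real anchoring may differ (the sketch does not try to reproduce how a pattern such as (jar|lookups) is applied). The function names are hypothetical, so treat this as an approximation, not a test of what Splunk will actually replicate.

```python
import re


def splunk_pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a replicationBlacklist-style pattern into a Python regex (approximation)."""
    out = []
    i = 0
    while i < len(pattern):
        if pattern.startswith("...", i):
            out.append(".*")              # '...' matches anything, including '/'
            i += 3
        elif pattern[i] == "*":
            out.append("[^/]*")           # '*' matches anything except a directory separator
            i += 1
        elif pattern[i] == ".":
            out.append(r"\.")             # a lone '.' is a literal dot, not "any character"
            i += 1
        elif pattern[i] == "\\" and i + 1 < len(pattern):
            out.append(pattern[i:i + 2])  # keep regex escapes such as '\d' intact
            i += 2
        else:
            out.append(pattern[i])        # '(', '|', letters, ... are passed through as regex
            i += 1
    return re.compile("".join(out))


def is_blacklisted(path: str, patterns: dict) -> bool:
    """True if the path (relative to $SPLUNK_HOME/etc) fully matches any pattern (assumed anchoring)."""
    return any(splunk_pattern_to_regex(p).fullmatch(path) for p in patterns.values())


if __name__ == "__main__":
    blacklist = {"noBinDir": ".../bin/*", "blacklist_lookups1": "apps/myApp1/lookups/*"}
    for path in ["apps/search/bin/script.py",
                 "apps/myApp1/lookups/users.csv",
                 "apps/myApp2/lookups/users.csv"]:
        print(path, is_blacklisted(path, blacklist))
```

Under these assumptions, .../bin/* catches files directly inside any bin directory, while a per-app pattern such as apps/myApp1/lookups/* leaves other apps' lookups alone.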

Note: if you use the lookup command in a search against a lookup file that is no longer replicated, give it the option local=true so the lookup runs on the search head.

You can then stop Splunk on each indexer (one at a time), remove the knowledge bundles in $SPLUNK_HOME/var/run/searchpeers, and start Splunk again (the entire contents of $SPLUNK_HOME/var/run/searchpeers can be deleted). The search heads will redistribute the new, reduced-size knowledge bundles. Each indexer keeps 5 knowledge bundles per search head. Another alternative is to add more disk space on the indexers.
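Before and after the cleanup it helps to measure how much space the bundles actually take. This is a minimal Python sketch, roughly comparable to running du on the directory; the helper name dir_size_bytes is hypothetical, and defaulting SPLUNK_HOME to /opt/splunk is an assumption taken from the paths used elsewhere in this thread.

```python
import os


def dir_size_bytes(root: str) -> int:
    """Total size of regular files under root (symlinks are not followed)."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                if not os.path.islink(path):
                    total += os.path.getsize(path)
            except FileNotFoundError:
                # A bundle can be replaced while we walk; skip files that vanished.
                pass
    return total


if __name__ == "__main__":
    # Assumption: SPLUNK_HOME defaults to /opt/splunk when the variable is not set.
    splunk_home = os.environ.get("SPLUNK_HOME", "/opt/splunk")
    peers = os.path.join(splunk_home, "var", "run", "searchpeers")
    print(f"{peers}: {dir_size_bytes(peers) / 1024 / 1024:.1f} MB")
```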


To update an old post on lookup files in the knowledge bundle:

- If the lookup file is used in an automatic lookup, it needs to be included in the knowledge bundle and sent to the indexers, so don't blacklist it.

- If the lookup file is referenced by an accelerated data model, it needs to be included in the knowledge bundle and sent to the indexers, so don't blacklist it.


Thanks a lot, rphillips_splunk!

We did exactly as you said, and it is OK now.

That is, we updated the file distsearch.conf

in /opt/splunk/etc/system/local/

by adding this stanza:

[replicationBlacklist]

blacklist_lookups1 = apps/myApp1/lookups/*

blacklist_lookups2 = apps/myApp2/lookups/*

...

blacklist_bin = apps//bin/**

We did it this way (per app rather than for all Splunk apps) because several files in a particular app really needed to be transported in the knowledge bundle from the search head in question to our indexer.

These knowledge bundles were really blocking alerting on our search head, so this was a critical issue for us.


Below are some guidelines for deciding whether it is safe to blacklist a lookup from the knowledge bundle:

1.) The knowledge bundles are created on the SH and stored in $SPLUNK_HOME/var/run/.

In a search head cluster, the knowledge bundle is sent from the SHC captain to the indexers. On the peers (aka indexers), the bundle will be found in:

/opt/splunk/var/run/searchpeers

On the SHC captain node, we can use the following commands to list the contents of the bundle without extracting it and see which lookup files are the largest. Look at the most recent full bundle by date:

ls -lh

tar -tvf <name>.bundle | grep csv | sort -nk3

The largest files will show up at the bottom of the list.
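Here is a Python equivalent of the tar | grep | sort pipeline above, using the standard tarfile module. Like tar -t, it only reads the archive index and extracts nothing. It assumes the .bundle file is a tar archive that tarfile can open (as the tar -tvf command implies); the helper name largest_csv_members is hypothetical. Unlike the shell pipeline, it lists the largest files first and only counts members whose names end in .csv.

```python
import sys
import tarfile


def largest_csv_members(bundle_path: str, top: int = 10) -> list:
    """Return (size_in_bytes, member_name) for the largest .csv members, largest first."""
    with tarfile.open(bundle_path, mode="r:*") as tar:  # "r:*" also opens compressed tars
        sizes = [(m.size, m.name) for m in tar.getmembers()
                 if m.isfile() and m.name.lower().endswith(".csv")]
    sizes.sort(reverse=True)
    return sizes[:top]


if __name__ == "__main__":
    # Usage: python largest_lookups.py <name>.bundle
    for size, name in largest_csv_members(sys.argv[1]):
        print(f"{size / 1024 / 1024:10.2f} MB  {name}")
```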

2.) Take the 5-10 largest lookup CSV files and, before blacklisting them, confirm that each lookup file is not used in an automatic lookup. If it is, the indexers need the lookup file and it must be included in the bundle replicated from SH --> indexer, so do not blacklist lookups that are used by automatic lookups.

You can check this with the following search. Lookup files that are configured for automatic lookups will be defined in the "filename" attribute of transforms.conf on the SH (don't blacklist these from the knowledge bundle):

| rest /services/configs/conf-transforms splunk_server=<shc captain> | search filename=* | table splunk_server eai:acl.app title filename id | rename eai:acl.app as app title as stanza

To check specific candidate files, run the search below. If a file appears in its results, do not blacklist it.

| rest splunk_server=<shc captain> /services/configs/conf-transforms | search filename=* | table splunk_server eai:acl.app title filename id | rename eai:acl.app as app title as stanza splunk_server as shc_captain | search filename=*file1.csv* OR filename=*file2.csv*

If you are validating a standalone SH that is not in a SH cluster, you can run this search on that SH and use splunk_server=local.
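If you prefer to check configuration text offline, the same "does any transforms.conf stanza set filename to this file?" test can be sketched in Python with the standard configparser module. This is an approximation under stated assumptions: the input is plain .conf text (for example, the merged output of splunk btool transforms list, without --debug), there are no settings before the first stanza, and values do not use backslash line continuations. The helper names are hypothetical.

```python
import configparser


def transform_lookup_files(transforms_conf_text: str) -> dict:
    """Map each transforms.conf stanza that sets 'filename' to that file name."""
    parser = configparser.ConfigParser(
        interpolation=None,  # Splunk values may contain '%' and '$'
        strict=False,        # tolerate duplicate stanzas/keys; later values win
        delimiters=("=",),   # only '=' separates key and value in .conf files
    )
    parser.read_string(transforms_conf_text)
    return {
        stanza: parser.get(stanza, "filename").strip()
        for stanza in parser.sections()
        if parser.has_option(stanza, "filename")
    }


def unsafe_to_blacklist(candidates, transforms_conf_text: str) -> list:
    """Candidate CSV names that some transforms.conf stanza references (keep these in the bundle)."""
    referenced = set(transform_lookup_files(transforms_conf_text).values())
    return sorted(c for c in candidates if c in referenced)
```

Like the REST search above, this is deliberately conservative: any file referenced by a file-based lookup definition is flagged, whether or not a props.conf automatic lookup actually uses it.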

3.) If the lookup file is referenced in a data model acceleration search, it needs to be sent to the indexers in the bundle, so do not blacklist it in distsearch.conf on the SH.

This only applies to data models that are accelerated and whose search includes the lookup.

How to determine if a datamodel search is using a lookup:

a.) On the SH where the DM is accelerated, temporarily set the DMA permissions to global under Settings > Data Models > (switch app context to All) > for DMs with a yellow lightning bolt > Edit Permissions > Global.

We do this so that we can see all accelerated DMs at this endpoint: /services/datamodel/acceleration

b.) Log in to the web UI of a SHC member and run this search:

| rest splunk_server=local /services/datamodel/acceleration | fields title eai:acl.app search | rename eai:acl.app as app

This will show any data models that are accelerated, along with the underlying search that runs when the DMA search runs in the background (every 5 minutes by default).

c.) We want to check whether the search used by the DM contains any lookups, or any macros that contain lookups.

Copy the entire string of the 'search' field from the output of the search in item 3b above, then paste and run it as an ad hoc search over the last 15 minutes. In the Job Inspector, look at the search job properties:

Does the value of the normalizedSearch property contain the term *lookup*?

d.) If not, you are good to go: the accelerated data model isn't using any lookups.

e.) If so, identify the lookup and make sure you don't blacklist its .csv file from the knowledge bundle.
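Steps c.) through e.) can also be scripted once you have copied the normalizedSearch value. This Python sketch is a best-effort heuristic with hypothetical helper names: mentions_lookup mirrors the *lookup* check above, and lookup_tables pulls out the table names passed to the lookup command (skipping options such as local=true). A returned name may be a lookup definition from transforms.conf rather than a .csv file, in which case you still need to map it to its filename.

```python
import re

# "| lookup [option=value ...] <table>"; requiring "lookup" right after the pipe
# means inputlookup/outputlookup are not reported as lookup-command tables.
LOOKUP_CMD = re.compile(r"\|\s*lookup\s+(?:\w+=\S+\s+)*([^\s|\]]+)", re.IGNORECASE)


def mentions_lookup(normalized_search: str) -> bool:
    """Does the search text contain the term 'lookup' anywhere (the Job Inspector check)?"""
    return "lookup" in normalized_search.lower()


def lookup_tables(normalized_search: str) -> list:
    """Best-effort list of table names passed to the lookup command."""
    return LOOKUP_CMD.findall(normalized_search)
```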

4.) To blacklist the lookups from the knowledge bundle, apply the following to the search heads:

distsearch.conf

[replicationBlacklist]

no_lookup1 = apps/<app1>/lookups/file1.csv

no_lookup2 = apps/<app2>/lookups/file2.csv

In a SHC, this would be applied on the SHC deployer in the shcluster apps directory and pushed to the SHC members.

For example:
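If you end up with a longer candidate list, the stanza can be generated rather than typed. This Python sketch (hypothetical helper name) takes (app, filename) pairs from step 1.) plus the set of filenames that steps 2.) and 3.) showed must stay in the bundle, and emits entries in the same no_lookup<N> = apps/<app>/lookups/<file> form used above. Protection is keyed on the bare filename, which is deliberately conservative: a protected name is skipped in every app.

```python
def build_replication_blacklist(candidates, protected) -> str:
    """candidates: iterable of (app, filename); protected: filenames that must stay in the bundle."""
    lines = ["[replicationBlacklist]"]
    n = 0
    for app, filename in candidates:
        if filename in protected:
            continue  # used by an automatic lookup or an accelerated data model
        n += 1
        lines.append(f"no_lookup{n} = apps/{app}/lookups/{filename}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_replication_blacklist(
        [("app1", "file1.csv"), ("app2", "users.csv"), ("app2", "file2.csv")],
        protected={"users.csv"},
    ))
```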

/opt/splunk/etc/shcluster/apps/configuration_updates/local/

Run this from the deployer to push the shcluster bundle to the SHC members:

./splunk apply shcluster-bundle -target https://anySHCmember.com:8089 -preserve-lookups true -auth admin:pwd

Upon the next configuration change on the SH, a new knowledge bundle will be created and sent to the indexers.

You can run the following search on the captain to see the bundles on the captain and their sizes:

| rest splunk_server=local /services/search/distributed/bundle-replication-files | convert ctime(timestamp) as timestamp| eval sizeMB= round(size/1024/1024,2) | table splunk_server filename location size sizeMB timestamp title| rename splunk_server as "shc_captain" title as "bundle version" | join shc_captain [| rest splunk_server=local /services/configs/conf-distsearch | search maxBundleSize=* | table splunk_server maxBundleSize | rename splunk_server as shc_captain maxBundleSize as "maxBundleSize (distsearch.conf)"]
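The arithmetic in that search is easy to reuse elsewhere, for example to compare a bundle against the configured limit. This Python sketch (hypothetical helper name) applies the same sizeMB formula and, assuming maxBundleSize is expressed in MB as the distsearch.conf spec describes, reports how close the bundle is to that limit.

```python
def bundle_size_report(size_bytes: int, max_bundle_size_mb: float) -> tuple:
    """Return (sizeMB, percent_of_maxBundleSize, over_limit)."""
    size_mb = round(size_bytes / 1024 / 1024, 2)   # same as eval sizeMB=round(size/1024/1024,2)
    limit_bytes = max_bundle_size_mb * 1024 * 1024  # assumes maxBundleSize is in MB
    pct = round(100 * size_bytes / limit_bytes, 1)
    return size_mb, pct, size_bytes > limit_bytes
```

For example, a 1 GiB bundle (1073741824 bytes) checked against maxBundleSize = 2048 gives (1024.0, 50.0, False).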

All indexers initially need a full bundle. A full bundle replication event can be seen in the indexer's splunkd_access.log file:

10.10.10.10 - splunk-system-user [23/Jul/2020:13:44:31.950 -0400] "POST /services/receivers/bundle/rplinux04.sv.splunk.com HTTP/1.1" 200 19 - - - 3563ms

Subsequent changes at the SH that require a bundle replication will be delta replications (only the changes are sent), which can be seen on the indexer as:

10.10.10.10 - splunk-system-user [23/Jul/2020:13:33:22.251 -0400] "POST /services/receivers/bundle-delta/rplinux04.sv.splunk.com HTTP/1.1" 200 2132 - - - 436ms
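To tally these events outside Splunk (for example from a copied splunkd_access.log), the same extraction as the rex used in the searches in this post can be sketched in Python. The regex assumes the line layout of the two sample events above; the helper names are hypothetical, and only the bundle and bundle-delta receiver endpoints are counted.

```python
import re
from collections import Counter

ACCESS_RE = re.compile(
    r'^(?P<clientip>\S+) \S+ (?P<user>\S+) \[(?P<ts>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) /services/receivers/(?P<type>[^/\s]+)/(?P<sh_id>\S+) HTTP/[\d.]+" '
    r'(?P<status>\d+) (?P<bytes>\d+).*\s(?P<spent_ms>\d+)ms$'
)
KINDS = {"bundle": "full", "bundle-delta": "delta"}


def parse_bundle_event(line: str):
    """Return a dict for a bundle replication POST, or None for any other line."""
    m = ACCESS_RE.match(line.strip())
    if not m or m["method"] != "POST" or m["type"] not in KINDS:
        return None
    event = m.groupdict()
    event["kind"] = KINDS[event["type"]]
    for key in ("status", "bytes", "spent_ms"):
        event[key] = int(event[key])
    return event


def count_by_type_sh(lines) -> Counter:
    """Count events per '<SH_id>::<type>', like eval type_SH=SH_id."::".type."""
    counts = Counter()
    for line in lines:
        event = parse_bundle_event(line)
        if event:
            counts[f"{event['sh_id']}::{event['type']}"] += 1
    return counts
```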

How often changes occur on the SHs determines how often bundles are replicated. You can see a timechart of this activity with the following search:

Note: insert a host filter that matches your indexers.

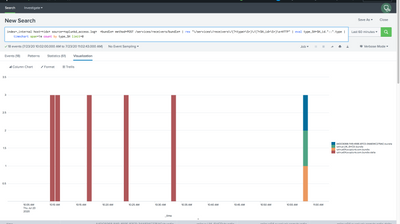

index=_internal host=<*idx*> source=*splunkd_access.log* *bundle* method=POST /services/receivers/bundle* | rex "\/services\/receivers\/(?<type>\S+)\/(?<SH_id>\S+)\s+HTTP" | eval type_SH=SH_id."::".type | timechart count by type_SH limit=0

In the chart above, we can see that there were several delta replications as well as full bundle replications from various SHs that are peered to the indexers. The GUID is the id of the search head cluster whose SHC captain sent the replication; it is found in the [shclustering] stanza of server.conf on the SHC members:

[root@rplinux08 bin]# ./splunk btool server list --debug shclustering | grep id

/opt/splunk/etc/system/local/server.conf id = A4DC6068-1149-459E-87CD-34A834C275AC

Or, for a table view of the bundle replication events:

index=_internal host=*idx* source=*splunkd_access.log* *bundle* method=POST /services/receivers/bundle* | rex "\/services\/receivers\/(?<type>\S+)\/(?<SH_id>\S+)\s+HTTP" | table _time host method type SH_id bytes spent clientip status

This process of tuning your knowledge bundle needs to be repeated for each unique SHC and each standalone SH, and may need to be revisited periodically as the environment changes.


Hi rphillips,

Great tip!

Just one question: if I blacklist the lookup tables from being replicated to the search peers, will it impact search performance on the search heads? I would think that searches containing the lookups will now run slower, because the lookup task would no longer be distributed among the indexers, since they won't have those lookup tables in the searchpeers bundle anymore. Is this assumption correct?

Actually, I have a very big lookup table that is created/appended by an outputlookup search, and I noticed that since I started using that outputlookup search to populate my tables, disk usage on the indexers has gone up (especially in the searchpeers folder). Hence I am thinking of just blacklisting the lookup tables from being sent to the search peers.

Any suggestions?

Thanks!

Fatema.


I'm wondering the same as @fatemabwudel. What would be the performance tradeoffs of keeping the lookup files exclusively on the search head?

Thank you in advance.


- The lookup command is a distributable streaming command when local=false, which is the default setting. This means the lookup will happen on the remote peers, and running the lookup in parallel on each peer has benefits over running it only on the SH.

- It only becomes an orchestrating command when local=true. This forces the lookup command to run on the search head and not on any remote peers.

- To determine the difference in search performance, compare the search run time using local=false vs. local=true and check the Job Inspector.


For a SHC, you would see 5 bundles from the SHC under the cluster id, not 5 bundles per SHC member. Replication of the bundles from the SHC is done by the SHC captain.

To determine the SHC id you can run the following command from any SHC member:

From $SPLUNK_HOME/bin:

./splunk show shcluster-status | grep id

You will see the bundles by this id on your indexers in $SPLUNK_HOME/var/run/searchpeers
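To see how many bundles each origin (search head or SHC id) currently has on an indexer, this read-only Python sketch groups bundle file names and lists the ones beyond the newest five. The naming scheme it parses, <origin>-<epoch>.bundle, is an assumption for illustration, so check it against what you actually see in $SPLUNK_HOME/var/run/searchpeers; the helper names are hypothetical. It deliberately deletes nothing, since a bundle may still be in use by a running search.

```python
import re
from collections import defaultdict

# Hypothetical naming: "<origin>-<epoch>.bundle"; origin may itself contain '-' (e.g. a GUID).
BUNDLE_RE = re.compile(r"^(?P<origin>.+)-(?P<epoch>\d+)\.bundle$")


def bundles_by_origin(filenames) -> dict:
    """Group bundle file names by origin, newest (highest epoch) first."""
    groups = defaultdict(list)
    for name in filenames:
        m = BUNDLE_RE.match(name)
        if m:
            groups[m["origin"]].append((int(m["epoch"]), name))
    return {origin: [n for _, n in sorted(items, reverse=True)] for origin, items in groups.items()}


def older_than_newest(filenames, keep: int = 5) -> dict:
    """Per origin, the bundle names beyond the newest `keep` (report only, nothing is deleted)."""
    return {o: names[keep:] for o, names in bundles_by_origin(filenames).items() if len(names) > keep}
```

Typical usage would be older_than_newest(os.listdir(path_to_searchpeers)).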


I needed to investigate this for a customer, so it's convenient you asked this question.

The searchpeers directory retains up to five replicated bundles from each search head sending requests. If you delete them, they will be created again for the next search that needs that set of configurations. So technically you could remove older ones (don't delete anything in use by a running search!), but if it's really a problem you should be looking at mounted bundles instead.
