Uses Transform Field Extraction-Delimiter

Hello,

I am using a Transform-type field extraction, but I am having trouble choosing the delimiter: the fields are not being extracted as expected. Please see the raw event and the parameters I used below. Thank you so much; I greatly appreciate your support.

Raw Event

"time_stamp":"2021-08-21 19:14:32 EST","user_type":"TESTUSER","file_source_cd":"1","ip_addr":"103.91.224.65","session_id":"ABSkbE7IWb3ZU52VZk=","tsn":"490937st,"request_id":"3ee0a-0c1712196e7-317f2700-d751c8e","user_id":"EASA68A7-780DEA22","return_cd":"10","app_name":"ALAO","event_type":"TEST_AUTH","event_id":"VIEW_LIST_RESPONSE","vardata":"[]","uri":https://wap-prod- /api/web-apps /authorizations,"error_msg":""

Parameters used:

How about this?

props.conf

[<your sourcetype>]

REPORT-xmlext = field-extractor

transforms.conf

[field-extractor]

REGEX = \"*([^\"]+)\":\"([^\"|:]*)\",*|\"(time_stamp)\":\"([^\"]+)|\"(uri)\":([^,]+)

FORMAT = $1::$2

MV_ADD = true

REPEAT_MATCH = true

If you like it, please mark it as the solution. Thank you!!
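
One way to preview what this REGEX captures before deploying it is to run it repeatedly over the sample event outside Splunk. The sketch below uses Python's `re.finditer` as a stand-in for REPEAT_MATCH; that equivalence is an assumption, and Splunk's PCRE engine and FORMAT handling may differ. The names `EVENT`, `REGEX`, and `repeat_match` are made up for illustration.

```python
import re

# Sample event copied from the question (the unbalanced quote after the
# tsn value is present in the original event).
EVENT = (
    r'"time_stamp":"2021-08-21 19:14:32 EST","user_type":"TESTUSER",'
    r'"file_source_cd":"1","ip_addr":"103.91.224.65",'
    r'"session_id":"ABSkbE7IWb3ZU52VZk=","tsn":"490937st,'
    r'"request_id":"3ee0a-0c1712196e7-317f2700-d751c8e",'
    r'"user_id":"EASA68A7-780DEA22","return_cd":"10","app_name":"ALAO",'
    r'"event_type":"TEST_AUTH","event_id":"VIEW_LIST_RESPONSE",'
    r'"vardata":"[]","uri":https://wap-prod- /api/web-apps /authorizations,'
    r'"error_msg":""'
)

# The REGEX value from transforms.conf above, unchanged.
REGEX = re.compile(
    r'\"*([^\"]+)\":\"([^\"|:]*)\",*|\"(time_stamp)\":\"([^\"]+)|\"(uri)\":([^,]+)'
)


def repeat_match(event):
    """Return (first_group_number, key, value) for every successive match."""
    rows = []
    for m in REGEX.finditer(event):
        # Each alternative fills its own pair of groups: 1-2, 3-4, or 5-6.
        first = next(i for i in (1, 3, 5) if m.group(i) is not None)
        rows.append((first, m.group(first), m.group(first + 1)))
    return rows


rows = repeat_match(EVENT)
for first, key, value in rows:
    print(f"groups {first}-{first + 1}: {key} = {value!r}")
```

In this Python run, `time_stamp` and `uri` land in groups 3-4 and 5-6 rather than 1-2, and the `tsn` value keeps a trailing comma. If Splunk behaves the same way, `FORMAT = $1::$2` would not see those two fields, so it is worth checking them against real data.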

That works when I write props and transforms configuration files. However, I am trying to extract the fields from the Splunk web console, using the menu options under "Settings" and "Fields". How would I write the REGEX there? The REGEX you provided is not grouping the data as expected. Thank you so much, appreciated.

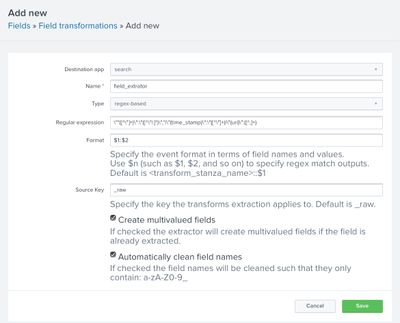

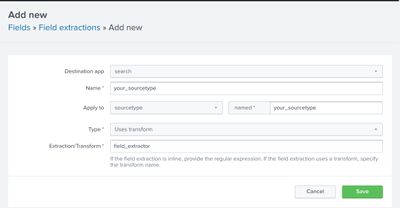

Go to Settings > Fields > Field transformations > New Field Transformation and add this:

Save this. Then, go to Settings > Fields > Field extractions > New Field Extractions and as this:

Try that and let me know how it’s working for you.

Yes, your code and instructions are right for the regex-based option. But I want to use the delimiter-based option, and give the fields names different from those in the events. Thank you so much again, appreciated!

I’m not giving up yet. Is your field layout always the same? The field extraction I wrote before was dynamic, but from what you’re saying I now think the layout is static. If so, try this in Settings > Fields > Field Extractions > Add New Field Extraction, in the regex text box:

\"time_stamp\":\"(?P<field1>[^\"]+)\",\"user_type\":\"(?P<field2>[^\"]+)\",\"file_source_cd\":\"(?P<field3>[^\"]+)\",\"ip_addr\":\"(?P<field4>[^\"]+)\",\"session_id\":\"(?P<field5>[^\"]+)\",\"tsn\":\"(?P<field6>[^,]+)\"*,\"request_id\":\"(?P<field7>[^\"]+)\",\"user_id\":\"(?P<field8>[^\"]+)\",\"return_cd\":\"(?P<field9>[^\"]+)\",\"app_name\":\"(?P<field10>[^\"]+)\",\"event_type\":\"(?P<field11>[^\"]+)\",\"event_id\":\"(?P<field12>[^\"]+)\",\"vardata\":\"(?P<field13>[^\"]+)\",\"uri\":(?P<field14>[^\"]+),\"error_msg\":\"(?P<field15>[^\"]*)\"

I just labeled the fields 1-15. You can call them whatever you want. If this is still not quite right, you could create each one as a separate field extraction (e.g., ,\"error_msg\":\"(?P<field15>[^\"]*)\") so the fields can appear in different orders.

Let me know how this works or doesn’t.
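
As a quick sanity check outside Splunk, the same expression can be run against the sample event with Python's `re` module, which also accepts the `(?P<name>...)` syntax. This is only a sketch: Splunk uses PCRE, so matching there may differ in edge cases, and `EVENT`, `STATIC_REGEX`, and `fields` are illustrative names.

```python
import re

# Sample event copied from the question (the unbalanced quote after the
# tsn value is present in the original event).
EVENT = (
    r'"time_stamp":"2021-08-21 19:14:32 EST","user_type":"TESTUSER",'
    r'"file_source_cd":"1","ip_addr":"103.91.224.65",'
    r'"session_id":"ABSkbE7IWb3ZU52VZk=","tsn":"490937st,'
    r'"request_id":"3ee0a-0c1712196e7-317f2700-d751c8e",'
    r'"user_id":"EASA68A7-780DEA22","return_cd":"10","app_name":"ALAO",'
    r'"event_type":"TEST_AUTH","event_id":"VIEW_LIST_RESPONSE",'
    r'"vardata":"[]","uri":https://wap-prod- /api/web-apps /authorizations,'
    r'"error_msg":""'
)

# The regex from the post above, split over several lines for readability.
STATIC_REGEX = re.compile(
    r'\"time_stamp\":\"(?P<field1>[^\"]+)\",'
    r'\"user_type\":\"(?P<field2>[^\"]+)\",'
    r'\"file_source_cd\":\"(?P<field3>[^\"]+)\",'
    r'\"ip_addr\":\"(?P<field4>[^\"]+)\",'
    r'\"session_id\":\"(?P<field5>[^\"]+)\",'
    r'\"tsn\":\"(?P<field6>[^,]+)\"*,'
    r'\"request_id\":\"(?P<field7>[^\"]+)\",'
    r'\"user_id\":\"(?P<field8>[^\"]+)\",'
    r'\"return_cd\":\"(?P<field9>[^\"]+)\",'
    r'\"app_name\":\"(?P<field10>[^\"]+)\",'
    r'\"event_type\":\"(?P<field11>[^\"]+)\",'
    r'\"event_id\":\"(?P<field12>[^\"]+)\",'
    r'\"vardata\":\"(?P<field13>[^\"]+)\",'
    r'\"uri\":(?P<field14>[^\"]+),'
    r'\"error_msg\":\"(?P<field15>[^\"]*)\"'
)

match = STATIC_REGEX.search(EVENT)
# An empty dict means the event did not fit the static layout.
fields = match.groupdict() if match else {}
for name, value in fields.items():
    print(f"{name} = {value!r}")
```

Renaming a field is just a matter of changing the group name, for example `(?P<client_ip>...)` instead of `(?P<field4>...)`; `client_ip` is a made-up name here.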

Thank you so much, appreciated!!!

Yes field layout always the same and your codes are working as it is written for.

But, my issue using delimiter-based field extraction

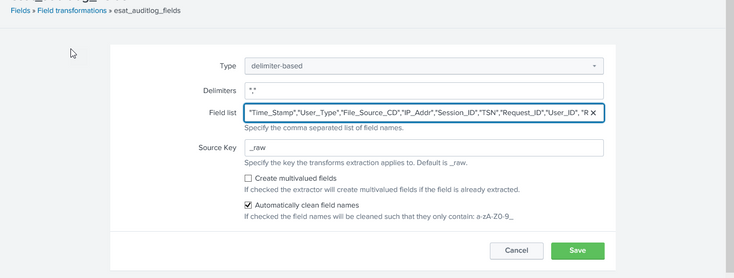

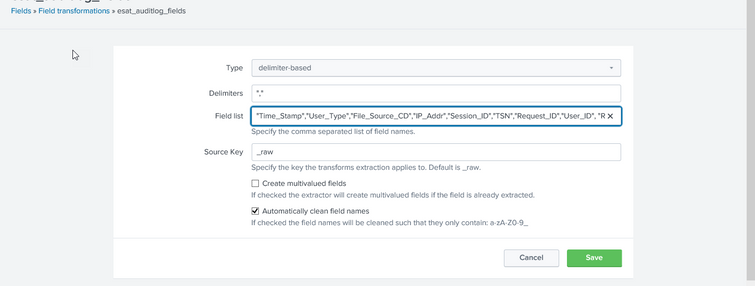

Like Setting->Fields->Field transformations (see screenshot below)

Under Field transformations , Select the Type Delimiter-based. My issue, with choosing the Delimiter value. I used "," in delimiter field, but not extracted/group fields values. Some of the field values are overlap with each other. Thank you again, appreciated
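
A rough way to see why delimiters are awkward for this event is to split it by hand. The sketch below uses plain Python string splitting, not Splunk's delimiter engine, so it only approximates what a delimiter-based transformation does; `EVENT`, `tokens`, and `parts_per_token` are illustrative names.

```python
# Sample event copied from the question (the unbalanced quote after the
# tsn value is present in the original event).
EVENT = (
    r'"time_stamp":"2021-08-21 19:14:32 EST","user_type":"TESTUSER",'
    r'"file_source_cd":"1","ip_addr":"103.91.224.65",'
    r'"session_id":"ABSkbE7IWb3ZU52VZk=","tsn":"490937st,'
    r'"request_id":"3ee0a-0c1712196e7-317f2700-d751c8e",'
    r'"user_id":"EASA68A7-780DEA22","return_cd":"10","app_name":"ALAO",'
    r'"event_type":"TEST_AUTH","event_id":"VIEW_LIST_RESPONSE",'
    r'"vardata":"[]","uri":https://wap-prod- /api/web-apps /authorizations,'
    r'"error_msg":""'
)

# Splitting on "," alone leaves the key glued to every value.
tokens = EVENT.split(",")
print(tokens[0])  # the whole "time_stamp":"..." pair, not just the value

# Adding ":" as a key/value separator breaks values that contain colons.
parts_per_token = [len(token.split(":")) for token in tokens]
for token, count in zip(tokens, parts_per_token):
    if count != 2:
        print(f"{count} pieces instead of 2: {token.split(':')}")
```

With a comma alone, each piece still contains both the key and the value. With a colon added, the timestamp and the URI split into extra pieces, which looks like the overlapping values described above.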

I’m suggesting you not use delimiter-based field extraction. You have much more flexibility and control with regex-based extraction, and you won’t get the errors you mention.

That makes sense. Thank you so much, appreciated!