- Find Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- Table Displaying Lookup and Search Results; Wrappe...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Table Displaying Lookup and Search Results; Wrapped Columns

Hello,

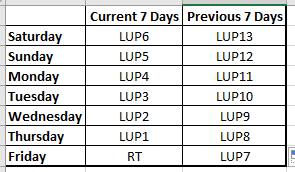

I would like to create a table for the past 14 days of events. 13 of the table cells will contain output from a lookup table (last 13 days). The 14th cell will be "live" data from a realtime search. The table needs to be 8 rows by 3 columns (see below). The cell below listed as Friday will be the current day of the week.

*Using LUP# to represent the value is from the lookup table; and RT to represent the value is from the realtime search.

Stay safe and healthy you and yours.

God bless,

Genesius

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Trying to get a specific look can be troublesome. Different tools simply work differently, so what something *looks* like in one tool can be difficult or impossible in another tool.

But if you are just after a "style" that' s like that, I think we can do this. I have built a run-anywhere search, which I'll explain. There is some minor formatting to do probably, but I think it gives you the idea and gets you 95% of the way there.

| makeresults

| eval dates="2020-06-01:01 2020-06-02:02 2020-06-03:03 2020-06-04:04 2020-06-05:05 2020-06-06:06 2020-06-07:07 2020-06-08:08 2020-06-09:9 2020-06-10:10 2020-06-11:11 2020-06-12:12 2020-06-13:13"

| makemv delim=" " dates

| mvexpand dates

| makemv delim=":" dates

| eval date=mvindex(dates,0), count=mvindex(dates,1)

| append

[| makeresults

| eval date="2020-06-14", count=14]

| eval _time = strptime(date, "%Y-%m-%d")

| eval weekday = strftime(_time, "%w - %A")

| eval week_of_year = strftime(_time, "%V")

| chart avg(count) over weekday by week_of_yearOK, so line by line.

The first makeresults just generates an event.

The next eval creates a pile of data in the one event. (If you remove all other SPL and just run the first two commands, you'll see what I mean. You can add commands one by one to see the difference, though in a couple of cases the changes are "invisible")

The next makemv, mvexpand, makemv and that next eval take that one created event and expand them into separate events with values. In this case one per day.

Up to this point, this was the run anywhere part just getting some data mocked up. You'll replace that WHOLE pile with your "old data search" and don't have to do these sorts of shenanigans.

Now we have an append, and inside there is a super-simple single-event version of the makeresults above, right? This "appends in" your real time data in your search. There's care to be taken to not make this take too long or return too much data or anything, but that's probably a secondary question if you run into problems.

And I lied - the next eval for _time actually "finishes" the run anywhere, but telling Splunk to use the date fields I provided as the actual dates of the events instead of ... the current time. You shouldn't need this line either.

Now we get to the meaty parts.

Eval weekday builds a field called "weekday" for what day of the week it is. Eval week_of_year does the same for what week number it is.

With those two fields, we can chart the average count (in my case, it's the same as count itself because the average of a single value is that single value) by weekday and week_of_year.

weekday 23 24

0 - Sunday 7 14

1 - Monday 1 8

2 - Tuesday 2 9

3 - Wednesday 3 10

4 - Thursday 4 11

5 - Friday 5 12

6 - Saturday 6 13Alignment's a little odd there, but you get the idea. For week 23 (which I supposed you could rename that, or maybe fiddle with the date specifier when you create it to get something different in there), sunday was 7, monday was 1, etc...

All the pieces to the above are documented in Splunk's docs, here's a link to the docs for "chart" but know that the other commands are all there too. Except the time variables, they're here.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for your reply.

There is a lot to unpack here.

Here is the code I am currently using that does the same thing, but it runs the search against the previous 13 days, which is very slow. By the time today, the live table cell is updated, the search has to begin again. If I could populate the first 13 cells with static data from a lookup table, this would vastly improve the speed of this panel.

index IN (linuxevents)

AND earliest=-14d@w latest=now

AND host IN (law)

AND source IN (/data/jboss/standalone/log/server.log)

AND sourcetype IN (jboss:server)

AND message="DOPAY served"

| timechart count(message) AS certs span=1d

| timewrap w

| eval dow=strftime(_time,"%A")

| rename dow AS "Day of Week", certs_latest_week AS "This Week", certs_1week_before AS "Last Week"

| table "Day of Week", "This Week", "Last Week"

On the bottom row, the middle cell is always today.

I will work on the solution you provided and update you.

Enjoy your weekend.

Thanks and God bless,

Genesius

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Shutting it down for the evening. This is what I have so far.

| inputlookup lup_certsTotalByEpochDay.csv

| eval epochToday = strptime(strftime(now(),"%m/%d/%y"),"%m/%d/%y")

| rex field=epochToday "(?<epochToday>\d{10})"

| eval epochStart=epochToday-86400, epochEnd=epochToday-(13*86400)

| where (epochDay<=epochStart AND epochDay>=epochEnd)

| eval weekday = strftime(epochDay, "%w - %A")

| eval week_of_year = strftime(epochDay, "%V")

| chart values(Total) OVER weekday BY week_of_year

Here are the results. Still alot of tweaking to do.

- End up with 3 weeks instead of 2.

- Rename 25 and 24 to This Week and Last Week.

- Rotate the weekday so today is always at the bottom.

- Add the live search (today) to that bottom cell.

weekday 23 24 25

| 0 - Sunday | 92923 | 146151 | |

| 1 - Monday | 122810 | 127312 | |

| 2 - Tuesday | 125986 | 116905 | |

| 3 - Wednesday | 128081 | 113381 | |

| 4 - Thursday | 130493 | 103829 | |

| 5 - Friday | 125972 | ||

| 6 - Saturday | 73311 | 119197 |

Thanks and God bless,

Genesius