Splunk search to extract key value pair?

Hi, I have ingested NATS stream details in JSON format into Splunk, and they look like the example below.

I want to extract key-value pairs from them. Any help is appreciated. Thanks in advance!

I'm looking to extract the values of the following keys:

- messages
- bytes
- first_seq
- first_ts
- last_seq
- last_ts
- consumer_count

JSON format:

```json
{
  "config": {
    "name": "test-validation-stream",
    "subjects": [
      "test.\u003e"
    ],
    "retention": "limits",
    "max_consumers": -1,
    "max_msgs_per_subject": -1,
    "max_msgs": 10000,
    "max_bytes": 104857600,
    "max_age": 3600000000000,
    "max_msg_size": 10485760,
    "storage": "file",
    "discard": "old",
    "num_replicas": 3,
    "duplicate_window": 120000000000,
    "sealed": false,
    "deny_delete": false,
    "deny_purge": false,
    "allow_rollup_hdrs": false,
    "allow_direct": false,
    "mirror_direct": false
  },
  "created": "2023-02-14T19:26:42.663470573Z",
  "state": {
    "messages": 0,
    "bytes": 0,
    "first_seq": 39482101,
    "first_ts": "1970-01-01T00:00:00Z",
    "last_seq": 39482100,
    "last_ts": "2023-03-18T03:10:35.6728279Z",
    "consumer_count": 105
  },
  "cluster": {
    "name": "cluster",
    "leader": "server0.mastercard.int",
    "replicas": [
      {
        "name": "server1",
        "current": true,
        "active": 387623412
      },
      {
        "name": "server2",
        "current": true,
        "active": 387434624
      }
    ]
  }
}
```

---

Hi @drogo,

first, at ingestion time, you should use the `INDEXED_EXTRACTIONS = json` option in props.conf, on both the Forwarder and the Search Head.

For more information, see https://docs.splunk.com/Documentation/Splunk/9.0.4/Admin/Propsconf

Anyway, you could use the spath command to extract the fields: https://docs.splunk.com/Documentation/SplunkCloud/9.0.2209/SearchReference/Spath

Something like this:

```
index=your_index
| spath
| ...
```

In this way you'll have all the fields from the JSON, which you can then rename as you like.

Ciao.

Giuseppe
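To see roughly which field names the bare `spath` call produces for the stream JSON, here is a small offline Python sketch. It assumes spath's usual naming convention (nested keys joined with dots, array elements marked with `{}`), and `SAMPLE_EVENT` is a trimmed, hypothetical copy of the question's JSON. It only illustrates the naming; it is not Splunk's implementation.

```python
import json

# Hypothetical sample: a trimmed copy of the NATS stream JSON from the question.
# A raw string keeps the JSON escape \u003e (">") intact for json.loads.
SAMPLE_EVENT = r"""
{
  "config": {"name": "test-validation-stream", "subjects": ["test.\u003e"]},
  "state": {"messages": 0, "bytes": 0, "first_seq": 39482101,
            "first_ts": "1970-01-01T00:00:00Z", "last_seq": 39482100,
            "last_ts": "2023-03-18T03:10:35.6728279Z", "consumer_count": 105},
  "cluster": {"name": "cluster",
              "replicas": [{"name": "server1", "current": true},
                           {"name": "server2", "current": true}]}
}
"""


def flatten(doc):
    """Approximate spath-style auto-extraction.

    Nested object keys are joined with dots and array elements get a "{}"
    suffix. Every path maps to a list of values, since an array can produce
    several values for the same path (a multivalue field in Splunk).
    """
    fields = {}

    def walk(node, path):
        if isinstance(node, dict):
            for key, child in node.items():
                walk(child, f"{path}.{key}" if path else key)
        elif isinstance(node, list):
            for item in node:
                walk(item, path + "{}")
        else:
            fields.setdefault(path, []).append(node)

    walk(doc, "")
    return fields


fields = flatten(json.loads(SAMPLE_EVENT))
for name in sorted(fields):
    print(name, fields[name])
```

The keys from the question show up as `state.messages`, `state.first_seq` and so on, and `config.name` as `config.name`; the replica names collect under a single path, `cluster.replicas{}.name`.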

---

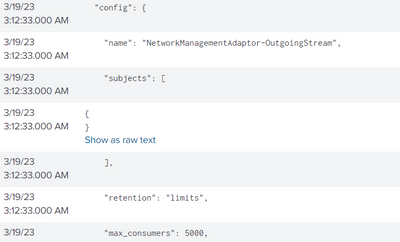

Thanks @gcusello, the main problem I am seeing that on splunk logs. The json file is not showing in one line. Each attribute is on individual line and Splunk is considering each line as separate log. See below example. I am looking to pull config.name field, similarly others.

---

So the first thing for you is to sort out the data onboarding so that the input stream is properly broken into whole events.

Post in the Getting Data In section if you need help with that.

---

Hi @drogo,

in your props.conf, for your sourcetype, you have to configure

`SHOULD_LINEMERGE = true`

In this way the rows are grouped into events, instead of each row becoming a separate event.

Then you should configure

`INDEXED_EXTRACTIONS = json`

In this way, you'll have all the fields.

Remember to put the props.conf on both the Forwarders and the Search Heads, and also on any intermediate Heavy Forwarders (if you have them).

Ciao.

Giuseppe

---

No!

Unless there is really no other way, you should _not_ use SHOULD_LINEMERGE=true. It adds unnecessary load to the parsing engine, because it runs a large set of heuristic rules to guess where to rejoin lines that have already been split.

It's better to have a properly set LINE_BREAKER. But that's a topic for another discussion.

Also, blindly turning on indexed extractions without understanding the pros and cons, instead of relying on search-time parsing, is a mistake.
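Why a properly set line breaker matters can be illustrated offline. The Python sketch below mimics how a LINE_BREAKER-style regex splits a raw stream: the text matched by the first capture group is discarded, the text before it ends one event and the text after it starts the next. The regex `\}([\r\n]+)\{` and the sample stream are assumptions that only fit input where whole pretty-printed objects follow one another. In props.conf such a regex would typically be paired with `SHOULD_LINEMERGE = false`, but test any setting on your own data before relying on it.

```python
import json
import re

# Hypothetical breaker regex: a "}" directly followed by newline(s) and then
# "{". Inside one pretty-printed object a closing "}" is followed by "," or by
# another closing bracket, so this should only fire between top-level objects.
BREAKER = re.compile(r"\}([\r\n]+)\{")


def break_events(stream):
    """Split a raw stream into events, LINE_BREAKER style.

    The text matched by the first capture group (the newlines) is dropped:
    everything before it ends the current event, everything after it starts
    the next one. Blank leftovers are discarded.
    """
    events, start = [], 0
    for match in BREAKER.finditer(stream):
        events.append(stream[start:match.start(1)])
        start = match.end(1)
    events.append(stream[start:])
    return [event.strip() for event in events if event.strip()]


# Hypothetical input: two pretty-printed objects written back to back.
RAW = (
    '{\n'
    '  "state": {"messages": 0, "last_seq": 39482100},\n'
    '  "cluster": {"replicas": [\n'
    '    {"name": "server1"},\n'
    '    {"name": "server2"}\n'
    '  ]}\n'
    '}\n'
    '{\n'
    '  "state": {"messages": 5}\n'
    '}\n'
)

events = break_events(RAW)
print(len(events), "events")  # one per object rather than one per line
for event in events:
    print(json.loads(event)["state"])
```

Because each resulting event is a complete JSON object, search-time `spath` can then parse it without any line merging.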

---

For JSON fields, use spath.
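Once events are whole JSON objects, picking out just the keys from the question is a path lookup per key; in SPL this corresponds to something like `| spath path=state.messages output=messages` for each key. The Python sketch below does the same lookup offline. The `WANTED` mapping and the `stream_name` output name are hypothetical choices, and `SAMPLE` is a trimmed copy of the question's JSON.

```python
import json

# Hypothetical mapping from JSON path to output field name: config.name plus
# the seven state keys from the question.
WANTED = {
    "config.name": "stream_name",
    "state.messages": "messages",
    "state.bytes": "bytes",
    "state.first_seq": "first_seq",
    "state.first_ts": "first_ts",
    "state.last_seq": "last_seq",
    "state.last_ts": "last_ts",
    "state.consumer_count": "consumer_count",
}


def get_path(doc, dotted):
    """Follow a dotted path through nested objects; None if a step is missing."""
    node = doc
    for key in dotted.split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


def extract(raw_event):
    """Parse one whole JSON event and return only the wanted, renamed fields."""
    doc = json.loads(raw_event)
    return {out: get_path(doc, path) for path, out in WANTED.items()}


# Trimmed copy of the question's JSON (hypothetical sample event).
SAMPLE = """{
  "config": {"name": "test-validation-stream"},
  "state": {"messages": 0, "bytes": 0, "first_seq": 39482101,
            "first_ts": "1970-01-01T00:00:00Z", "last_seq": 39482100,
            "last_ts": "2023-03-18T03:10:35.6728279Z", "consumer_count": 105}
}"""

row = extract(SAMPLE)
for name, value in row.items():
    print(f"{name}={value}")
```

Returning `None` for a missing path keeps one malformed or partial event from breaking the whole extraction, which loosely matches how spath simply leaves a field empty when the path is absent.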