- Splunk Cloud: Increase limit in field extraction f...

I am using Splunk Cloud and have defined a sourcetype (from the UI) in the Structured category, with Indexed Extractions set to json.

For most JSON logs sent to my Splunk Cloud instance with this sourcetype, all fields are extracted correctly. The exceptions are some larger JSON events, for which only a few of the fields are extracted.
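One way to see which fields a large event should produce, so you can compare them with what Splunk actually extracted, is to list every leaf path in the raw JSON. Below is a minimal Python sketch, assuming Splunk's usual JSON field naming (nested keys joined with `.`, array levels marked with `{}`, e.g. `items{}.sku`); `leaf_paths` and `expected_fields` are hypothetical helpers, not part of any Splunk API.

```python
import json
from typing import Any, Iterator


def leaf_paths(node: Any, prefix: str = "") -> Iterator[str]:
    """Yield a dotted path for every leaf value, marking array levels with {}.

    The naming mimics (as an assumption) how Splunk names JSON fields.
    """
    if isinstance(node, dict):
        for key, value in node.items():
            child = f"{prefix}.{key}" if prefix else key
            yield from leaf_paths(value, child)
    elif isinstance(node, list):
        for item in node:
            yield from leaf_paths(item, f"{prefix}{{}}")  # append "{}"
    else:
        yield prefix


def expected_fields(raw_event: str) -> list[str]:
    """Return the sorted, de-duplicated leaf paths of one raw JSON event."""
    return sorted(set(leaf_paths(json.loads(raw_event))))


if __name__ == "__main__":
    sample = '{"user": {"id": 7, "tags": ["a", "b"]}, "items": [{"sku": "x"}]}'
    # prints items{}.sku, user.id and user.tags{}, one per line
    for name in expected_fields(sample):
        print(name)
```

Any path in this list that is missing from the event's field list in Splunk is a field that was not extracted.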

Based on other questions I have read, it seems there are limits either in spath itself (extraction_cutoff) or in the auto-kv extraction (maxchars).
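To check whether a given event is even large enough to hit those limits, a minimal Python sketch can compare its size with them. The values 5000 (`[spath] extraction_cutoff`) and 10240 (`[kv] maxchars`) are assumed defaults taken from the limits.conf spec and may differ in your environment. The sketch measures UTF-8 bytes; whether each limit counts bytes or characters is also an assumption to verify. `limits_exceeded` is a hypothetical helper.

```python
import json
from typing import Mapping

# Assumed default values; check the limits.conf spec for your Splunk version.
ASSUMED_LIMITS: Mapping[str, int] = {
    "[spath] extraction_cutoff": 5000,
    "[kv] maxchars": 10240,
}


def limits_exceeded(raw_event: str, limits: Mapping[str, int] = ASSUMED_LIMITS) -> list[str]:
    """Return the names of the limits that this raw event is larger than."""
    json.loads(raw_event)  # raise early if the event is not valid JSON
    size = len(raw_event.encode("utf-8"))  # event size in UTF-8 bytes
    return [name for name, cap in limits.items() if size > cap]


if __name__ == "__main__":
    event = json.dumps({"blob": "x" * 6000})  # roughly 6 KB of JSON
    print(limits_exceeded(event))  # ['[spath] extraction_cutoff']
```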

All of these solutions require modifying limits.conf, which brings me to my questions:

- How do you configure these limits in Splunk Cloud?

- Is there any other way to properly extract all fields from a large JSON event in Splunk Cloud?

Hi

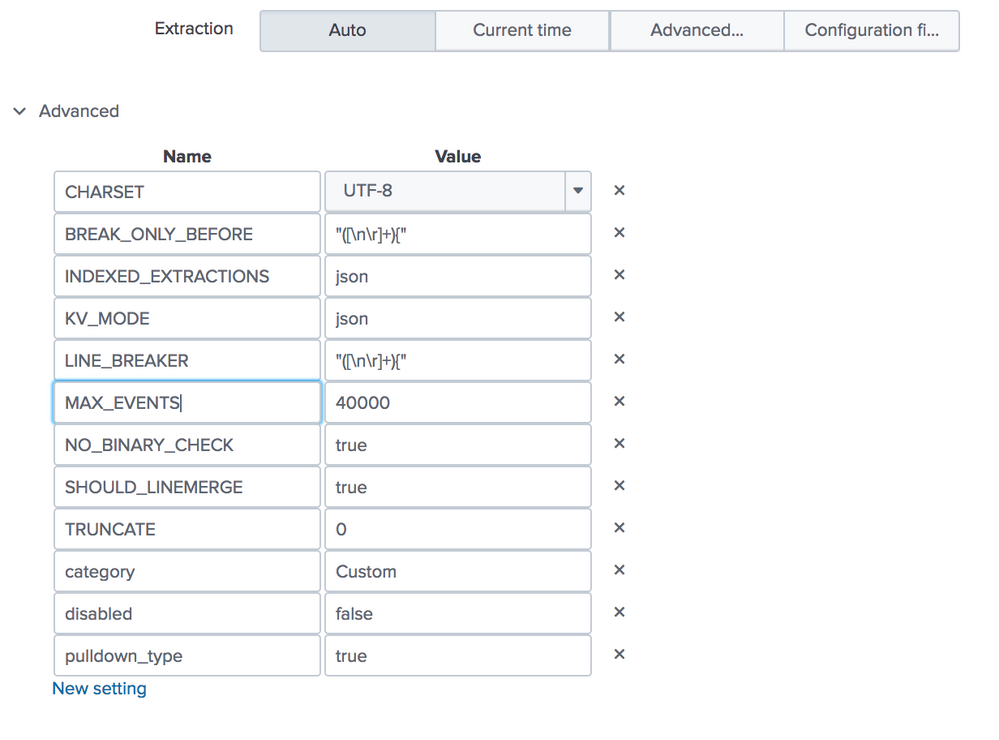

Instead of changing limits.conf, you can change this directly in the sourcetype configuration. Find your sourcetype, click Edit, and then add the following parameter under Advanced:

Name = MAX_EVENTS

Value = 10000

You can adjust the value as needed. The attached picture shows a configuration very similar to this one.
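`MAX_EVENTS` is the props.conf limit on how many input lines can be merged into a single event (the props.conf spec lists a default of 256 and places it among the line-merging settings). If your JSON arrives pretty-printed, a minimal Python sketch can check whether a sample event spans more lines than that. The 256 default is an assumption to verify for your version, and `line_count` and `needs_higher_max_events` are hypothetical helpers.

```python
import json

ASSUMED_DEFAULT_MAX_EVENTS = 256  # assumed props.conf default; verify for your version


def line_count(raw_event: str) -> int:
    """Count the lines in one raw event as it arrives from the source."""
    return len(raw_event.splitlines())


def needs_higher_max_events(raw_event: str, max_events: int = ASSUMED_DEFAULT_MAX_EVENTS) -> bool:
    """Return True if the event has more lines than max_events allows."""
    return line_count(raw_event) > max_events


if __name__ == "__main__":
    doc = {f"key{i}": i for i in range(300)}
    pretty = json.dumps(doc, indent=2)  # one key per line, plus the two braces
    print(line_count(pretty), needs_higher_max_events(pretty))  # 302 True
    print(needs_higher_max_events(pretty, max_events=10000))  # False
```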

I applied MAX_EVENTS = 40000 to the _json sourcetype. The large fields became searchable, but their field names were not extracted. I also tried adding maxchars, but that did not help either.

Is there any way to make Splunk Cloud extract large fields (above 20K)?
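To find out which values in an event actually cross that size, a minimal Python sketch can walk the parsed JSON and report every string value above a threshold. It assumes "20K" means 20,000 characters and names each value with dotted paths plus `{}` for array levels; `large_values` is a hypothetical helper.

```python
import json
from typing import Any, Iterator


def large_values(node: Any, threshold: int, prefix: str = "") -> Iterator[tuple[str, int]]:
    """Yield (path, length) for each string value longer than threshold characters."""
    if isinstance(node, dict):
        for key, value in node.items():
            yield from large_values(value, threshold, f"{prefix}.{key}" if prefix else key)
    elif isinstance(node, list):
        for item in node:
            yield from large_values(item, threshold, f"{prefix}{{}}")  # append "{}"
    elif isinstance(node, str) and len(node) > threshold:
        yield prefix, len(node)


if __name__ == "__main__":
    event = json.dumps({"id": 1, "payload": {"body": "x" * 25000, "note": "short"}})
    for path, length in large_values(json.loads(event), threshold=20000):
        print(f"{path}: {length} characters")  # payload.body: 25000 characters
```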

Thanks a lot, that solved it! 😄