Regex: regular expression is too large

Hi Team/Community,

I'm having an issue with a lookup file. I have a CSV with two columns: the first is named ioc and the second is named note. This CSV is an intel file created to search for any visits by users to malicious URLs. The CSV has 66,317 lines in total, and its encoding is ASCII. We keep having an issue with this search: when it runs, Splunk sometimes gives the error message "Regex: regular expression is too large". The error isn't consistent, and this particular alert doesn't seem to trigger much for many of our customers. We also have similar, separate alerts built to look for domains and IPs, and those work much better than this URL CSV. I would like help figuring out what the issue is with the regex being too large. Is the number of lines causing a major problem with how this file runs? Any help would be greatly appreciated, as I think this search is not running efficiently.

I appreciate all the help and apologize for my late response. I am still low man on the totem pole and have been trying to research this more based on the recommendations. The file gets updated automatically and periodically with all the new intel we ingest, this one specifically regarding malicious URLs. My higher-up recently suggested trying a fix recommended in a 13-year-old Splunk Community post to solve this issue. (https://community.splunk.com/t5/Splunk-Search/Lookup-table-Limits/m-p/75336)

I am not familiar with this recommendation, so I need to look into it. If anyone believes this is not a good recommendation, coming from a 13-year-old post, please let me know.

Thank you very much.

max_memtable_bytes is still relevant to performance when using large lookup files, but it has nothing to do with regular expressions.

Hi @jhooper33,

Internally, your regular expression compiles to a length that exceeds the offset limits set in PCRE2 at build time.

For example, the regular expression (?<bar>.){3119} will compile:

| makeresults
| eval foo="anything"
| rex field=foo "(?<bar>.){3119}"

but this regular expression (?<bar>.){3120} will not:

| rex field=foo "(?<bar>.){3120}"

Error in 'rex' command: Encountered the following error while compiling the regex '(?<bar>.){3120}': Regex: regular expression is too large.

Repeating the match 3,120 times exceeds PCRE2's compile-time limit. If we add a second character to the pattern, we'll exceed the limit in fewer repetitions:

Good:

| rex field=foo "(?<bar>..){2339}"

Bad:

| rex field=foo "(?<bar>..){2340}"

Error in 'rex' command: Encountered the following error while compiling the regex '(?<bar>..){2340}': Regex: regular expression is too large.

The error message should contain a pattern offset to help with identification of the error; however, Splunk does not expose that, and enabling DEBUG output on SearchOperator:rex adds no other information.

In short, the code generated by the regular expression compiler is too long, and you'll need to modify your regular expression.

With respect to CSV lookups versus KV store lookups, test, test, and test again. A CSV file deserialized to an optimized data structure in memory should have a search complexity similar to a MongoDB data store, but because the entire structure is in memory, the CSV file may outperform a similarly configured KV store lookup. If you want to replicate your lookup to your indexing tier as part of your search bundle, you also need to consider that a KV store lookup will be serialized to a CSV file and used as a CSV file on the indexer.

Finally, if you're using Splunk Enterprise Security, consider integrating your threat intelligence with the Splunk Enterprise Security threat intelligence framework. The latter may not meet 100% of your requirements, so as before, test, test, and test again.

Hi @jhooper33,

The error message seems to be related to a regex contained in the search, not to the lookup. Could you share the search?

Anyway, more than 50,000 lines is a max limit for a lookup; use a summary index instead of a lookup.

Ciao.

Giuseppe

Hi @gcusello,

Thank you for the response. I did not create this CSV; I'm only working with it after past coworkers. So I have been spending a bit of time trying to fix this issue. I've tried to find any regex issues in this file, but it's a little difficult with how many lines there are. Are you asking if I can share the lookup file?

And as far as using a summary index, this is something I haven't tried yet.

Hi @jhooper33,

No, I asked you to share the search that caused the "regular expression is too large" message, not the lookup, to understand what the issue with the regex could be.

I suggest exploring the use of summary indexes or a Data Model instead of a lookup if you have too many rows.

Ciao.

Giuseppe

Apologies for the late response; I got the OK to send the search today. The url_intel.csv file is the one with 66,317 lines. I just ran this alert and it didn't give the regex error, so the error is intermittent.

index=pan_logs
[ inputlookup url_intel.csv
| fields ioc
| rename ioc AS dest_url]
| search NOT
[| inputlookup whitelist.csv
| search category=website
| fields ignoreitem
| rename ignoreitem as query
]
| search NOT ("drop" OR "denied" OR "deny" OR "reset" OR "block")
| eval Sensor_Name="Customer", Signature="URL Intel Hits", user=if(isnull(user),"-",user), src_ip=if(isnull(src_ip),"-",src_ip), dest_ip=if(isnull(dest_ip),"-",dest_ip), event_criticality="Medium"
| rename _raw AS Raw_Event
| table _time,event_criticality,Sensor_Name,Signature,user,src_ip,dest_ip,Raw_Event
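Not necessarily the source of the regex error, but relevant to the efficiency concern: a subsearch in the base search hands its results back to the outer search as one long search string, by default in the `format` layout `( ( dest_url="..." ) OR ( dest_url="..." ) )`. The Python sketch below approximates that expansion so you can estimate how large the generated string gets for a lookup this size. It is a rough model, not Splunk's code: the escaping rules are an assumption, and the sample rows and the 40-character URL length are made up for illustration.

```python
"""Approximate how a lookup-driven subsearch expands into a search string.

Assumption: this mimics the default `format` layout
( ( field="v1" ) OR ( field="v2" ) ); it is not Splunk's implementation.
"""
import csv
import io


def quote(value: str) -> str:
    # Assumed escaping: backslashes and double quotes are backslash-escaped.
    return '"' + value.replace("\\", "\\\\").replace('"', '\\"') + '"'


def expand_subsearch(values, field: str) -> str:
    """Build the OR-of-terms string a subsearch would hand to the outer search."""
    terms = [f"( {field}={quote(v)} )" for v in values]
    return "( " + " OR ".join(terms) + " )"


def read_column(csv_text: str, column: str):
    """Yield one column from CSV text (stands in for `inputlookup | fields`)."""
    for row in csv.DictReader(io.StringIO(csv_text)):
        yield row[column]


if __name__ == "__main__":
    # Hypothetical sample rows in the same shape as url_intel.csv (ioc,note).
    sample = "ioc,note\nbad.example/a,phish\nbad.example/b,c2\n"
    print(expand_subsearch(read_column(sample, "ioc"), "dest_url"))

    # Scale estimate: 66,317 synthetic URLs of 40 characters each.
    synthetic = ("x" * 40 for _ in range(66_317))
    print(len(expand_subsearch(synthetic, "dest_url")), "characters")
```

Under those made-up assumptions, the 66,317-row case comes to about 3.9 million characters of search terms, which is worth keeping in mind when weighing the summary index and KV store suggestions in this thread.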

Hi @jhooper33,

There's no regular expression in the search itself, but you should be able to find the cause in search logs.

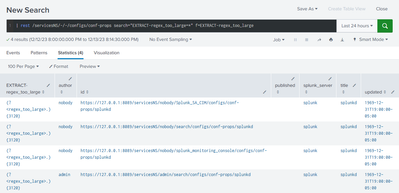

For example, I've turned my "bad" regular expression into a field extraction, and the following error is logged:

12-13-2023 20:06:05.854 ERROR SearchOperator:kv [35240 searchOrchestrator] - Cannot compile RE \"(?<regex_too_large>.){3120}\" for transform 'EXTRACT-regex_too_large': Regex: regular expression is too large.

I can then trace the configuration, which we can see is an inline EXTRACT in props.conf:

| rest /servicesNS/-/-/configs/conf-props search="EXTRACT-regex_too_large=*" f=EXTRACT-regex_too_large

In my example, EXTRACT-regex_too_large has the value (?<regex_too_large>.){3120}, which we know to be problematic from my last post.

After you've identified your regular expression, post it here if you can.
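If the failing search produces a lot of log output, a small script can pull every compile failure out of it rather than reading it by eye. The Python sketch below scans a saved copy of a job's search.log (for example, the one linked from the Job Inspector) for lines shaped like the `Cannot compile RE ... for transform '...'` error above and lists each transform/regex pair once. It assumes the wording and quoting of that single example; other Splunk versions may log it differently, and the script name in the usage comment is hypothetical.

```python
"""Pull regex compile failures out of a search.log (sketch).

Assumption: error lines look like the single example in this thread,
  ... Cannot compile RE \"<regex>\" for transform '<name>': <reason>
Other Splunk versions may word or escape this differently.
"""
import re
import sys

# The double quotes around the regex may or may not be backslash-escaped.
COMPILE_ERROR = re.compile(
    r'Cannot compile RE \\?"(?P<regex>.*?)\\?" '
    r"for transform '(?P<transform>[^']+)'"
)


def find_compile_errors(lines):
    """Return unique (transform, regex) pairs, in first-seen order."""
    found = (COMPILE_ERROR.search(line) for line in lines)
    pairs = ((m["transform"], m["regex"]) for m in found if m)
    return list(dict.fromkeys(pairs))


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical script name): python find_bad_regex.py search.log
    with open(sys.argv[1], encoding="utf-8", errors="replace") as log:
        for transform, regex in find_compile_errors(log):
            print(f"{transform}\t{regex}")
```

The transform name it prints is what you would then look up in the configuration.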

The error contains the regular expression, obviously, but the search may help you narrow down the source file location. Running btool on your Splunk instance(s) is more helpful:

$ $SPLUNK_HOME/bin/splunk cmd btool props list --debug | grep -- EXTRACT-regex_too_large

/opt/splunk/etc/apps/search/local/props.conf EXTRACT-regex_too_large = (?<regex_too_large>.){3120}
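With many apps installed, the btool output can be long. The Python sketch below reads `btool ... list --debug` output and reports which file defines a given setting, such as the transform name taken from the error. It assumes each setting line has the form `<path> <key> = <value>` with a path that contains no spaces (typical on Linux, not on a default Windows install), skips stanza lines, and uses a hypothetical script name in the usage comment.

```python
"""Map settings in `btool ... list --debug` output back to the defining file.

Assumption: each setting line is "<path> <key> = <value>" with a path that
contains no spaces; stanza lines such as "<path> [name]" are skipped.
"""
import re
import sys

SETTING = re.compile(r"^(?P<path>\S+)\s+(?P<key>[^\[\s][^=]*?)\s*=\s*(?P<value>.*)$")


def parse_btool_debug(lines):
    """Yield (path, key, value) for every setting line."""
    for line in lines:
        match = SETTING.match(line.rstrip("\n"))
        if match:
            yield match["path"], match["key"], match["value"]


def settings_named(lines, key):
    """Return (path, value) for every definition of one exact key."""
    return [(p, v) for p, k, v in parse_btool_debug(lines) if k == key]


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical script name):
    #   splunk cmd btool props list --debug | python btool_where.py EXTRACT-foo
    for path, value in settings_named(sys.stdin, sys.argv[1]):
        print(f"{path}\t{value}")
```

Unlike a plain grep, it compares the key exactly, so EXTRACT-foo does not also match EXTRACT-foobar.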

Hi @jhooper33 ,

Don't use the search command; put all the search terms in the main search so you'll have a faster search:

index=pan_logs [ inputlookup url_intel.csv | fields ioc | rename ioc AS dest_url] NOT [| inputlookup whitelist.csv WHERE category=website | fields ignoreitem | rename ignoreitem as query ] NOT ("drop" OR "denied" OR "deny" OR "reset" OR "block")
| eval
Sensor_Name="Customer",
Signature="URL Intel Hits",
user=if(isnull(user),"-",user),
src_ip=if(isnull(src_ip),"-",src_ip),
dest_ip=if(isnull(dest_ip),"-",dest_ip),
event_criticality="Medium"
| rename _raw AS Raw_Event
| table _time event_criticality Sensor_Name Signature user src_ip dest_ip Raw_Event

Ciao.

Giuseppe

Hey @gcusello

I will first have to verify whether I'm allowed to share the search, since it may fall under IP. But I am going to look at summary indexes, and I'm also considering rewriting this whole file.

Hi @jhooper33 ,

Let us know if we can help you more, or please accept an answer for the benefit of other people in the Community.

Ciao and happy splunking

Giuseppe

P.S.: Karma Points are appreciated by all the contributors 😉

Anyway, with big lookups you should rather use the KV store instead of CSV files, since CSV searches are linear and thus not very efficient as the file grows.

@PickleRick!!!

Thank you for the suggestion. I haven't seen anyone use the KV store for lookups in any of our searches yet, so I will definitely look into this.