Query when count is zero in Minute interval


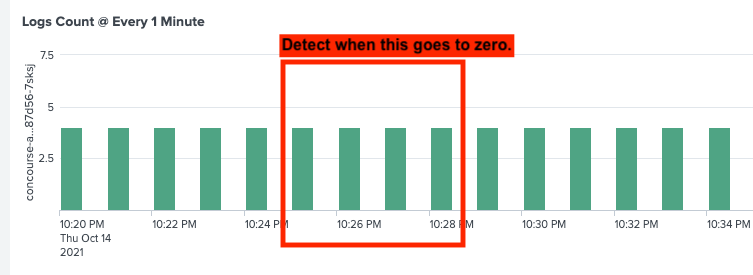

Hello, each pod receives between 3 and 5 messages every minute. We have a situation where some of the pods go zombie and stop writing messages. Here's the query:

index namespace pod="pod-xyz" message="incoming events" | timechart count by pod span=1m

I'd like help adapting this query to detect when a pod's count in a one-minute interval drops to zero.


Try this commented, run-anywhere example, assuming you have access to the _internal index. It is driven by the time picker and the span value in line 3.

The _internal index continuously collects metrics for many different components. I'm using component to represent your pod. The report lists the pods that have "missing" (zero) values in the last interval of the time range (set in the time picker), together with the number of consecutive intervals at the end of the time range that reported zero events. The length of the interval is set by the span value in line 3 (1 minute in my example below). Play around with different span values and time ranges. 'Last 60 minutes' is a good place to start on my instance.

index=_internal component="*"

| rename component as pod

| timechart span=1m count by pod partial=f limit=0

| untable _time pod count

| sort pod _time

| eval zeroInterval=if(count=0,1,0) ```zeroInterval is Boolean flag, true (1) when count is zero ```

| streamstats sum(zeroInterval) as durInBadState by pod,zeroInterval reset_on_change=true ```durInBadState is running tally of consecutive zero intervals```

| eventstats count as LastIntervalInRange by pod ```LastIntervalInRange is number of time intervals in time range. Determined by time picker```

| streamstats count as IntervalInRange by pod ```Give each interval a position in time range. So we can determine whether it is the last interval. See filter below```

| where IntervalInRange=LastIntervalInRange AND count=0 ```filter to last time interval per pod. And where count is zero```

| table pod count durInBadState
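For readers who want to check the logic outside Splunk, here is a minimal sketch in Python of what the search above computes: for each pod, the number of consecutive zero-count intervals at the end of the time range. The function name `trailing_zero_runs` and the sample rows are made up for illustration; this is not how Splunk implements `streamstats`.

```python
from collections import defaultdict

def trailing_zero_runs(rows):
    """rows: iterable of (minute, pod, count), one row per pod per interval.

    Returns {pod: n} for pods whose most recent interval has count 0,
    where n is the number of consecutive zero intervals ending there.
    """
    by_pod = defaultdict(list)
    for minute, pod, count in sorted(rows):  # time order within each pod
        by_pod[pod].append(count)

    result = {}
    for pod, counts in by_pod.items():
        run = 0
        for c in counts:
            # Running tally that resets whenever a non-zero interval appears,
            # similar in spirit to reset_on_change=true.
            run = run + 1 if c == 0 else 0
        if run > 0:  # only pods still at zero in the last interval
            result[pod] = run
    return result

# Hypothetical sample: pod-a goes quiet for the last 3 minutes, pod-b recovers.
rows = [
    (0, "pod-a", 3), (1, "pod-a", 0), (2, "pod-a", 0), (3, "pod-a", 0),
    (0, "pod-b", 4), (1, "pod-b", 0), (2, "pod-b", 5), (3, "pod-b", 3),
]
print(trailing_zero_runs(rows))  # {'pod-a': 3}
```

pod-b is not reported because its zero interval is not at the end of the range, which matches the filter on the last interval in the search.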


@tread_splunk @somesoni2

I realized that a sample data set would be helpful, so I am attaching one along with an explanation below:

We have 4 pods. Each pod receives 3-5 messages every minute. These messages are NOT evenly distributed; that is, they do not arrive at fixed offsets such as 0s, 15s, 30s, 45s.

We have noticed that one or two of these pods go into a zombie state, meaning that for, say, 3 minutes, these pods are not writing this event.

The overall objective is to find the query, create a dashboard panel, and generate simple alerts when we detect this zombie state.

Now the explanation of the dataset. Below is the top level query:

index namespace message="incoming events" pod=*

To spot the bad actors by eye, I am adding a time span so I can see the anomaly. The sample data I am providing comes from the query below (not the starter query):

index namespace message="incoming events" pod=* | timechart count by pod span=1m

Since the raw data set consists of JSON objects, I am not attaching it here. I can of course provide the true raw data set if it helps the investigation.

From this dataset, this is the bad Pod:

Bad Pod: pod-a

2021-10-14T21:01:30.000+0000 event count 3

2021-10-14T21:02:30.000+0000 event count 0

.............

...........

2021-10-14T21:05:00.000+0000 event count 0

2021-10-14T21:05:30.000+0000 event count 4

Duration of being in bad state: 3 mins

I am trying to get a query that I can use in a dashboard (and an alert), so that I or any of my teammates can simply run it and detect:

1. Bad Pod

2. Duration in bad state (time > 1m).

I appreciate your help, and thank you. As I said, I can always provide the true raw data (from the starter query) if it helps in this investigation.
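To make the two requested outputs concrete (bad pod, and duration in bad state longer than 1 minute), here is a small Python sketch that works on per-minute counts for a single pod. The function name `find_gaps` and the sample counts are hypothetical and are not taken from the attached data set; it only illustrates the intended result, not a Splunk query.

```python
from datetime import datetime, timedelta

def find_gaps(series, span=timedelta(minutes=1), min_duration=timedelta(minutes=1)):
    """series: list of (bucket_start, count) for one pod, sorted by time.

    Yields (gap_start, duration) for every run of zero-count buckets
    whose total duration is strictly longer than min_duration.
    """
    gap_start, length = None, 0
    for ts, count in series:
        if count == 0:
            if gap_start is None:
                gap_start = ts  # first silent bucket of a new gap
            length += 1
        else:
            if gap_start is not None and length * span > min_duration:
                yield gap_start, length * span
            gap_start, length = None, 0
    # A gap that is still open at the end of the time range.
    if gap_start is not None and length * span > min_duration:
        yield gap_start, length * span

# Hypothetical one-minute counts for a single pod.
t0 = datetime(2021, 10, 14, 21, 0)
counts = [3, 4, 0, 0, 0, 4, 0, 3]
series = [(t0 + i * timedelta(minutes=1), c) for i, c in enumerate(counts)]

gaps = list(find_gaps(series))
for start, duration in gaps:
    print(start.isoformat(), duration)  # 2021-10-14T21:02:00 0:03:00
```

The single silent minute at 21:06 is not reported because it does not exceed the 1-minute threshold.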



I've used my eventstats/streamstats/where technique previously but realised in this case it could be replaced with a stats latest command. So here is a simplification of the solution. The "list(count) as count" element is simply a way to see the series of values per pod from which you can verify that durInBadState is providing the correct result.

index=_internal component="*"

| rename component as pod

| timechart span=1m count by pod partial=f limit=0

| untable _time pod count

| sort pod _time

| eval zeroInterval=if(count=0,1,0) ```zeroInterval is Boolean flag, true (1) when count is zero ```

| streamstats sum(zeroInterval) as durInBadState by pod,zeroInterval reset_on_change=true ```durInBadState is running tally of consecutive zero intervals```

| stats latest(durInBadState) as durInBadState, list(count) as count by pod

| where durInBadState!=0

| eval count=mvjoin(count,":")


@tread_splunk thank you so much for looking into this. I will try out the query you just provided. After thinking it through, I realized that I should just provide the real data, cleaned up and masked. Here is the real data.

https://drive.google.com/file/d/1If2G2JNFm7NljWR7WuIU_VavIBxH9jqE/view?usp=drivesdk


I'm not quite clear on what you're looking for but I'm playing around with the following...

index=_internal component="*"

| timechart span=1m count by component

| untable _time component count

| sort component _time

| eval zeroMin=if(count=0,1,0)

| streamstats sum(zeroMin) by component,zeroMin reset_on_change=true

| xyseries _time component count sum(zeroMin) zeroMin format="$VAL$:$AGG$"

Do you want to calculate, for each pod, the length (in minutes) of the gap?


@tread_splunk - yes


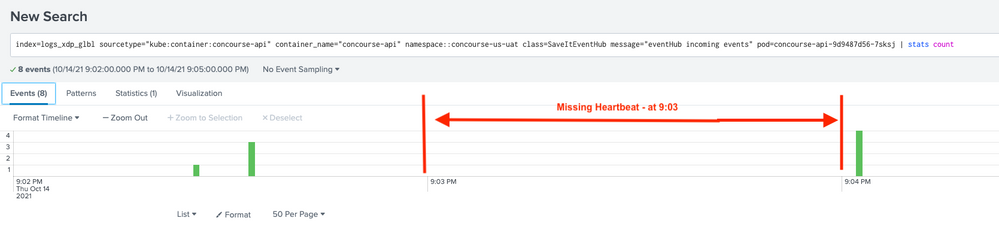

@tread_splunk

The expected behaviour is that every pod receives 2 to 4 heartbeat messages every minute.

The zombie behaviour is one or more pods not receiving or writing logs for several minutes.

Ultimate goal: figure out which pod(s) did not register heartbeats, and for how many minutes.

Then set up an alert based on that.


yes. I have a list of all pods.


If you're searching over a small time range (a few hours at most), you could do something like this. Using your pod list lookup, it creates an entry with count=0 for every pod and every minute (snapped to the same one-minute buckets as the events), appends those entries to the original search results, and then takes the max count per minute and pod. Any pod with no data in a given minute interval is listed.

index namespace pod="pod-xyz" message="incoming events" | bucket span=1m _time | stats count by _time pod | append [| inputlookup yourpodlist.csv | table pod | addinfo | eval time=mvrange(info_min_time,info_max_time+60,60) | table pod time | mvexpand time | rename time as _time | bucket span=1m _time | eval count=0] | stats max(count) as count by _time pod | where count=0
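The reason for appending zero rows from a pod list is that a pod with no events at all in the window never shows up in the search results, so there is nothing to compare against. A minimal Python sketch of that idea follows; the name `missing_intervals` and the sample values are hypothetical, and integer minutes stand in for the one-minute buckets.

```python
def missing_intervals(observed, all_pods, minutes):
    """observed: {(minute, pod): count} built from events that were actually seen.
    all_pods:  every pod that should be reporting (the lookup in the post above).
    minutes:   every interval in the search window.

    Returns the sorted (minute, pod) pairs that have no events.
    """
    return sorted(
        (m, p)
        for m in minutes
        for p in all_pods
        # A missing key is treated as an explicit zero, like the appended rows.
        if observed.get((m, p), 0) == 0
    )

# Hypothetical window of two minutes; pod-c never reports at all.
observed = {(0, "pod-a"): 3, (1, "pod-a"): 4, (0, "pod-b"): 5}
result = missing_intervals(observed, ["pod-a", "pod-b", "pod-c"], range(2))
print(result)  # [(0, 'pod-c'), (1, 'pod-b'), (1, 'pod-c')]
```

Without the known pod list, pod-c would be invisible rather than reported as silent.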


Try something like this:

index namespace pod="pod-xyz" message="incoming events"

| timechart count by pod span=1m

| untable _time pod count

| where count=0

@somesoni2 - sorry, it did not work.


This is what I am trying to detect: the time range or minute(s) when we are missing the heartbeat for any given pod.


You want to detect it for each pod? Do you have a list of pods somewhere, say in a lookup?


@somesoni2 - yes