- PROPS Configuration for csv without Header Source ...

Hello,

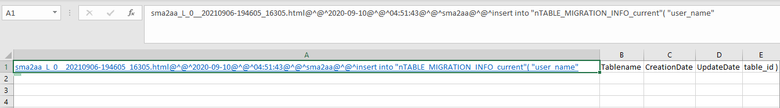

I am having trouble writing a props.conf configuration for the following CSV file, which has no header row (please see the screenshot below for sample data). The five columns shown in the screenshot are all values. The value of the first column is also included below for better visibility. Any help will be highly appreciated. Thank you so much.

Screenshot

Value of First Column

sma2aa_L_0__20210906-194605_16305.html@^@^2020-09-10@^@^04:51:43@^@^sma2aa@^@^insert into "nTABLE_MIGRATION_INFO_current"( "user_name"

[csv]

SHOULD_LINEMERGE=FALSE

TIME_PREFIX=?

TIME_FORMAT=?

TIMESTAMP_FIELDS=?

HEADER_FIELD_LINE_NUMBER=?

INDEXED_EXTRACTIONS=csv


OK, this should work in props.conf on your UF:

[mySourcetypeNameDontUseCSV]

SHOULD_LINEMERGE=false

NO_BINARY_CHECK=true

INDEXED_EXTRACTIONS=csv

FIELD_NAMES=SQLFIELD,Field1,Field2,Field3,Field4

TIME_PREFIX=@\^@\^

TIME_FORMAT=%Y-%m-%d@^@^%H:%M:%S

Change the sourcetype name such that it matches what you set in inputs.conf for the monitor stanza. Again, make it a descriptive name rather than generic "csv", depending on what type of log data this is (e.g. sql:querylog or somesuch). Always good to be explicit and descriptive.
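As a quick sanity check outside Splunk, the timestamp settings can be tried against the sample value from the question. This is only a rough Python sketch, not how Splunk itself parses timestamps: the function name `extract_timestamp` is made up for illustration, and it assumes Python's `strptime` treats these format codes the same way Splunk does. Note that the sample date is written with hyphens (2020-09-10), so the format used here is `%Y-%m-%d`.

```python
import re
from datetime import datetime

# Sample value of the first column, copied from the question.
SAMPLE = (
    'sma2aa_L_0__20210906-194605_16305.html@^@^2020-09-10@^@^04:51:43'
    '@^@^sma2aa@^@^insert into "nTABLE_MIGRATION_INFO_current"( "user_name"'
)

# TIME_PREFIX is a regex, so the carets must be escaped.
TIME_PREFIX = r"@\^@\^"
# TIME_FORMAT is a strptime pattern; "@^@^" is matched literally here.
TIME_FORMAT = "%Y-%m-%d@^@^%H:%M:%S"


def extract_timestamp(event):
    """Hypothetical helper: find the first TIME_PREFIX match and parse what follows."""
    match = re.search(TIME_PREFIX, event)
    if match is None:
        return None
    # Work out how many characters the format covers by rendering a dummy date.
    width = len(datetime(2000, 1, 1).strftime(TIME_FORMAT))
    candidate = event[match.end():match.end() + width]
    try:
        return datetime.strptime(candidate, TIME_FORMAT)
    except ValueError:
        return None


if __name__ == "__main__":
    print(extract_timestamp(SAMPLE))
```

The first `@^@^` in the sample comes right after `.html`, so the text that gets parsed is `2020-09-10@^@^04:51:43`.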


Couple of questions:

- Where is this csv file picked up from, i.e. is it read by a UF?

- What field names do you expect to use?

- Which part of the first column do you want to be used as the event timestamp (there are multiple TS values)?


Thank you so much, I appreciate your support.

Here are the answers to your questions:

- Where is this csv file picked up from, i.e. is it read by a UF? Yes by UF

- What field names do you expect to use? SQLFIELD, Field1, Field2, Field3, Field4

- Which part of the first column do you want to be used as the event timestamp (there are multiple TS values)? The part highlighted in bold below (after html@^@^)

sma2aa_L_0__20210906-194605_16305.html@^@^2020-09-10@^@^04:51:43@^@^sma2aa@^@^insert into "nTABLE_MIGRATION_INFO_current"( "user_name"

Thank you again.


Assuming it's the first date/time value in the event you want to use as _time, and we just name fields according to your column names, this should work:

[mySourcetypeNameDontUseCSV]

FIELD_NAMES = A,B,C,D,E

INDEXED_EXTRACTIONS = csv

LINE_BREAKER = ([\r\n]+)

NO_BINARY_CHECK = true

SHOULD_LINEMERGE = false

If this file is read by a UF, this props.conf entry must be placed on the UF itself, since you intend to use indexed extractions.

If you want a different part of the event for the timestamp, or if you just want to use index time, you'll need a couple more things.