Online [realtime] integration with WebApp

Hello,

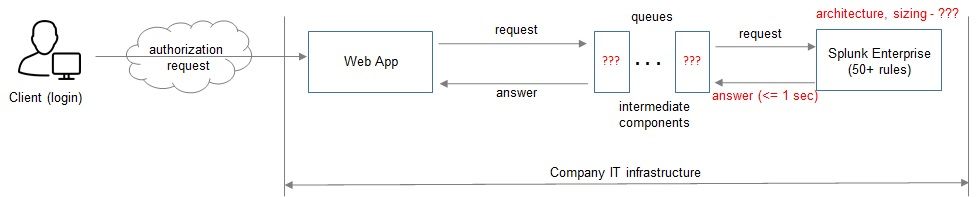

We have a web application (WebApp) within our company. We need to receive authorization requests (ARs) from the WebApp for online scoring.

We have a Splunk Enterprise installation. Authorization requests from the WebApp should go to Splunk, either directly or through intermediate components. For each incoming authorization request we need to apply 50+ rules (searches), get an answer (search result) in less than 1 second, and send that answer back to the WebApp. Assume the analysis needs to look back no more than 24 hours.

Based on the Splunk search result, the WebApp decides whether to allow the client access to their personal account, deny access, or send the client a request for additional verification.

Between the WebApp and Splunk we can use intermediate components (for example, stream-processing software such as Apache Kafka).

Questions:

Are there any example implementations of such a solution?

What would the Splunk architecture of such a solution look like, and what kinds of intermediate components are used?

What are the estimated hardware requirements for the Splunk installation?


Sticking to that example, I'd recommend letting existing authentication and authorization solutions tackle that. Most LDAP servers will block users after too many failed authentications, for example.

I'm guessing you just used a simple example to illustrate things, though. If the ruleset in general is far more complex, you could consider running the rules as often as you can (e.g. updating the number of failed authentications per user every ten minutes) and writing the resulting decisions out to a database. Then have the webapp query that database, e.g. "deny auth if table splunk_ruleset_blocked_users has an entry for that user within the last hour".
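A minimal sketch of the lookup side of that pattern, assuming Python with SQLite standing in for "a database". The table name `splunk_ruleset_blocked_users` comes from the post; the columns (`username`, `blocked_at`) and the helper names `record_block` and `should_deny` are hypothetical. How the scheduled search's results actually get written into the table is left out.

```python
import sqlite3
import time

# Hypothetical schema: only the table name comes from the thread.
SCHEMA = """
CREATE TABLE IF NOT EXISTS splunk_ruleset_blocked_users (
    username   TEXT    NOT NULL,
    blocked_at INTEGER NOT NULL  -- epoch seconds when the scheduled run flagged the user
)
"""


def record_block(conn, username, blocked_at):
    """Stand-in for whatever exports the scheduled ruleset results (assumed)."""
    conn.execute(
        "INSERT INTO splunk_ruleset_blocked_users (username, blocked_at) VALUES (?, ?)",
        (username, blocked_at),
    )
    conn.commit()


def should_deny(conn, username, now=None, window_s=3600):
    """WebApp-side check: deny if the user was flagged within the last window_s seconds."""
    now = int(time.time()) if now is None else now
    row = conn.execute(
        "SELECT 1 FROM splunk_ruleset_blocked_users "
        "WHERE username = ? AND blocked_at >= ? LIMIT 1",
        (username, now - window_s),
    ).fetchone()
    return row is not None


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    record_block(conn, "alice", blocked_at=1_000)
    print(should_deny(conn, "alice", now=2_000))   # flagged 1000 s ago -> True
    print(should_deny(conn, "alice", now=10_000))  # flagged 9000 s ago -> False
    print(should_deny(conn, "bob", now=2_000))     # never flagged -> False
```

The point of the design is that the per-login cost becomes a single indexed lookup, while the expensive rule evaluation runs on a schedule, decoupled from login latency.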

Even with a truckload of hardware, you're not going to get 50 Splunk searches launched and completed within the one-second response time you've asked for.


Can you elaborate a bit about the rules, the data that is used by them, whether that underlying data changes often relative to how often a user requests authorization, how many distinct users, etc.?

I'm asking to gauge whether you can reasonably use a caching layer that is periodically updated from Splunk, or whether you really need a fresh batch of 50+ searches for every login attempt. At 50 attempts per second, 50 searches per attempt, and one second per search, that would be 2500+ parallel searches all the time. That's a lot. If all you told a hardware vendor was "I'd like to buy hardware for Splunk, size it for 2500+ parallel searches please", they'd send you a couple of truckloads.
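The 2500 figure is Little's law applied to search load: the average number of searches in flight equals the rate at which searches start multiplied by how long each one runs. A tiny Python sketch of that arithmetic, using the numbers from the thread (50 requests/s, 50 searches per request, about 1 s per search); the function name is illustrative only.

```python
def concurrent_searches(requests_per_s, searches_per_request, seconds_per_search):
    """Little's law: items in flight = arrival rate x time each item spends in the system."""
    search_start_rate = requests_per_s * searches_per_request  # searches started per second
    return search_start_rate * seconds_per_search


print(concurrent_searches(50, 50, 1.0))  # 2500.0
print(concurrent_searches(50, 50, 0.5))  # 1250.0, still a very large search load
```

Halving per-search latency only halves the concurrency, which is why the reply leans toward reducing how many searches run per request rather than making each one faster.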

If, however, users re-authorize frequently within a short time span and the caching layer's TTL for a previously made decision is long enough, then using Splunk for the back-end decision making might become feasible.
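A minimal sketch of such a caching layer, assuming Python and an in-process cache keyed by user. `decide` is a hypothetical placeholder for the expensive back-end call (for example, running the ruleset in Splunk); only cache misses or expired entries reach it, so the back-end load scales with the miss rate rather than the login rate.

```python
import time
from typing import Callable, Dict, Tuple


class DecisionCache:
    """Caches a per-user decision for ttl_s seconds; only misses reach the backend."""

    def __init__(self, decide: Callable[[str], str], ttl_s: float,
                 clock: Callable[[], float] = time.monotonic):
        self._decide = decide          # hypothetical expensive decision function
        self._ttl_s = ttl_s
        self._clock = clock            # injectable so the sketch is easy to test
        self._entries: Dict[str, Tuple[float, str]] = {}  # user -> (expires_at, decision)
        self.backend_calls = 0

    def get(self, user: str) -> str:
        now = self._clock()
        hit = self._entries.get(user)
        if hit is not None and hit[0] > now:
            return hit[1]              # still fresh: no back-end work
        self.backend_calls += 1
        decision = self._decide(user)
        self._entries[user] = (now + self._ttl_s, decision)
        return decision


if __name__ == "__main__":
    fake_now = [0.0]
    cache = DecisionCache(lambda user: "allow", ttl_s=600, clock=lambda: fake_now[0])
    cache.get("alice")          # miss -> backend call
    fake_now[0] = 300
    cache.get("alice")          # hit within TTL
    fake_now[0] = 601
    cache.get("alice")          # expired -> backend call
    print(cache.backend_calls)  # 2
```

A production cache would also need to evict expired entries and be safe under concurrent access; both are omitted here to keep the TTL logic visible.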

Finally, though, this feels like a topic to discuss with Splunk PS (Professional Services) or your local Splunk service partner; getting all the details right and covering every aspect via answers here is quite a challenge.


Thank you for replying, Martin.

A bit more information about the data and the rules. Here is an example of one of the rules in the 50-rule set:

"How many unsuccessful authentication attempts has the user made within a one-hour span?"

As you can see, the data to be used is the application's authentication log (Apache-like). The challenging part is guaranteeing that 2500 parallel searches complete within one second (hopefully without needing truckloads of hardware 🙂).

Maybe there is a more sophisticated approach using a data model.
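One alternative to re-running a search per request is to evaluate a rule like this incrementally as events stream through an intermediate component. This is a different technique from a Splunk search or data model: a per-user sliding-window counter, sketched in Python. The event shape (user, timestamp, success flag) and all names are assumptions, and the sketch assumes events and queries arrive in non-decreasing time order.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class FailedAuthCounter:
    """Per-user count of failed authentications in a trailing window (default one hour)."""

    def __init__(self, window_s: float = 3600.0):
        self.window_s = window_s
        self._failures: Dict[str, Deque[float]] = defaultdict(deque)

    def _evict(self, q: Deque[float], now: float) -> None:
        # Drop failure timestamps that have fallen out of the trailing window.
        cutoff = now - self.window_s
        while q and q[0] <= cutoff:
            q.popleft()

    def observe(self, user: str, ts: float, success: bool) -> None:
        """Feed one authentication event; only failures matter for this rule."""
        if success:
            return
        q = self._failures[user]
        q.append(ts)
        self._evict(q, ts)

    def failures_last_window(self, user: str, now: float) -> int:
        """Answer the rule in O(1) amortized time instead of searching the log."""
        q = self._failures.get(user)
        if q is None:
            return 0
        self._evict(q, now)
        return len(q)


if __name__ == "__main__":
    c = FailedAuthCounter()
    for t in (0, 100, 200):
        c.observe("alice", t, success=False)
    c.observe("alice", 250, success=True)
    print(c.failures_last_window("alice", 300))   # 3
    print(c.failures_last_window("alice", 3650))  # 2 (the t=0 failure aged out)
    print(c.failures_last_window("bob", 300))     # 0
```

Whether this helps depends on all 50 rules being expressible as incremental aggregates like this one; that is an assumption, not something the thread establishes.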


Hi,

Can you tell me how much data you are talking about?


Thank you for replying. It is about 50 requests per second (~4 GB per day).
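A quick back-of-envelope check of how those two numbers relate, sketched in Python and assuming decimal gigabytes (1 GB = 10^9 bytes) and a steady 50 requests per second around the clock; the helper name is illustrative only.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def avg_event_bytes(events_per_s, bytes_per_day):
    """Average size of one event, given a steady event rate and a daily volume."""
    return bytes_per_day / (events_per_s * SECONDS_PER_DAY)


events_per_day = 50 * SECONDS_PER_DAY
print(events_per_day)                     # 4320000
print(round(avg_event_bytes(50, 4e9)))    # 926, i.e. roughly 1 KB per logged request
```

So the stated volume corresponds to roughly 4.3 million events per day at about 1 KB each, which is a modest ingest volume; by the thread's own reasoning, the hard part of sizing is the search concurrency, not the data volume.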