- Find Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- Join all objects with specific object within the s...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Join all objects with specific object within the same file?

Hi,

i have a lot of files, the size of each file can be 4M.

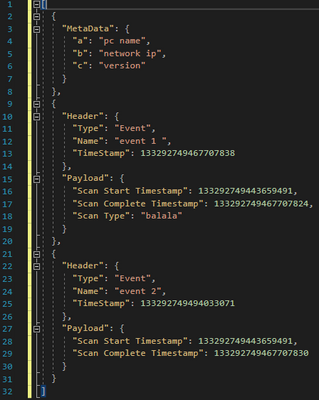

the structure of each JSON file: Events/objects. Each event contains Payload and Header.

Each file contains Metadata event/object. i need to add all metaData fields to each event within of the same file.

example for input:

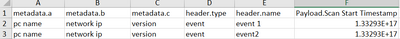

Output-Splunk table, row for each event + add the metadata columns to each row.

do it for all files.

i tried to write the following but it's not correct:

index=my_index source=my_source sourcetype=_json

| search NOT MetaData

| join type=left source [ | search index=my_index source=my_source sourcetype=_json | search NOT HEADER]

| table *

thanks,

Maayan

fdgd

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

First of all, please post sample data in text form, especially structured data types such as JSON. Screenshot is the least useful form for volunteers.

Second, this looks strongly like a case of "plead to your developers". By this, I mean that the content of the file is structured to defeat data processing. Let me break this down, IF real data structure is as illustrated above. (There is a simple way to test if your illustration reflects the structure of real data. Does Splunk give you fields like {}.Metadata.*, {}.Header.*, {}.Payload.*. If this is not the case, you will need to repost realistic data structure.)

The illustrated structure is an array whose first element is {}.Metadata, followed by an alternate parade of {}.Header's and {}.Payload's. This means that the data processor will have to discern which element represents which kind of data in one of awkward ways:

- Calculate the data type using the index of the element in the array, or

- Extract the root key of the JSON objects sequentially, all the while keeping track of array index of each element.

Either way, this requires data reassembly before processing can begin. By organizing data in this manner, your developer is depriving you of some of the most powerful aka useful features of JSON.

Below, I will demonstrate how a proper data structure helps processing by giving an example in each case.

A properly nested key-value structure (desired)

A normal developer would have structured data like thusly:

{"Metadata":

{

"a": "pc name",

"b": "network ip",

"c": "version"

},

"Events": [

{"Header":

{

"Type": "Event",

"Name": "event 1",

"Timestamp": 133292749467707838

},

"Payload":

{

"Scan Start Timestamp": 133292749443659491,

"Scan Complete Timestamp": 133292749467707824,

"Scan Type": "balala"

}

},

{"Header":

{

"Type": "Event",

"Name": "event 2",

"Timestamp": 133292749494033071

},

"Payload":

{

"Scan Start Timestamp": 133297249443659491,

"Scan Complete Timestamp": 133292749467707830

}

}

]

}

If your files are organized in this fashion, Splunk will give you a set of fields name Metadata.* and Events{}.*; under Events{}, Splunk will give you Events{}.Header.* and Events{}.Payload.*. When you have a clean structure like this, all you need is three lines

| spath path=Events{}

| mvexpand Events{}

| spath input=Events{}

Then, you get

| Header.Name | Header.Timestamp | Header.Type | Metadata.a | Metadata.b | Metadata.c | Payload.Scan Complete Timestamp | Payload.Scan Start Timestamp | Payload.Scan Type |

| event 1 | 133292749467707838 | Event | pc name | network ip | version | 133292749467707824 | 133292749443659491 | balala |

| event 2 | 133292749494033071 | Event | pc name | network ip | version | 133292749467707830 | 133297249443659491 |

Here is an emulation of the clean data structure that you can play with the above code.

| makeresults

| eval _raw = "{\"Metadata\":

{

\"a\": \"pc name\",

\"b\": \"network ip\",

\"c\": \"version\"

},

\"Events\": [

{\"Header\":

{

\"Type\": \"Event\",

\"Name\": \"event 1\",

\"Timestamp\": 133292749467707838

},

\"Payload\":

{

\"Scan Start Timestamp\": 133292749443659491,

\"Scan Complete Timestamp\": 133292749467707824,

\"Scan Type\": \"balala\"

}

},

{\"Header\":

{

\"Type\": \"Event\",

\"Name\": \"event 2\",

\"Timestamp\": 133292749494033071

},

\"Payload\":

{

\"Scan Start Timestamp\": 133297249443659491,

\"Scan Complete Timestamp\": 133292749467707830

}

}

]

}"

``` emulation of clean data structure ```

Linear JSON array (as originally illustrated)

Now, to use the linear array data structure in your original illustration, processing becomes a lot more convoluted.

| spath path={}

| eval nray = mvrange(0, round(mvcount('{}')/2 - 1))

| eval Event = mvmap(nray, json_array(json_extract(mvindex('{}', nray * 2 + 1)), json_extract(mvindex('{}', nray * 2 + 2))))

| mvexpand Event

| spath input=Event

| rename {}.* AS *

Here is an emulation that you can play with this second code, and compare with real data.

| makeresults

| eval _raw = "[

{ \"Metadata\":

{

\"a\": \"pc name\",

\"b\": \"network ip\",

\"c\": \"version\"

}

},

{ \"Header\":

{

\"Type\": \"Event\",

\"Name\": \"event 1\",

\"Timestamp\": 133292749467707838

}

},

{ \"Payload\":

{

\"Scan Start Timestamp\": 133292749443659491,

\"Scan Complete Timestamp\": 133292749467707824,

\"Scan Type\": \"balala\"

}

},

{ \"Header\":

{

\"Type\": \"Event\",

\"Name\": \"event 2\",

\"Timestamp\": 133292749494033071

}

},

{ \"Payload\":

{

\"Scan Start Timestamp\": 133297249443659491,

\"Scan Complete Timestamp\": 133292749467707830

}

}

]"

``` emulation of linear array ```

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Thanks for your reply!

1. A properly nested key-value structure:

This was our previous structure, and we prefer to use this struct, but the problem is that the file size can be up to 4MB(40K characters), so the JSON files are cut in the middle.

I received a suggestion to modify the definition of "Truncate" in props.conf (since the default value is restricted to 10,000) but it's not recommended to change it to 40K, therefore we would like to change the structure to linear.

I think that your query uses _raw field by default (I executed your query and 13 rows are extracted instead of 747, since the file is big):

| spath path=Events{}

| mvexpand Events{}

| spath input=Events{}

2. Linear JSON array (as originally illustrated):

I solve it by the following query, but we have time performance issue:

index=myIndex source=mySource sourcetype=_json

| search NOT MetaData

| eval file = source

| join type=left file [

| search index=myIndex source=mySource sourcetype=_json

| search NOT HEADER

| eval file = source

]

Thanks a lot,

Maayan

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Let me answer the last question first: Define a custom sourcetype is easy, you can do it in Splunk Web or use .conf files. Configure source types contains valuable information; you can also post in Getting Data In to get additional help. (Indeed using the predefined _json type is not desirable for sizable data set.) As you mentioned "a suggestion to modify the definition of "Truncate" in props.conf," and indicated that event size > 40MB (or is it 40KB?) was undesirable. This makes me think that you have already tried custom source type.

I can see that raising event size in limits.conf can risk busting RAM because it affects all sources. In addition, even with a single custom sourcetype in props.conf, you still don't want a single event in the size of megabytes. Depending on how much RAM the indexer has, even 40KB can be risky.

As to the second question, yes, using join in that manner is very inefficient. You effectively run the same search twice. mvexpand in my example can be less demanding. Have you tried?

Back to desirable data structure. Can you explain how ingestion truncation affects only the "normal" nested key-value JSON, but not the linear array? If you compare raw events ingested from the two different structures, do you see one kind is incomplete compared with your original but the other is incomplete? To me, this is impossible. If the indexer cuts off data at some length, it will cut off at the same length for both structures.

You are correct that I constructed search commands based on _raw fields already extracted into a Metadata field (single value) and an Events field (array of two JSON sub nodes). Can you verify that the _raw event contains 747 subnodes? One possible test could be

| rex max_match=0 "(?<subnode>\"Header\":)"

| eval count = mvcount(subnode)

I am uncertain if rex will get similar memory limits as mvexpand. So, it's safer to manually check.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

First of all, thanks a lot! I appreciate this!

0. General-

Files weight (not a single event) can be 4M (~40,000 characters).

1. linear solution- It doesn't work for me (I think because my data event includes both Payload and Header and in your structure event is header or payload). In addition, I need that metadata fields will be added to each row, and it needs to be generic (new fields can be added to metadata).

Here is an example for the linear structure:

[

{

"Metadata": {

"a": "pc name",

"b": "network ip",

"c": "version"

}

},

{

"Header": {

"Type": "Event",

"Name": "event 1",

"Timestamp": 133292749467707838

},

"Payload": {

"Scan Start Timestamp": 133292749443659491,

"Scan Complete Timestamp": 133292749467707824,

"Scan Type": "balala"

}

},

{

"Header": {

"Type": "Event",

"Name": "event 2",

"Timestamp": 133292749494033071

},

"Payload": {

"Scan Start Timestamp": 133297249443659491,

"Scan Complete Timestamp": 133292749467707830

}

}

]

2. Key value solution:

2.1 Source type- I haven't started to work on custom source type. The file size should not affect right?

2.2 Json cut in the middle- when i tried your solution the json extracted 13 rows. part of the fields are array or nested fields (Maybe this is the reason?). The file size that i checked is: 511KB.

Example for event from type of array/nested field:

{

"Header": {

"Type": "Event",

"Name": "Event name",

"TimeStamp": 133281773308850774

},

"Payload": {

"DR": "bla",

"DFrame": [],

"Duration": 415990,

"MA": "D1",

"ID": "21",

"RR": "blalala",

"Information": [

{

"ID": 0,

"Number": 2,

"BFrame": [ 0, 0, 48, 0, 47,longArray],

"Probe Response Frame": [ 0, 0, 48, longArray ]

}

]

}

},

2.3 configurations: I don't have permissions to change the configurations settings (but i can ask another team). I will read about extraction_cutoff , i'm not familiar with that, but anyway we don't want to be on stress all the time that we exceed the limit.

thanks a lot,

Maayan

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for posting sample data in text form. I misaligned the linear array format from the screenshot and broke the elements. Now it doesn't look as bad as I initially thought. In fact, because Header and Payload are in the same JSON object, the algorithm is much cleaner:

| spath path={}

| eval Metadata = mvindex('{}', 0)

| eval Event = mvindex('{}', 1, -1)

| mvexpand Event

| spath input=Metadata

| spath input=EventThe main difference from an algo for nested key-value pair is just to use mvindex to populate Metadata and Event. (As opposed to letting Splunk do the job automatically.)

I understand that if you do not control config, it is difficult to experiment. (Although testing on your personal computer with one or two sample file can still be viable.) But I still cannot see how the same default sourcetype ends up breaking JSON when its format is nested key-value, but not breaking when the format is linear array. (On the other hand, 40,000 characters in ASCII would make a 40KB file; in UTF-8 and other common multi-byte character sets, it would be 80KB. There is a large discrepancy from your observed 4MB file size.)

The following is what I use to emulate the corrected linear array sample. You can play with this and compare with real data.

| makeresults

| eval _raw = "[

{ \"Metadata\":

{

\"a\": \"pc name\",

\"b\": \"network ip\",

\"c\": \"version\"

}

},

{

\"Header\": {

\"Type\": \"Event\",

\"Name\": \"event 1\",

\"Timestamp\": 133292749467707838

},

\"Payload\": {

\"Scan Start Timestamp\": 133292749443659491,

\"Scan Complete Timestamp\": 133292749467707824,

\"Scan Type\": \"balala\"

}

},

{

\"Header\": {

\"Type\": \"Event\",

\"Name\": \"event 2\",

\"Timestamp\": 133292749494033071

},

\"Payload\": {

\"Scan Start Timestamp\": 133297249443659491,

\"Scan Complete Timestamp\": 133292749467707830

}

}

]"

``` emulation of linear array ```

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In order to understand if mvexpand can operate on 747 values, I repeated a JSON subnode in your example so Events[] now contains 1,181 elements. On my 8-GB Mac, Splunk with all defaults except

[spath]

# Number of characters to read from an XML or JSON event when

# auto extracting.

extraction_cutoff = 5000I got 1,181 rows; not 13, or any number smaller than in my emulated data. I don't think this has anything to do with extraction_cutoff because that only affects auto extraction. If anything, it may cause automatically extracted Events{}.* to have fewer values than in original data. But because my algorithm does not use those flattened fields, it should have no effect. In all cases, with 1,181 elements in Events[], my file is 300KB, way larger than 5,000 in that limit. (BTW, I'm not doing myself any favor with that override because default property value is 5,000😊)

The fact that the file is < 1MB raises another question: What causes your real files to be > 4MB? Maybe there is a different limit (not truncate) that could be raised? But first, please verify whether individual event contains that full 4MB of data.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

note- regarding option 1- maybe to create custom source type to parse it? if yes, how to do that?