Re: Issue with JSON event break regex

I've been asked to ingest some JSON logs for auditing purposes but I can't get the event breaking right. I'm pretty good with regex but this one is stumping me. The regex shouldn't need to be complicated!

Here's a snippet from the log. I've truncated the Content field with "..." as it can be quite large.

    {
      "_id" : "4befb832-6d00-44d6-8001-f4445a752a6f",
      "_t" : ["AuditEvent", "RequestEvent"],
      "AppId" : null,
      "UserId" : null,
      "Timestamp" : "2016-03-02T16:09:42.354Z",
      "RequestEventType" : 0,
      "RequestEventStatus" : 0,
      "Content" : "Email.AddToQueue::xxx@xxx.com::True::<?xml version=\"1.0\"..."
    }, {
      "_id" : "98dde3f0-f87a-49f5-822a-35862cc9ebfe",
      "_t" : ["AuditEvent", "CoopImportExportEvent"],
      "AppId" : "14f1d3b7-2bae-488c-8004-818adf991204",
      "Timestamp" : "2016-03-02T16:13:05.999Z",
      "UserAction" : 0,
      "UserActionTxt" : "DeleteAdhocLayer",
      "Notes" : "Adhoc layer: Import Regression Test - deleted ",
      "UserId" : "00000000-0000-0000-0000-000000000000",
      "UserName" : "xxx@xxx.com"
    }

I started with the simplest match that should achieve what I need, i.e. BREAK_ONLY_BEFORE=\}, and also tried it without the escaping backslash, as I believe it shouldn't be needed in PCRE.

I then tried extending the regex pattern by adding a comma, space, etc., followed by \"_id\" and other variations. I've been messing around with MUST_BREAK_AFTER and BREAK_ONLY_BEFORE but I can't even get a partial match. Really not sure what's going on with this one.

Any ideas?

On the forwarder, you need a props.conf that sets

    INDEXED_EXTRACTIONS = JSON

Refer to http://docs.splunk.com/Documentation/Splunk/6.5.0/Admin/Propsconf

Section: Structured Data Header Extraction and configuration

And yes, the forwarder, not the indexer, is where the magic happens.

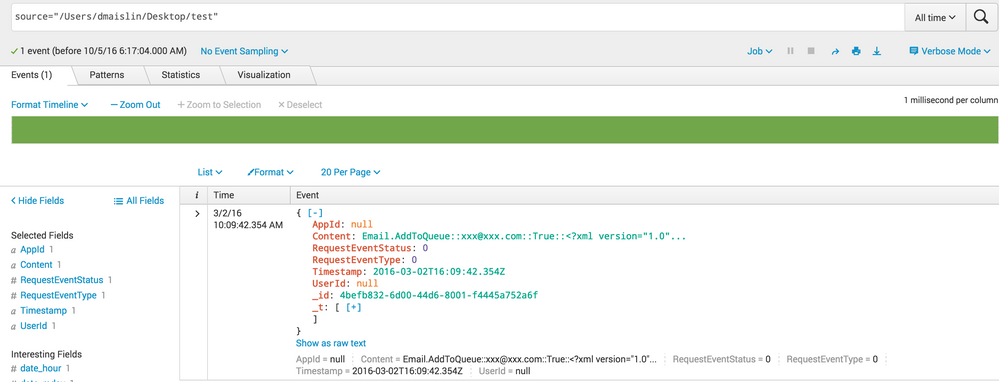

As you can see in the screenshot, all the fields are extracted, with nice structure and syntax highlighting, ready to go.

Try this in your props.conf:

    SHOULD_LINEMERGE=true
    BREAK_ONLY_BEFORE=\{
    TIME_PREFIX=Timestamp
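
To see what a pattern like this does to the sample, here is a rough Python model of line merging: each line that matches the BREAK_ONLY_BEFORE pattern starts a new event, and every other line is appended to the current one. This is only a sketch, not Splunk's implementation. `merge_lines` is a hypothetical helper, Python's `re` stands in for PCRE, the sample is abbreviated from the log in the question, and other settings that affect merging (for example BREAK_ONLY_BEFORE_DATE and MAX_EVENTS) are ignored.

```python
import re


def merge_lines(lines, break_only_before):
    """Simplified model of line merging (hypothetical helper, not Splunk code).

    A line that matches the pattern starts a new event; any other line is
    appended to the event currently being built.
    """
    pattern = re.compile(break_only_before)
    events = []
    for line in lines:
        if not events or pattern.search(line):
            events.append([line])
        else:
            events[-1].append(line)
    return events


# Abbreviated copy of the sample from the question, one entry per line.
sample = [
    '{',
    '"_id" : "4befb832-6d00-44d6-8001-f4445a752a6f",',
    '"Timestamp" : "2016-03-02T16:09:42.354Z",',
    '"RequestEventType" : 0',
    '}, {',
    '"_id" : "98dde3f0-f87a-49f5-822a-35862cc9ebfe",',
    '"Timestamp" : "2016-03-02T16:13:05.999Z",',
    '"UserActionTxt" : "DeleteAdhocLayer"',
    '}',
]

events = merge_lines(sample, r"\{")
for number, event in enumerate(events, start=1):
    print(f"event {number}: first line {event[0]!r}, last line {event[-1]!r}")
```

Under this model the sample splits into two events, but the second one begins with the `}, {` line, so neither chunk is a complete JSON object on its own.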

Thanks, sundareshr. I had already tried that, and I tried again just now, but I'm still seeing "No results found." in the event summary.

I wouldn't go this route. The newer approach (INDEXED_EXTRACTIONS = JSON) is much faster, already extracts all fields automagically, and speeds up search and indexing.

Line breaking, timestamp recognition and the other important parsing steps are all done on the forwarder, which also speeds up indexing and search.

Thanks, dmaislin. I'm sure that will help once I get my sourcetype built, but how do I go about building up my sourcetype now, before deploying the inputs app with the additional props you've suggested?

Inputs is not an app, but a simple inputs.conf file that will also be on the forwarder.

inputs.conf

    [monitor:///var/log/file.json]
    sourcetype = MYJSON

props.conf

    [MYJSON]
    INDEXED_EXTRACTIONS = JSON
    TIMESTAMP_FIELDS = Timestamp

If you are new to this, just do it all as a test on a locally installed Splunk instance, for example on your laptop: go through the UI to add data, select structured data, and ensure you are monitoring the file. Splunk will create the inputs and props for ya.
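
Before deploying, it can also be worth checking outside Splunk that every record in the file parses and carries a usable Timestamp value, since TIMESTAMP_FIELDS = Timestamp relies on that field. The sketch below is one way to do that in Python, under a few assumptions: `iter_objects` is a hypothetical helper, the sample is abbreviated from the log in the question, the file is taken to be a run of comma-separated objects as in that snippet, and the `strptime` pattern is Python's syntax, not Splunk's TIME_FORMAT.

```python
import json
from datetime import datetime, timezone

# Python strptime pattern for values such as 2016-03-02T16:09:42.354Z
TIMESTAMP_PATTERN = "%Y-%m-%dT%H:%M:%S.%fZ"


def iter_objects(text):
    """Yield each JSON value from text holding comma-separated objects."""
    decoder = json.JSONDecoder()
    pos = 0
    while pos < len(text):
        # raw_decode does not skip leading whitespace or separators itself.
        if text[pos] in " \t\r\n,":
            pos += 1
            continue
        obj, pos = decoder.raw_decode(text, pos)
        yield obj


# Abbreviated copy of the sample from the question.
sample = """
{
  "_id" : "4befb832-6d00-44d6-8001-f4445a752a6f",
  "Timestamp" : "2016-03-02T16:09:42.354Z",
  "RequestEventType" : 0
}, {
  "_id" : "98dde3f0-f87a-49f5-822a-35862cc9ebfe",
  "Timestamp" : "2016-03-02T16:13:05.999Z",
  "UserActionTxt" : "DeleteAdhocLayer"
}
"""

records = list(iter_objects(sample))
stamps = [
    # The trailing Z means UTC, so attach that timezone explicitly.
    datetime.strptime(record["Timestamp"], TIMESTAMP_PATTERN).replace(tzinfo=timezone.utc)
    for record in records
]

for record, stamp in zip(records, stamps):
    print(record["_id"], stamp.isoformat())
```

A record with a missing or malformed Timestamp raises an exception here (KeyError or ValueError), which makes problem entries easy to spot before the data reaches the forwarder.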

I updated my answer above with an image showing the data loaded into my local instance to give you context.

That was very useful. Thanks for that. I'm trying it locally now. We push everything out as an app (inputs, outputs, etc.); it's easier to deal with down the line.

Please accept and upvote the answer if you think it helped.

Perfect! Worked a treat. Thanks for taking the time to elaborate as well.

Excellent!