- Find Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- How to use spath to read dynamic path

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Team I have the below Json string coming as an event in Splunk logs .

after data, the next field could be a, b, c, d

I want to read the x and y fields, How to write a single spath query like

| spath input=inputJson path="data.{*}.x"

{data : {a : { x: { } y: { }}} }

{data : {b : { x: { } y: { }}} }

{data : {c : { x: { } y: { }}} }

{data : {d : { x: { } y: { }}} }

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You need to first extract data beyond the "dynamic" key. (Depending on semantics, I suspect that there is some data design improvement your developers could make so downstream users don't have to do this goaround.)

| spath input=json_data path=data output=beyond

| eval key = json_array_to_mv(json_keys(beyond))

| eval beyond = json_extract(beyond, key) ``` assuming there is only one top key ```

| spath input=beyond path=x

| spath input=beyond path=y

The following is full emulation (I don't see the purpose of all the transposes)

| makeresults count=1

| eval json_data="{\"data\": {\"a\": {\"x\": {\"mock_x_field\": \"value_x\"}, \"y\": {\"mock_y_field\": \"value_y\"}}}}"

| append [ makeresults count=1

| eval json_data="{\"data\": {\"b\": {\"x\": {\"mock_x_field\": \"value_x\"}, \"y\": {\"mock_y_field\": \"value_y\"}}}}"

]

| append [ makeresults count=1

| eval json_data="{\"data\": {\"c\": {\"x\": {\"mock_x_field\": \"value_x\"}, \"y\": {\"mock_y_field\": \"value_y\"}}}}"

]

| append [ makeresults count=1

| eval json_data="{\"data\": {\"d\": {\"x\": {\"mock_x_field\": \"value_x\"}, \"y\": {\"mock_y_field\": \"value_y\"}}}}"

]

| spath input=json_data path=data output=beyond

| eval key = json_array_to_mv(json_keys(beyond))

| eval beyond = json_extract(beyond, key)

| spath input=beyond path=x

| spath input=beyond path=y

| table json_data x y beyond

| json_data | x | y | beyond |

| {"data": {"a": {"x": {"mock_x_field": "value_x"}, "y": {"mock_y_field": "value_y"}}}} | {"mock_x_field":"value_x"} | {"mock_y_field":"value_y"} | {"x":{"mock_x_field":"value_x"},"y":{"mock_y_field":"value_y"}} |

| {"data": {"b": {"x": {"mock_x_field": "value_x"}, "y": {"mock_y_field": "value_y"}}}} | {"mock_x_field":"value_x"} | {"mock_y_field":"value_y"} | {"x":{"mock_x_field":"value_x"},"y":{"mock_y_field":"value_y"}} |

| {"data": {"c": {"x": {"mock_x_field": "value_x"}, "y": {"mock_y_field": "value_y"}}}} | {"mock_x_field":"value_x"} | {"mock_y_field":"value_y"} | {"x":{"mock_x_field":"value_x"},"y":{"mock_y_field":"value_y"}} |

| {"data": {"d": {"x": {"mock_x_field": "value_x"}, "y": {"mock_y_field": "value_y"}}}} | {"mock_x_field":"value_x"} | {"mock_y_field":"value_y"} | {"x":{"mock_x_field":"value_x"},"y":{"mock_y_field":"value_y"}} |

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Note:

1) The spath command can be expensive, especially against large data sets

2) If all you need is to parse a string and get the values, consider regular expressions for json data also.

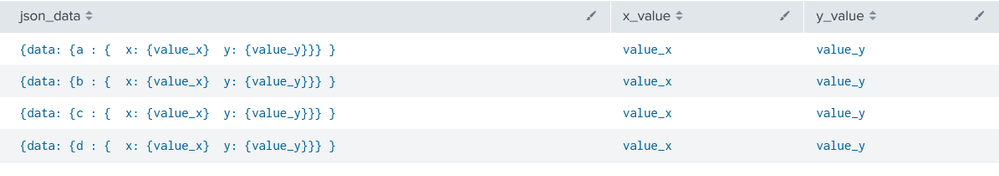

In the rex below, I named the a|b|c|d field "foo", in case it had value later on. If not, it doesn't need to be used

| makeresults ```creating dummy data based on the original question```

| eval json_data="{data: {a : { x: {value_x} y: {value_y}}} }"

| append

[ makeresults

| eval json_data="{data: {b : { x: {value_x} y: {value_y}}} }"

]

| append

[ makeresults

| eval json_data="{data: {c : { x: {value_x} y: {value_y}}} }"

]

| append

[ makeresults

| eval json_data="{data: {d : { x: {value_x} y: {value_y}}} }"

]

```ending the creation of dummy data```

| rex field=json_data "{(?<foo>\w+)\s:\s{\s\sx:\s{(?<x_value>.+)}\s\sy:\s{(?<y_value>.+)}}}" ```parse strings using a regular expression```

| table json_data x_value y_value ```display results of regular expression in a table```Results in:

If this reply helps you, an upvote would be appreciated.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

There is no syntax for this. With spath you have to either provide a precise path or not provide path at all so the whole source field (_raw by default) is parsed.

You can later do some magic based most probably on foreach and matching field name to a pattern but that's kinda ugly and not very efficient.

Anyway, it seems like a badly designed data schema because it looks as if you should rather have an array od objects instead of different objects. Conceptually - different objects are different types of entity so why would you want to treat them the same?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It depends what you want to do next - if you just want to remove the a, b, c, and d from the field names, you could just do this

| spath

| fields - _raw

| transpose 0 column_name=field

| eval field=mvindex(split(field,"."),0).".".mvindex(split(field,"."),2).".".mvindex(split(field,"."),3)

| transpose 0 header_field=field

| fields - column- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

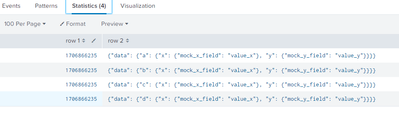

It is producting below result ,

I want to read the x and y field

| makeresults count=1

| eval json_data="{\"data\": {\"a\": {\"x\": {\"mock_x_field\": \"value_x\"}, \"y\": {\"mock_y_field\": \"value_y\"}}}}"

| append [ makeresults count=1

| eval json_data="{\"data\": {\"b\": {\"x\": {\"mock_x_field\": \"value_x\"}, \"y\": {\"mock_y_field\": \"value_y\"}}}}"

]

| append [ makeresults count=1

| eval json_data="{\"data\": {\"c\": {\"x\": {\"mock_x_field\": \"value_x\"}, \"y\": {\"mock_y_field\": \"value_y\"}}}}"

]

| append [ makeresults count=1

| eval json_data="{\"data\": {\"d\": {\"x\": {\"mock_x_field\": \"value_x\"}, \"y\": {\"mock_y_field\": \"value_y\"}}}}"

]

| spath

| fields - _raw

| transpose 0 column_name=field

| eval field=mvindex(split(field,"."),0).".".mvindex(split(field,"."),2).".".mvindex(split(field,"."),3)

| transpose 0 header_field=field

| fields - column

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You need to first extract data beyond the "dynamic" key. (Depending on semantics, I suspect that there is some data design improvement your developers could make so downstream users don't have to do this goaround.)

| spath input=json_data path=data output=beyond

| eval key = json_array_to_mv(json_keys(beyond))

| eval beyond = json_extract(beyond, key) ``` assuming there is only one top key ```

| spath input=beyond path=x

| spath input=beyond path=y

The following is full emulation (I don't see the purpose of all the transposes)

| makeresults count=1

| eval json_data="{\"data\": {\"a\": {\"x\": {\"mock_x_field\": \"value_x\"}, \"y\": {\"mock_y_field\": \"value_y\"}}}}"

| append [ makeresults count=1

| eval json_data="{\"data\": {\"b\": {\"x\": {\"mock_x_field\": \"value_x\"}, \"y\": {\"mock_y_field\": \"value_y\"}}}}"

]

| append [ makeresults count=1

| eval json_data="{\"data\": {\"c\": {\"x\": {\"mock_x_field\": \"value_x\"}, \"y\": {\"mock_y_field\": \"value_y\"}}}}"

]

| append [ makeresults count=1

| eval json_data="{\"data\": {\"d\": {\"x\": {\"mock_x_field\": \"value_x\"}, \"y\": {\"mock_y_field\": \"value_y\"}}}}"

]

| spath input=json_data path=data output=beyond

| eval key = json_array_to_mv(json_keys(beyond))

| eval beyond = json_extract(beyond, key)

| spath input=beyond path=x

| spath input=beyond path=y

| table json_data x y beyond

| json_data | x | y | beyond |

| {"data": {"a": {"x": {"mock_x_field": "value_x"}, "y": {"mock_y_field": "value_y"}}}} | {"mock_x_field":"value_x"} | {"mock_y_field":"value_y"} | {"x":{"mock_x_field":"value_x"},"y":{"mock_y_field":"value_y"}} |

| {"data": {"b": {"x": {"mock_x_field": "value_x"}, "y": {"mock_y_field": "value_y"}}}} | {"mock_x_field":"value_x"} | {"mock_y_field":"value_y"} | {"x":{"mock_x_field":"value_x"},"y":{"mock_y_field":"value_y"}} |

| {"data": {"c": {"x": {"mock_x_field": "value_x"}, "y": {"mock_y_field": "value_y"}}}} | {"mock_x_field":"value_x"} | {"mock_y_field":"value_y"} | {"x":{"mock_x_field":"value_x"},"y":{"mock_y_field":"value_y"}} |

| {"data": {"d": {"x": {"mock_x_field": "value_x"}, "y": {"mock_y_field": "value_y"}}}} | {"mock_x_field":"value_x"} | {"mock_y_field":"value_y"} | {"x":{"mock_x_field":"value_x"},"y":{"mock_y_field":"value_y"}} |

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @yuanliu this is what I was looking for great !!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

| makeresults count=1

| eval json_data="{\"data\": {\"a\": {\"x\": {\"mock_x_field\": \"value_x\"}, \"y\": {\"mock_y_field\": \"value_y\"}}}}"

| append [ makeresults count=1

| eval json_data="{\"data\": {\"b\": {\"x\": {\"mock_x_field\": \"value_x\"}, \"y\": {\"mock_y_field\": \"value_y\"}}}}"

]

| append [ makeresults count=1

| eval json_data="{\"data\": {\"c\": {\"x\": {\"mock_x_field\": \"value_x\"}, \"y\": {\"mock_y_field\": \"value_y\"}}}}"

]

| append [ makeresults count=1

| eval json_data="{\"data\": {\"d\": {\"x\": {\"mock_x_field\": \"value_x\"}, \"y\": {\"mock_y_field\": \"value_y\"}}}}"

]

| spath input=json_data

| fields - json_data

| transpose 0 column_name=field header_field=_time

| eval field=mvindex(split(field,"."),0).".".mvindex(split(field,"."),2).".".mvindex(split(field,"."),3)

| transpose 0 header_field=field

| fields - column